…he explains to her that Sinclair, the British inventor, had a way of getting things right, but also exactly wrong. Foreseeing the market for affordable personal computers, Sinclair decided that what people would want to do with them was to learn programming. The ZX81, marketed in the United States as the Timex 1000, cost less than the equivalent of a hundred dollars, but required the user to key in programs, tapping away on that little motel keyboard-sticker. This had resulted both in the short market-life of the product and, in Voytek’s opinion, twenty years on, in the relative preponderance of skilled programmers in the United Kingdom. They had had their heads turned by these little boxes, he believes, and by the need to program them. “Like hackers in Bulgaria,” he adds, obscurely.

“But if Timex sold it in the United States,” she asks him, “why didn’t we get the programmers?”

“You have programmers, but America is different. America wanted Nintendo. Nintendo gives you no programmers…”

— William Gibson, Pattern Recognition

A couple of years ago I ventured out of the man cave to give a talk about the Amiga at a small game-development conference in Oslo. I blazed through as much of the platform’s history as I could in 45 minutes or so, emphasizing for my audience of mostly young students from a nearby university the Amiga’s status as the preeminent gaming platform in Europe for a fair number of years. They didn’t take much convincing; even this crowd, young as they were, had their share of childhood memories involving Amiga 500s and 1200s. Mostly they seemed surprised that the Amiga hadn’t ever been all that terribly popular in the United States. During the question-and-answer session, someone asked a question that stopped me short: if American kids hadn’t been playing games on their Amigas, just what the hell had they been playing on?

The answer itself wasn’t hard to arrive at: the sorts of kids who migrated from 8-bit Sinclairs, Acorns, Amstrads, and Commodores to 16-bit Amigas and Atari STs in Britain made a much more lateral move in the United States, migrating to the 8-bit Nintendo Entertainment System.

More complex and interesting are the ramifications of these trends. Because the Atari VCS console was never a major presence in Britain and the rest of Europe during its heyday, and because Nintendo arrived only very belatedly, for many years videogames played in the home there meant games played on home computers. One could say much about how having a device useful for creation as well as consumption as the favored platform of most people affected the market across Europe. The magazines were filled with stories of bedroom gamers who had become bedroom coders and finally Software Stars. Such stories make a marked contrast to an American console-gaming magazine like Nintendo Power, all about consumption without the accompanying ethos of creation.

But most importantly for our purposes today, the relative neglect of Britain in particular by the big computing powers in the United States and Japan — for many years, Commodore was the only company of either nation to make a serious effort to sell their machines into British homes — gave space for a flourishing domestic trade in homegrown machines. When Britain became the nation with the most computers per capita on the planet at mid-decade, most of the computers in question bore the logo of either Acorn or Sinclair, the two great rivals at the heart of the young British microcomputer industry.

Acorn, co-founded by Clive Sinclair’s former right-hand man Chris Curry and an Austrian academic named Hermann Hauser, was an archetypal example of an engineering-driven company. Their machines were a little more baroque, a little better built, and consequently a little more expensive than they needed to be, while their public persona was reserved and just a little condescending, much like that of the BBC that had given its official imprimatur to Acorn’s most popular machine, the BBC Micro. Despite “Uncle Clive’s” public reputation as the British Inspector Gadget, Sinclair was just the opposite; cheap and cheerful, they had the common touch. Acorns sold to the educators, to the serious hobbyists, and to the posh, while Sinclairs dominated with the masses.

Yet Acorn and Sinclair were similar in one important respect: they were both in their own ways very poorly managed companies. When the British home-computer market hit an iceberg in 1985, both were caught in untenable positions, drowning in excess inventory. Acorn — quintessentially British, based in the storied heart of Britain’s “Silicon Fen” of Cambridge — was faced with a choice between dissolution and selling themselves to the Italian typewriter manufacturer Olivetti; after some hand-wringing, they chose the latter course. Sinclair also sold out: to the new kid on the block of British computing, Amstrad, owned by a gruff Cockney with a penchant for controversy named Alan Sugar who was well on his way to becoming the British Donald Trump.

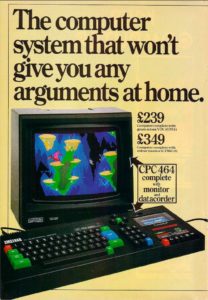

Ever mindful of the practical concerns of their largely working-class customers, Amstrad made much of the CPC’s bundled monitor in their advertising, noting that Junior could play on the CPC without tying up the family television.

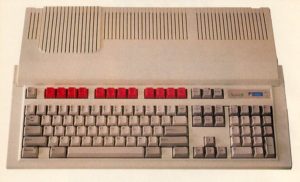

Amstrad had already been well-established as a maker of inexpensive stereo equipment and other consumer electronics when their first computers, the CPC (“Colour Personal Computer”) line, debuted in June of 1984. The CPC range was created and sold as a somewhat more capable Sinclair Spectrum. It consisted of well-built and smartly priced if technically unimaginative computers that were fine choices for gaming, boasting as they did reasonably good if hardly revolutionary graphics and sound. Like most Amstrad products, they strained to be as easy to use as possible, shipping as complete units — tape or disk drive and monitor included — at a time when virtually all of their rivals had to be assembled piece by piece via separate purchases.

The CPC line did very well from the outset, even as Acorn and Sinclair were soon watching their own sales implode. Pundits attributed the line’s success to what they called “the Amstrad Effect”: Alan Sugar’s instinct for delivering practical products at a good price at the precise instant when the technology behind them was ready for the mass market — i.e., was about to become desirable to his oft-stated target demographic of “the truck driver and his wife.” Sugar preferred to let others advance the technical state of the art, then swoop in to reap the rewards of their innovations when the time was right. The CPC line was a great example of him doing just that.

But the most dramatic and surprising iteration of the Amstrad Effect didn’t just feed the existing market for colorful game machines; it found an entirely new market segment, one that Amstrad’s competitors had completely missed until now. The story of the creation of the Amstrad PCW line is a classic tale of Alan Sugar, a man who knew almost nothing about computers but knew all he needed to about the people who bought them.

One day just a few months after the release of the first CPC machines, Sugar found himself in an airplane over Asia with Bob Watkins, one of his most trusted executives. A restless Sugar asked Watkins for a piece of paper, and proceeded to draw on it a contraption that included a computer, a monitor, a disk drive, and a printer, all in one unit. Looking at the market during the run-up to the CPC launch, Sugar had recognized that the only true mainstream uses for the current generation of computers in the home were as game machines and word processors. With the CPC, he had the former application covered. But what about the latter? All of the inexpensive machines currently on the market, like the Sinclair Spectrum, were oriented toward playing games rather than word processing, trading the possibility of displaying crisp 80-column text for colorful graphics in lower resolutions. Meanwhile all of the more expensive ones, like the BBC Micro, were created by and for hardcore techies rather than Sugar’s truck drivers. If they could apply their patented technology-for-the-masses approach to a word processor for the home and small business — making a cheap, well-built, all-in-one design emphasizing ease of use for the common person — Amstrad might just have another hit on their hands, this time in a market of their own utterly without competition. Internally, the project was named after Sugar’s secretary Joyce, since it would hopefully make her job and those of many like her much easier. It would eventually come to market as the “PCW,” or “Personal Computer Word Processor.”

The first Amstrad PCW machine, complete with bundled printer. Note how the disk drive and the computer itself are built into the same case as the monitor, a very unusual design for the period.

Even more so than the CPC, the PCW was a thoroughly underwhelming package for technophiles. It was built around the tried-and-true Z80 8-bit CPU and ran CP/M, an operating system already considered obsolete by big business, MS-DOS having become the standard in the wake of the IBM PC. The bundled word-processing software, contracted out to a company called Locomotive Software, wasn’t likely to impress power users of WordStar or WordPerfect overmuch — but it was, in keeping with the Amstrad philosophy, unusually friendly and easy to use. Sugar knew his target customers, knew that they “didn’t give a shit whether there was an elastic band or an 8086 or a 286 driving the thing. They wouldn’t know what you were talking about.”

As usual, most of Amstrad’s hardware-engineering efforts went into packaging and cost-cutting. It was decided that the printer would have to be housed separately from the system unit for technical reasons, but otherwise the finished machine conformed remarkably well to Sugar’s original vision. Best of all, it had a price of just £399. By way of comparison, Acorn’s most recent BBC Micro Model B+ had half as much memory and no disk drive, monitor, or printer included — and was priced at £499.

Nervous as ever about intimidating potential customers, Amstrad was at pains to market the PCW first and foremost as a turnkey word-processing solution for homes and small businesses, as a general-purpose computer only secondarily if at all. “It’s more than a word processor for less than most typewriters,” ran their tagline. At the launch event in the heart of the City in August of 1985, three female secretaries paraded across the stage: a snooty one who demanded one of the competition’s expensive computer systems; a tarty one who said a typewriter was more than good enough; and a smart, reasonable one who naturally preferred the PCW. Man-of-the-people Sugar crowed extravagantly that Amstrad had “brought word-processing within the reach of every small business, one-man band, home-worker, and two-finger typist in the country.” Harping on one of his favorite themes, he noted that once again Amstrad had “produced what the customer wants and not a boffin’s ego trip.”

Sugar’s aggressive manner may have grated with many buttoned-down trade journalists, but few could deny that he might just open up a whole new market for computers with the PCW. Electrical Retailer and Trader was typical, calling the PCW “a grown-up computer that does something people want, packaged and sold in a way they can understand, at a price they’ll accept.” But even that note of optimism proved far too mild for the reality of the machine’s success. The PCW exploded out of the gate, selling 350,000 units in the first eight months. It probably could have sold a lot more than that, but Amstrad, caught off-guard by the sales numbers despite their founder’s own bullishness on the product, couldn’t make and ship them fast enough.

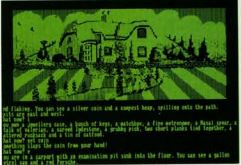

Surprisingly for such a utilitarian package, the PCW garnered considerable loyalty and even love among the millions in Britain and all across Europe who eventually bought one. Their enthusiasm was enough to sustain a big, glossy newsstand magazine dedicated to the PCW alone — an odd development indeed for this machine that seemed on the face of it to be anything but a hacker’s darling. A thriving software ecosystem that reached well beyond word processing sprang up around the machine. Despite the PCW’s monochrome display and virtually nonexistent animation and sound capabilities, even games were far from unheard of on the platform. For obvious reasons, text adventures in particular became big favorites of PCW owners; with its comfortable full-travel keyboard, its fast disk drive, its relatively cavernous 256 K of memory, and its 80-column text display, a PCW was actually a far better fit for the genre than the likes of a Sinclair Spectrum. The PCW market for text adventures was strong enough to quite possibly allow companies like Magnetic Scrolls and Level 9 to hang on a year or two longer than they might otherwise have managed.

So, Amstrad was already soaring on the strength of the CPC and especially the PCW when they shocked the nation and cemented their position as the dominant force in mainstream British computing with the acquisition of Sinclair in April of 1986. Eminently practical man of business that he was, Sugar bought Sinclair partly to eliminate a rival, but also because he realized that, home-computer slump or no, the market for a machine as popular as the Sinclair Spectrum wasn’t likely to just disappear overnight. He could pick up right where Uncle Clive had left off, selling the existing machine just as it was to new buyers who wanted access to the staggering number of cheap games available for the platform. Sugar thought he could make a hell of a lot of money this way while needing to expend very little effort.

Once again, time proved him more correct than even he had ever imagined. Driven by that huge base of games, demand for new Spectrums persisted into the 1990s. Amstrad repackaged the technology from time to time and, perhaps most importantly, dramatically improved on Sinclair’s infamously shoddy quality control. But they never seriously re-imagined the Spectrum. It was now what Sugar liked to call “a commodity product.” He compared it to suntan lotion of all things: the department stores “put it in their window in July and August and they take it away in the winter.” The Spectrum’s version of July and August was of course November and December; every Christmas sparked a new rush of sales to the parents of a new group of youngsters just coming of age and discovering the magic of videogames.

A battered and uncertain Acorn, now a subsidiary of Olivetti, faced a formidable rival indeed in Alan Sugar’s organization. In a sense, the fundamental dichotomies hadn’t changed that much since Amstrad took Sinclair’s place as the yin to Acorn’s yang. Acorn remained as technology-driven as ever, while Amstrad was all about giving the masses what they craved in the form of cheap computers that were technically just good enough. Amstrad, however, was a much more dangerous form of people’s computer company than had been their predecessor in the role. After releasing some notoriously shoddy stereo equipment under the Amstrad banner in the 1970s and paying the price in returns and reputation, Alan Sugar had learned a lesson that continued to elude Clive Sinclair: that selling well-built, reliable products, even at a price of a few more quid on the final price tag and/or a few less in the profit margin, pays off more than corner-cutting in the long run. Unlike Uncle Clive, who had bumbled and stumbled his way to huge success and just as quickly back to failure, Sugar was a seasoned businessman and a master marketer. The diffident boffins of Acorn looked destined to have a hard time against a seasoned brawler like Sugar, raised on the mean streets of the cutthroat Tottenham Court Road electronics trade. It hardly seemed a fair fight at all.

But then, in the immediate wake of their acquisition by Olivetti nothing at all boded all that well for Acorn. New hardware releases were limited to enhanced versions of the 1981-vintage, 8-bit BBC Micro line that were little more ambitious than Amstrad’s re-packagings of the Spectrum. It was an open secret that Acorn was putting much effort into designing a new CPU in-house to serve as the heart of their eventual next-generation machine, an unprecedented step in an industry where CPU-makers and computer-makers had always been separate entities. For many, it seemed yet one more example of Acorn’s boffinish tendencies getting the best of them, causing them to laboriously reinvent the wheel rather than do what the rest of the microcomputer world was doing: grabbing a 68000 from Motorola or an 80286 from Intel and just getting on with the 16-bit machine their customers were clamoring for. While Acorn dithered with their new chip, they continued to fall further and further behind Amstrad, who in the wake of the Sinclair acquisition had now gone from a British home-computer market share of 0 to 60 percent in less than two years. Acorn was beginning to look downright irrelevant to many Britons in the market for the sorts of affordable, practical computer systems Amstrad was happily providing them with by the bucketful.

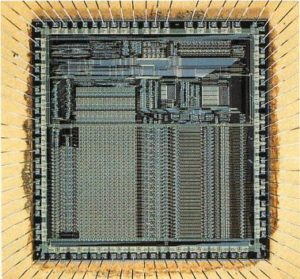

Measured in terms of public prominence, Acorn’s best days were indeed already behind them; they would never recapture those high-profile halcyon days of the early 1980s, when the BBC Micro had first been anointed as the British establishment’s officially designated choice for those looking to get in on the ground floor of the computer revolution. Yet the new CPU they were now in the midst of creating, far from being a pointless boondoggle, would ultimately have a far greater impact than anything they’d done before — and not just in Britain but over the entire world. For the CPU architecture Acorn was creating in those uncertain mid-1980s was the one that has gone on to become the most popular ever: the ubiquitous ARM. Since retrofitted into “Advanced RISC Machine,” “ARM” originally stood for “Acorn RISC Machine.” Needless to say, no one at Acorn had any idea of the monster they were creating. How could they?

“RISC” stands for “Reduced Instruction Set Computer.” The idea didn’t originate with Acorn, but had already been kicking around American university and corporate engineering departments for some time. (As Hermann Hauser later wryly noted, “Normally British people invent something, and the exploitation is in America. But this is a counterexample.”) Still, the philosophy behind ARM was adhered to by only a strident minority before Acorn first picked it up in 1983.

The overwhelming trend in commercial microprocessor design up to that point had been for chips to offer ever larger and more complex instruction sets. By making “opcodes” — single instructions issued directly to the CPU — capable of doing more in a single step, machine-level code could be made more comprehensible for programmers and the programs themselves more compact. RISC advocates came to call this traditional approach to CPU architecture “CISC,” or “Complex Instruction Set Computing.” They believed that CISC was becoming increasingly counterproductive with each new generation of microprocessors. Seeing how the price and size of memory chips continued to drop significantly almost every year, they judged — in the long term, correctly — that memory usage would become much less important than raw speed in future computers. They therefore also judged that it would be more than acceptable in the future to trade smaller programs for faster ones. And they judged that they could accomplish exactly that trade-off by traveling directly against the prevailing winds in CPU design — by making a CPU that offered a radically reduced instruction set of extremely simple opcodes that were each ruthlessly optimized to execute very, very quickly.

A program written for a RISC processor might need to execute far more opcodes than the same program written for a CISC processor, but those opcodes would execute so quickly that the end result would still be a dramatic increase in throughput. Yes, it would use more memory, and, yes, it would be harder to read as machine code — but already fewer and fewer people were programming computers at such a low level anyway. The trend, which they judged likely only to accelerate, was toward high-level languages that abstracted away the details of processor design. In this prediction again, time would prove the RISC advocates correct. Programs might not even need to be as much larger as one might think; RISC advocates argued, with some evidence to back up their claims, that few programs really took full advantage of the more esoteric opcodes of the CISC chips, that the CISC chips were in effect being programmed as if they were RISC chips much of the time anyway. In short, then, a definite and not insubstantial minority of academic and corporate researchers were beginning to believe that the time was ripe to replace CISC with RISC.
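The bet the RISC advocates were making can be put in rough numbers using the standard relation between a program's running time, the number of opcodes it executes, the average cycles each opcode takes, and the clock rate. The Python sketch below is only an illustration: the function name `execution_time_us` is my own, and the instruction counts and cycles-per-instruction figures are invented for the sake of the example rather than taken from any real chip.

```python
def execution_time_us(instruction_count, cycles_per_instruction, clock_mhz):
    """Microseconds to run a program: instructions x cycles each / clock in MHz."""
    return instruction_count * cycles_per_instruction / clock_mhz

# Hypothetical figures for the same program on two imaginary 8 MHz chips.
# The "RISC" build executes 50% more opcodes, but each one takes far fewer cycles.
cisc_time = execution_time_us(instruction_count=1000, cycles_per_instruction=6, clock_mhz=8)
risc_time = execution_time_us(instruction_count=1500, cycles_per_instruction=2, clock_mhz=8)

print(f"CISC: {cisc_time:.1f} us, RISC: {risc_time:.1f} us")
print(f"RISC speedup: {cisc_time / risc_time:.1f}x")
```

With these assumed numbers the longer RISC program still finishes in half the time, which is the shape of the argument, if not the actual magnitude, that the RISC camp was advancing.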

And now Acorn was about to act on their belief. In typical boffinish fashion, their ARM project was begun as essentially a personal passion project by Roger Wilson [1] and Steve Furber, two key engineers behind the original BBC Micro. Hermann Hauser admits that for quite some time he gave them “no people” and “no money” to help with the work, making ARM “the only microprocessor ever to be designed by just two people.” When talks began with Olivetti in early 1985, ARM remained such a back-burner long-shot that Acorn never even bothered to tell their potential saviors about it. But as time went on the ARM chip came more and more to the fore as potentially the best thing Acorn had ever done. Having, almost perversely in the view of many, refused to produce a 16-bit replacement for the BBC Micro line for so long, Acorn now proposed to leapfrog that generation entirely; the ARM, you see, was a 32-bit chip. Early tests of the first prototype in April of 1985 showed that at 8 MHz it yielded an average throughput of about 3.5 MIPS, compared to 2.5 MIPS at 10 MHz for the 68020, the first 32-bit entry in Motorola’s popular 68000 line of CISC processors. And the ARM was much, much cheaper and simpler to produce than the 68020. It appeared that Wilson and Furber’s shoestring project had yielded a world-class microprocessor.

[1] Roger Wilson now lives as Sophie Wilson. As per my usual editorial policy on these matters, I refer to her as “he” and by her original name only to avoid historical anachronisms and to stay true to the context of the times.
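To see what those prototype figures imply, it helps to divide throughput by clock speed. The short Python sketch below does just that with the numbers quoted above; the names `chips` and `mips_per_mhz` are my own. Bear in mind that MIPS counts instructions rather than useful work, and a RISC instruction typically does less than a CISC one, so this is a rough comparison at best.

```python
# Figures quoted above: (average MIPS, clock speed in MHz).
chips = {
    "ARM prototype": (3.5, 8.0),
    "Motorola 68020": (2.5, 10.0),
}

def mips_per_mhz(mips, clock_mhz):
    """Average number of instructions completed per clock cycle."""
    return mips / clock_mhz

per_clock = {name: mips_per_mhz(*figures) for name, figures in chips.items()}
for name, value in per_clock.items():
    print(f"{name}: {value:.4f} instructions per clock")

# How much more the ARM prototype got done per tick of its (slower) clock.
ratio = per_clock["ARM prototype"] / per_clock["Motorola 68020"]
print(f"ARM advantage per clock: {ratio:.2f}x")
```

On these figures the ARM prototype completed roughly 0.44 instructions per clock against the 68020's 0.25, an advantage of about 1.75 times per cycle despite its lower clock speed.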

ARM made its public bow via a series of little-noticed blurbs that appeared in the British trade press around October of 1985, even as the stockbrokers in the City and BBC Micro owners in their homes were still trying to digest the news of Acorn’s acquisition by Olivetti. Acorn was testing a new “super-fast chip,” announced the magazine Acorn User, which had “worked the first time”: “It is designed to do a limited set of tasks very quickly, and is the result of the latest thinking in chip design.” From such small seeds are great empires sown.

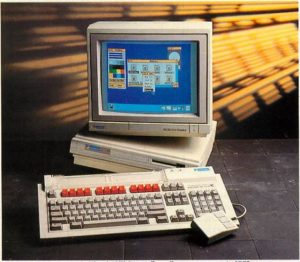

The machine that Acorn designed as a home for the new chip was called the Acorn Archimedes — or at times, because Acorn had been able to retain the official imprimatur of the BBC, the BBC Archimedes. It was on the whole a magnificent piece of kit, in a different league entirely from the competition in terms of pure performance. It was, for instance, several times faster than a 68000-based Amiga, Macintosh, or Atari ST in many benchmarks despite running at a clock speed of just 8 MHz, roughly the same as all of the aforementioned competitors. Its graphic capabilities were almost as impressive, offering 256 colors onscreen at once from a palette of 4096 at resolutions as high as 640 × 512. So, Acorn had the hardware side of the house well in hand. The problem was the software.

Graphical user interfaces being all the rage in the wake of the Apple Macintosh’s 1984 debut, Acorn judged that the Archimedes as well had to be so equipped. Deciding to go to the source of the world’s very first GUI, they opened a new office for operating-system development a long, long way from their Cambridge home: right next door to Xerox’s famed Palo Alto Research Center, in the heart of California’s Silicon Valley. But the operating-system team’s progress was slow. Communication and coordination were difficult over such a distance, and the team seemed to be infected with the same preference for abstract research over practical product development that had always marked Xerox’s own facility in Palo Alto. The new operating system, to be called ARX, lagged far behind hardware development. “It became a black hole into which we poured effort,” remembers Wilson.

At last, with the completed Archimedes hardware waiting only on some software to make it run, Acorn decided to replace ARX with something they called Arthur, a BASIC-based operating environment very similar to the old BBC BASIC with a rudimentary GUI stuck on top. “All operating-system geniuses were firmly working on ARX,” says Wilson, “so we couldn’t actually spare any of the experts to work on Arthur.” The end result did indeed look like something put together by Acorn’s B team. Parts of Arthur were actually written in interpreted BASIC, which Acorn was able to get away with thanks to the blazing speed of the Archimedes hardware. Still, running Arthur on hardware designed for a cutting-edge Unix-like operating system with preemptive multitasking and the whole lot was rather like dropping a two-speed gearbox into a Lamborghini; it got the job done, after a fashion, but felt rather against the spirit of the thing.

When the Archimedes debuted in August of 1987, its price tag of £975 and up along with all of its infelicities on the software side gave little hope to those not blinded with loyalty to Acorn that this extraordinary machine would be able to compete with Amstrad’s good-enough models. The Archimedes was yet another Acorn machine for the boffins and the posh. Most of all, though, it would be bought by educators who were looking to replace aging BBC Micros and might still be attracted by the BBC branding and the partial compatibility of the new machine with the old, thanks to software emulators and the much-loved BBC BASIC still found as the heart of Arthur.

Even as Amstrad continued to dominate the mass market, a small but loyal ecosystem sprang up around the Archimedes, enough to support a software scene strong on educational software and technical tools for programming and engineering, all a natural fit for the typical Acorn user. And, while the Archimedes was never likely to become the first choice for pure game lovers, a fair number of popular games did get ported. After all, even boffins and educators — or, perhaps more likely, their students — liked to indulge in a bit of pure fun sometimes.

In April of 1989, after almost two long, frustrating years of delays, Acorn released a revision of Arthur comprehensive enough to be given a whole new name. The new RISC OS incorporated many if not all of the original ambitions for ARX, at last providing the Archimedes with an attractive modern operating system worthy of its hardware. But by then, of course, it was far too late to capture the buzz a more complete Archimedes package might have garnered at its launch back in 1987.

Much to the frustration of many of their most loyal customers, Acorn still seemed not so much inept at marketing their wares to the common person as completely disinterested in doing so. It was as if they felt themselves somehow above it all. Perhaps they had taken a lesson from their one earlier attempt to climb down from their ivory tower and sell a computer for the masses. That attempt had taken the form of the Acorn Electron, a cut-down version of the BBC Micro released in 1983 as a direct competitor to the Sinclair Spectrum. Poor sales and overproduction of the Electron had been the biggest single contributor to Acorn’s mid-decade financial collapse and the loss of their independence to Olivetti. Having survived that trauma (after a fashion), Acorn seemed content to tinker away with technology for its own sake and to let the chips fall where they would when it came to actually selling the stuff that resulted.

If it provided any comfort to frustrated Acorn loyalists, Amstrad also began to seem more and more at sea after their triumphant first couple of years in the computer market. In September of 1986, they added a fourth line of computers to their catalog with the release of the PC — as opposed to PCW — range. The first IBM clones targeted at the British mass market, the Amstrad PC line might have played a role in its homeland similar to that of the Tandy 1000 in the United States, popularizing these heretofore business-centric machines among home users. As usual with Amstrad, the price certainly looked right for the task. The cheapest Amstrad PC model, with a generous 512 K of memory but no hard drive, cost £399; the most expensive, which included a 20 MB hard drive, £949. Before the Amstrad PC’s release, the cheapest IBM clone on the British market had retailed for £1429.

But, while not a flop, the PC range never took off quite as meteorically as some had expected. For months the line was dogged by reports of overheating brought on by the machine’s lack of a fan (shades of the Apple III fiasco) that may or may not have had a firm basis in fact. Alan Sugar himself was convinced that the reports could be traced back to skulduggery by IBM and other clone manufacturers trying to torpedo his cheaper machines. When he finally bowed to the pressure to add a fan, he did so as gracelessly as imaginable.

I’m a realistic person and we are a marketing organization, so if it’s the difference between people buying the machine or not, I’ll stick a bloody fan in it. And if they say they want bright pink spots on it, I’ll do that too. What is the use of me banging my head against a brick wall and saying, “You don’t need the damn fan, sunshine?”

But there were other problems as well, problems that were less easily fixed. Amstrad struggled to source hard disks, which had proved a far more popular option than expected, resulting in huge production backlogs on many models. And, worst of all, they found that they had finally overreached themselves by setting the prices too low to be realistically sustainable; prices began to creep upward almost immediately.

For that matter, prices were creeping upward across Amstrad’s entire range of computers. In 1986, after years of controversy over the alleged dumping of memory chips into the international market on the part of the Japanese semiconductor industry, the United States pressured Japan into signing a trade pact that would force them to throttle back their production and increase their prices. Absent the Japanese deluge, however, there simply weren’t enough memory chips being made in the world to fill an ever more voracious demand. By 1988, the situation had escalated into a full-blown crisis for volume computer manufacturers like Amstrad, who couldn’t find enough memory chips to build all the computers their customers wanted — and certainly not at the prices their customers were used to paying for them. Amstrad’s annual sales declined for the first time in a long time in 1988 after they were forced to raise prices and cut production dramatically due to the memory shortage. Desperate to secure a steady supply of chips so he could ramp up production again, Sugar bought into Micron Technology, one of only two American firms making memory chips, in October of 1988 to the tune of £45 million. But within a year the memory-chip crisis, anticipated by virtually everyone at the time of the Micron buy-in to go on for years yet, petered out when factories in other parts of Asia began to come online with new technologies to produce memory chips more cheaply and quickly than ever. Micron’s stock plummeted, another major loss for Amstrad. The buy-in hadn’t been “the greatest deal I’ve ever done,” admitted Sugar.

Many saw in the Amstrad of these final years of the 1980s an all too typical story in business: that of a company that had been born and grown wildly as a cult of personality around its founder, until one day it got too big for any one man to oversee. The founder’s vision seemed to bleed away as the middle managers and the layers of bureaucracy moved in. Seduced by the higher profit margins enjoyed by business computers, Amstrad strayed ever further from Sugar’s old target demographic. New models in the PC range crept north of £1000, even £2000 for the top-of-the-line machines, while the more truck-driver-focused PCW and CPC lines were increasingly neglected. The CPC line would be discontinued entirely in 1990, leaving only the antique Spectrum to soldier on for a couple more years for Amstrad in the role of general-purpose home computer. It seemed that Amstrad at some fundamental level didn’t really know how to go about producing a brand new machine in the spirit of the CPC in this era when making a new home computer was much more complicated than plugging together some off-the-shelf chips and hiring a few hackers to knock out a BASIC for the thing. Amstrad would continue to make computers for many years to come, but by the time the 1990s dawned their brief-lived glory days of 60 percent market share were already fading into the rosy glow of nostalgia.

For all their very real achievements over the course of a very remarkable decade in British computing, Acorn and Amstrad each had their own unique blind spot that kept them from achieving even more. In the Archimedes, Acorn had a machine that was a match for any other microcomputer in the world in any application you cared to name, from games to business to education. Yet they released it in half-baked form at too high a price, then failed to market it properly. In their various ranges, Amstrad had the most comprehensive lineup of computers of anyone in Britain during the mid- to late-1980s. Yet they lacked the corporate culture to imagine what people would want five years from now in addition to what they wanted today. The world needs visionaries and commodifiers alike. What British computing lacked in the 1980s was a company capable of integrating the two.

That lack left wide open a huge gap in the market: space for a next-generation home computer with a lot more power and much better graphics and sound than the likes of the old Sinclair Spectrum, but that still wouldn’t cost a fortune. Packaged, priced, and marketed differently, the Archimedes might have been that machine. As it was, buyers looked to foreign companies to provide. Neglected as Europe still was by the console makers of Japan, the British punters’ choice largely came down to one of two American imports, the Commodore Amiga and the Atari ST. Both — especially the former — would live very well in this gap that neither Acorn nor Amstrad deigned to fill for too long. Acorn did belatedly try with the release of the Archimedes A3000 model in mid-1989 — laid out in the all-in-one-case, disk-drive-on-the-side fashion of an Amiga 500, styled to resemble the old BBC Micro, and priced at a more reasonable if still not quite reasonable enough £745. But by that time the Archimedes’s fate as a boutique computer for the wealthy, the dedicated, and the well-connected was already decided. As the decade ended, an astute observer could already detect that the wild and woolly days of British computing as a unique culture unto itself were numbered.

And that would be that, but for one detail: the fairly earth-shattering detail of ARM. The ARM CPU’s ability to get extraordinary performance out of a relatively low clock speed had a huge unintended benefit that was barely even noticed by Acorn when they were in the process of designing it. In the world of computer engineering, higher clock speeds translate quite directly into higher power usage. Thus the ARM chip could do more with less power, a quality that, along with its cheapness and simplicity, made it the ideal choice for an emerging new breed of mobile computing devices. In 1990 Apple Computer, hard at work on a revolutionary “personal digital assistant” called the Newton, came calling on Acorn. A new spinoff was formed in November of 1990, a partnership among Acorn, Apple, and the semiconductor firm VLSI Technology, who had been fabricating Acorn’s ARM chips from the start. Called simply ARM Holdings, it was intended as a way to popularize the ARM architecture, particularly in the emerging mobile space, among end-user computer manufacturers like Apple who might be leery of buying ARM chips directly from a direct competitor like Acorn.

And popularize it has. To date about ten ARM CPUs have been made for every man, woman, and child on the planet, and the numbers look likely to continue to soar almost exponentially for many years to come. ARM CPUs are found today in more than 95 percent of all mobile phones. Throw in laptops (even laptops built around Intel processors usually boast several ARM chips as well), tablets, music players, cameras, GPS units… well, you get the picture. If it’s portable and it’s vaguely computery, chances are there’s an ARM inside. ARM, the most successful CPU architecture the world has ever known, looks likely to continue to thrive for many, many years to come, a classic example of unintended consequences and unintended benefits in engineering. Not a bad legacy for an era, is it?

(Sources: the book Sugar: The Amstrad Story by David Thomas; Acorn User of July 1985, October 1985, March 1986, September 1986, November 1986, June 1987, August 1987, September 1987, October 1988, November 1988, December 1988, February 1989, June 1989, and December 1989; Byte of November 1984; 8000 Plus of October 1986; Amstrad Action of November 1985; interviews with Hermann Hauser, Sophie Wilson, and Steve Furber at the Computer History Museum.)

Footnotes

[1] Roger Wilson now lives as Sophie Wilson. As per my usual editorial policy on these matters, I refer to her as “he” and by her original name only to avoid historical anachronisms and to stay true to the context of the times.

whomever

June 23, 2016 at 6:15 pm

“Truck driver”…Lorry Driver, surely?

Jimmy Maher

June 24, 2016 at 7:58 am

In the biography of Sugar by David Thomas, he’s quoted many times as saying, “truck driver.” I admit it never occurred to me until now to see it as an odd usage, being American myself. I have to assume that either some British dialects do favor “truck” over “lorry,” or for some (perhaps obscurely rhetorical) reason Sugar himself just favored the Americanism.

Michael Davis

June 23, 2016 at 7:53 pm

“Amstrad was already been well-established”

“had already been” or “was already”, probs :)

Jimmy Maher

June 24, 2016 at 8:00 am

Thanks!

John Elliot

June 23, 2016 at 7:53 pm

In the light of your opening paragraphs, it’s perhaps noteworthy that the PCW, marketed entirely as a word processor, was nevertheless supplied with a BASIC interpreter (and, for that matter, a LOGO interpreter).

Internally, the PCW design was based on ANT [Arnold Number Two], which would have been another computer for the CPC niche. The PCW hardware has some implemented-but-unused features from the ANT, such as joystick support in the keyboard controller, and a CPC-compatible memory paging mode.

Roger

June 23, 2016 at 8:30 pm

The amusing thing is that your “standard” Intel PC is stuffed full of ARM chips too (and some MIPS) – far more than the solitary Intel x86 chip. For example they are in storage (spinning and flash media need something internal for processing), networking interfaces, USB, and various other controllers and peripherals. Consensus is around 10 of those non-x86 chips per “Intel” computer!

Jimmy Maher

June 24, 2016 at 8:01 am

Interesting. I actually didn’t realize that. Made a little edit to the ending. Thanks!

Pierre

June 23, 2016 at 11:25 pm

The ARM origin story is one of my favourites. Thanks for telling it again. It never gets old.

Keith Palmer

June 24, 2016 at 12:37 am

I was generally familiar with “RISC vs. CISC” from a Macworld article or two from around the time of the PowerPC processor’s introduction, but not so much with the beginning of ARM and its own connections to that; that provided a good bit of interest to this piece. I seem to recall a comment or two in works by Graham Nelson about the Acorn Archimedes, though…

Jimmy Maher

June 24, 2016 at 8:08 am

Yes, all of his hugely important early work reverse-engineering the Z-Machine, developing the Inform language, and writing Curses! was done on an Archimedes running RISC OS. An Oxford academic, Nelson was right in the usual Acorn demographic. ;)

skg

June 24, 2016 at 1:05 am

Great article, fascinating as always.

Although you probably did not have enough space for it, I would have loved to have seen an expansion of this passage:

“Many saw in the Amstrad of these final years of the 1980s an all too typical story in business: that of a company that had been born and grown wildly as a cult of personality around its founder, until one day it got too big for any one man to oversee.”

Do you see it this way? Or are you summarizing a consensus?

I’m curious, because Sugar seems like an interesting parallel to Jack Tramiel, in that both were businessmen first, and therefore computer magnates primarily for business reasons.

For this reason, it seems just as likely that the problem was Sugar’s vision itself (or the lack thereof): if the company was too dominated by a strong personality, then any trend he could not foresee, the company as a whole could not foresee.

Since Sugar’s genius was understanding a demographic previous computer manufacturers had ignored, not from any understanding of computers themselves, an inability to adjust to the fact that “making a new home computer was much more complicated than plugging together some off-the-shelf chips and hiring a few hackers to knock out a BASIC for the thing” seems like the kind of flaw that can be squarely placed in Sugar’s lap.

Jimmy Maher

June 24, 2016 at 8:32 am

The consensus I refer to is that of several pundits mentioned in David Thomas’s excellent book Sugar, which I relied on heavily for the Amstrad side of this article. I actually thought of the parallels with the Jack Tramiel-led Commodore myself, and thought about introducing them into the article, but judged that would be roaming a little too far afield.

It is true that, judging again from Thomas’s book, Sugar began to step back a bit from the day-to-day business of running Amstrad in the latter 1980s, and this took its toll on the company. On the other hand, he seems to have been pretty much exhausted with building such a huge business from nothing over the course of almost two decades, and had doubtless earned a little more free time. I tend to see what happened to Amstrad more as simply in the nature of the sort of beast the company was — a cult of personality around its founder, built to exploit existing technology but not to develop visionary new technology — than as a reason to point fingers too enthusiastically. But yes, I think the consensus I mentioned of what went “wrong” with Amstrad is largely correct.

Nick

April 18, 2023 at 7:10 pm

I suppose Sugar’s takeover of Tottenham Hotspur in 1991 and the sometimes fractious decade until he sold up would have diverted a lot of time and energy from the computer business. Amstrad were also very much moving into kit to support the burgeoning satellite tv industry.

Pedro Timóteo

June 24, 2016 at 10:02 am

It had one advantage: that’s how the PCW (and the CPC, whose disk versions could also run CP/M) got many Infocom adventures; Infocom simply copied their CP/M Z-Machine interpreter and data files to 3 inch disks, and instantly had PCW / CPC versions of their games.

I don’t know how well they sold, though.

Jimmy Maher

June 24, 2016 at 10:20 am

While we have the North American sales for Infocom, we unfortunately lack the European numbers. They’re given a fairly prominent place in many issues of 8000 Plus, however, which makes me think they tended to do pretty well on the PCW. Their biggest drawback was always their high price in comparison to homegrown games.

Your anecdote raises another interesting question: is it possible to run the CP/M versions of older Infocom games on the Commodore 128 in CP/M mode? It could actually be a more pleasant experience than playing in 64 mode — 80 columns, more memory for buffering, etc. Anyone know?

David Kinder

June 24, 2016 at 9:01 pm

At least AMFV, Beyond Zork, Bureaucracy and Trinity were released for the C128’s CP/M 80 column mode – you can play them with the x128 interpreter in VICE.

Jimmy Maher

June 25, 2016 at 7:05 am

Those actually run in the 128’s native mode, not under CP/M. I was curious whether you could buy the CP/M version of, say, the original Zork, and run it on a 128 in CP/M mode. I’m guessing the biggest stumbling block might be the disk format. I seem to recall that the 1571 couldn’t read some or many CP/M disks, but could be mistaken.

Pedro Timóteo

June 25, 2016 at 9:59 am

I’ve thought of that, too. Theoretically, it should be possible; it should be a question of getting the CP/M interpreter and data file in a disk (image?) that the C128 (emulator?) can access.

I’ll try to do something like this in the next few days. The first problem may be where to find the CP/M interpreters, which I think aren’t that common out there. Maybe from Amstrad .DSK images? I’ll let you know how it goes.

Jimmy Maher

June 25, 2016 at 10:12 am

The original Zork trilogy for CP/M is here: http://www.retroarchive.org/cpm/games/games.htm.

Pedro Timóteo

June 26, 2016 at 1:54 pm

I’ve had success with Zork I from that link… though I had to work around some VICE bugs (I couldn’t even format a CP/M disk using an emulated 1571, I had to change the drive to a 1541 for it to work). It worked fine, though Zork I (in its Solid Gold re-release) happens to be one of the games that actually runs in native C128 mode.

Next I’ll try to extract the Amstrad CPC version of a game that didn’t run in C128 mode…

Jimmy Maher

June 27, 2016 at 7:17 am

Interesting. Thanks for taking the time to do this. I’m surprised no one back in the day ever seems to have caught on to this, especially as 128 owners were always looking for ways to play games without just going to 64 mode all the time. Maybe because by the time the 128 debuted the CP/M versions were getting hard to find?

It should be possible to play lots of other games using the Zork III interpreter. Just rename the new story files to match the old, and you’re off.

Pedro Timóteo

June 27, 2016 at 11:08 am

OK, here’s a zip with a CP/M boot disk for the C128, one with “extra” utilities for C128 CP/M (including rdcbm, which I used to copy files from a C64 .D64 image to a CP/M .D64 image; I found no way to do it directly; I also had to use a D64 editor to first copy files from my Windows PC to the C64 disk image), Zork 1 from the link you provided, and Enchanter extracted from the Amstrad CPC version.

The latter works, but instead of running enchante.com as I would in a CPC (in CP/M mode), I have to run ench64.com or ench128.com, both of which were also included in the CPC disk. Also, it seems to lose the ability to position text on the screen, which means the status bar’s text is repeated after each command entry. Maybe there was some CP/M code in there specific to the Amstrad?

I haven’t tried your suggestion of using the Zork 3 interpreter and renaming files; I’ll try doing so later.

Pedro Timóteo

June 27, 2016 at 5:32 pm

For extra fun: the ZX Spectrum +3 can also run CP/M (although it wasn’t included with it; it required an additional purchase, instead of being in ROM and accessed by typing |cpm (that’s a pipe), as in an Amstrad CPC 6128), and it can read Amstrad disks (or disk images in a Spectrum emulator) directly.

Just tried it with the CPC version of Planetfall, and it works! Like Enchanter, it had several executables in the disk: planet.com, plan64.com, plan128.com, and plan256.com (I hadn’t copied the latter to a C128 CP/M disk, as the disk ran out of space). Much like on the C128, the “normal” executable (planet.com) just returns you to the CP/M prompt, but the others work. Each “size” seems to be more fully featured (in terms of screen positioning) than the one before; plan64.com can’t do a status bar, 128 does so but seems to cut some text, and plan256.com seems fully featured, even on the Spectrum’s 40×24 screen under CP/M.

I wonder why there are these several executables… I assume they correspond to memory sizes, though no CPC had 256KB. Maybe it was for the PCW? And planet.com would just detect the available system and run the appropriate executable on an Amstrad machine, but on a Spectrum or C128 it does nothing?

Anyway, I’ll have to try copying just the “256” executable to a C128 disk (plus the data file), to see if the screen will work fine.

Pedro Timóteo

June 27, 2016 at 6:50 pm

I was probably wrong about the CPC having CP/M in ROM. :)

whomever

June 27, 2016 at 7:00 pm

Interesting thread. I wonder if you could also run the CP/M version on an Apple ][ with the Microsoft Z80 softcard? I actually did my AP CS (which was Pascal back in the day) on Turbo Pascal running on Apple ][es with Softcards, infinitely better than Apple Pascal.

Pedro Timóteo

June 27, 2016 at 7:32 pm

Just checked: I loaded CP/M 3 on a CPC emulator, then tried the three versions (plan64.com, 128 and 256) of Planetfall on the CPC version’s disk. (*)

plan256 seems to believe the screen has more than 80 columns (it correctly wraps around to the next line when it needs to, but then writes a couple more words and unnecessarily begins a new line). This is apparently for the PCW’s 90 column screen.

plan128 works best on the CPC.

plan64, like I’d seen on the Spectrum, is more basic; it doesn’t show a status bar, and repeats the text in it after you enter each command. Maybe it was for the short-lived CPC 664, or for a 464 with an external drive?

I can also confirm that running planet.com on a CPC starts up the plan128 version. On a Spectrum +3 or C128, it just returns to the CP/M prompt.

(*) note that you don’t actually need to boot the CP/M disk to run Infocom games, you just insert the game disk and type “|cpm”. That’s why I thought it was in the ROM. :)

Jimmy Maher

June 28, 2016 at 8:33 am

Cool! Very interesting. Again, if there’s a separate story file it should be possible to play just about any pre-1987, non-Interactive Fiction Plus Infocom game by renaming files. This was commonly done by owners of several orphaned platforms for which Infocom dropped official support.

Andrew Paul

July 10, 2016 at 8:02 pm

It’s worth noting that Acorn offered a CP/M solution too, in the shape of their Z80 second processor. http://classic.technology/wp-content/uploads/2014/12/acornz80processor.pdf

Kroc Camen

June 25, 2016 at 12:34 am

The PCW had a 90-column display, not 80; it was a requirement that it could display 80 cols + margins. :)

I strongly recommend Lord Sugar’s book “What you see is what you get” for lots of background info on the ideas behind the CPC/PCW et al, I’m honestly surprised you hadn’t used it as a source!

Jimmy Maher

June 25, 2016 at 7:16 am

Yes, I was aware that it was technically 90 columns, but judged it one of those details likely to distract more than enlighten in an overview like this one.

I tend to be leery of glossy lessons-of-my-business-success autobiographies, especially from accomplished self-promoters like Sugar. But the reviews on Amazon would seem to indicate that I may have underestimated this one.

Carl

June 25, 2016 at 12:39 am

Since I’m a professional chip designer I was a bit worried when you started discussing CISC vs. RISC and the like but I shouldn’t have been… you have a very cogent and accurate description and account of the different philosophies of processor design.

One interesting trivia tidbit:

While Intel processors still provide a CISC instruction set, they are RISC internally and instructions are translated on the fly into an internal instruction set that is more efficient. So RISC really did end up winning the day, even if most RISC chips (and ARM is included here) include some relatively complex instructions that would have been considered heresy back in the RISC crusade of the early 80s.

And for the CPU nerds reading, probably the pinnacle of the CISC approach was the VAX instruction set… if you look through it there are some ludicrously complex instructions (that almost never got used in practice, hence the migration to RISC).

Jimmy Maher

June 25, 2016 at 7:16 am

Always great to be validated by an expert. :) Thanks!

Nate

June 26, 2016 at 2:10 am

With Intel instructions like AES-NI, the pendulum is swinging back to CISC or even VLIW like Cray.

Martin

October 9, 2016 at 4:22 am

The IBM mainframe CPUs have always been CISC, and the latest instructions added are to aid in the running of Java on the mainframe. Will anyone use these new instructions in their programs? Probably not, but the point is that IBM will use these instructions in their Java virtual machine code, giving them faster Java execution. I guess what I am saying is that these days, CISC instructions seem to be expanding to deal with specific problems rather than as complex instructions for more general usage.

Alexander Freeman

June 25, 2016 at 3:49 am

I guess I’m a bit puzzled. I understand that RISC has simpler instructions, yet Wikipedia states:

In the C programming language, the subtraction-based Euclidean algorithm is:

while (i != j) // We enter the loop when i<j or i>j, not when i==j

{

if (i > j) // When i>j we do that

i -= j;

else // When i<j we do that

j -= i;

}

In ARM assembly language, this can be coded as:

loop: CMP Ri, Rj ; set condition “NE” if (i != j),

; “GT” if (i > j),

; or “LT” if (i < j)

SUBGT Ri, Ri, Rj ; if "GT" (Greater Than), i = i-j;

SUBLT Rj, Rj, Ri ; if "LT" (Less Than), j = j-i;

BNE loop ; if "NE" (Not Equal), then loop

However, in IBM PC assembly language, a CISC language, it would be something like this:

MOV AX,i

MOV CX,j

LOOP:

CMP AX,CX

JE FINISH

JL SECOND

SUB AX,CX

SECOND:

SUB CX,AX

JMP LOOP

FINISH:

The latter seems to consist of simpler instructions and more of them even if you overlook the two preliminary MOV instructions.

Jimmy Maher

June 25, 2016 at 7:37 am

The instructions being written there in ARM assembler don’t really correspond to individual opcodes. Almost any ARM opcode can be executed conditionally, based on the currently set flags. So what’s written there for the convenience of the programmer as “SUBGT,” for instance, is really just a “SUB” with a condition-code selector set to cause it to only execute if the last comparison yielded a “greater than” result. This is one of the ways that ARM is able to reduce the instruction set so radically — by making almost *all* opcodes responsive to the same set of conditional flags.

But you are correct that in this case and doubtless some others ARM will actually yield a shorter program than a CISC processor.
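For anyone who wants to poke at the idea, here is a minimal sketch in Python of how that ARM loop behaves: one comparison sets the flags, and each following instruction checks its own condition against them. The function names are made up for illustration, and this is a toy model under those assumptions rather than an emulator.

```python
# Toy model of the CMP / SUBGT / SUBLT / BNE loop discussed above.
# cmp_flags and gcd_predicated are hypothetical names, not real ARM tooling.

def cmp_flags(a, b):
    """Model CMP: record which conditions hold for a compared with b."""
    return {"GT": a > b, "LT": a < b, "NE": a != b}


def gcd_predicated(i, j):
    """Subtraction-based Euclid using one compare and three predicated steps."""
    if i <= 0 or j <= 0:
        raise ValueError("both inputs must be positive")
    while True:
        flags = cmp_flags(i, j)   # CMP   Ri, Rj
        if flags["GT"]:           # SUBGT Ri, Ri, Rj
            i -= j
        if flags["LT"]:           # SUBLT Rj, Rj, Ri (still sees the CMP flags)
            j -= i
        if not flags["NE"]:       # BNE   loop (falls through when equal)
            return i


print(gcd_predicated(48, 18))
```

Note that the flags are captured once per pass, before either subtraction runs. That mirrors the assembly, where a plain SUB does not disturb the flags set by CMP.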

Alexander Freeman

June 25, 2016 at 5:19 pm

True, but a conditional subtraction statement is more complex than an unconditional one, and my point is that that seems to go against the idea of RISC instructions being simpler. I’ve decided to look this up, and page 6 of this explains it in more detail:

http://bear.ces.cwru.edu/eecs_382/ARM7-TDMI-manual-pt2.pdf

The first four bits of something such as a subtraction statement are used to determine the condition if there is one. The last four bits determine the operand.
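As a rough illustration of that layout, the sketch below pulls a few fields out of a classic 32-bit ARM data-processing instruction word. The two example words were hand-assembled for this sketch (they are assumptions, not taken from the manual linked above), the helper name is hypothetical, and only the register form of the second operand is handled.

```python
# Condition field values for the classic 32-bit ARM encoding (bits 31-28).
CONDITIONS = {
    0b0000: "EQ", 0b0001: "NE", 0b1010: "GE", 0b1011: "LT",
    0b1100: "GT", 0b1101: "LE", 0b1110: "AL",
}


def decode_fields(word):
    """Split a data-processing instruction word into a few of its fields."""
    return {
        "cond": CONDITIONS.get((word >> 28) & 0xF, "other"),
        "opcode": (word >> 21) & 0xF,  # 0b0010 means SUB
        "rn": (word >> 16) & 0xF,      # first operand register
        "rd": (word >> 12) & 0xF,      # destination register
        "rm": word & 0xF,              # second operand register (register form)
    }


# Hand-assembled examples (assumed): SUBGT R0, R0, R1 and plain SUB R0, R0, R1.
SUBGT_R0_R0_R1 = 0xC0400001
SUB_R0_R0_R1 = 0xE0400001

print(decode_fields(SUBGT_R0_R0_R1))
print(decode_fields(SUB_R0_R0_R1))
```

The two words differ only in their top four bits, which is the point made above: "SUBGT" and "SUB" are the same operation with a different condition selector, the unconditional form simply carrying the "always" condition.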

Well, anyway, I’d like to say how much I enjoy reading your blog. You know the topics so well and make them easy to read about. If I ever get to live my dream somehow of designing a museum for computer science and games, I’d be sure to reach out to you. It’s also nice to be able to discuss things like meta-ethics and the existential implications of evolution. I think you’re the only person I’ve come across so far who can talk about those AND obscure computer topics like RISC vs. CISC. I’ve decided to donate to this blog.

Oh, I’ve just noticed I forgot a JMP LOOP instruction after SUB AX,CX in my IBM code snippet. ^_^()

Jimmy Maher

June 26, 2016 at 8:16 am

Thank you!

Carl

June 27, 2016 at 5:27 pm

I should say there that ARM isn’t strictly RISC and x86 ISA isn’t strictly CISC… RISC and CISC have heavily influenced and cross-pollinated each other, as any vibrant technology should. I can tell you from experience that CISC/RISC discussions quickly descend to No True Scotsman type arguments and are best considered “design philosophies” as much as anything.

Nate makes an interesting point regarding VLIW (or Very Long Instruction Word… a technology in which the compiler is heavily leaned on to do the optimizations). Clock speed increases enabled RISC, and now that we’ve hit the Power Wall CISC is interesting again…

Also interesting that Nate would mention Cray. While he made no claim to its invention, the CDC 6600, designed primarily by Seymour Cray, had a stripped-down ISA that was definitely informed by a “proto-RISC philosophy”.

Interesting stuff!

Jimmy Maher

June 28, 2016 at 8:24 am

I did come upon the 1970s Crays as examples of a RISC architecture in my research. I even mentioned it in the article for a while, but I wound up cutting it as a needless distraction (the most important aspect of making articles like this one readable is often what you leave out). I’m not sure that anyone at Acorn was aware that RISC had effectively already been implemented in a commercial project at Cray. They’ve always pointed back to the Berkeley research, which is discussed in some detail in the November 1984 Byte, as their wellspring of ideas.

Mike Spooner

July 11, 2016 at 12:32 pm

It has often been observed that the IBM 801 CPU (1975) was an early RISC processor, with anecdotal evidence that it was so for similar reasons to those mentioned in the article; and that Patterson’s Berkeley team were well aware of it and what it could do.

Jimmy Maher

July 11, 2016 at 1:57 pm

Yes, along with the Cray Supercomputer the IBM 801 is the most likely candidate for the title of RISC “prior art” to ARM. Both predate the acronym, however, and neither was a mass-produced consumer-grade item like ARM.

Alexander Freeman

June 25, 2016 at 3:51 am

Eh, something went wrong with the formatting. You can look here for what I mean:

https://en.wikipedia.org/wiki/ARM_architecture#Conditional_execution

Paul

June 25, 2016 at 5:41 am

s/boost/boast/

It might be worth adding something of the engineering mindset/methodology that was used during ARM development?

They used the old Victorian methodology of adding in an order of magnitude as a rule of thumb. So if you’re aiming for a bridge to withstand 10 tonnes, build for 100 tonnes to be safe.

I’ve heard Sophie talk about this as a key part of their thinking, and how, in the end, it was part of the reason the chip delivered so much for so little.

Not sure if you also want to retread the fact that they initially went shopping for 68k/x86 but weren’t happy with their memory access times or throughput per instruction. After visiting WDC, with the legendary Bill Mensch, they realised they could actually do what they wanted themselves from scratch.

Jimmy Maher

June 25, 2016 at 7:43 am

Interesting stuff all of it, but I think a little too far down in the weeds for what’s intended to be a very readable high-level overview.

Kroc Camen

June 25, 2016 at 8:12 am

Interesting anecdote — the screenshot on the CPC ad is of Bugaboo the flea: https://en.wikipedia.org/wiki/Bugaboo_%28The_Flea%29 I had it on C64, but had no idea it had also been released on CPC

J Griffin

June 28, 2016 at 5:27 am

As mentioned on that wiki article, it was best known to CPC owners as Roland In The Caves, given the typically mystifying Amsoft rebranding where “Roland” could be anything from a bug to an intrepid explorer (who looked a lot like the dude from “Fred”).

It might actually be better known to Amstrad players than on any other format, as it was often part of the bundled software that came with the system.

ZUrlocker

June 26, 2016 at 7:06 pm

I forget if this has been mentioned previously on this blog, but the BBC did a fun (if somewhat fictionalized) drama-documentary on the rivalry between Sinclair and the BBC Micro team called Micro Men

https://en.wikipedia.org/wiki/Micro_Men

https://www.youtube.com/watch?v=XXBxV6-zamM

Pedro Timóteo

June 28, 2016 at 8:06 pm

Just follow the very first link in this post. :)

ZUrlocker

June 30, 2016 at 12:23 pm

Doh

Ibrahim Gucukoglu

June 29, 2016 at 7:42 pm

I was going to suggest to you that you watch the Micro Men documentary. OK, it’s a drama, but it is lovingly produced and tells the story of the lively and ill-fated British computer industry of the early eighties and the dynamic friendship and rivalry between Clive and Chris. Another highly readable and enjoyable look back is Tom Lean’s book Electronic Dreams, available in both audio and eBook formats, which charts the history of the British computing industry. As always, a great article; keep up the great work!

UNIX admin

July 9, 2016 at 1:58 pm

Technical correction which nags at me like a splinter in my eye:

Motorola’s first 32-bit processor in the 68000 family is the 68000, and not the 68020. While the 68000 had a 24-bit address bus multiplexer, the central processing unit is fully 32-bit internally. For example, this 32-bit code is perfectly valid and will move 32-bit values into processor registers on a vanilla 68000:

move.l #$deadbeef, d0

move.l #$d00fd00d, a0

The 68020 was the first in the family to have a 32-bit address bus.
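To make the distinction concrete, here is a small Python sketch of what happens when a full 32-bit register value is used as an address. It assumes the usual description of the original 68000 as exposing a 24-bit address space, with the upper eight bits of the register simply not reaching the bus. The constant and function names are invented for illustration.

```python
ADDRESS_MASK_68000 = 0x00FFFFFF  # 24-bit address space (assumed model of the 68000)
ADDRESS_MASK_68020 = 0xFFFFFFFF  # full 32-bit address bus


def bus_address(register_value, mask):
    """Return the address that actually appears on the bus for a register value."""
    return register_value & mask


a0 = 0xD00FD00D  # the value loaded into a0 in the snippet above

print(hex(bus_address(a0, ADDRESS_MASK_68000)))
print(hex(bus_address(a0, ADDRESS_MASK_68020)))
```

The register holds all 32 bits in both cases. Only the view of memory differs, which is why the same chip could reasonably be called 32-bit by programmers and something less by everyone else.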

Jimmy Maher

July 9, 2016 at 2:16 pm

I do understand what you’re saying, but in common usage that just wasn’t how the 68000 was regarded. The Amiga, Atari ST, and Mac, all of which used the 68000 in their most common 1980s variants, were always considered 16-bit machines. For instance, a British magazine that focused on the ST and Amiga, The One, trumpeted on its cover that it was “for 16-bit games.” In a more technical article, sure, your point would be worth elaborating at length. In an article designed to be readable and accessible for the layperson, as this one was intended to be, it’s worthy of at best a footnote… which you’ve just helpfully provided. ;) Please understand that I don’t mean to be dismissive. It’s just that readable writing is often defined more by what you leave out than what you put in.

Iffy Bonzoolie

May 20, 2020 at 11:52 pm

If anything, it suggests that using the number of bits, while popular, is an ultimately misleading taxonomy. For example, the Intel 8088 in the original IBM PC has 20-bit addressing, but 16-bit registers, an 8-bit data bus, and CGA is 2 bits per pixel. So what do we call it? TEST to match Atari ST?

It hasn’t gotten easier since then, either. 64-bit data primitives can really inflate program size, and affect performance in emergent ways, like cache locality. So, even for 64-bit instruction sets like AMD’s x86_64, most things in practice still operate on 32-bit operands, with pointers generally being the exception.

Ed Dorosh

July 9, 2016 at 5:23 pm

I lived in Iceland from 1980 until the summer of 1986. There I learned about and bought a Beeb. It took me to levels of programming that for me was a lot of fun, especially the 6502 coding. At that time I had very little sense of where computing was going, it was just fun and I suspect for most that’s what it was. Thank you for the well put together article giving a reasonable history of that era and of the one I was distanced from due to moving back to Canada.

xxx

July 11, 2016 at 3:40 am

Typo: “boost several ARM chips”.

Jimmy Maher

July 11, 2016 at 7:26 am

Thanks!

Jonathan Graham

September 18, 2016 at 7:26 pm

I’ll point out that RISC assembly code is usually easier to read when it comes to looking at hex dumps. RISC ISAs are generally fixed width, making finding the next instruction easier. Otherwise you have to be constantly remembering how many parameters an instruction uses in order to find the next instruction. Similarly, RISC instruction sets are more likely to be orthogonal, which also makes them easier to read, because the ISA is structured so that particular bits represent things like addressing modes.
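A quick Python sketch of the hex-dump point: with a fixed width, instruction boundaries are pure arithmetic, while with variable lengths each boundary depends on decoding the instruction before it. The variable-length scheme here is a made-up toy encoding (the low two bits of the first byte give the length minus one), not any real ISA, and the byte values are arbitrary.

```python
def split_fixed(dump, width=4):
    """Fixed-width ISA: every instruction starts at a multiple of `width`."""
    return [dump[k:k + width] for k in range(0, len(dump), width)]


def toy_length(dump, pos):
    """Hypothetical encoding: low two bits of the first byte, plus one."""
    return (dump[pos] & 0x03) + 1


def split_variable(dump, length_of):
    """Variable-length ISA: must decode each instruction to find the next."""
    pieces, pos = [], 0
    while pos < len(dump):
        size = length_of(dump, pos)
        pieces.append(dump[pos:pos + size])
        pos += size
    return pieces


fixed_dump = bytes.fromhex("0011223344556677")
variable_dump = bytes.fromhex("0001aa02bbcc")

print([piece.hex() for piece in split_fixed(fixed_dump)])
print([piece.hex() for piece in split_variable(variable_dump, toy_length)])
```

In the fixed-width case you can jump straight to the tenth instruction. In the variable-length case you have to walk the nine before it, which is exactly the bookkeeping a human reader ends up doing by eye.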

Wolfeye M.

September 26, 2019 at 7:51 am

Never heard of ARM chips before, and it turns out that I’m probably holding one in my hand right now, as I type this on my phone. Funny how that works out sometimes.

Leo Vellés

December 24, 2019 at 5:59 pm

“The machine that Acorn designed as a home for the new chip was called the Acorn Archimedes — or at times, because Acorn at been able to retain the official imprimatur of the BBC, the BBC Archimede”.

The second “at” (“because Acorn at been able.. “) seems wrong

Jimmy Maher

December 25, 2019 at 11:21 am

Thanks!

Ben

October 19, 2023 at 10:21 pm

gone on to be become -> gone on to become

profits margins -> profit margins

Jimmy Maher

October 20, 2023 at 12:24 pm

Thanks!