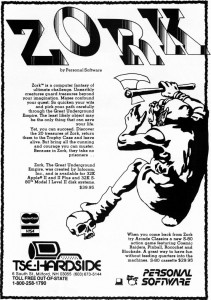

In November of 1980 Personal Software began running the advertisement above in computer magazines, plugging a new game available then on the TRS-80 and a few months later on the Apple II. It’s not exactly a masterpiece of marketing; its garish, amateurish artwork is defensible only in being pretty typical of the era, and the text is remarkably adept at elucidating absolutely nothing that might make Zork stand out from its text-adventure peers. A jaded adventurer might be excused for turning the page on Zork’s “mazes [that] confound your quest” and “20 treasures” needing to be returned to the “Trophy Case.” Even Scott Adams, not exactly a champion of formal experimentation, had after all seen fit to move beyond simplistic fantasy treasure hunts at least from time to time, and Zork didn’t even offer the pretty pictures of On-Line Systems’s otherwise punishing-almost-to-the-point-of-unplayability early games.

In fact, though, Zork represented a major breakthrough in the text-adventure genre — or maybe I should say a whole collection of breakthroughs, from its parser that actually displayed some inkling of English usage in lieu of simplistic pattern matching to the in-game text that for the first time felt crafted by authors who actually cared about the quality of their prose and didn’t find proper grammar and spelling a needless distraction. In one of my favorite parts of Jason Scott’s Get Lamp documentary, several interviewees muse about just how truly remarkable Zork was in the computing world of 1980-81. The consensus is that it was, for a brief window of time, the most impressive single disk you could pull out to demonstrate what your new TRS-80 or Apple II was capable of.

Zork was playing in a whole different league from any other adventure game, a fact that’s not entirely surprising given its pedigree. You’d never guess it from the advertisement above, but Zork grew out of the most storied area of the most important university in computer-science history: MIT. In fact, Zork’s pedigree is so impressive that it’s hard to know where to begin and harder to know where to end in describing it, hard to avoid getting sucked into an unending computer-science version of “Six Degrees of Kevin Bacon.” To keep things manageable I’ll try as much as I can to restrict myself to people directly involved with Zork or Infocom, the company that developed it. So, let’s begin with Joseph Carl Robnett Licklider, a fellow who admittedly played more of a tangential than a direct role in Infocom’s history but who does serve as an illustration of the kind of rarefied computer-science air Infocom was breathing.

Born in 1915 in St. Louis, Licklider was a psychologist by trade, but he had just the sort of restless intellect that Joseph Weizenbaum would later feel was missing from a new generation of MIT scholars. He received a triple BA degree in physics, mathematics, and psychology from St. Louis’s Washington University at age 22, having also flirted with chemistry and fine arts along the way. He settled down a bit to concentrate on psychology for his MA and PhD, but remained consistently interested in connecting the “soft” science of psychology with the “hard” sciences and with technology. And so, when researching the psychological component of hearing, he learned more about the physical design of the human and animal auditory nervous systems than do many medical specialists. (He once described it as “the product of a superb architect and a sloppy workman.”) During World War II, research into the effects of high altitude on bomber crews led him to get equally involved with the radio technology they used to communicate with one another and with other airplanes.

After stints at various universities, Licklider came to MIT in 1950, initially to continue his researches into acoustics and hearing. The following year, however, the military-industrial complex came calling on MIT to help create an early-warning network for the Soviet bombers it envisioned dropping down on America from over the Arctic Circle. Licklider joined the resulting affiliated institution, Lincoln Laboratory, as head of its human-engineering group, and played a role in the creation of the Semi-Automatic Ground Environment (SAGE), by far the most ambitious application of computer technology conceived up to that point and, for that matter, for many years afterward. SAGE, created by MIT’s Lincoln Lab with IBM and other partners, had at its heart a collection of IBM AN/FSQ-7 mainframes, physically the largest computers ever built (a record that they look likely to hold forever). The system compiled data from many radar stations to allow operators to track a theoretical incoming strike in real time. They could scramble and guide American aircraft to intercept the bombers, enjoying a bird’s-eye view of the resulting battle. Later versions of SAGE even allowed them to temporarily take over control of friendly aircraft, guiding them to the interception point via a link to their autopilot systems. SAGE remained in operation from 1959 until 1983, cost more than the Manhattan Project that had opened this whole can of nuclear worms in the first place, and was responsible for huge advances in computer science, particularly in the areas of networking and interactive time-sharing. (On the other hand, considering that the nuclear-bomber threat SAGE had been designed to counter had been largely superseded by the ICBM threat by the time it went operational, its military usefulness is debatable at best.)

During the 1950s most people, including even many of the engineers and early programmers who worked on them, saw computers as essentially huge calculators. You fed in some numbers at one end and got some others out at the other, whether they be the correct trajectory settings for a piece of artillery to hit some target or other or the current balances of a million bank customers. As he watched early SAGE testers track simulated threats in real time, however, Licklider was inspired to a radical new vision of computing, in which human and computer would actively work together, interactively, to solve problems, generate ideas, perhaps just have fun. He took these ideas with him when he left the nascent SAGE project in 1953 to float around MIT in various roles, all the while drifting slowly away from traditional psychology and toward computer science. In 1957 he became a full-time computer scientist when he (temporarily, as it turned out) left MIT for the consulting firm Bolt Beranek and Newman, a company that would play a huge role in the development of computer networking and what we’ve come to know as the Internet. (Loyal readers of this blog may recall that BBN is also where Will Crowther was employed when he created the original version of Adventure as a footnote to writing the code run by the world’s first computerized network routers.)

Licklider, who insisted that everyone, even his undergraduate students, just call him “Lick,” was as smart as he was unpretentious. Speaking in a soft Missouri drawl that could obscure the genius of some of his ideas, he never seemed to think about personal credit or careerism, and possessed not an ounce of guile. When a more personally ambitious colleague stole one of his ideas, Lick would just shrug it off, saying, “It doesn’t matter who gets the credit; it matters that it gets done.” Everyone loved the guy. Much of his work may have been funded by the realpolitik of the military-industrial complex, but Lick was by temperament an idealist. He became convinced that computers could mold a better, more just society. In it, humans would be free to create and to explore their own potential in partnership with the computer, which would take on all the drudgery and rote work. In a surprising prefiguring of the World Wide Web, he imagined a world of “home computer consoles” connected to a larger network that would bring the world into the home, and do so interactively, unlike the passive, corporate-controlled medium of television. He spelled out all of these ideas carefully in a 1960 paper, “Man-Computer Symbiosis,” staking his claim as one of a long line of computing utopianists who would play a big role in the development of more common-man-friendly technologies like the BASIC programming language and eventually of the microcomputer itself.

In 1958, the U.S. government formed the Advanced Research Projects Agency in response to alleged Soviet scientific and technological superiority in the wake of the Soviets’ launch of Sputnik, the world’s first artificial satellite, the previous year. ARPA was intended as something of a “blue-sky” endeavor, pulling together scientists and engineers to research ideas and technology that might not be immediately applicable to ongoing military programs, but that might just prove to be in the future. It became Lick’s next stop after BBN: in 1962 he took over as head of its “Information Processing Techniques Office.” He remained at ARPA for just two years, but is credited by many with shifting the agency’s thinking dramatically. Previously ARPA had focused on monolithic mainframes operating as giant batch-processing “answer machines.” From Where Wizards Stay Up Late:

> The computer would be fed intelligence information from a variety of human sources, such as hearsay from cocktail parties or observations of a May Day parade, and try to develop a best-guess scenario on what the Soviets might be up to. “The idea was that you take this powerful computer and feed it all this qualitative information, such as ‘The air force chief drank two martinis,’ or ‘Khrushchev isn’t reading Pravda on Mondays,’” recalled Ruina. “And the computer would play Sherlock Holmes and conclude that the Russians must be building an MX-72 missile or something like that.”

“Asinine kinds of things” like this were the thrust of much thinking about computers in those days, including plenty in prestigious universities such as MIT. Lick, however, shifted ARPA in a more manageable and achievable direction, toward networks of computers running interactive applications in partnership with humans — leave the facts and figures to the computer, and leave the conclusions and the decision-making to the humans. This shift led to the creation of the ARPANET later in the decade. And the ARPANET, as everyone knows by now, eventually turned into the Internet. (Whatever else you can say about the Cold War, it brought about some huge advances in computing.) The humanistic vision of computing that Lick championed, meanwhile, remains viable and compelling today as we continue to wait for the strong AI proponents to produce a HAL.

Lick returned to MIT in 1968, this time as the director of the legendary Project MAC. Formed in 1963 to conduct research for ARPA, MAC stood for either (depending on whom you talked to) Multiple Access Computing or Machine Aided Cognition. Those two names also define the focus of its early research: into time-shared systems that let multiple users share resources and use interactive programs on a single machine; and into artificial intelligence, under the guidance of Marvin Minsky, who had founded MIT’s AI group together with John McCarthy (inventor of the term itself) before McCarthy decamped for Stanford in 1962. I could write a few (dozen?) more posts on the careers and ideas of these two men, fascinating, problematic, and sometimes disturbing as they are. I could say the same about many other early computing luminaries at MIT with whom Lick came into close contact, such as Ivan Sutherland, inventor of the first paint program and, well, pretty much the whole field of computer-graphics research, and also Lick’s successor at ARPA. Instead, I’ll just point you (yet again) to Steven Levy’s Hackers for an accessible if necessarily incomplete description of the intellectual ferment at 1960s MIT, and to Where Wizards Stay Up Late by Matthew Lyon and Katie Hafner for more on Lick’s early career as well as BBN, MIT, and our old friend Will Crowther.

Project MAC split in two in 1970, when its AI group broke away to become the MIT AI Laboratory; what remained would later take the name Laboratory for Computer Science (LCS). Lick stayed with the latter as a sort of grandfather figure to a new generation of young hackers that gradually replaced the old guard described in Levy’s book as the 1970s wore on. He had a shrewd mind, always ready to take up their ideas, and thanks to his network of connections in government and industry he could always get funding for them.

LCS consisted of a number of smaller working groups, one of which was known as the Dynamic Modeling Group. It’s oddly difficult to pin any of these groups down to a single purpose. Indeed, it’s not really possible to do so even for the AI Lab and LCS themselves; plenty of research that could be considered AI work happened at LCS, and plenty that did not comfortably fit under that umbrella took place at the AI Lab. (For instance, Richard Stallman developed the ultimate hacker text editor, EMACS, at the AI Lab: a worthy project, certainly, but hardly one that had much to do with artificial intelligence.) Groups and the individuals within them were given tremendous freedom to hack on any justifiable projects that interested them (with the unjustifiable of course being left for after hours), a big factor in LCS and the AI Lab’s becoming such beloved homes for hackers. Indeed, many put off graduating or ultimately didn’t bother at all, so intellectually fertile was the atmosphere inside MIT in contrast to what they might find in any “proper” career track in private industry.

The director of the Dynamic Modeling Group was a fellow named Albert (Al) Vezza; he also served as an assistant director of LCS as a whole. And here we have to be a little bit careful. If you know something about Infocom’s history already, you probably recognize Vezza as the uptight corporate heavy of the story, the guy who couldn’t see the magic in the new medium of interactive fiction that the company was pursuing, who insisted on trivializing the game division’s work as a mere source of funding for a “serious” business application, and who eventually drove the company to ruin with his misplaced priorities. Certainly there’s no apparent love lost between the other Infocom alumni and Vezza. An interview with Mike Dornbrook for an MIT student project researching Infocom’s history revealed the following picture of Vezza at MIT:

> Where Licklider was charismatic and affectionately called “Lick” by his students, Vezza rarely spoke to LCS members and often made a beeline from the elevator to his office in the morning, shut the door, and never saw anyone. Some people at LCS were unhappy with his managerial style, saying that he was unfriendly and “never talked to people unless he had to, even people who worked in the Lab.”

On the other hand, Lyon and Hafner have this to say:

> Vezza always made a good impression. He was sociable and impeccably articulate; he had a keen scientific mind and first-rate administrative instincts.

Whatever his failings, Vezza was much more than an unimaginative empty suit. He in fact had a long and distinguished career which he largely spent furthering some of the ideas first proposed by Lick himself; he appears in Lyon and Hafner’s book, for instance, because he was instrumental in organizing the first public demonstration of the nascent ARPANET’s capabilities. Even after the Infocom years, his was an important voice on the World Wide Web Consortium, which defined many of the standards that still guide the Web today. Certainly it’s a disservice to Vezza that his Wikipedia page is devoted entirely to his rather inglorious tenure at Infocom, a time he probably considers little more than a disagreeable career footnote. That footnote is of course the main thing we’re interested in, but perhaps we can settle for now on a picture of a man with more of the administrator or bureaucrat than the hacker in him and who was more of a pragmatist than an idealist, and who had some trouble relating to his charges as a consequence.

Many of those charges had names that Infocom fans would come to know well: Dave Lebling, Marc Blank, Stu Galley, Joel Berez, Tim Anderson, etc., etc. Like Lick, many of these folks came to hacking from unexpected places. Lebling, for instance, obtained a degree in political science before getting sucked into LCS, while Blank commuted back and forth between Boston and New York, where he somehow managed to complete medical school even as he hacked like mad at MIT. One thing, however, most certainly held true of everyone: they were good. LCS didn’t suffer fools gladly — or at all.

One of the first projects of the DMG was to create a new programming language for their own projects, which they named with typical hacker cheekiness “Muddle.” Muddle soon became MDL (MIT Design Language) in response to someone (Vezza?) not so enamoured with the DMG’s humor. It was essentially an improved version of an older programming language developed at MIT by John McCarthy, one which was (and remains to this day) the favorite of AI researchers: LISP.

With MDL on hand, the DMG took on a variety of projects, individually or cooperatively. Some of these had real military applications to satisfy the folks who were ultimately funding all of these shenanigans; Lebling, for instance, spent quite some time on computerized Morse-Code recognition systems. But there were plenty of games, too, in some of which Lebling was also a participant, including the best remembered of them all, Maze. Maze ran over a network, with up to 8 Imlac PDS-1s, very simple minicomputers with primitive graphical capabilities, serving as “clients” connected to a single DEC PDP-10 “server.” Players on the PDS-1s could navigate around a shared environment and shoot at each other, making Maze an ancestor of modern games like Counter-Strike. Maze became a huge hit, and a real problem for administrative types like Vezza; not only did a full 8-player game stretch the PDP-10 server to the limit, but it also tended sooner or later to crash the machine outright, and others needed that machine for “real” work. Vezza demanded again and again that it be removed from the systems, but trying to herd the cats at DMG was pretty much a lost cause. Amongst other “fun” projects, Lebling also created a trivia game which allowed users on the ARPANET to submit new questions, leading to an eventual database of thousands.

And then, in the spring of 1977, Adventure arrived at MIT. As at computer-science departments all over the country, work there essentially came to a standstill while everyone tried to solve it; the folks at DMG finally got the “last lousy point” with the aid of a debugging tool. And with that accomplished, they began, like many other hackers in many other places, to think about how they could make a better Adventure. DMG, however, had some tools to hand that would make them almost uniquely suited to the task.

Jason Scott

January 2, 2012 at 7:58 am

Now we’re cooking with gas.

gnome

January 3, 2012 at 4:27 pm

Another excellently excellent article, and, well, Happy 2012!

honorabili

January 3, 2012 at 5:14 pm

Thank you for posting their history.

Todd

January 4, 2012 at 5:06 am

I always look forward to your posts, keep up the awesome work..

Ziusudra

January 30, 2015 at 9:38 pm

So, it was Vezza. I always thought it was Reagan.

Jimmy Maher

January 31, 2015 at 9:13 am

That Vezza was an evil jerk, wasn’t he? I heard he strangled kittens just for fun. Thanks!

Leecolins

September 1, 2018 at 1:24 am

Mmm. Gas.

But, you know, the good cooking gas.

Erik Mason

January 12, 2019 at 10:48 pm

“…SAGE remained in operation from 1959 until 1983, cost more than the Manhattan Project that had opened this whole can of nuclear worms in the first place,…”

So the Third Reich nor the Soviet Union were not working on their own “Manhattan Projects”?

The “Manhattan Project” was the only nuclear research going on in the entire world at this time, never occurred to any country engaged in world domination?

Stick to facts and keep the politics out of a simple history of Infocom.

Jeff Nyman

June 21, 2021 at 7:24 pm

Agreed. This kind of thing in these articles can be a little off-putting. It’s also not even historically accurate. The Tube Alloys project in the United Kingdom (working with Canada) to develop nuclear weapons and this was before the Manhattan Project. And, of course, there was the German Uranprojekt, started well before either of the above projects. The Soviet program never really got off the ground, particularly after Germany invaded the Soviet Union in 1941. But no doubt they would have continued that work.

This is the danger of being (or at least sounding) selective with history.

Heather L

August 15, 2025 at 6:16 am

The Nazis were barking up the wrong tree on nuclear physics, after driving off so many of those who built bombs for the US and USSR. You’ll note they were crushed by purely conventional warfare months before Trinity succeeded.

The British were unable to proceed with Tube Alloys in the long term, hence throwing in with the US.

The Soviets relied heavily on having moles and other collaboration all over the Manhattan Project in order to run their own program. And even with all that, it still took them to 1949 to pull their own weapon off.

Shoot, before the US nuked Japan, we were already burning and bombing as many of their cities into smoldering rubble as we could and they were barely able to muster any response. It wasn’t exactly necessary to have nukes ready to get Japan to surrender, Hiroshima and Nagasaki were sitting ducks for the incendiary and conventional bombing raids that had leveled over 90% of Tokyo.

Now of course we get to know how well or how badly the other nuclear programs were going because it’s all hindsight for us. Obviously things looked different at the time.

But his point is very much true – SAGE strictly existed because we had gone and taught what was first an enemy, then a short term ally, then an enemy again how to make the bombs in the first place! Unknowingly, but it happened anyway, and in the end we can say that the Manhattan Project just hadn’t needed to exist. Just like SAGE ultimately didn’t need to exist beyond a very short time where the threat it could handle mattered – ICBMs essentially relegating the 40s nuclear bomber concept to something that might at most be a follow-up taunt after the missiles already wiped out cities and military bases alike.

Jeff Nyman

September 27, 2025 at 6:10 pm

Great discussion here! Your broader point about nuclear proliferation creating new security challenges like SAGE has merit, without doubt, but characterizing the Manhattan Project as unnecessary requires more hindsight than was available to decision-makers at the time, as you note. The main point here was the contention that the Manhattan Project opened up the nuclear can of worms. That’s the false part, or at least it’s far more nuanced.

A more accurate assessment would be that the Manhattan Project was the first to successfully exploit the can of worms, but it didn’t open it. The discovery of fission itself opened it. If the U.S. hadn’t pursued nuclear weapons, it’s highly likely that either the Soviet Union, Britain, or potentially Germany would have developed them eventually anyway, just possibly later and with different geopolitical consequences. Heck, even Japan had two separate, largely uncoordinated, and definitely underfunded nuclear research programs during World War II, code-named Ni-Go and F-Go.

To say that “in the end we can say that the Manhattan Project just hadn’t needed to exist” is severely oversimplified and reflects classic hindsight bias. Saying “it didn’t need to exist” is like saying you didn’t need to buy fire insurance because your house didn’t burn down. The decision has to be evaluated based on the information and risks available at the time, not the outcome we know happened. Similarly, we can’t argue that because other players failed to win a race, there was no need to run it. That completely ignores the potentially catastrophic consequences if they had been wrong.

So, while the Manhattan Project certainly accelerated nuclear proliferation and created the specific Cold War dynamics (like SAGE), characterizing it as uniquely responsible for “opening” the nuclear age vastly overstates its causal role. The nuclear genie was already emerging from multiple bottles simultaneously.

This is all far removed from games and even Infocom, of course, but it does highlight an important part of any historical writing: the need to read history forwards, not backwards. That comes up a lot in game coverage, where I often see a lot of hindsight bias around game mechanics or decisions of game companies and so on. So, while this (fun) discussion may seem peripheral, it’s actually highlighting a really important consideration for these kinds of articles in general.

Will Moczarski

September 28, 2019 at 5:32 pm

Loyal readers of this blog may also recall that BBN is also where Will Crowther was employed

This seems like one also too many.

— A loyal reader of this blog ;-)

Will Moczarski

September 28, 2019 at 5:34 pm

Lick was by temperment an idealist.

-> by temperament?

Jimmy Maher

October 1, 2019 at 6:53 am

Thanks!

Ben

April 22, 2020 at 6:31 pm

such Ivan Sutherland -> such as Ivan Sutherland

much to with artificial intelligence -> much to do with artificial intelligence

game’s division work -> game division’s work

Jimmy Maher

April 23, 2020 at 8:11 am

Thanks!

Busca

March 3, 2022 at 12:12 pm

Just wondering as a non-native speaker: Shouldn’t the dash in “high-altitude” be omitted here since it is not used as a combined adjective, but as a noun plus adjective?

Jimmy Maher

March 4, 2022 at 8:53 am

Yes. Thanks!

DZ-Jay

May 11, 2023 at 6:51 pm

Just re-read this article and noticed an error:

The first instance of the term “ARPANET” should actually be “ARPA.” You first pointed out their original vision, and proceeded to describe their pivot to a new direction under Licklider.

So, it should be:

>> “Lick, however, shifted _ARPA_ in a more manageable and achievable direction […]”

dZ.

Jimmy Maher

May 12, 2023 at 6:39 am

Thanks!

Vince

July 12, 2023 at 4:53 am

“Licklider was inspired to a radical new vision of computing, in which human and computer would actively work together, interactively, to solve problems, generate ideas, perhaps just have fun. ”

Reading this in 2023, it’s really interesting how this could describe an interaction with an advanced LLM model. Visionary indeed.

“It was essentially an improved version of an older programming language developed at MIT by John McCarthy, one which was (and remains to this day) the favorite of AI researchers: LISP.”

It’s safe to say that with the recent focus on neural networks and the need for computing power afforded by special hardware and libraries, LISP has been finally dethroned by Python.

Adam Huemer

February 2, 2025 at 12:01 am

“Even after the Infocom years, his was an important voice on the World Wide Web”

Shouldn’t this be “(…), he was an (…)”?

Jimmy Maher

February 3, 2025 at 1:46 pm

It’s a little idiomatic, but as intended. ;)