We saw in the last article how CompuServe’s user-driven philosophy led to this online service becoming an online community, steered to a large extent by its subscribers. Yet the choice between a content-driven model and a user-driven model has never really constituted a zero-sum proposition, whether on the Internet of today or the CompuServe of the 1980s. In fact, virtually from the moment that Jeff Wilkins decided the nascent MicroNET had potential that was worth seriously investing in — a moment we can date to the very end of 1979 — he started casting about for information and applications which CompuServe’s users couldn’t possibly create for themselves.

The list of top-down initiatives CompuServe would launch over the next several years reads amazingly similar to the list of aspirations, sketchily fulfilled if at all, with which The Source had made its much more high-profile debut. But whether Wilkins really was checking off the items on Bill von Meister’s original list or coming up with this stuff on his own doesn’t matter much in the end. What is important is how much of the daily online life of today was first tried out on CompuServe in the 1980s. Sometimes, as we’ve already seen in the case of the attempt to launch a digital-download service for commercial software, the world would prove not quite ready for what CompuServe strove to offer it. Still, the simple fact of the striving has historical significance of its own.

Very early on, Wilkins determined to bring the news to CompuServe. With The Source having cornered United Press International, he chose to ask the other national news wire, the Associated Press, to make their feed of important stories available to his subscribers. The almost accidental result of his inquiries was something even more prescient, a full-blown collision between the titans of Old Media and what would soon be known as the New Media. Jeff Wilkins:

I had been thinking about news for a long time — the potential to have it be searchable and immediate. And of course it lent itself to text pretty well; we were still at that point in time limited to all text.

I called the local newspaper, the Columbus Dispatch, and said that we’re building this service, and we’d like to have the AP wire; that’s where all the news came from in those days. They said the AP didn’t do that, but you could work on a test to convince them to participate. So, they gave us a test feed, and our technical team took that and parsed it and figured out how to set up menus and all that sort of thing. So, we had a crude working model of a news feed.

Then I called the Associated Press in New York and said I’d like to come talk to them about an idea. Of course, they gave me to a lower-level staffer. But I met him in New York and told him what we were trying to do. He said, “The AP is all the newspapers. They have a board of directors who make all the decisions. I doubt they’d be interested in this, but we’re having our conference in Hawaii next week. If you’ll let me take this demo you’ve just shown me out there, I’ll show it to them and see what they think. Then I’ll get back to you.” His name was Henry Heilman. He was a great guy.

About a week later, I’m in Columbus in my office and the phone rings. “This is Henry Heilman. I’m in Hawaii. Our board would like to come to Columbus to talk about your proposal.”

I said, “That’d be great! When would they like to come?”

He said, “They’d like to come next week.”

I said, “Who’s coming?”

He started to name names. And I recognized a couple of them. One was Katharine Graham from the Washington Post. Another was [Arthur Ochs] Sulzberger from the New York Times.

So, we set it up. It was really funny. Katharine Graham’s secretary called me and said, “Can you have a car for Mrs. Graham?”

I said, “What do you mean by a car?”

He said, “A limousine.”

We didn’t have a limousine service in Columbus, at least not that I ever used. But anyway, I made arrangements to have her and everybody else picked up.

So, ten of these people came to our little conference room, and we made a presentation.

They said, “What’s your proposal?”

I said, “Well, I would like to have ten newspapers participate in a test of an electronic-newspaper service, and in exchange I’d like advertising in your newspapers worth $250,000 apiece, talking about this project.”

They said, “Can we have a few minutes to talk?” They were in there 45 minutes. I remember sweating profusely, thinking they were never going to go on with this. But they came back and said, “Yes, we’ll accept the proposal — with one condition: we offer it to all our newspapers, and let any participate that want to, provided that the ten of us can [also] be in the test.”

So, that was how we kicked it all off. I said, “I have one final request: the Columbus Dispatch will be the first newspaper that comes online.”

They agreed, and that was how the electronic-newspaper [service] launched.

CompuServe is demonstrated to members of the Associated Press in 1980. Standing in the back row from left are Jeff Wilkins, Katharine Graham of the Washington Post, and John F. Wolfe of the Columbus Dispatch, which was soon to become the first newspaper in history to go online.

Wilkins’s tale serves to illustrate that the entrenched forces of establishment media aren’t always quite as hidebound as they may first appear. In fact, the vaguely defined idea of “electronic publishing” was very much in vogue at the time in certain circles, albeit greeted with equal measures of excitement and trepidation. It was the former impulse that led Reader’s Digest, by reputation at least about the most hidebound media institution of all, to buy a controlling interest in The Source in 1980, the same year CompuServe struck their newspaper deal.

But the fear that would always remain at the root of traditional publishing’s long, fraught negotiation with the online world was never hard to find just below the surface. Jim Batton of Knight-Ridder Newspapers was one of the more prominent skeptics, voicing fears that were in their way as prescient as the more optimistic rhetoric that came to surround this brave new world of online news: “Our concern was that if people might get their information in this way, they might no longer need newspapers.” Katharine Graham was playing both sides of the fence, lobbying in Congress for legislation that would prevent telephone companies from becoming “information providers” even as she was signing on with CompuServe.

Indeed, it appeared the newspaper industry in general didn’t entirely know its own mind. Keith Fuller, president of the Associated Press, summed up the two views that were at war within the psyches of people like Graham in these terms: “One [view is] that electronic delivery is the future knocking at the door, and the other [is] that electronic delivery is a disaster hunting a victim.” The decision to get in bed with CompuServe was not without controversy inside the AP’s member newspapers. One union, The Twin Cities Newspaper Guild No. 2, held a 26-day strike against the Minneapolis Star and Tribune after they elected to participate in the experiment. The union’s delivery carriers demanded guarantees that they would not lose their positions with a switch to electronic delivery, while editors and writers demanded that they receive the same residuals on electronically published articles as those they were accustomed to receiving for articles published on paper. It seems an absurdly early point for such conflicts to have begun, given the vanishingly small number of people who actually had the equipment and the willingness to reach their local newspaper online in 1980, but there you have it.

For CompuServe, on the other hand, the deal represented just another way to reach out to Middle America, to reach customers early and make their online service the only credible example of same in the eyes of most of them. They saw the importation of actual newspapers to CompuServe, rather than just a news wire, as a very significant step toward those goals. The news wires provided the skeleton of what people looked for in their hometown newspapers, but the meat and the personality were found in the local human-interest stories, the opinion columns, the entertainment guides. These were the things that made spending a long, lazy weekend morning over the local newspaper and a pot of coffee such a mainstay of American life. In this light, the fact that it was the little Columbus Dispatch that first established an online presence rather than one of the big papers of record feels appropriate.

Each of the newspapers that participated in the program offered free time on CompuServe to any of their subscribers who wished to get a glimpse of the cyberspace future of journalism. The enormous attention the experiment garnered throughout the mainstream media made CompuServe a household name for the first time, at least among those interested in technology in the abstract. Rich Baker, a CompuServe executive:

All of a sudden, we had the biggest newspapers in the country running stories about CompuServe Information Service. The news stories spun off into wire stories, and our getting on the Today show. The Today crew came here so Garrick Utley could deliver the story. We got an incredible amount of exposure from the newspaper experiment. No amount of paid advertising could have accomplished such a feat.

While the experiment was a roaring success from the standpoint of CompuServe, the results from the newspapers’ standpoint were considerably more mixed — doubtless much to the relief of organizations like The Twin Cities Newspaper Guild No. 2. In the initial flurry of excitement, a few of the newspapers had devoted entire editorial staffs to their online editions, a practice that quickly proved untenable given the small number of online readers. Meanwhile even many of the early adopters among the reading public who had greeted the idea of an online newspaper with excitement had to admit in the end that it was a heck of a lot more pleasant to read a 25¢ physical newspaper than it was to watch stories scroll slowly onto a computer screen, bereft of illustrations or proper typesetting, at a price of $6 per hour — not to mention that it was a heck of a lot easier to read a paper-based newspaper at the breakfast table than it was to set up a computer there.

The trial program officially ended in June of 1982, and most of the fifteen or so newspapers who had participated ended their presence on CompuServe along with it. CompuServe’s grander plans for online news were eventually replaced by something called the Executive News Service, a much more limited digest of relevant wire reports for, as the name would indicate, the busy businessperson on the go. Tellingly, CompuServe shifted from telling potential customers about all the prestigious newspapers on offer to offering them the opportunity to “create your own newspaper” — a formulation much more in keeping with the user-driven ethos that had come to define so much of the service.

Another area where CompuServe reached toward a future that would prove to be just out of their grasp was online banking. On October 9, 1980, they announced a partnership with Radio Shack and the United American Bank of Knoxville, Tennessee, to offer the bank’s customers online access to their accounts. According to the press release, customers would be able to “receive current information on their checking accounts, use a bookkeeping service, and apply for loans,” with many more functions, including online bill paying and tax services, planned for the future. The service would represent, according to the bank’s president, “convenience banking without leaving home.” It certainly sounded promising, but it was a struggle to find any takers for the offer, limited as it was to United American Bank’s existing customers in eastern Tennessee. With computer security in its relative infancy, the safety of this, the most important of all their personal information, was a concern repeatedly and justifiably expressed by those who were surveyed on the topic. In the end, instead of becoming the first of many banks to go online, United American Bank elected to terminate the experiment within six months. It seemed that online banking, even more so than online newspapers, was an idea that was still just a little too far ahead of its time.

But other far-seeing ventures proved more successful. In 1982, just as the big newspaper experiment was ending, another electronic-publishing initiative was getting started. The World Book Encyclopedia went online with CompuServe that year, thus inadvertently hammering the first nail into the coffin of the paper-based encyclopedia. Countless wired schoolchildren were soon using this early ancestor of our own ubiquitous Wikipedia to write their reports without ever having to darken the door of a library.

Another, even more important initiative arrived in early 1984 in the form of the Electronic Mall. Once again, it had been The Source who had originated the idea of an online shopping emporium, making it part of their service from Day One. But, once again, online shopping had always been more of an aspiration than a reality there: few retailers initially set up storefronts, which led to few of The Source’s already scant subscribers taking an interest, which gave few other retailers much encouragement to join the fray. And so it was left to the more methodical CompuServe to become the real pioneers of e-commerce.

In contrast to The Source’s shopping mall, CompuServe’s Electronic Mall debuted with a very impressive list of online storefronts, a tribute to how powerful and well-connected Jeff Wilkins’s erstwhile corporate data processor was becoming in the consumer marketplace. Many of the early names in the Electronic Mall could indeed be found in the typical American brick-and-mortar shopping mall: Sears, Waldenbooks, American Express, Kodak, E.F. Hutton, in addition to the expected list of computer-oriented shops, which boasted names like Commodore and Microsoft. But just as notable as all the big names were all the little ones. In another early testament to the leveling effect of so much of online life, small online-only vendors clustered side by side with some of the biggest corporate trademarks in the country. The Electronic Mall would remain a fixture for the next decade and change, doing very well for CompuServe and many of the entities who opened storefronts there. In the process, it became the first really successful example of e-commerce, yet another blueprint for what the future would eventually bring to everyone.

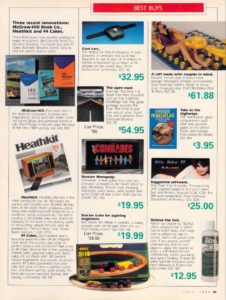

This page from CompuServe’s print magazine Online Today shows some of the wide variety of products that could be purchased from the Electronic Mall by 1989.

Speaking of which: the same year that the Electronic Mall went online, Trans World Airlines opened a gateway to their internal reservations system on CompuServe, allowing subscribers to book their own travel. “This will be the first time that comprehensive worldwide airline information and fares will be available to consumers,” said a proud Jeff Wilkins. Other airlines followed, as did rental-car providers and hotels, precipitating a slow-rolling transformation in the way that people travel — and making life much more difficult for lots of professional travel agents.

So, already by the dawn of 1985 CompuServe encompassed an astonishing swathe of what we’ve come to think of as modern online life, some of it driven by users, some by content providers: email, forums, chat, news, encyclopedias, shopping, travel reservations. And even some of the things missing from that list, like digital distribution of commercial software and online banking, had been tried but had proved impractical. The range is so broad and so far-reaching that some of the technical pioneers who worked for CompuServe have in recent years made a lucrative sideline out of testifying to their prior art in patent cases, ruining the days of heaps of people who had believed themselves to be the innovators. “Almost everything people [have] tried to patent on the Internet,” notes Jeff Wilkins, “CompuServe had done in the early eighties.”

Having thus done his part for online posterity, Wilkins left the company in 1985 in order to get in on the ground floor of CD-ROM by opening a CD-pressing plant. His successor, Charlie McCall, made no dramatic changes to the solid framework Wilkins had left in place. For the remainder of the 1980s and well into the 1990s, CompuServe would just keep on trucking in business-as-usual mode, adding hundreds of thousands of new subscribers each year.

Prior to 1983, CompuServe had had the market for services like theirs virtually to themselves. Potential customers had only two other places to turn: The Source, which, perpetually mismanaged as it was, never posed all that much of a threat after 1980; and the network of private bulletin-board systems, which were regional, difficult to connect with, and, being usually able to host only one user at a time, were unable to offer anything like the same sense of real-time community. Indeed, CompuServe had deliberately tried to give the impression that theirs was the only online service that was or ever could be, deploying the word “utility” to foster a mental connection with the telephone system or the power grid (or, for modern sensibilities, with what the World Wide Web has become today).

But it was inevitable that others, seeing the growth CompuServe was enjoying, would want to enter the field. The first of these was DELPHI in March of 1983. Originally conceived as an online encyclopedia, it would always maintain a certain intellectual or literary focus. Shortly after its founding, for example, the service hosted what may have been the first online collaborative novel. In 1984, the science-fiction writer Orson Scott Card posted on DELPHI the entirety of Ender’s Game, destined to become his most famous novel, a year before it would see publication in print. Such coups aside, though, DELPHI lacked the corporate clout and the financial resources to challenge CompuServe for mainstream mindshare, and was never regarded by the latter as all that serious of a threat.

In October of 1985, however, a more serious threat did arrive in the form of GEnie. Formed by General Electric out of largely the same motivation that had led Jeff Wilkins to start MicroNET back in the day — the frustration of watching an expensive computing and telecommunications infrastructure sit all but dormant more than half of the time — GEnie arrived with an impressive array of offerings, many of them all too plainly modeled on those of its biggest competitor: chat, a forum system, shopping, news services, etc. Most of all, though, its owners planned to compete on the basis of price. In contrast to CompuServe’s $6.50 per hour, GEnie launched at a price of $5 per hour, an initial salvo in a slow-moving pricing cold war that would gradually bring down the average connection charge across the entire online-services industry over the years to come. While it would never even come close to catching CompuServe, GEnie would remain a force to be reckoned with in its own right for a long time.

And so it went, in accord with the implacable logic of capitalism. By the late 1980s there were several other viable online services as well, all orbiting the star that was CompuServe, defining themselves sometimes in their convergence, sometimes in their divergence. “We’re the more intellectual CompuServe!” said DELPHI; “We’re a cheaper version of CompuServe!” said GEnie; etc., etc. The fact that none of the services had any way of communicating with one another meant that each developed its own unique personality, partly defined by the priorities of its administrators but also partly, one senses, by random chance — or, rather, by the priorities of the people who happened to sign up in its earliest days.

For its part, CompuServe always maintained its reputation as the safe, steady online service, the one that might cost a little more than some of the others but that you knew you could rely on. A certain tradition of technical excellence which John Goltz had instilled from the company’s earliest days as a provider of corporate time-sharing services served them well in the consumer market. Their systems never, but never, went down, and even the odd glitches which often dogged their rivals’ offerings were all but unheard of. Some of their solutions to the problems of the moment were so thorough that they have remained with us to this day. In 1987, for example, CompuServe developed the Graphics Interchange Format, or GIF, as a way to allow their subscribers using many different models of computer running many different kinds of software to share pictures with one another. It would go on to become the first truly ubiquitous cross-platform graphical standard; GIF images have been created in the literal billions in the decades since the format’s inception.

Even as it expanded, the burgeoning online-services industry managed to survive at least one existential threat. In mid-1987, the Federal Communications Commission made plans to implement a fee on the local access numbers which customers used to connect to the services without incurring long-distance charges. Discount long-distance services for voice calls that made use of a similar system had always been required to send part of their revenue back to the local telephone exchanges whose equipment they used, something CompuServe and the other online services had heretofore managed to avoid. In effect, the FCC argued, users of everyday telephone services were subsidizing users of these newfangled online services. They now planned to charge the latter $4.50 to $5.40 per hour for the privilege, a move with the potential to wipe out the whole industry at a stroke. “My opinion is that online information is horrendously overpriced right now,” said one analyst. “If you raise the price, you’re cutting out more and more people.” When word of the plan got to CompuServe, they enlisted their subscribers and everyone else they could find in a furious campaign to get it rescinded before it went into effect on January 1, 1988 — and they succeeded, another testimony to their growing clout. “Aunt Minnie,” as one of the FCC’s stymied attorneys put it, would have to go on subsidizing “Joe Computer User” in the name of keeping a developing industry alive. Not that the users of CompuServe and the other online services thought of it in those terms: for them, it meant simply that they got to keep on chatting and reading and writing and shopping and playing and all the rest without seeing the prices they paid for the privilege more than double.

As they were fending off this threat at home, CompuServe was already casting an eye outside their country’s borders. They expanded into Japan in 1987, then into Switzerland and Britain the following year; other European countries then followed. Soon the stories of friendship and romance that constantly swirled around CB Simulator took on an international character: an Indiana woman moved to Dublin to marry an Irish man; a Japanese woman and her daughter moved to California to join an American man. “I would feel the same about Suzuko if she were from South Africa or lived in Moscow,” said the last. Like so many Internet chatters who would come after them, the users of CB Simulator were learning the valuable lesson that people shouldn’t be judged by the passport they happen to hold.

Another landmark moment in Charlie McCall’s tenure — if one of more symbolic than practical importance by the time it arrived — came in 1989, when CompuServe, now 500,000 members strong, gobbled up their old arch-rival The Source, which was still straggling along with 50,000 members. Thus did a pioneer which had never quite lived up to its founders’ ambitions finally meet its end.

By the early 1990s, this net before the Web which Jeff Wilkins and Bill von Meister had first conceived almost simultaneously back in 1979 was reaching its peak, with CompuServe snowballing toward an eventual 3 million subscribers, with GEnie well into the hundreds of thousands, and with all the other services beavering along as well, filling their various niches.

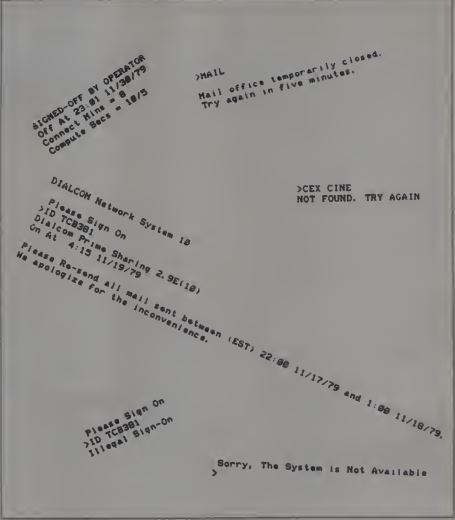

And now, having reached this high-water mark, loading you down with so many data points describing so many firsts along the way, I feel keenly my failure to convey a more impressionistic sense of what it was really like to log onto one of these services. Unfortunately, I run into a problem that’s doomed to dog any digital antiquarian who tries to write about what the kids today like to call computing in the cloud: the lack of permanent artifacts to study in such an ephemeral form of media. There is, in other words, no preserved version of CompuServe that I can play in for research purposes or point you to to do the same, as I can the offline games I write about. I have only my imperfect memories from decades ago to go on — I was actually a GEnie man, having been lured by the cheaper price — along with what was written about the experience at the time. So, I’m going to take an unusual step, sort of an inversion of what we usually do around here. Instead of using the historical environment as a pathway to understanding why a certain game is the way it is, I’m going to do the opposite: suggest a game that you might play as a way of understanding the environment that spawned it.

Judith Pintar was a CompuServe regular in 1991 when she decided to write a game to simulate and gently satirize online life as she then knew it. Working with the text-adventure language AGT, she made Cosmoserve. If you’re at all interested in learning more about pre-Web online culture, I strongly encourage you to play it. Try to solve it if you like — it’s a very good game in its own right — but feel free to use a walkthrough if you prefer.

In Cosmoserve you’ll find much of what I’ve been writing about in this and the previous article: email, the Forums, chat, the Electronic Mall. From the struggle you sometimes had just to get online at all to the suggestive gossip on CB Simulator, from the ubiquity of Turbo Pascal to a killer computer virus (yes, we already had them this early on), it’s a perfect time capsule of online life circa 1991. I’ll have more to write about Cosmoserve in a future article, but for now suffice to say that it conveys all the experiential context that I can’t quite manage to give you in non-interactive, purely historical articles like these. You can almost hear the hair-raising howl of the modem connecting and the heavy clunk of a vintage IBM keyboard. Whether it happens to be a voyage of discovery or a nostalgia trip for you personally, I think you’ll find it has a lot to offer. Bless Judith Pintar for writing it.

As it happened, though, the online milieu Pintar so ably captured in 1991 was already being threatened at the time she wrote Cosmoserve. What we’ve been tracing to this point has been a certain approach to the commercial online service, one based entirely or almost entirely on text, allowing subscribers to connect using almost any terminal program. Yet by 1991 there was another approach out there as well, which in time would lead to the biggest single online service of all — yes, bigger even than CompuServe. And when we trace its origins back to the beginning, we find the familiar name of Bill von Meister. Jeff Wilkins may have wound up stealing his thunder last time around, but the magnificent rogue wasn’t yet done shaping history.

(Sources: the book On the Way to the Web: The Secret History of the Internet and its Founders by Michael A. Banks; Online Today of February 1988 and July 1989; 80 Microcomputing of January 1981; InfoWorld of November 24 1980, April 9 1984, May 21 1984, November 5 1984, and October 21 1985; Personal Computing of January 1981 and October 1981; Family Computing of March 1984; MacWorld of September 1987; New York Times of June 16 1987; Alexander Trevor’s brief technical history of CompuServe, which was first posted to Usenet in 1988; interviews with Jeff Wilkins from the Internet History Podcast and Conquering Columbus.)