A philosopher’s life is a dangerous one…


Phoenix and Acornsoft

Cambridge was the heart of the early British PC industry, home to both Sinclair and Acorn as well as many supporting and competing concerns. Indeed, Cambridge University can boast of so many of the major achievements in computing history that easy characterizations of the university as “the MIT of Britain” or of the town as “the Silicon Valley of the UK” seem slightly condescending. It was Cambridge that nurtured Alan Turing, the most important thinker in the history of computer science, and that supplied much of the talent (Turing among them) to the World War II code-breaking effort at Bletchley Park that laid the foundation for the modern computer. Most spectacularly of all, it was at Cambridge in 1949 that EDSAC-1 came online: the first practical stored-program, fully electronic computer, and thus the first in regular service that could be programmed the way we understand that term today. (The earlier American ENIAC could be reprogrammed only by resetting switches and rerouting cables in an elaborate game of Mouse Trap that could consume weeks. At the risk of wading into a debate that has swirled for years, there’s a strong argument to be made that EDSAC-1 was the first real computer, in the sense of operating reasonably akin to what we mean by the term today.) In 1953 Cambridge became the first university to recognize computer science as a taught discipline.

For decades computing in and around Cambridge centered on whatever colossus was currently installed in the bowels of the Computing Laboratory. After EDSAC-1 came EDSAC-2 in 1958, which was in turn replaced by Titan in 1964. All of these had been essentially one-off, custom-built machines constructed by the university itself in cooperation with various British technology companies. It must therefore have seemed a dismaying sign of the changing times when the university elected to buy its next big mainframe off the shelf, as it were, and from an American company at that. Coming online in February of 1973, Phoenix (the name was meant to evoke a phoenix rising from the ashes of the newly decommissioned Titan) was a big IBM 370 mainframe of the sort found in major companies all over the world. However, just as the hackers at MIT had made their DEC machines their own by writing operating systems and tools from scratch, those at Cambridge replaced most of IBM’s standard software with new programs of their own. Thus Phoenix became, literally, a computing environment like no other.

For more than two decades Phoenix was a central fixture of life at Cambridge. (In hardware terms, there were actually three Phoenixes; newer IBM mainframes replaced older hardware in 1982 and 1989.) It was used for the expected computer-science research, much of it groundbreaking. But it also, like the contemporary American PLATO, became a social gathering place. It provided email access to a whole generation of students along with lively public discussion boards. The administrators delighted in replacing the stodginess of IBM’s standard MVS operating system with their own quirky sensibility. Phoenix’s responses to various pleas for HELP are particularly remembered.

HELP SEX

Phoenix/MVS, being of essentially neuter gender, cannot help with emotional, personal or physical human problems

HELP GOD

Please appeal to deities directly, not via Phoenix/MVS

HELP PHEONIX

Pheonix is spelt Phoenix and pronounced Feenicks.

HELP CS

CS is a standard abbreviation for Computing Service; it is also a "gas" used for riot control.

Given this freewheeling atmosphere, you’d expect to find plenty of games on Phoenix as well. And you wouldn’t be disappointed. Phoenix had all the usual suspects, from card games to chess to an implementation of Scrabble with an impressively fiendish AI opponent to play against. And, beginning in the late 1970s, there were also adventures.

Both Crowther and Woods’s Adventure and Zork (in its Dungeon incarnation, as “liberated” from MIT by Bob Supnik and ported to FORTRAN) reached Cambridge early, making it one of their first destinations outside the United States. Like hackers across the U.S. and, soon enough, the world, those at Cambridge went crazy over the games. And also like so many of their American counterparts, they had no sooner finished playing them than they started speculating about writing their own. In 1978 John Thackray and David Seal, two Cambridge graduate students, started working on a grand underground treasure hunt called Acheton. It’s often claimed that Acheton represents just the third adventure game ever created, after Adventure itself and Zork. That’s a very difficult claim to substantiate, given the number of people who were tinkering with adventures in various places in the much less interconnected institutional computing world of the late 1970s. Even among finished, documented games, Mystery Mansion and Stuga have at least as strong a claim to the title of third as Acheton. Still, Acheton was a very early effort, almost certainly the first of its kind in Britain. And it was also a first in another respect.

Looking at the problem of writing an adventure game, Thackray and Seal decided that the best approach would be to create a new, domain-specific programming language before writing Acheton proper. The result, retroactively dubbed T/SAL (“Thackray/Seal Adventure Language”) today but known simply as “that language on Phoenix used to write adventures” during its heyday at Cambridge, was the first specialized adventure programming language. (Even the PDP-10 Zork had been written in the already extant, if unusually text-adventure-suitable, MDL.) The T/SAL system is something of a hybrid between the database-driven design of Scott Adams and the more flexible, fully programmable virtual machine of Infocom. Objects, rooms, and other elements are defined as static database entries, but the designer can also make use of “programs,” routines written in an interpreted, vaguely BASIC-like language that let her implement all sorts of custom behaviors. Thackray and Seal improved T/SAL steadily as dictated by the needs of their own game in progress, always leaving it available for anyone else who might want to give adventure writing a shot. Meanwhile they also continued to work on Acheton, soon with the aid of a third partner, a PhD candidate in mathematics named Jonathan Partington. It grew into a real monster: more than 400 rooms in the final form it reached by about 1980, dwarfing even Zork in size and still qualifying today, at least in terms of sheer geographical scope, as one of the largest text adventures ever created.
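To make the idea of a “database plus programs” hybrid a little more concrete, here is a minimal Python sketch. It assumes nothing about T/SAL’s real syntax: the room names, the tiny instruction set (`stop_if_flag`, `set_flag`, `add_exit`, `say`), and the `run_program` helper are all hypothetical. Rooms live in static tables, Scott Adams style, while a “program” is a short list of instructions interpreted at run time to produce behavior the tables alone can’t express.

```python
# Hypothetical sketch of a "static database plus interpreted programs" engine.
# Nothing here reproduces T/SAL's actual syntax or instruction set.

# Static database half: rooms declared as plain data.
ROOMS = {
    "cave": {"desc": "You are in a dank cave.", "exits": {"north": "ledge"}},
    "ledge": {"desc": "You stand on a narrow ledge.", "exits": {"south": "cave"}},
    "vault": {"desc": "A hidden vault glitters with treasure.", "exits": {"west": "cave"}},
}

# Programmable half: each "program" is a list of (opcode, *args) tuples.
PROGRAMS = {
    "pull lever": [
        ("stop_if_flag", "lever_pulled", "The lever is already down."),
        ("set_flag", "lever_pulled"),
        ("add_exit", "cave", "east", "vault"),
        ("say", "Something rumbles to the east."),
    ],
}


def new_state():
    """Copy the static exits into mutable game state so programs can alter them."""
    return {
        "flags": set(),
        "exits": {name: dict(room["exits"]) for name, room in ROOMS.items()},
    }


def run_program(name, state):
    """Interpret one program against the game state and return its messages."""
    messages = []
    for op, *args in PROGRAMS[name]:
        if op == "stop_if_flag":
            flag, message = args
            if flag in state["flags"]:
                # Flag already set: report it and abandon the rest of the program.
                messages.append(message)
                return messages
        elif op == "set_flag":
            state["flags"].add(args[0])
        elif op == "add_exit":
            room, direction, destination = args
            state["exits"][room][direction] = destination
        elif op == "say":
            messages.append(args[0])
        else:
            raise ValueError(f"unknown opcode: {op}")
    return messages


state = new_state()
print(run_program("pull lever", state))  # first pull opens a new exit
print(run_program("pull lever", state))  # second pull is refused
print(state["exits"]["cave"])
```

The design choice being illustrated is the split itself: everything fixed lives in data that costs almost nothing to declare, and only the exceptional behavior pays the price of being interpreted code.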

The most important outcome of the Acheton project, however, was T/SAL and the community it spawned. The system was used to create at least fourteen more games over a decade. Running on a big mainframe freed designers from the memory restrictions of contemporary PC adventures, letting them craft big, sometimes surprisingly intricate playgrounds for a brainy audience of budding mathematicians and scientists who reveled in the toughest of puzzles. For those on their wavelength, the games became an indelible part of their student memories. Graham Nelson, easily the most important figure in interactive fiction of the post-Infocom era, was an undergraduate at Cambridge during the heyday of the Phoenix games. He writes of them in Proustian terms: “They [the Phoenix games] are as redolent of late nights in the User Area as the soapy taste of Nestlé’s vending machine chocolate or floppy, rapidly-yellowing line printer paper.” Nelson’s later puzzle-filled epics Curses and Jigsaw show the influence of these early experiences at Cambridge in their erudition and sprawl.

Yet we shouldn’t overestimate the popularity of the Phoenix games. Running under a custom operating system on an IBM design that was seldom open to fun and games at other installations, they had no chance to spread beyond Cambridge in their original incarnations. Even at the university, their sheer, unapologetic difficulty made them something of a niche interest. And authoring new games in the rather cryptic T/SAL required special dedication. Fifteen games in over ten years is not a huge number, and most of those were front-loaded into the excitement that surrounded the arrival of Adventure and the novelty of Acheton. During most years of the mid- and late 1980s it was only Jonathan Partington, who in authoring or co-authoring no fewer than eight of the fifteen games was by far the most prolific and dedicated of the T/SAL authors, who continued to actively create new work with the system. It’s probably safe to say that most of the Phoenix games had (at best) hundreds rather than thousands of serious players. Still, they would have a larger impact on the British adventuring scene outside of Cambridge’s ivory tower in a different, commercialized form.

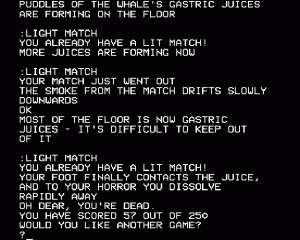

One of the first at Cambridge to pick up on the T/SAL system was an oceanography professor in his early thirties named Peter Killworth, whose seven- and three-year-old sons had taught him a healthy appreciation for the brave new world of possibility that Adventure represented: “I was constrained by what I knew about computers, but they treated the terminal as a person. While I was trying to work out what an axe was doing in a computer program, they were chopping the nearest tree down.” Killworth was intrigued enough to start tinkering with T/SAL as soon as he noticed it. He wrote a simple physics problem using the language, and through it got his introduction to the interconnected web of social problem-solving that made playing and writing early institutional adventures so different from the experience on PCs.

“I had a problem which revolved around using a pivot to get up a cliff. Put weight on one end, and the other goes up — but you have to be careful to get the weight right. I programmed it on the mainframe, and left it for a friend to have a look at. When I came back next morning, I was deluged with messages from people I’d never heard of, all telling me where I’d gone wrong in the program.”

Encouraged by all this interest, Killworth decided to make a full-fledged game to house the puzzle. The result, which he completed even before Acheton was done, he called Brand X. It was a treasure hunt fairly typical of the general Phoenix aesthetic in its cruel puzzles and relatively heady (by adventure-game standards) allusions to Descartes, Coleridge, and the Bible. Figuring that was that, Killworth then returned his full attention to oceanography — until the arrival of the BBC Micro and Acornsoft caused him to start thinking about his game again a couple of years later.

Of all the technology companies in and around Cambridge, Acorn worked hardest to foster ties with the university itself. Chris Curry and Hermann Hauser made the most of the connections Hauser had formed there during the years he spent as a Cambridge PhD candidate. With Acorn’s office located literally just around the corner from the Computing Laboratory, the two had ample opportunity to roam the corridors sniffing out the best and the brightest to bring in for their own projects. They considered Cambridge something of a secret weapon for Acorn, taking the university itself into their confidence and making it almost a business partner. Cambridge reciprocated by taking Acorn’s side almost en masse as the British computer wars of the 1980s heated up. The university grew to consider the BBC Micro the machine that it had built, and not without cause, given the number of Cambridge students and graduates on Acorn’s staff. Clive Sinclair, meanwhile, who like Chris Curry had not attended university, displayed only a grudging respect for the Cambridge talent, mingled with occasional expressions of contempt that rather smack of insecurity.

In 1980 Acorn Computers had helped one David Johnson-Davies set up Acornsoft, a publisher specializing in software for Acorn’s first popular machine, the Atom. Now, as the BBC Micro neared launch, Acornsoft would prove a valuable tool for making as much software as possible, and hopefully of as high a quality as possible, available for the new machine. Just as their big brothers had in designing the BBC Micro’s hardware, Acornsoft turned to the university (where prototypes were floating around even before the machine’s official launch) for help finding quality software of all types. They offered prospective programmers a brand new BBC Micro of their own as a sort of signing bonus upon acceptance of a program. After a friend got a statistics package accepted, Peter Killworth started to ponder whether he had something to give them; naturally, his thoughts turned to Brand X. He rewrote the game in the relatively advanced BBC BASIC, using every technique he could devise to save memory and, when that failed, simply jettisoning much of the original. (Ironically, the physics problem that got the whole ball rolling was one of those that didn’t make the final cut.) He then presented it to Acornsoft, who agreed to publish it under the title of Philosopher’s Quest, a tribute to the game’s intellectual tone proposed by Johnson-Davies’s right-hand man Chris Jordan. It was published in mid-1982, the first game in the Acornsoft line. Killworth hoped it would sell 500 copies or so and earn him a little extra pocket money; in the end it sold more than 20,000.

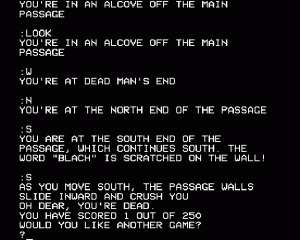

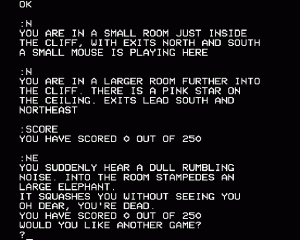

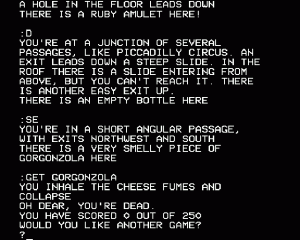

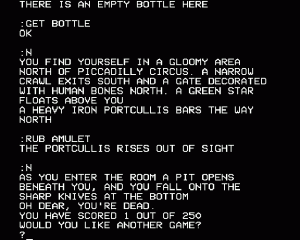

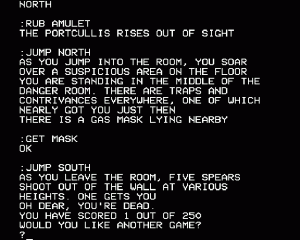

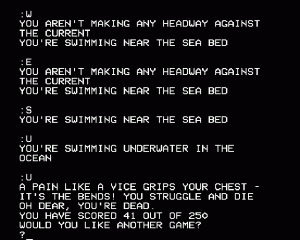

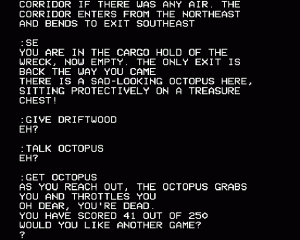

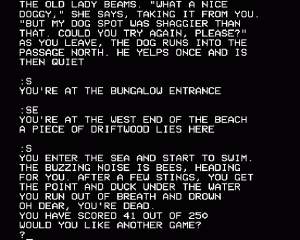

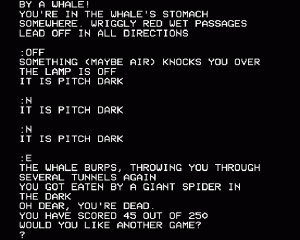

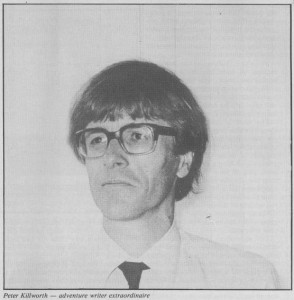

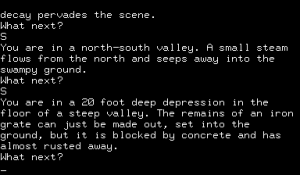

Even in its chopped-down microcomputer incarnation, Philosopher’s Quest provides a pretty good introduction to the Phoenix aesthetic as a whole. An unabashed treasure hunt with no pretensions toward narrative or mimesis, its geography simply serves as the intellectual landscape to house its puzzles. To understand the game’s level of commitment to physical reality, consider that, after swimming underwater for a while, you can still strike a match inside the whale that swallows you. (Don’t ask.) We’re a long way from Zork III and its realistic simulation of the effects of swimming in a lake on a battery-powered lantern.

Yet the writing is as solid as it can be within the constraints of 32 K, with occasional flashes of dry wit and an awareness of culture beyond Dungeons and Dragons and Star Wars that’s pretty rare amongst games of its era. The parser is the typical two-worder of its time, but the game doesn’t strain to push beyond its limits, so it only occasionally frustrates. While not huge in absolute terms, the game is remarkably large given the memory restrictions under which Killworth was operating and the fact that he was working in BASIC. He was already an experienced programmer of weather and ocean simulations thanks to his day job, and his expertise comes through here. He would later speak of an “unofficial competition” with the Austin brothers of Level 9, kings of text compression, over how much text they could cram into the minuscule amounts of memory they had to work with.

The puzzles are a mixed bag, sometimes brilliant but always heartless. The very beginning of the game tells you everything you need to know about what you’re in for. You start in a store from which you can only remove two out of four items. You can increase this number to three through a clever action, but there’s no way to know which one of the four you’re not going to need later in the game; trial and error and learning by death are the rules of the day here. Still, some of the puzzles border on the beautiful, including one of my favorite guess-the-verb puzzles of all time. (Yes, like much in the game this puzzle violates the Player’s Bill of Rights in depending on outside knowledge, but, atrocities like Zork II‘s baseball maze aside, this has always struck me as the least of adventure-game design sins, especially in this era of readily accessible information on virtually any subject. I rather like it when a game sends me scurrying to the Internet in search of outside knowledge to apply. And I feel really special when, as in this case, I already have the knowledge I need.)

Other puzzles, however, are cheap and unforgivable in that way all too typical of early text adventures. There’s a vital room exit that goes completely undescribed and thus can be sussed out only by beating your head against every wall in every room when you’ve reached the point of total frustration. More than anything else, the game takes delight in killing you, a parade of gruesome if often clever deaths, most of which you’re going to experience at least once in the course of playing; these are not jumping-off-a-cliff-to-see-what-happens deaths, but rather innocently-entering-a-room-only-to-be-trampled-by-an-elephant deaths. The deaths are so numerous and so absurd that they almost come off as parody. Even more than many other old games, Philosopher’s Quest can be enjoyed today only if you can get yourself in tune with its old-school sensibilities. Unsurprisingly, it and the other Phoenix games are very polarizing these days. Graham Nelson among others remains a big fan, while still others find them an exercise in masochism; see this old newsgroup thread for a sample of typical reactions.

Peter Killworth continued to write adventures after Philosopher’s Quest, for Acornsoft and later Topologika (who also published quite a few of the other Phoenix games for PCs). Already by 1983 he was earning twice as much from his games as he did from his Cambridge professorship. He later dismissed Philosopher’s Quest and its sometimes arbitrary puzzles as something of a learning exercise, but he always retained his reputation as an author of difficult games. For Killworth’s follow-up to Philosopher’s Quest, Castle of Riddles, Johnson-Davies had an idea that would begin something of a tradition in the British adventure-gaming scene. Acornsoft and Your Computer magazine sponsored a contest with a prize of £1500 worth of Acorn hardware and a £700 silver ring to the first person to solve the game. It took winner Peter Voke, Britain’s equivalent of the American adventure-gaming machine Roe R. Adams III, a full eight hours to solve the game. (Runner-up Colin Bignell made a mad cross-country dash through the night to get his winning entry to Your Computer, but pulled up in his car in front of the magazine’s headquarters just 20 minutes behind Voke.) Killworth also authored one of the classics of the sub-genre of adventure-authoring guides that were popular in the early and mid-1980s, the aptly titled How to Write Adventure Games. He died in 2008 of motor neuron disease. Even when he was earning more from his adventures than he was from his day job, games were just a sideline to a significant career in oceanographic research.

We’ll likely be revisiting some of the later works of Killworth and the other Phoenix authors at some point down the road a bit. For now, you can download Philosopher’s Quest and Castle of Riddles as BBC Micro disk images. (I recommend Dave Gilbert’s superb BeebEm emulator.) Most of the original incarnations of the Phoenix games, Brand X included, have been ported to Z-Code format, playable in my own Filfre and countless other interpreters, thanks to a preservation effort led by Graham Nelson, Adam Atkinson, and David Kinder following the final shutdown of Phoenix in 1995.

The BBC Micro

Continental Europe is notable for its almost complete absence during the early years of the PC revolution. Even Germany, by popular (or stereotypical) perception a land of engineers, played little role; when PCs started to enter West German homes in large numbers in the mid-1980s, they were almost entirely machines of American or British design. Yet in some ways European governments were quite forward-thinking in their employment of computer technology in comparison to that of the United States. As early as 1978 the French postal and telecommunications service began developing a computerized public network called Minitel, which by the early 1980s not only let users look up phone numbers and addresses but also book travel, buy mail-order products, and send messages to one another. A similar service in West Germany, Bildschirmtext, began shortly after, and both services survived until the spread of home Internet access over the course of the 1990s gradually made them obsolete.

The U.S. had no equivalent to these public services. Yes, there was the social marvel that was PLATO, but it was restricted to students and faculty fortunate enough to attend a university on the network; The Source, but you had to both pay a substantial fee for the service and be able to afford the pricy PC you needed to access it; the early Internet, but it was also restricted to a relative technical and scientific elite fortunate enough to be at a university or company that allowed them access. It’s tempting to draw an (overly?) broad comparison here between American and European cultural values: the Americans were all about individual, personal computers that one could own and enjoy privately, while the Europeans treated computing as a communal resource to be shared and developed as a social good. But I’ll let you head further down that fraught path for yourself, if you like.

In this area as in so many others, Britain seemed stuck somewhere in the middle of this cultural divide. Although the British PC industry lagged a steady three years behind the American during the early years, from 1978 on there were plenty of eager PC entrepreneurs in Britain. Notably, however, the British government was also much more willing than the American to involve itself in bringing computers to the people. Margaret Thatcher may have dreamed of dismantling the postwar welfare state entirely and remaking the British economy on the American model, but plenty of MPs even within her own Conservative party weren’t ready to go quite that far. Thus the British post developed a Minitel equivalent of its own, Prestel, even before the German system debuted. But for the young British PC industry the most important role would be played by the country’s publicly-funded broadcasting service, the BBC — and not without, as is so typical when public funds mix with private enterprise, a storm of controversy and accusation.

Computers first turned up on the BBC in early 1980, when the network ran a three-part documentary series called The Silicon Factor just as the first Sinclair ZX80s and Acorn Atoms were reaching customers. It largely dealt with computing as an economic and social force, and wasn’t above a little scaremongering: “Did you know the micro would cut out so-and-so many skilled jobs by 1984?” The following year brought two more specialized programs: Managing the Micro, a five-parter aimed at executives wanting to understand the potential role of computers in business; and the two-part Technology for Teachers, about computers as educational tools. But even as the latter two series were being developed and coming to the airwaves, someone within the BBC was dreaming of something grander. Paul Kriwaczek, a producer who had worked on The Silicon Factor, asked the higher-ups a question: “Don’t we have a duty to put some of the power of computing into the public’s hands rather than just make programs about computing?” He envisioned a program that would not treat computing as a purely abstract social or business phenomenon. It would rather be a practical examination of what the average person could do with a PC right now, or at least in the very near future.

The idea was very much of its time, spurred equally by fear and hope. With all of the early innovation having happened in America, the PC looked likely to be another innovation (and there sure seemed to have been a lot of them this century) with which Britain would have little to do. On the other hand, these were still early days, and there did already exist a network of British computing companies and the enthusiasts they served. Properly stoked, and today rather than later, perhaps they could form the heart of a new, home-grown British computer industry that would, at a minimum, prevent the indignity of seeing Britons rely, as they already did in so many other sectors, on imported products. At best, the PC could become a new export industry. With the government forced to prop up much of the remaining British auto industry, with many other sectors seemingly on the verge of collapse, and with the economy in general in the crapper, the country could certainly use a dose of something new and innovative. By interesting ordinary Britons in computers and spurring them to buy British models today, this program could be a catalyst for the eager but uncertain British PC industry as well as the incubator of a new generation of computing professionals.

Much to Kriwaczek’s own surprise, his proposed program landed right in his lap. The BBC approved a new ten-part series to be called Hands-On Micros. Under the day-to-day control of Kriwaczek, it would air in the autumn of 1981, about a year away. His advocacy for the program aside, Kriwaczek was the obvious choice among the BBC’s line producers. He had grown interested in PCs some months earlier, when he had worked on The Silicon Factor and, perhaps more importantly, when he had stumbled upon a copy of the early British hobbyist magazine Practical Computing. Now he had a Nascom at home which he had built for himself. A jazz saxophonist and flautist by former trade, he now spent hours in his office trying to get the machine to play music that could be recognized as such. (“My wife and family aren’t very keen on the micro,” he said in a contemporary remark that sounds like an understatement.) Working with another producer, David Allen, Kriwaczek drafted a plan for the project that would make it more substantial than just another one-off documentary miniseries. There would be an accompanying book, for one thing, which would go deeper into many of the topics presented and offer much more hands-on programming instruction. And, strangely and controversially, there would also be a whole new computer with the official BBC stamp of approval.

To understand what motivated this seemingly bizarre step, we should look at the British PC market of the time. It was a welter of radically divergent, thoroughly incompatible machines, in many ways no different from the contemporary American market, but in at least one way even more confused. In the U.S. most PC-makers sourced their BASIC from Microsoft, which remained relatively consistent from machine to machine, and thus offered at least some sort of route to program interchange. The British market, however, was not even this consistent. While Nascom did buy a Microsoft BASIC, both Acorn and Sinclair had chosen to develop their own, highly idiosyncratic versions of the language, and a survey of other makers revealed a similar jumble. Further, none of these incompatible machines was precisely satisfactory in the BBC’s eyes. As a kit you had to build yourself, the Nascom was an obvious nonstarter. The Acorn Atom came pre-assembled, but with a maximum of 12 K of memory it was a profoundly limited machine. The Sinclair ZX80 and ZX81 were similarly limited, and also beset by that certain endemic Sinclair brand of shoddiness that left users having to glue memory expansions into place to keep them from falling out of their sockets and half expecting the whole contraption to explode one day like the Black Watch of old. The Commodore PET was the favorite of British business, but it was very expensive and American to boot, which kind of defeated the program’s purpose of goosing British computing. So, the BBC decided to endorse a new PC built to their requirements of being a) British; b) of solid build quality; c) possessed of a relatively standard and complete dialect of BASIC; and d) powerful enough to perform reasonably complex, hopefully even useful tasks. The idea may seem a more reasonable one in this light to all but the most laissez-faire among you. The way they chose to pursue it, though, was quite problematic.

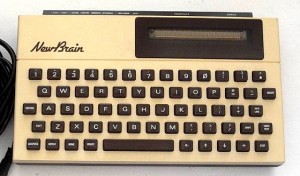

As you may remember from a previous post, Clive Sinclair and Chris Curry had worked together at Sinclair’s previous company, Sinclair Radionics, before going on to found Sinclair Research and Acorn Computers respectively. In the wake of the Black Watch fiasco, the National Enterprise Board of the British government had stepped in to take over Sinclair Radionics and prevent the company from failing. Sinclair, however, proved impossible to work with, and was soon let go. The NEB shuttered what was left of Sinclair Radionics. But they passed its one seemingly viable project, a computer called the NewBrain which Sinclair had conceived but then lost interest in, to another NEB-owned concern, Newbury Laboratories. As the BBC’s grand computer literacy project was being outlined, the NewBrain was still at Newbury and still inching slowly toward release. If Newbury could just get the thing finished, the NewBrain should meet all of the BBC’s requirements for their new computer. They decided it was the computer for them. To preserve some illusion of an open bidding process, they wrote up a set of requirements that coincidentally corresponded exactly with the proposed specifications of the NewBrain, then slipped out the call for bids as quietly as they possibly could. Nobody outside Newbury noticed it, and even if they had, it would have been impossible to develop a computer to those specifications in the tiny amount of time the BBC was offering. The plan had worked perfectly. It looked like they had their new BBC computer.

But why was the BBC so fixated on the NewBrain? It’s hard not to see bureaucratic back-scratching in the whole scheme. Another branch of the British bureaucracy, the National Enterprise Board, had pissed away a lot of taxpayer money in the failed Sinclair Radionics rescue bid. If they could turn the NewBrain into a big commercial success — something of which the official BBC endorsement would be a virtual guarantee — they could earn all of that money back through Newbury, a company which had been another questionable investment. Some damaged careers would certainly be repaired and even burnished in the process. That, at any rate, is how the rest of the British PC industry saw the situation when the whole process finally came to light, and it’s hard to come to any other conclusion today.

Just a few months later, the BBC looked to have been hoist with their own petard. It had now become painfully clear that Newbury was understaffed and underfunded. They couldn’t finish developing the NewBrain in the time allotted, and couldn’t arrange to manufacture it in the massive quantities that would be required even if they did. Just as this realization was dawning, the BBC received two very angry letters, one from Clive Sinclair and one from Chris Curry at Acorn. Curry had come across an early report about the project in his morning paper, describing the plan for a BBC-branded computer and the “bidding process” and giving the specifications of the computer that had “won.” He called Sinclair, with whom he still maintained polite if strained relations. Sinclair hadn’t heard anything about the project either. Putting their heads together, they deduced that the machine in question must be the NewBrain, and why it must have been chosen. Thus the angry letters.

What happened next would prompt even more controversy. Curry, who had sent his letter more to vent than anything else, was stunned to receive a call from a rather sheepish John Radcliffe, an executive producer on the project, asking if the BBC could come to Acorn’s Cambridge offices for a meeting. Nothing was set in stone, Radcliffe carefully explained. If Curry had something he wanted to show the BBC, the BBC was willing to consider it. Sinclair, despite being known as Mr. Computer to the British public, received no such call. The reasons he didn’t aren’t so hard to deduce. Sinclair had screwed the National Enterprise Board badly in the Sinclair Radionics deal by being impossible to work with and finally, apparently deliberately, sabotaging the whole operation so that he could get away and begin a new company. It’s not surprising that his reputation within the British bureaucracy was none too good. On a less personal level, there were the persistent quality-control problems that had dogged just about everything Sinclair had ever made. The BBC simply couldn’t afford to release an exploding computer.

At the meeting, Curry first tried to sell Radcliffe on Acorn’s existing computer, the Atom, but even at this desperate juncture Radcliffe was having none of it. The Atom was just too limited. Could he propose anything else? “Well,” said Curry, “We are developing this new machine we call the Proton.” “Can you show it to me?” asked Radcliffe. “I’m afraid it’s not quite ready,” replied Curry. “When can we see a working prototype?” asked Radcliffe. It was already December 1980; time was precious. It was also a Monday. “Come back Friday,” said Curry.

The Acorn team worked frantically through the week to get the Proton, still an unfinished pile of wires, chips, and schematics, into some sort of working shape. A few hours before the BBC’s scheduled return they thought they had everything together properly, but the machine refused to boot. Hermann Hauser, the Austrian Cambridge researcher with whom Curry had started Acorn, made a suggestion: “It’s very simple — you are cross-linking the clock between the development system and the prototype. If you just cut the link it will work.” After a bit of grumbling the team agreed, and the machine sprang to life for the first time just in time for the BBC’s visit. Soon after, Acorn officially had the contract, and along with it an injection of £60,000 to set up much larger manufacturing facilities. The Acorn Proton was now the BBC Micro; Acorn was playing on a whole new level.

Acorn and the BBC were fortunate in that the Proton design actually dovetailed fairly well with the BBC’s original specifications. In places where it did not, either the specification or the machine was quietly modified to make a fit. Most notably, the BASIC housed in ROM was substantially reworked to conform better to the BBC’s wish for a fairly standard implementation of the language in comparison to the very personalized dialects both Acorn and Sinclair had previously favored. After the realities of production costs sank in, the decision was made to produce two BBC Micros, the Model A with just 16 K of memory and the Model B with the full 32 K demanded by the original specification and some additional expansion capabilities. The Model B also came with an expanded suite of graphics modes, offering up to 16 colors at 160 X 256, a monochrome 640 X 256 mode, and 80-column text, all very impressive even by comparison with American computers of the era. It would turn out to be by far the more popular model. At the heart of both models was a 6502 CPU which was clocked at 2 MHz rather than the typical 1 MHz of most 6502-based computers. Combined with an innovative memory design that allowed the CPU to always run at full speed, with no waiting for memory access, this made the BBC Micro quite a potent little machine by the standards of the early 1980s. By way of comparison, the 3 to 4 MHz Z80s found in many competitors like the Sinclair machines were generally agreed to have about the same overall processing potential as a 1 MHz 6502, despite the dramatically faster clock speed, due to differences in the designs of the two chips.

By quite a number of metrics, the BBC Micro would be the best, most practical machine the domestic British industry had yet produced. Unfortunately, all that power and polish would come with a price. The BBC had originally dreamed of a sub-£200 machine, but that quickly proved unrealistic. The projected price steadily crept upward as 1981 wore on. When models started arriving in shops at last, the price was £300 for the Model A and £400 for the Model B, much more expensive than the original plans and much, much more than Sinclair’s machines. Considering that buying the peripherals needed to make a really useful system would nearly double the likely price, these figures to at least some extent put the lie to the grand dream of the BBC Micro as the computer for the everyday Briton — a fact that Clive Sinclair and others lost no time in pointing out. A roughly equivalent foreign-built system, like, say, a Commodore PET, would still cost you more, but not all that much more. The closest American comparison to the BBC Micro is probably the Apple II. Like that machine, the BBC Micro would become the relative Cadillac of 8-bit British computers: better built and somehow more solid-feeling than the competition, even as its raw processing and display capabilities grew less impressive in comparison — and, eventually, outright outdated — over time.

As the BBC Micro slowly came together, other aspects of the project also moved steadily forward. By the spring of 1981 three authors were hard at work writing the book, and Kriwaczek and Allen were traveling around the country collecting feedback from schools and focus groups on a 50-minute pilot version of the proposed documentary. With it becoming obvious that everyone needed a bit more time, the whole project was reluctantly pushed back three months. The first episode of the documentary, retitled The Computer Programme, was now scheduled to air on January 11, 1982, with the book and the computer also expected to be available by that date.

And now what had already been a crazily ambitious project suddenly found itself part of something even more ambitious. A Conservative MP named Kenneth Baker shepherded through Parliament a bill naming 1982 Information Technology Year. It would kick off with The Computer Programme in a plum time slot on the BBC, and end with a major government-sponsored conference at the Barbican Arts Centre. In between would be a whole host of other initiatives, some of which, like the issuing of an official IT ’82 stamp by the post office, were probably of, shall we say, symbolic value at best. Yet there were also a surprising number of more practical initiatives, like the establishment of a network of Microsystem Centres to offer advice and training to businessmen and IT Centres to train unemployed young people in computer-related fields. There would also be a major push to get PCs into every school in Britain in numbers that would allow every student a reasonable amount of hands-on time. All of these programs — yes, even the stamp — reflected the desire of at least some in the government to make Britain the IT Nation of the 1980s, to remake the struggling British economy via the silicon chip.

When the first step in their master plan debuted at last on January 11, everything was not quite as they might have wished it. The BBC’s programming department reneged on their promises to give the program a plum time spot. Instead it aired on a Monday afternoon and was repeated the following Sunday morning, meaning ratings were not quite what Kriwaczek and his colleagues might have hoped for. And, although Acorn had been taking orders for several months, virtually no one other than a handful of lucky magazine reviewers had an actual BBC Micro to use to try out the snippets of BASIC code that the show presented. Even with the infusion of government cash, Acorn was struggling to sort out the logistics of producing machines in the quantities demanded by the BBC, while also battling teething problems in the design and some flawed third-party components. BBC Micros didn’t finally start flowing to customers until well into spring — ironically, just as the last episodes of the series were airing. Thus Kriwaczek’s original dream of an army of excited new computer owners watching his series from behind the keyboards of their new BBC Micros didn’t quite play out, at least in the program’s first run.

In the long run, however, the BBC Micro became a big success, if not quite the epoch-defining development the BBC had originally envisioned. Its relatively high price kept it out of many homes in favor of cheaper machines from Sinclair and Commodore, but, with the full force of the government’s patronage (and numerous government-sponsored discounting programs) behind it, it became the most popular machine by far in British schools. In this respect once again, the parallels with the Apple II are obvious. The BBC Micro remained a fixture in British schools throughout the 1980s, the first taste of computing for millions of schoolchildren. It was built like a tank and, soon enough, possessed of a huge selection of educational software that made it ideal for the task. By 1984 Acorn could announce that 85% of computers sold to British schools were BBC Micros. This penetration, combined with more limited uptake in homes and businesses, was enough to let Acorn sell more than 1.5 million of them over more than a decade in production.

As for the butterfly flapping its wings which got all of this started: The Computer Programme is surprisingly good, in spite of a certain amount of disappointment it engendered in the hardcore hobbyist community of the time for its failure to go really deeply into the ins and outs of programming in BASIC and the like (a task for which video strikes me as supremely ill-suited anyway). At its center is a well-known BBC presenter named Chris Serle. He plays the everyman, who’s guided (along with the audience, of course) through a tour of computer history and applications and a certain amount of practical nitty-gritty by the more experienced Ian McNaught-Davis. It’s a premise that could easily wind up feeling grating and contrived, but the two men are so pleasant and natural about it that it mostly works beautifully. Rounding out the show are a field reporter, Gill Neville, who delivers a human-interest story about practical uses of computers in each episode; and “author and journalist” Rex Malik, who concludes each episode with an Andy Rooney-esque “more objective” — read, more crotchety — view on all of the gee-whiz gadgetry and high hopes that were on display in the preceding 22 minutes.

There’s a moment in one of the episodes that kind of crystallizes for me what makes the program as a whole so unique. McNaught-Davis is demonstrating a simple BASIC program for Serle. One of the lines is an INPUT statement. McNaught-Davis explains that when the computer reaches this line it just sits there checking the keyboard over and over for input from the user. Serle asks whether programs always work like that. Well, no, not always, explains McNaught-Davis… there are these things called interrupts on more advanced systems which can allow the CPU to do other things, to be notified automatically when a key press or some other event needs its attention. He then draws a beautiful analogy: the BASIC program is like someone who has a broken doorbell and is expecting guests. He must manually check the door over and over. An interrupt-driven system is the same fellow after he’s gotten his doorbell fixed, able to read or do other things in his living room and wait for his guests to come to him. The fact that McNaught-Davis acknowledges the complexity instead of just saying, “Yes, sure, just one thing at a time…” to Serle says a lot about the program’s refusal to dumb down its subject matter. Its decision not to pursue this strange notion of interrupts too much further, meanwhile, says a lot about the accompanying concern that it not overwhelm its audience. The BBC has always been really, really good at walking that line; The Computer Programme is a shining example of that skill.

Indeed, The Computer Programme can be worthwhile viewing today even for reasons outside of historical interest or kitsch value. Anyone looking for a good general overview of computers and how they work and what they can and can’t do could do a lot worse. I meant to just dip in and sample it here and there, but ended up watching the whole series (not that historical interest and kitsch value didn’t also play a factor). If you’d like to have a look for yourself, the whole series is available on YouTube thanks to Jesús Zafra.

Level 9

Before the likes of the Sinclair ZX80 and ZX81 and the Acorn Atom which I discussed in a previous post on British computing, there were the solder-them-yourself kits which began to arrive in 1978. The most long-lived and successful of these were the products of a small company called Nascom. The obvious American counterpart to the Nascom was the original kit PC, the Altair. That said, the Nascom was actually a much more complete and capable machine once you got it put together (no easy feat). It came, for example, with a real keyboard in lieu of toggle switches, and with video output in lieu of blinking lights. Like the Altair, the Nascom was open and flexible and eminently hackable, a blank canvas just waiting to be painted upon. (How could it not be open when every would-be user had to literally build her machine for herself?) In the case of the Altair, those qualities led to the so-called S-100 bus standard that, in combination with the CP/M operating system, came to dominate business computing in the years prior to the arrival of the IBM PC. In the case of the Nascom, they spawned the 80-Bus architecture that could eventually also run CP/M, thanks to the Nascom’s use of the Zilog Z-80 processor that was also found in most of the American CP/M machines. A hardcore of committed users would cling to their Nascoms and other 80-Bus machines well into the 1980s even in the face of slicker, friendlier mass-market machines that would soon be selling in the millions.

One of the Nascom buyers was a 25-year-old named Pete Austin. He had finished a psychology degree at Cambridge when, “looking for an excuse to stay there for an extra year” before facing the real world of work and responsibility, he signed up for a one-year course in computing. He discovered he was very, very good at it. After finishing the course, he began a career as a programmer, mostly coding applications in COBOL on big-iron machines for banks and other big institutions. He quickly found that he wasn’t as excited by the world of business computing as he had been by the more freewheeling blue-sky research at Cambridge. But while programming accounting packages and the like may not have been exciting, it did pay the bills nicely enough. At least he earned enough to buy a Nascom for some real hacking.

After buying and building the Nascom, he spent a lot of time tinkering on it with his younger brothers Mike and Nicolas, both of whom were if anything even more technically inclined than Pete himself. Together the brothers developed a number of programming tools, initially for their own use, like a set of extensions to the Nascom’s standard BASIC and an assembler for writing Z-80 machine language. In 1981 they decided to try selling some of these utilities in the nascent British software market. They took out advertisements in a magazine or two under the name Level 9 Computing, a generic but catchy name that could refer to anything from an academic qualification to the lowest circle of Dante’s Inferno to a level in a videogame to a Dungeons and Dragons dungeon or character level. They were rewarded with a modest number of orders. Encouraged, they added some simple games to their lineup, mostly the usual clones of current arcade hits. More indicative of their future direction, however, was Fantasy, a sort of proto-text adventure written by Pete. Some earlier experiences had influenced its creation.

Already a dedicated wargamer, the young Pete had been introduced to Dungeons and Dragons while at Cambridge. He promptly became obsessed with D&D and another early tabletop RPG, Empire of the Petal Throne. He later said, “In the evening we either played D&D or went down to the pub… and played Petal Throne.” Still, it took him a surprisingly long time to connect his interest in computers to his interest in RPGs. Cambridge was the premier computing university of Britain, the atmosphere within its computer science department perhaps not terribly far removed from that at MIT. As such, there were plenty of games to be had, including some early proto-CRPGs obviously inspired by tabletop D&D. Pete toyed with them, but found them too primitive, underwhelming in comparison to playing with friends. (Ironically, Pete left the university just before the rise of the Phoenix mainframe text adventure boom, about which more in a future article.) The spark that would guide his future career wasn’t kindled until he was working in business computing, and had left D&D behind along with his old gaming buddies in Cambridge. On one of his employers’ systems, he stumbled across an installation of Adventure. Yes, now follows the story I’ve told you so many times before: long story short, he was entranced. Fantasy was the first product of his fascination. But Pete wanted to do more than create a stripped-down shadow of Adventure on the Nascom. He wanted to port the whole thing.

This was an audacious proposition to say the least. When Scott Adams had been similarly inspired, he had been wise enough not to try to recreate Adventure itself on his 16 K TRS-80, but rather to write a smaller, simpler game of his own design. A year after Adams’s Adventureland, Gordon Letwin of Microsoft ported the full game onto a 32 K TRS-80. The Austins also had 32 K to work with, but they lacked one crucial advantage that Letwin had been able to employ: a disk drive to fetch text off of disk and into memory as it was needed during play. With only a cassette drive on their Nascom, they would have to pack the entire game — program, data, and text — into 32 K. It looked an impossible task.

Meanwhile the Austins were mulling another problem that will be familiar to readers of this blog. The burgeoning British PC industry was in a state of uncertain flux. In yet another piece of evidence that hackers don’t always make the best businessmen, Nascom the company had suddenly collapsed during 1981. They were rescued by Lucas Industries, famed manufacturers of the worst electronic systems ever to be installed into automobiles (“Lucas, the Prince of Darkness”; “If Lucas made guns, wars would not start”; “Why do the British drink warm beer? Because Lucas makes their refrigerators!”), but their future still looked mighty uncertain in the face of the newer, cheaper computers from Sinclair, Acorn, and Commodore that you didn’t have to solder together for yourself. Wouldn’t it be great if the Austins could devise a system to let them run their game on any computer that met some minimal specification like having 32 K of memory? And what if said system could be designed so that games written using it would actually consume less memory than they would if coded natively? We’ve already met the P-Machine and the Z-Machine. Now, it’s time to meet the A-Machine (“A” stands for Austin, naturally).

In some ways Level 9’s A-Code system is even more impressive than Infocom’s technology. Although Level 9’s development tools would never quite reach the same level of sophistication as Infocom’s with their minicomputer-based ZIL programming language, the A-Machine itself is a minor technical miracle. While Infocom also targeted 32 K machines with their earliest games, they always required a disk drive for storage. The Austins lacked this luxury, meaning they had to develop unbelievably efficient text-compression routines. They were understandably tight-lipped about this technology that as much as anything represented the key to their success during their heyday, but they did let some details slip out in an interview with Sinclair User in 1985:

Pete’s text compressor has been a feature of all Level 9’s mammoth adventures. It works by running through all the messages and searching for common strings.

For example, “ing” might occur frequently. The compressor replaces “ing” with a single code wherever it occurs. That done, it goes through again, and again, each time saving more space. “It doesn’t always pick up what you’d expect it to,” explains Pete. In the phrase “in the room” the compressor might decide that it was more efficient to use a code for “n th” and “e r” rather than pick out “in” and “the.” That is not something which occurs to the human mind.
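Level 9 never published the compressor itself, so the following is only a guess at the general idea Pete describes: a minimal Python sketch of greedy, repeated substring substitution, a close cousin of what is now called byte-pair encoding. The names `compress` and `expand`, the use of codes 128 to 255 for dictionary entries (which assumes plain 7-bit ASCII text), the six-character limit on substrings, and the cost model (one byte per symbol, with each dictionary entry costing its own length) are all assumptions for illustration, not details of the A-Machine.

```python
from collections import Counter


def _replace(msg, pattern, code):
    """Replace non-overlapping occurrences of pattern, scanning left to right."""
    out, i, n = [], 0, len(pattern)
    while i < len(msg):
        if tuple(msg[i:i + n]) == pattern:
            out.append(code)
            i += n
        else:
            out.append(msg[i])
            i += 1
    return out


def compress(messages, max_len=6, first_code=128, last_code=255):
    """Hypothetical greedy dictionary compressor (not Level 9's actual code).

    Each pass picks the substring whose replacement by a single new code
    seems to save the most bytes, substitutes it everywhere, and repeats.
    Later entries may contain earlier codes, so substitutions nest.
    """
    # Assumption: input is 7-bit ASCII, so codes >= 128 are free to use.
    assert all(ord(c) < first_code for m in messages for c in m)
    msgs = [[ord(c) for c in m] for m in messages]
    table = {}  # code -> tuple of symbols it stands for
    code = first_code
    while code <= last_code:
        # Count every substring of length 2..max_len. Overlaps are included,
        # so this is only an estimate of how many can really be replaced.
        counts = Counter()
        for m in msgs:
            for n in range(2, max_len + 1):
                for i in range(len(m) - n + 1):
                    counts[tuple(m[i:i + n])] += 1
        if not counts:
            break
        # Each use saves len-1 bytes; storing the entry costs len bytes.
        best, gain = max(
            ((s, c * (len(s) - 1) - len(s)) for s, c in counts.items()),
            key=lambda pair: pair[1],
        )
        if gain <= 0:
            break
        table[code] = best
        msgs = [_replace(m, best, code) for m in msgs]
        code += 1
    return msgs, table


def expand(symbols, table):
    """Recursively expand codes back into the original text."""
    return "".join(
        expand(table[s], table) if s in table else chr(s) for s in symbols
    )


if __name__ == "__main__":
    rooms = ["You are in the room.", "The dwarf is in the room."] * 5
    packed, table = compress(rooms)
    assert [expand(m, table) for m in packed] == rooms
    before = sum(map(len, rooms))
    after = sum(map(len, packed)) + sum(map(len, table.values()))
    print(f"{before} bytes of text stored in {after} bytes")
```

As Pete notes, a greedy search like this happily picks fragments such as "n th" that straddle word boundaries, because it scores candidates purely by bytes saved rather than by anything a human would recognize as a word.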

The Austins used a similar technique in their actual A-Machine program code, condensing frequently used sequences of instructions into a single virtual-machine “opcode” that could be defined in one place and called again and again for minimal memory overhead.

Having started with a comparison to Infocom’s technology, I do want to remind you that the Austins developed the A-Code system without the same pool of experience and technology to draw upon that Infocom had — no DEC minicomputers for development work, no deep bench of computer-science graduate-degree-holders. The youngest brother, Michael, was not even yet of university age. Yet, incredibly, they pulled it off. After some months they had an accurate if not quite word-for-word rendering of the original Adventure running in A-Code on their faithful Nascom.

But now Pete realized they had a problem. Level 9 had taken out some advertisements for the game describing its “over 200 individually described locations.” It sounded pretty good as ad copy. Unfortunately, they had neglected to actually confirm that figure. When they sat down and counted at last, they came up with just 139. So, determined to be true to their word, they decided to start squeezing in more rooms. They replaced the original’s simple (if profoundly unfair) endgame in the adventuring repository with an extended 70-room sequence in which the player must escape a flood and rescue 300 Elvish prisoners. Just like that Level 9 had their 200-room game, and they continued to trumpet it happily, the first sign of a persistent obsession they would have with room counts in the years to come. (The obsession would reach comical heights in 1983 with the infamous 7000-room Snowball and its 6800 rooms of identical empty spaceship corridors.)

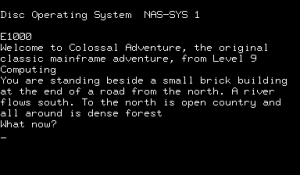

The Austins released Colossal Adventure on the Nascom in early 1982, selling it by mail order through magazine advertisements. They copied each order by hand onto a store-bought TDK cassette tape. Into the TDK case they shoved a tiny mimeographed square of paper telling how to load the game. Scott Adams’s original Ziploc-bag-and-baby-formula-liner packaging was sophisticated by comparison. But they sold several hundred games in the first few months. And, with the work of tools development behind them, they were able to follow up with two more equally lengthy, entirely original sequels — Adventure Quest and Dungeon Adventure — before the end of 1982.

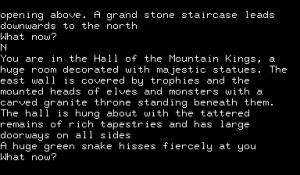

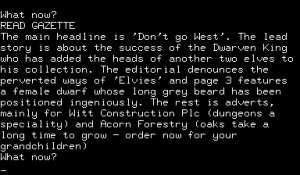

Colossal Adventure in particular makes for an interesting experience today. Prior to the endgame, it’s mostly faithful to its inspiration, but there are just enough changes to keep you on your toes. To create a context for the endgame, Pete grafted a plot onto the original game. You are now exploring the caverns at the behest of an elvish warrior. The axe-throwing dwarfs that haunt the caverns are not just annoying, but Evil; it is they who are holding the elf’s people prisoner. Certain areas are re-purposed to fit the new plot. The Hall of the Mountain King, in the original a faithful reconstruction of a cavern Will Crowther knew from his journeys into Bedquilt Cave, becomes here a sinister monument to the dwarfs’ conquests; Spelunker Today becomes a dwarven propaganda rag.

Pete did tinker here and there with the structure of the game as well. The outdoors are fleshed out quite a bit, with additional locations and (naturally) an additional maze, and a few familiar items are found in different — usually less accessible — places. Whether out of a sense of mercy or because his game engine wouldn’t support it, he also eliminates the need to respond “YES” to solve the dragon “puzzle.” Much less mercifully, he inexplicably reduces the inventory limit to just four objects, which makes everything much, much more difficult than it ought to be, and makes finishing the game without buying more batteries for the lantern (and thus getting the full score) well-nigh impossible. The inventory limit also makes mapping the several mazes even more painful.

For its part, the endgame is absurdly difficult, but it also has a sense of onrushing momentum that was still rare in this era. Literally onrushing, actually; you are trying to escape a massive flood that fills the complex room by room. It’s impressive both from a storytelling and a technical perspective. For all their old-school tendencies, Level 9 would always show a strong interest in making their games narrative experiences. Dungeon Adventure and, especially, Adventure Quest show a similar determination to present an actual plot. The latter takes place decades after the events of Colossal Adventure, but begins on the same patch of forest. It does a surprisingly good, almost moving job of showing the passage of time.

It soon evolves into a classic quest narrative that could be torn from Greek mythology, with the player needing to make her way through a series of relatively self-contained lands to arrive at “the Black Tower of the Demon Lord.”

Sprinkled increasingly liberally through the three games are references to Tolkien’s Middle Earth — Black Towers, balrogs, High Councils, Minas Tirith. Still, they never feel so much like an earnest attempt to play in Tolkien’s world as a grab bag of cool fantasy tropes. It almost feels like Pete kind of wandered into Middle Earth accidentally in his quest for Cool Stuff to put in his games. As he later said, “Middle Earth was a convenient fantasy setting. It was a way of telling people the type of world they were getting.” Where another milieu offers something equally cool, he uses that; Adventure Quest, for example, features a sandworm straight out of Dune. All of this was, of course, completely unauthorized. After not mentioning the Tolkien references in early promotion, Level 9 actually advertised the games for a while as the Middle Earth Trilogy. Then, presumably in response to some very unhappy Tolkien-estate lawyers, they went the other way, excising all of the references and renaming the games the Jewels of Darkness trilogy.

Before I leave you, I just want to emphasize again what an extraordinary achievement it was to get these games into 32 K. Not only are they large games by any standards, brimming with dozens of puzzles, but — unlike, say, the Scott Adams games — the text also reads grammatically, absent that strangled quality that marks an author trying to save every possible character. Better yet, Pete knows how to create a sense of atmosphere. His prose is blessedly competent.

That’s not, however, to say that I can really recommend them to players today. In addition to quality prose, they’re also loaded with old-school annoyances: a two-word parser (in their original incarnation; the parser at least was updated in later releases); mazes every time you turn around; endless rinse-and-repeat learning by death; and always that brutal four-item inventory limit. They were damn impressive games in their time, but they don’t quite manage to transcend it. Fortunately, there were lots more adventures still to come from Level 9.

In which spirit: by the end of 1982, Level 9 had, thanks to the magic of A-Code, leaped from the Nascom onto the Sinclair Spectrum and the BBC Micro, ready to ride a full-on adventuring craze that would sweep Britain over the next few years. We’ll start to talk about the new machines that would enable that — including the latter two in the list above — next time.

Playing Ultima II, Part 2

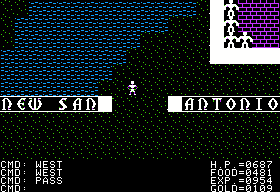

Despite allegedly taking place mostly on our Earth and sometimes even in (basically) our time, very little about Ultima II has much in common with the world that we know. One of the more interesting exceptions is the town of New San Antonio, which is right where you’d expect to find it in 1990. Oh, there are still unanswered questions; it wouldn’t be Ultima II without them. For instance, why is it called New San Antonio? Still, the town hosts an airport where we can steal the second coolest vehicle in the game: an airplane, an obvious nod to the San Antonio of our own world, which hosts two major Air Force bases. Having grown up in Houston and attended university in Austin, Richard Garriott would have been very familiar with San Antonio’s personality. One of the bases, Lackland, houses a huge training center that has earned it, and by extension San Antonio, the nickname of “Gateway to the Air Force.” Wandering the River Walk and other tourist areas around the time of one of the various graduation ceremonies is like strolling through a Norman Rockwell painting — a sea of earnest, clean-cut young men and women in uniform accompanied by proud, doting parents and siblings.

I’ve spent a lot of time already pointing out the cognitive dissonance and design failures that dog Ultima II. Never fear, I’ll get back to doing more of that in a moment. But the airplane affords an opportunity to note what Ultima II, and the Ultima series in general, do so right. As nonsensical as its world is, it consistently entices us to explore it, to find out what lies behind this locked door or at the bottom of that dungeon. Most of the time — actually, always in the case of the dungeon — the answer is “nothing.” But we find something really neat just often enough that our sense of wonder never entirely deserts us. In this case we come upon an actual, functioning airplane. Nothing in the manual or anywhere else has prepared us for this, but here it is. We look to our reference chart of one-key commands to see what seems to fit best, experiment a bit, and we’re off into the wild blue yonder. The airplane is kind of hard to control, and we can only land on grass, but we can fly through time doors to range over any of the time zones in the game, even buzz the monsters that guard Minax’s lair in the heart of the Time of Legends. We made this crazy, undocumented discovery for ourselves, so we own the experience fully. When we take flight for the first time, it’s kind of magic.

That feeling can be hard to capture for modern players, who have every detail about every aspect of the game at their fingertips thanks to a myriad of FAQs, wikis, and walkthroughs. Yet it’s at the heart of what made the Ultima games so entrancing in their day. Games like Wizardry gave us a more rigorous strategic challenge, but Ultima gave us a world to explore. This likely goes a long way toward reconciling the rave reviews Ultima II received upon its release (not to mention the fond memories some of you have expressed in the comments) with the contemporary consensus of bloggers, reviewers, and FAQ-writers revisiting the game today, who generally hold it a boring, poorly designed misfire and by far the worst of the 1980s Ultimas. I don’t so much want to disagree with the latter sentiment as I want to also remember that even here in their worst incarnation there was just something special about the Ultima games. They speak to a different part of our nature than most CRPGs — I’m tempted to say a better part. The joy of exploration and discovery can make us overlook much of the weirdness of not only the world and the story but also of the core game systems, some of which (like the need to buy hit points as you would food) I’ve mentioned, but many others of which (like the fact that earning experience points and leveling up confer absolutely no benefits other than bragging rights, or that the gold and experience you earn from monsters have no relation to their strength) I haven’t.

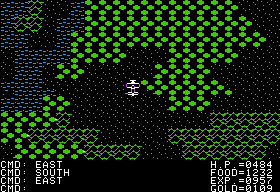

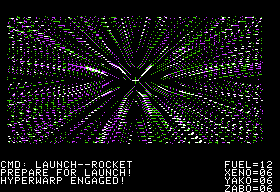

What could be cooler than an airplane, you ask? The answer, of course, is a spaceship. We find a few in the one town in 2111, Pirate’s Harbor, located approximately where we might expect Moscow to be. (Apparently the Soviet Union won World War III.) We steal one and we’re off into space, in what must already be the hundredth videogame tribute to Star Wars’s warp-drive sequences.

It’s possible to visit all nine planets of the solar system. (In 1982 Pluto was still considered a full-fledged planet.) As with Earth itself, however, Ultima II’s version of the solar system doesn’t have much in common with reality as we know it. Here Mercury’s terrain consists of “water and swamp”; Jupiter of “water and grass”; Uranus of “forest and grass.” Owen Garriott, Richard’s scientist/astronaut father, must have been outraged. The rest of us can marvel instead that not one of these planets contains anything to make it worth visiting. Indeed, Ultima II can feel like a box of spinning gears that often don’t connect to anything else. In addition to the planets, there are the similarly pointless dungeons, which waste a new dungeon-delving engine that marks as big an advance over Ultima I’s dungeons as Ultima II’s town engine is over Ultima I’s generic towns. For some reason, spells only work in the (pointless) dungeons, meaning that there’s absolutely no reason to make one’s character a cleric or wizard, unless one feels like playing a hugely underpowered fighter. In space again, it’s actually possible — albeit pointless — to dive and climb and turn our spaceship, implying that Garriott originally intended to include a space-combat section like that of Ultima I but never got around to it. Thus, while Ultima II is an impressive machine, it feels like a half-assembled one. A couple of those meta-textual dialogs that are everywhere perhaps offer a clue why: “Isn’t Ultima II finished yet?” asks Howie the Pest; “Tomorrow — for sure!” says Richard Garriott. The only possible riposte to this complaint is that a contemporary player wouldn’t know that planets, dungeons, and so much else were superfluous. She’d presumably explore them thoroughly and get much the same thrill she’d get if her explorations were actually, you know, necessary. I’ll let you decide whether that argument works for you, or whether Ultima II plays a rather cheap game of bait and switch.

In addition to all the unconnected bits and bobs, there are also problems with pieces that are important. The most famous of the glitches is the ship-duplication bug. We can make a new ship by boarding an existing enemy ship and sailing one square away; we’re left with a ship under our control plus the original enemy ship, which we can board again and again to crank out an endless supply of ships. It can be so much fun to make bridges of ships between islands and continents that it’s almost tempting to label this error a feature, one more of those juicy moments of discovery that make the Ultima games so unique. Other bugs, though, such as certain squares on the map where we simply cannot land a blow against a monster, are more annoying. And there’s one bug that is truly unforgivable. Flying into space requires a certain strength score. There is only one place in the game where we can raise our statistics: the clerk at the Hotel California (don’t ask!) in New San Antonio will sometimes randomly raise one when bribed appropriately. In the original release of the game, however, he will never raise our strength, thus making the game unwinnable for anyone who didn’t choose a fighter as her character class and put a lot of extra points into strength. Sierra did release a patch that at least corrected this problem, one of the first patches ever released for a game.

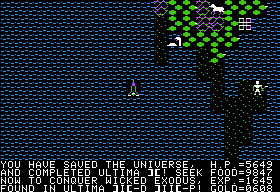

But, you might be asking, why should not being able to go into space make the game unwinnable if there’s nothing there to find anyway? Well, there actually is one thing we need there, but not on any of the familiar planets. Sifting through all of the jokes and non sequiturs spouted by characters in the towns reveals hints that a tenth planet, “Planet X,” exists. There we can pick up a blessing from one Father Antos, which in turn will let us buy a ring from a fellow back in New San Antonio on Earth. All we actually need to beat the Ultima II endgame, then, is the following: the blessing; the ring; a special sword (“Enilno,” which is “On-Line” backward; the meta-textual fun just never stops!) that we can also buy in New San Antonio; and of course a character with good enough equipment and statistics to survive the final battle with Minax. She’s tricky, constantly teleporting from one end of her lair to the other, but in the end we finish her.

Like so much else in the game, the final message doesn’t really make sense. The optimistic reviewer for Computer Gaming World took it to suggest that Sierra might release new scenario disks to utilize some of those uselessly spinning gears. But that was not to be. Instead, Ultima II is best seen as a sort of technology demonstration, or a preview of the possibilities held out by Garriott’s approach to the CRPG. A better, tighter, finished design, combined with another slate of technology upgrades, would let him do the job right next time.