Even as Bill von Meister and company were flailing away at GameLine, a pair of former General Electric research scientists in Troy, New York, were working on the idea destined to become Control Video’s real future. Howard S. Goldberg and David Panzl had spent some time looking at online services like CompuServe and The Source, and had decided that they could never become a truly mass-market phenomenon in their current form. In an era when far more people watched television than read books, all that monochrome text unspooling slowly down the screen would cause the vast majority of potential customers to run away screaming.

Goldberg and Panzl thought they saw a better model. The Apple Lisa had just been released, the Macintosh was waiting in the wings, and you couldn’t shake a stick at any computer conference without hitting someone with the phrase “graphical user interface” on their lips. Simplicity was the new watchword in computing. Goldberg and Panzl believed that anyone who could make a point-and-shoot online service to go up against the SLR complexity of current offerings could make a killing.

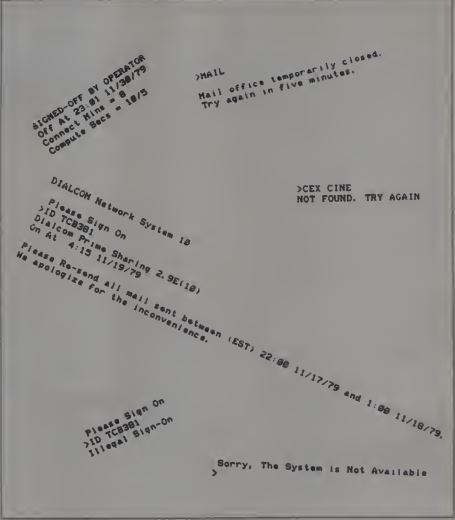

But how to do so, given the current state of technology? It was all a 300-baud modem could do to transfer text at a reasonable speed. Graphics were out of the question.

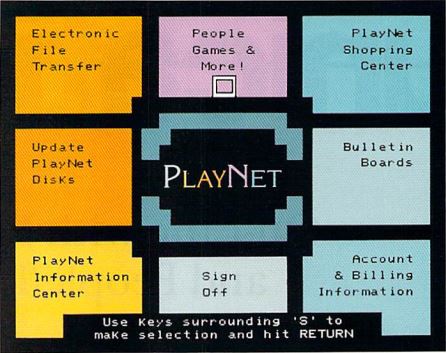

Or were they? What if the graphics could be stored locally, on the subscriber’s computer, taking most of the load off the modem? Goldberg and Panzl envisioned a sort of hybrid service, in which as much code and data as possible was stored on a disk that would be sent out to subscribers rather than on the service’s big computers. With this approach, you would be able to navigate through the service’s offerings using a full GUI, which would run via a local application on your computer. If you went into a chat room, the chat application itself would be loaded from disk; only the actual words you wrote and read would need to be sent to and from a central computer. If you decided to write an email, a full-featured editor the likes of which a CompuServe subscriber could only dream of could be loaded in from disk, with only the finished product uploaded when you clicked the send button.
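To put rough numbers on that trade-off, here is a minimal back-of-the-envelope sketch. It assumes that a 300-baud link carries 300 bits per second and that each character costs ten bits on the wire (eight data bits plus a start and a stop bit); the 40-byte chat line and the 8000-byte screen (the size of a 320x200 one-bit-per-pixel bitmap) are hypothetical payloads chosen for illustration, not figures from PlayNet itself.

```python
# Assumption: asynchronous serial framing of 8 data bits + start + stop bit.
BITS_PER_CHAR = 10

# Hypothetical payload sizes, chosen only to illustrate the contrast.
CHAT_LINE_BYTES = 40        # one short line of typed chat
BITMAP_SCREEN_BYTES = 8000  # 320 x 200 pixels at one bit per pixel


def transfer_seconds(num_bytes: int, bits_per_second: int = 300) -> float:
    """Return the seconds needed to push num_bytes through the modem."""
    return num_bytes * BITS_PER_CHAR / bits_per_second


if __name__ == "__main__":
    chat = transfer_seconds(CHAT_LINE_BYTES)
    screen = transfer_seconds(BITMAP_SCREEN_BYTES)
    print(f"chat line:   {chat:6.1f} s")
    print(f"full screen: {screen:6.1f} s ({screen / 60:.1f} min)")
```

Under these assumptions a line of chat crosses the wire in a little over a second, while a single full-screen picture would take more than four minutes, which is why keeping the pictures on the subscriber's disk and sending only the words made the idea workable.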

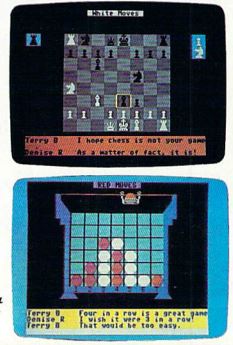

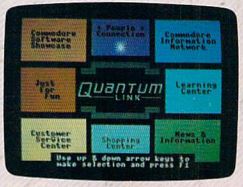

The PlayNet main menu. Note that system updates could be downloaded and installed on the user’s disks, thus avoiding the most obvious problem of this approach to an online service: that of having to send out new disks to every customer every time the system was updated. The games were also modular, with new ones made available for download to disk at the user’s discretion as they were developed. All told, it was an impressive feat of software engineering that would prove very robust; the software shown here would remain in active use as PlayNet or QuantumLink for a decade, and some of its underpinnings would last even longer than that.

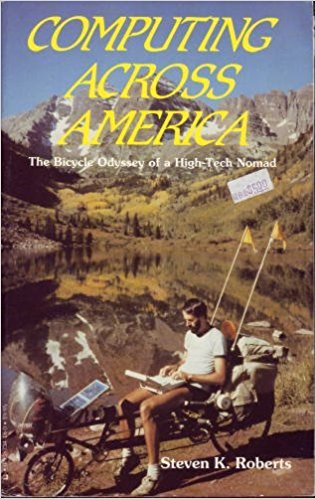

Goldberg and Panzl were particularly taken with the possibilities the approach augured for online multiplayer games, a genre still in its infancy. CompuServe had put up a conquer-the-universe multiplayer strategy game called MegaWars, but it was all text, demanding that players navigate through a labyrinth of arcane typed commands. Otherwise there were perennials like Adventure to go along with even moldier oldies like Hangman, but these were single-player games that just happened to be played online. And they all were, once again, limited to monochrome text; it was difficult indeed to justify paying all those connect charges for them when you could type in better versions from BASIC programming books. But what if you could play poker or checkers online against people from anywhere in the country instead of against the boring old computer, and could do so with graphics? Then online gaming would be getting somewhere. The prospect was so exciting that Goldberg and Panzl called their proposed new online service PlayNet. It seemed the perfect name for the funner, more colorful take on the online experience they hoped to create.

When they shared their idea with others, they found a number who agreed with them about its potential. With backing from Rensselaer Polytechnic Institute, the New York State Science and Technology Foundation, and Key Venture Corporation, they moved into a technology “incubator” run by the first of these in May of 1983. For PlayNet’s client computer — one admitted disadvantage of their approach was that it would require them to write a separate version of their software for every personal computer they targeted — they chose the recently released, fast-selling Commodore 64, which sported some of the best graphics in the industry. The back end would run on easily scalable 68000-based servers made by a relatively new company called Stratus. (The progression from CompuServe to PlayNet thus highlights the transition from big mainframes and minicomputers to the microcomputer-based client/server model in networking, just as it does the transition from a textual to a graphical focus.) Facing a daunting programming task on both the client and server sides, Goldberg and Panzl took further advantage of their relationship with Rensselaer Polytechnic Institute to bring in a team of student coders, who worked for a stipend in exchange for university credit, applying to the project many of the cutting-edge theoretical constructs they were learning about in their classes.

PlayNet began trials around Troy and Albany in April of 1984, with the service rolling out nationwide in October. Commodore 64 owners had the reputation of being far more price-sensitive than owners of other computers, and Goldberg and Panzl took this conventional wisdom to heart. PlayNet was dramatically cheaper than any of the other services: $35 for the signup package which included the necessary software, followed by $6 per month and $2 per hour actually spent online; this last was a third of what CompuServe would cost you. PlayNet hoped to, as the old saying goes, make it up in volume. Included on the disks were no fewer than thirteen games, whose names are mostly self-explanatory: Backgammon, Boxes, Capture the Flag, Checkers, Chess, Chinese Checkers, Contract Bridge, Four in a Row, Go, Hangman, Quad 64, Reversi, and Sea Strike. While they were all fairly unremarkable in terms of interface and graphics, not to mention lack of originality, it was of course the well-nigh unprecedented ability to play them with people hundreds or thousands of miles away that was their real appeal. You could even chat with your opponent as you played.

In addition to the games, most of the other areas people had come to expect from online services were present, if sometimes a little bare. There were other small problems beyond the paucity of content — some subscribers complained that chunks loaded so slowly from the Commodore 64’s notoriously slow disk drive that they might almost just as well have come in via modem, and technical glitches were far from unknown — but PlayNet was certainly the most user-friendly online service anyone had ever seen, an utterly unique offering in an industry that tended always to define itself in relation to the lodestar that was CompuServe.

Things seemed to go fairly well at the outset, with PlayNet collecting their first 5000 subscribers within a couple of months of launch. But, sadly given how visionary the service really was, they would never manage to get much beyond that. Separated both geographically and culturally from the big wellsprings of technology venture capital around Silicon Valley, forced to deal with a decline in the home-computer market shortly after their launch that made other sources of funding shy away, they were perpetually cash-poor, a situation that was only exacerbated by the rock-bottom pricing — something that, what with prices always being a lot harder to raise on customers than they are to lower, they were now stuck with. An ugly cycle began to perpetuate itself. Sufficient new subscribers would sign up to badly tax the existing servers, but PlayNet wouldn’t have enough money to upgrade their infrastructure to match their growth right away. Soon, enough customers would get frustrated by the sluggish response and occasional outright crashes to cancel their subscriptions, bringing the system back into equilibrium. Meanwhile PlayNet was constantly existing at the grace of the big telecommunications networks whose pipes and access numbers they leased, the prospect of sudden rate hikes a Sword of Damocles hanging always over their heads. Indeed, the story of PlayNet could serve as an object illustration as to why all of the really big, successful online services seemed to have the backing of the titans of corporate America, like H&R Block, Reader’s Digest, General Electric, or Sears. This just wasn’t a space with much room for the little guy. PlayNet may have been the most innovative service to arrive since CompuServe and The Source had spawned the consumer-focused online-services industry in the first place, but innovation alone wasn’t enough to be successful there.

Still, Goldberg and Panzl could at least take solace that their company had a reason to exist. While PlayNet was struggling to establish an online presence, Control Video was… continuing to exist, with little clear reason why beyond Jim Kimsey and Steve Case’s sheer stubbornness. Kimsey loved to tell an old soldier’s joke about a boy who is seen by the roadside, frantically digging into a giant pile of horse manure. When passersby ask him why, he says, “There must be a pony in here somewhere!” There must indeed, thought Kimsey, be a pony for Control Video as well buried somewhere in all this shit they were digging through. He looked for someone he could sell out to, but Control Video’s only real asset was the agreements they had signed with telecommunications companies giving them access to a nationwide network they had barely ever used. That was nice, but it wasn’t, judged potential purchasers, worth taking on a mountain of debt to acquire.

The way forward — the pony in all the shit — materialized more by chance than anything. Working through his list of potential purchasers, Kimsey made it to Commodore, the home-computer company, in the spring of 1985. Maybe, he thought, they might like to buy him out in order to use Control Video’s network to set up their own online service for their customers. He had a meeting with Clive Smith, an import from Commodore’s United Kingdom branch who was among the bare handful of truly savvy executives the home office ever got to enjoy. (Smith’s marketing instincts had been instrumental in the hugely successful launch of the Commodore 64.) Commodore wasn’t interested in running their own online service, Smith told Kimsey; having released not one but two flop computers in 1984 in the form of the Commodore 16 and Plus/4, they couldn’t afford such distractions. But if Control Video wanted to start an independent online service just for Commodore 64 owners, Commodore would be willing to anoint it as their officially recommended service, including it in the box with every new Commodore 64 and 128 sold in lieu of the CompuServe Snapaks that were found there now. He even knew where Kimsey could get some software that would make his service stand out from all of the others, by taking full advantage of the Commodore 64’s color graphics: a little outfit called PlayNet, up in Troy, New York.

It seemed that PlayNet, realizing that they needed to find a strong corporate backer if they hoped to survive, had already come to Commodore looking for a deal very similar to the one that Clive Smith was now offering Jim Kimsey. But, while he had been blown away by the software they showed him, Smith had been less impressed by the business acumen of the two former research scientists sitting in his office. He’d sent them packing without a deal, but bookmarked the PlayNet software in his mind. While Kimsey’s company was if anything in even worse shape than PlayNet on the surface, Smith thought he saw a much shrewder businessman before him, and knew from the grapevine that Kimsey was still tight with the venture capitalists who had convinced him to take the job with Control Video in the first place. He had, in short, all the business savvy and connections that Goldberg and Panzl lacked. Smith thus brokered a meeting between Control Video and PlayNet to let them see what they could work out.

What followed was a veritable looting of PlayNet’s one great asset. Kimsey acquired all of their software for a reported $50,000, plus ongoing royalty payments that were by all accounts very small indeed. If it wasn’t quite Bill Gates’s legendary fleecing of Seattle Computer Products for the operating system that became MS-DOS, it wasn’t that far behind either. PlayNet’s software would remain for the next nine years the heart of the Commodore 64 online service Kimsey was now about to start.

The best thing Goldberg and Panzl could have done for their company would have been to abandon altogether the idea of hosting their own online service, embracing the role of Control Video’s software arm. But they remained wedded to the little community they had fostered, determined to soldier on with the PlayNet service as an independent entity even after having given away the store to a fearsome competitor that enjoyed the official blessing of Commodore which had been so insultingly withheld from them. Needless to say, it didn’t go very well; PlayNet finally gave up the ghost in 1987, almost two years after the rival service had launched using their own technology. As part of the liquidation, they transferred all title to said technology in perpetuity to Jim Kimsey and Steve Case’s company, to do with as they would. Thus was the looting completed.

Well before that happened, the looter was no longer known as Control Video. Wanting a fresh start after all the fiasco and failure of the last couple of years, wanting to put the Bill von Meister era behind him once and for all, Kimsey on May 25, 1985, put Control Video in a shoe box, as he put it, and pulled out Quantum Computer Services. A new company in the eyes of the law, Quantum was in every other way a continuation of the old, with all the same people, all the same assets and liabilities, even the same philosophical orientation. For all that the deal with Commodore and the acquisition of the PlayNet software was down to seeming happenstance, the online service that would come to be known as QuantumLink evinced von Meister’s — and Steve Case’s — determination to create a more colorful, easier, friendlier online experience that would be as welcoming to homemakers and humanities professors as it would to hardcore hackers. And in running on its own custom software, it allowed Quantum the complete control of the user’s experience which von Meister and Case had always craved.

Continuing to tax the patience of their financiers — patience that would probably have been less forthcoming had Daniel Case III’s brother not been on the payroll — Quantum worked through the summer and early fall of 1985 to adapt the PlayNet software to their own needs and to set up the infrastructure of Stratus servers they would need to launch. QuantumLink officially went live on the evening of November 1, 1985. It was a tense group of administrators and techies who sat around the little Vienna, Virginia, data center, watching as the first paying customers logged in, watching what they did once they arrived. (Backgammon, for what it’s worth, was an early favorite.) By the time the users’ numbers had climbed into the dozens, beers were being popped and spontaneous cheers were in the air. Simultaneous users would peak at about 100 that night — not exactly a number to leave CompuServe shaking in their boots. But so be it; it just felt so good to have an actual product — an actual, concrete purpose — after their long time in the wilderness.

In keeping with the price-sensitive nature of the Commodore market, Quantum strove to make their service cheaper than the alternatives, but were careful not to price-cut themselves right out of business as had PlayNet. Subscribers paid a flat fee of $10 per month for unlimited usage of so-called “Basic” services, which in all honesty didn’t include much of anything beyond the online encyclopedia and things that made Quantum money in other ways, like the online shopping mall. “Plus” services, including the games and the chat system that together were always the centerpiece of QuantumLink social life, cost $3.60 per hour, with one hour of free Plus usage per month included with every subscription. The service didn’t set the world on fire in the beginning, but the combination of Commodore’s official support, the user-friendliness of the graphical interface, and the aggressive pricing paid off reasonably well in the long term. Within two months, QuantumLink had its first 10,000 subscribers, a number it had taken CompuServe two years to achieve. Less than a year after that, it had hit 50,000 subscribers. By then, Quantum Computer Services had finally become self-sustaining, even able to make a start at paying down the debt they had accumulated during the Control Video years.
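The pricing scheme just described is simple enough to write down as a formula. The sketch below is only an illustration under stated assumptions: it takes the $10 flat fee, the $3.60 hourly Plus rate, and the one free Plus hour from the text, and assumes (this is not stated in the source) that Plus time is metered by the minute. The function name and constants are hypothetical.

```python
# Rates quoted in the text, held in cents so the arithmetic stays exact.
MONTHLY_FEE_CENTS = 1000     # $10 flat fee covering "Basic" services
PLUS_RATE_CENTS_PER_MIN = 6  # $3.60 per hour of "Plus" usage
FREE_PLUS_MINUTES = 60       # one free Plus hour per month


def monthly_bill_cents(plus_minutes: int) -> int:
    """Return one month's bill in cents for the given Plus usage.

    Assumption (not from the source): usage is billed per minute.
    """
    billable = max(0, plus_minutes - FREE_PLUS_MINUTES)
    return MONTHLY_FEE_CENTS + billable * PLUS_RATE_CENTS_PER_MIN


if __name__ == "__main__":
    for hours in (0, 1, 2, 30):
        cents = monthly_bill_cents(hours * 60)
        print(f"{hours:2d} Plus hours -> ${cents / 100:.2f}")
```

On these assumptions a subscriber who spent an hour a night in chat and games, about 30 Plus hours a month, would owe $114.40, so the flat fee was only ever a small part of what an engaged user paid.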

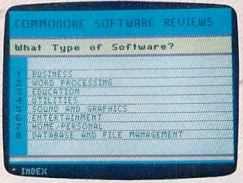

One of QuantumLink’s unique editorial services was an easy-to-navigate buyer’s guide to Commodore software.

Quantum had the advantage of being able to look back on six years of their rivals’ experience for clues as to what worked and what didn’t. For the intensely detail-oriented Steve Case, this was a treasure trove of incalculable value. Recognizing, as had Goldberg and Panzl before him, that other services were still far too hard to use for true mainstream acceptance, he insisted that nothing be allowed on QuantumLink that his mother couldn’t handle.

But Case’s vision for QuantumLink wasn’t only about being, as he put it, “a little easier and cheaper and more useful” than the competition. He grasped that, while people might sign up for an online service for the practical information and conveniences it could offer them, it was the social interchange — the sense of community — that kept them logging on. To a greater degree than that of any of its rivals, QuantumLink’s user community was actively curated by its owner. Every night of the week seemed to offer a chat with a special guest, or a game tournament, or something. If it was more artificial — perhaps in a way more cynical — than CompuServe’s more laissez-faire, organic approach to community-building, it was every bit as effective. “Most services are information- and retrieval-oriented. It doesn’t matter if you get on on Tuesday or Thursday because the information is the same,” said Case; as we’ve seen from earlier articles in this series, this statement wasn’t really accurate at all, but it served his rhetorical purpose. “What we’ve tried to do is create a more event-oriented social system, so you really do want to check in every night just to see what’s happening — because you don’t want to miss anything.” Getting the subscriber to log on every night was of course the whole point of the endeavor. “We recognized that chat and community were so important to keep people on,” remembers Bill Pytlovany, a Quantum programmer. “I joked about it. You get somebody online, we’ve got them by the balls. Plain and simple, they’ll be back tomorrow.”

Indeed, QuantumLink subscribers became if anything even more ferociously loyal — and ferociously addicted — than users of rival services. “For some people, it was their whole social life,” remembers a Quantum copywriter named Julia Wilkinson. “That was their reality.” All of the social phenomena I’ve already described on CompuServe — the friendships and the romances and, inevitably, the dirty talk — happened all over again on QuantumLink. (“The most popular [features of the service] were far and away the sexual chat rooms,” remembers one Quantum manager. “The reality of what was happening was, if you just let these folks plug into each other, middle-aged people start talking dirty to each other.”) Even at the cheaper prices, plenty of subscribers were soon racking up monthly bills in the hundreds of dollars — music to the ears of Steve Case and Jim Kimsey, especially given that the absolute number of QuantumLink subscribers would never quite meet the original expectations of either Quantum or Commodore. While the raw numbers of Commodore 64s had seemingly boded well — it had been by far the most popular home computer in North America when the service had launched — a glance beyond the numbers might have shown that the platform wasn’t quite as ideal as it seemed. Known most of all for its cheap price and its great games, the Commodore 64 attracted a much younger demographic than most other computer models. Such youngsters often lacked the means to pay even QuantumLink’s relatively cheap rates — and, when they did have money, often preferred to spend it on boxed games to play face to face with their friends rather than online games and chat.

Nevertheless, and while I know of no hard numbers that can be applied to QuantumLink at its peak, it had become a reasonably popular service by 1988, with a subscriber base that must have climbed comfortably over the 100,000 threshold. If not a serious threat to the likes of CompuServe, neither was it anything to sneeze at in the context of the times. Considering that QuantumLink was only ever available to owners of Commodore 64s and 128s — platforms that went into rapid decline in North America after 1987 — it did quite well in the big picture in what was always going to be a bit of an uphill struggle.

Even had the service been notable for nothing else, something known as Habitat would have been enough to secure QuantumLink a place in computing history. Developed in partnership with Lucasfilm Games, it was the first graphical massively multiplayer virtual world, one of the most important forerunners to everything from World of Warcraft to Second Life. It was online in its original form for only a few months in early 1988, in a closed beta of a few hundred users that’s since passed into gaming legend. Quantum ultimately judged Habitat to be technologically and economically unfeasible to maintain on the scale that would have been required in order to offer access to all of their subscribers. It did, however, reemerge a year later in bowdlerized fashion as Club Caribe, more of an elaborate online-chat environment than the functioning virtual world Lucasfilm had envisioned.

But to reduce QuantumLink to the medium for Habitat, as is too often done in histories like this one, is unjust. The fact is that the service is notable for much more than this single pioneering game that tends so to dominate its historical memory. Its graphical interface would prove very influential on the competition, to a degree that is perhaps belied by its relatively modest subscriber roll. In 1988, a new service called Prodigy, backed by IBM and Sears, entered the market with an interface not all that far removed from QuantumLink’s, albeit running on MS-DOS machines rather than the Commodore 64; thanks mostly to its choice of platform, it would far outstrip its inspiration, surpassing even GEnie to become the number-two service behind CompuServe for a time in the early 1990s. Meanwhile virtually all of the traditional text-only services introduced some form of optional graphical front end. CompuServe, as usual, came up with the most thoroughgoing technical solution, offering up a well-documented “Host Micro Interface” protocol which third-party programmers could use to build their own front ends, thus creating a thriving, competitive marketplace with alternatives to suit most any user. Kimsey and Case could at least feel proud that their little upstart service had managed to influence such a giant of online life, even as they wished that QuantumLink’s bottom line was more reflective of its influence.

QuantumLink’s technical approach was proving to be, for all its advantages, something of a double-edged sword. For all that it had let Quantum create an easier, friendlier online service, for all that the Commodore and PlayNet deals had saved them from bankruptcy, it also left said service’s fate tied to that of the platform on which it ran. It meant, in other words, that QuantumLink came with an implacable expiration date.

This hard reality had never been lost on Steve Case. As early as 1986, he had started looking to create alternative services on other platforms, especially ones that might be longer-lived than Commodore’s aging 8-bit line. His dream platform was the Apple Macintosh, with its demographic of well-heeled users who loathed the command-line interfaces of most online services as the very embodiment of The Bad Old Way of pre-Mac computing. Showing the single-minded determination that could make him alternately loved and loathed, he actually moved to Cupertino, California, home of Apple, for a few months at the height of his lobbying efforts. But Apple wasn’t quite sure Quantum was really up to the task of making a next-generation online service for the Macintosh, finally offering him instead only a sort of trial run on the Apple II, their own aging 8-bit platform.

Quantum Computer Services’s second online service, a fairly straightforward port of the Commodore QuantumLink software stack to the Apple II, went online in May of 1988. It didn’t take off like they had hoped. Part of the problem was doubtless down to the fact that Apple II owners were well-entrenched by 1988 on services like CompuServe and GEnie, and weren’t inclined to switch to a rival service. But there was also some uncharacteristically mixed public messaging on the part of an Apple that had always seemed lukewarm about the whole project; people inside both companies joked that they had given the deal to Quantum to make an online service for a platform they didn’t much care about anymore just to get Steve Case to quit bugging them. Having already a long-established online support network known as AppleLink for dealers and professional clients, Apple insisted on calling this new, completely unrelated service AppleLink Personal Edition, creating huge confusion. And they rejected most of the initiatives that had made QuantumLink successful among Commodore owners, such as the inclusion of subscription kits in their computers’ boxes, thus compounding the feeling at Quantum that their supposed partners weren’t really all that committed to the service. Chafing under Apple’s rigid rules for branding and marketing, the old soldier Kimsey growled that they were harder to deal with than the Pentagon bureaucracy.

Apple dropped Quantum in the summer of 1989, barely a year after signing the deal with them, and thereby provoked a crisis inside the latter company. The investors weren’t at all happy with the way that Quantum seemed to be doing little more than treading water; with so much debt still to service, they were barely breaking even as a business. Meanwhile the Commodore 64 market to which they were still bound was now in undeniable free fall, and they had just seen their grand chance to ride Apple into greener pastures blow up in their faces. The investors blamed the situation on Steve Case, who had promised them that the world would be theirs if they could just get in the door at Cupertino. Jim Kimsey was forced to rise up in his protege’s defense. “You don’t take a 25-pound turkey out of the oven and throw it away before it’s done,” he said, pointing to the bright future that Case was insisting could yet be theirs if they would just stay the course. Kimsey could also deliver the good news from his legal department that terminating their marketing agreement early was going to cost Apple $2.5 million, to be paid directly to Quantum Computer Services. For the time being, it was enough to save Case’s job. But the question remained: what was Quantum to do in a post-Commodore world?

In his methodical way, Case had already been plugging away at several potential answers to that question beyond the Apple relationship. One of them, called PC-Link, was in fact just going live as this internal debate was taking place. Produced in partnership with Radio Shack, it was yet another port of the Commodore QuantumLink software stack, this time to Radio Shack’s Tandy line of MS-DOS clones. PC-Link would do okay, but Radio Shack stores were no longer the retail Ground Zero of the home-computing market that they had been when CompuServe had gotten into bed with them with such success almost a decade ago.

Quantum was also in discussions with no less of a computing giant than IBM, to launch an online service called Promenade in 1990 for a new line of IBM home computers called the PS/1, a sort of successor to the earlier, ill-fated PCjr. On the one hand, this was a huge deal for so tiny a company as Quantum Computer Services. But on the other, taking the legendary flop that had been the PCjr to heart, many in the industry were already expressing skepticism about a model line that had yet to even launch. Even Jim Kimsey was downplaying the deal: “It’s not a make-or-break deal for us. We’re not expecting more than $1 million in revenue from it [the first] year. Down the road, we don’t know how much it will be. If the PS/1 doesn’t work, we’re not in trouble.” A good thing, too: the PS/1 project would prove another expensive fiasco for an IBM who could never seem to figure out how to extend their success in business computing into the consumer marketplace.

So, neither of these potential answers was the answer Quantum sought. In fact, they were just exacerbating a problem that dogged the entire online-services industry: the way that no service could talk to any other service. By the end of the 1980s Quantum had launched or were about to launch four separate online services, none of which could talk to one another, marooning their subscribers on one island or another on the arbitrary basis of the model of computer they happened to have chosen to buy. It was hard enough to nurture one online community to health; to manage four was all but impossible. The deal with Commodore to found QuantumLink had almost certainly saved Quantum from drowning, but the similar bespoke deals with Apple, Radio Shack, and IBM, as impressive as they sounded on their face, threatened to become the millstone around their neck which dragged them under again.

Circa October of 1989, Case therefore decided it was time for Quantum to go it alone, to build a brand of their own instead of for someone else. The perfect place to start was with the moribund AppleLink Personal Edition, which, having just lost its official blessing from Apple, would have to either find a new name or shut down. Case wasn’t willing to do the latter, so it would have to be the former. While it would be hard to find a worse name than the one the service already had, he wanted something truly great for what he was coming to envision as the next phase of his company’s existence. He held a company-wide contest soliciting names, but in the end the one he chose was the one he came up with himself. AppleLink Personal Edition would become America Online. He loved the sense of sweep, and loved how very Middle American it sounded, like, say, Good Morning America on the television or American Top 40 on the radio. It emphasized his dream of building an online community not for the socioeconomic elite but for the heart of the American mainstream. A member of said elite though he himself was, he knew where the real money was in American media. And besides, he thought the natural abbreviation of AOL rolled off the tongue in downright tripping fashion.

In the beginning, the new era which the name change portended was hard to picture; the new AOL was at this point nothing more than a re-branding of the old AppleLink Personal Edition. Only some months after the change, well into 1990, did Case begin to tip his hand. He had had his programmers working on his coveted Macintosh version of the AppleLink software since well before Apple had walked away, in the hope, since proven forlorn, that the latter would decide to expand their agreement with Quantum. Now, Quantum released the Macintosh version anyway — a version that connected to the very same AOL that was being used by Apple II owners. A process that would become known inside Quantum as “The Great Commingling” had begun.

Case had wanted the Mac version of AOL to blend what Jeff Wilkins over at CompuServe would have called “high-tech” and “high-touch.” He wanted, in other words, a product that would impress, but that would do so in a friendly, non-intimidating way. He came up with the idea of using a few voice samples in the software — a potentially very impressive feature indeed, given that the idea of a computer talking was still quite an exotic one among the non-techie demographic he intended to target. A customer-service rep at Quantum named Karen Edwards had a husband, Elwood Edwards, who worked as a professional broadcaster and voice actor. Case took him into a studio and had him record four phrases: “Welcome!,” “File’s done!,” “Goodbye!,” and, most famously, “You’ve got mail!” The last in particular would become one of the most iconic catchphrases of the 1990s, furnishing the title of a big Hollywood romantic comedy and even showing up in a Prince song. Even for those of us who were never on AOL, the sample today remains redolent of its era, when all of the United States seemed to be rushing to embrace its online future all at once. At AOL’s peak, the chirpy voice of Elwood Edwards was easily the most recognizable — and the most widely heard — voice in the country.

But we get ahead of the story: recorded in 1990, the Edwards samples wouldn’t become iconic for several more years. In the meantime, the Great Commingling continued apace, with PC-Link and Promenade being shut down as separate services and merged into AOL in March of 1991. Only QuantumLink was left out in the cold; running as it was on the most limited hardware, with displays restricted to 40 columns of text, Quantum’s programmers judged that it just wasn’t possible to integrate what had once been their flagship service with the others. Instead QuantumLink would straggle on alone, albeit increasingly neglected, as a separate service for another three and a half years. The few tens of thousands of loyalists who stuck it out to the bitter end often retained their old Commodore hardware, now far enough out of date to be all but useless for any other purpose, just to retain access to QuantumLink. The plug was finally pulled on October 31, 1994, one day shy of the service’s ninth birthday. Even discounting the role it had played as the technical and philosophical inspiration for America Online, the software that Howard Goldberg and David Panzl and their team of student programmers had created had had one heck of a run. Indeed, QuantumLink is regarded to this day with immense nostalgia by those who used it, to such an extent that they still dream the occasional quixotic dream of reviving it.

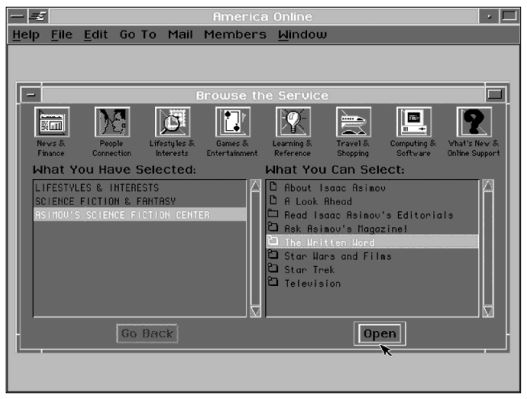

The first version of America Online for MS-DOS. Steve Case convinced Isaac Asimov, Bill von Meister’s original celebrity spokesman for The Source all those years ago, to lend his name to a science-fiction area. It seemed that things had come full circle…

For Steve Case, though, QuantumLink was already the past in 1991; AOL was the future. The latter was now available to anyone with an MS-DOS computer, already the overwhelmingly dominant platform in the country, whose dominance would grow to virtual monopoly status as the decade progressed. This was the path to the mainstream. To better reflect the hoped-for future, the name of Quantum Computer Services joined that of Control Video in Jim Kimsey’s shoe box of odds and ends in October of 1991. Henceforward, the company as well as the service would be known as America Online.

Much of the staff’s time continued to be devoted to curating community. Now, though, even more of the online events focused on subject areas that had little to do with computers, or for that matter with the other things that stereotypical computer owners tended to be interested in. Gardening, auto repair, and television were as prominently featured as programming languages. The approach seemed to be paying off, giving AOL, helped along by its easy-to-use software and a meticulously coached customer-support staff, a growing reputation as the online service for the rest of us. It had just under 150,000 subscribers by October of 1991. This was still small by the standards of CompuServe, GEnie, or Prodigy, but AOL was coming on strong. The number of subscribers would double within the next few months, and again over the next few months after that, and so on and so on.

CompuServe offered to buy AOL for $50 million. At two and a half times the latter’s current annual revenue, it was a fairly generous offer. Just a few years before, Kimsey would have leaped at a sum a fraction of this size to wash his hands of his problem child of a company. Even now, he was inclined to take the deal, but Steve Case was emphatically opposed, insisting that they were all on the verge of something extraordinary. The first real rift between the pair of unlikely friends was threatening. But when his attempts to convince CompuServe to pay a little more failed to bear fruit, Kimsey finally agreed to reject the offer. He would later say that, had CompuServe been willing to pay $60 million, he would have corralled his investors and sold out, upset Case or no. Had he done so, the history of online life in the 1990s would have played out in considerably different fashion.

With the CompuServe deal rejected, the die was cast; AOL would make it alone or not at all. At the end of 1991, Kimsey formally passed the baton to Case, bestowing on him the title of CEO of this company in which he had always been far more emotionally invested than his older friend. But then, just a few months later, Kimsey grabbed the title back at the behest of the board of directors. They were on the verge of an initial public offering, and the board had decided that the grizzled and gregarious Kimsey would make a better face of the company on Wall Street than Case, still an awkward public speaker prone to lapse into gaucheness or just clam up entirely at the worst possible moments. It was only temporary, Kimsey assured his friend, who was bravely trying but failing to hide how badly this latest slap in the face from AOL’s investors stung him.

America Online went public on March 19, 1992, with an initial offering of 2 million shares. Suddenly nearly everyone at the company, now 116 employees strong, was wealthy. Jim Kimsey made $3.2 million that day, Steve Case $2 million. A real buzz was building around AOL, which was indeed increasingly being seen, just as Case had always intended, as the American mainstream’s online service. The Wall Street Journal’s influential technology reporter Walt Mossberg called AOL “the sophisticated wave of the future,” and no less a tech mogul than Paul Allen of Microsoft fame began buying up shares at a voracious pace. Ten years on from its founding, and already on its third name, AOL was finally getting hot. Which was good, because it would never be cool, would always be spurned by the tech intelligentsia who wrote for Wired and talked about the Singularity. No matter; Steve Case would take being profitable over being cool any day, would happily play Michael Bolton to the other services’ Nirvana.

For all the change and turmoil that Control Video/Quantum Computer/America Online had gone through over the past decade, Bill von Meister’s original vision for the company remained intact to a surprising degree. He had recognized that an online service must offer the things that mainstream America cared about in order to foster mainstream appeal. He had recognized that an online service must be made as easy to use as humanly possible. And he had seen the commercial and technical advantages — not least in fostering that aforementioned ease of use — that could flow from taking complete control of the subscriber’s experience via custom, proprietary software. He had even seen that the mainstream online life of the future would be based around graphics at least as much as text. But, as usual for him, he had come to all these realizations a little too early. Now, the technology was catching up to the vision, and AOL stood poised to reap rewards which even Steve Case could hardly imagine.

(Sources: the books On the Way to the Web: The Secret History of the Internet and its Founders by Michael A. Banks, Stealing Time: Steve Case, Jerry Levin, and the Collapse of AOL Time Warner by Alec Klein, Fools Rush In: Steve Case, Jerry Levin, and the Unmaking of AOL Time Warner by Nina Munk, and There Must be a Pony in Here Somewhere: The AOL Time Warner Debacle by Kara Swisher; Softline of May 1982; New York Times of December 3 1984; Ahoy! of February 1985; Commodore Power/Play of December 1984/January 1985; Info issues 6 and 9; Run of August 1985 and November 1985; Midnite Software Gazette of January/February 1985 and November/December 1985; Washington Post of May 30 1985 and June 29 1990; Compute! of November 1985; Compute!’s Gazette of March 1986 and January 1989; Commodore Magazine of October 1989; Commodore World of August/September 1995; The Monitor of March 1996; the episode of the Computer Chronicles television series entitled “Online Databases, Part 1”; old Usenet posts by C.D. Kaiser and Randell Jesup.)