Has any successful piece of software ever deserved its success less than the benighted, unloved exercise in minimalism that was MS-DOS? The program that started its life as a stopgap under the name of “The Quick and Dirty Operating System” at a tiny, long-forgotten hardware maker called Seattle Computer Products remained a stopgap when it was purchased by Bill Gates of Microsoft and hastily licensed to IBM for their new personal computer. Archaic even when the IBM PC shipped in October of 1981, MS-DOS immediately sent half the software industry scurrying to come up with something better. Yet actually arriving at a viable replacement would absorb a decade’s worth of disappointment and disillusion, conflict and compromise — and even then the “replacement” would still have to be built on top of the quick-and-dirty operating system that just wouldn’t die.

This, then, is the story of that decade, and of how Microsoft at the end of it finally broke Windows into the mainstream.

When IBM belatedly turned their attention to the emerging microcomputer market in 1980, it was both a case of bold new approaches and business-as-usual. In the willingness they showed to work together with outside partners on the hardware and especially the software front, the IBM PC was a departure for them. In other ways, though, it was a continuation of a longstanding design philosophy.

With the introduction of the System/360 line of mainframes back in 1964, IBM had in many ways invented the notion of a computing platform: a nexus of computer models that could share hardware peripherals and that could all run the same software. To buy an IBM system thereafter wasn’t so much to buy a single computer as it was to buy into a rich computing ecosystem. Long before the saying went around corporate America that “no one ever got fired for buying Microsoft,” the same was said of IBM. When you contacted them, they sent a salesman or two out to discuss your needs, desires, and budget. Then, they tailored an installation to suit and set it up for you. You paid a bit more for an IBM, but you knew it was safe. System/360 models were available at prices ranging from $2500 per month to $115,000 per month, with the latter machine a thousand times more powerful than the former. Their systems were thus designed, as all their sales literature emphasized, to grow with you. When you needed more computer, you just contacted the mother ship again, and another dark-suited fellow came out to help you decide what your latest needs really were. With IBM, no sharp breaks ever came in the form of new models which were incompatible with the old, requiring you to remake from scratch all of the processes on which your business depended. Progress in terms of IBM computing was a gradual evolution, not a series of major, disruptive revolutions. Many a corporate purchasing manager loved them for the warm blanket of safety, security, and compatibility they provided. “Once a customer entered the circle of 360 users,” noted IBM’s president Thomas Watson Jr., “we knew we could keep him there a very long time.”

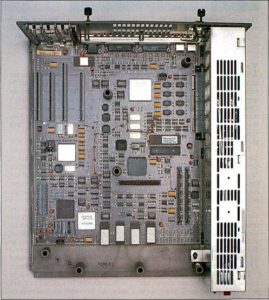

The same philosophy could be seen all over the IBM PC. Indeed, it would, as much as the IBM name itself, make the first general-purpose IBM microcomputer the accepted standard for business computing on the desktop, just as were their mainframe lines in the big corporate data centers. You could tell right away that the IBM PC was both built to last and built to grow along with you. Opening its big metal case revealed a long row of slots just waiting to be filled, thereby transforming it into exactly the computer you needed. You could buy an IBM PC with one or two floppy drives, or more, or none; with a color or a monochrome display card; with anywhere from 16 K to 256 K of RAM.

But the machine you configured at time of purchase was only the beginning. Both IBM and a thriving aftermarket industry would come to offer heaps more possibilities in the months and years that followed the release of the first IBM PC: hard drives, optical drives, better display cards, sound cards, ever larger RAM cards. And even when you finally did bite the bullet and buy a whole new machine with a faster processor, such as 1984’s PC/AT, said machine would still be able to run the same software as the old, just as its slots would still be able to accommodate hardware peripherals scavenged from the old.

Evolution rather than revolution. It worked out so well that the computer you have on your desk or in your carry-on bag today, whether you prefer Windows, OS X, or Linux, is a direct, lineal descendant of the microcomputer IBM released more than 35 years ago. Long after IBM themselves got out of the PC game, and long after sexier competitors like the Commodore Amiga and the first and second generation Apple Macintosh have fallen by the wayside, the beast they created shambles on. Its long life is not, as zealots of those other models don’t hesitate to point out, down to any intrinsic technical brilliance. It’s rather all down to the slow, steady virtues of openness, expandibility, and continuity. The timeline of what’s become known as the “Wintel” architecture in personal computing contains not a single sharp break with the past, only incremental change that’s been carefully managed — sometimes even technologically compromised in comparison to what it might have been — so as not to break compatibility from one generation to the next.

That, anyway, is the story of the IBM PC on the hardware side, and a remarkable story it is. On the software side, however, the tale is more complicated, thanks to the failure of IBM to remember the full lesson of their own System/360.

At first glance, the story of the IBM PC on the software side seems to be just another example of IBM straining to offer a machine that can be made to suit every potential customer, from the casual home user dabbling in games and BASIC to the most rarefied corporate purchaser using it to run mission-critical applications. Thus when IBM announced the computer, four official software operating paradigms were also announced. One could use the erstwhile quick-and-dirty operating system that was now known as MS-DOS; [1]MS-DOS was known as PC-DOS when sold directly under license by IBM. Its functionality, however, was almost or entirely identical to the Microsoft-branded version. For simplicity’s sake, I will just refer to “MS-DOS” whenever speaking about either product — or, more commonly, both — in the course of this series of articles. one could use CP/M, the standard for much of pre-IBM business microcomputing, from which MS-DOS had borrowed rather, shall we say, extensively (remember the latter’s original name?); one could use an innovative cross-platform environment, developed by the University of California San Diego’s computer-science department, that was based around the programming language Pascal; or one could choose not to purchase any additional operating software at all, instead relying on the machine’s built-in ROM-hosted Microsoft BASIC environment, which wasn’t at all dissimilar from those the same company had already provided for many or most of the other microcomputers on the market.

In practice, though, this smorgasbord of possibilities only offered one remotely appetizing entree in the eyes of most users. The BASIC environment was really suited only to home users wanting to tinker with simple programs and save them on cassettes, a market IBM had imagined themselves entering with their first microcomputer but had in reality priced themselves out of. The UCSD Pascal system was ahead of its time with its focus on cross-platform interoperability, accomplished using a form of byte code that would later inspire the Java virtual machine, but it was also rather slow, resource-hungry, and, well, just kind of weird — and it was quite expensive as well. CP/M ought to have been poised for success on the new machine given its earlier dominance, but its parent company Digital Research was unconscionably late making it available for the IBM PC, taking until well after the machine’s October 1981 launch to get it ported from the Zilog Z-80 microprocessor to the Intel architecture of the IBM PC and its successor models — and when CP/M finally did appear it was, once again, expensive.

That left MS-DOS, which worked, was available, and was fairly cheap. As corporations rushed out to purchase the first safe business microcomputer at a pace even IBM had never anticipated, MS-DOS relegated the other three solutions to a footnote in computing history. Nobody’s favorite operating system, it was about to become the most popular one in the world.

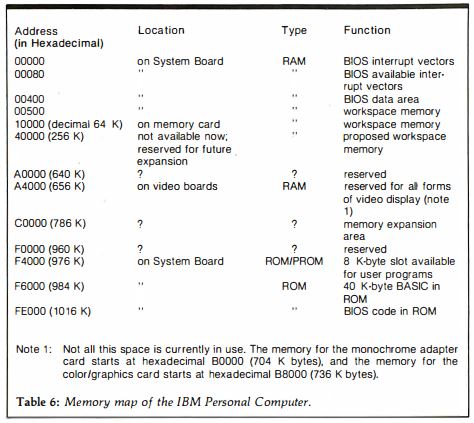

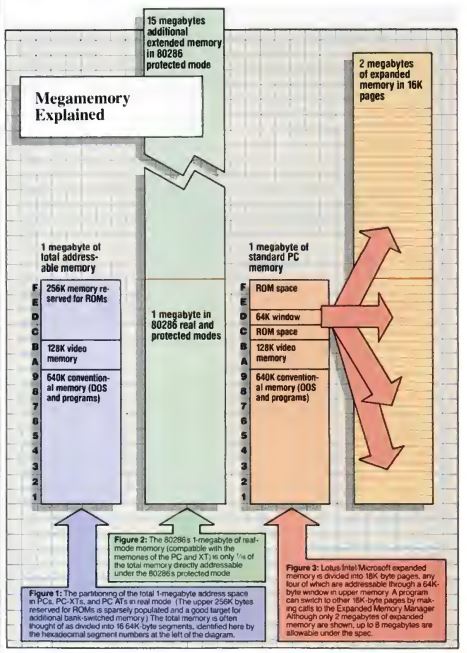

The System/360 line that had made IBM the 800-pound gorilla of large-scale corporate data-processing had used an operating system developed in-house by them with an eye toward the future every bit as pronounced as that evinced by the same line’s hardware. The emerging IBM PC platform, on the other hand, had gotten only half of that equation down. MS-DOS was locked into the 1 MB address space of the Intel 8088, allowing any computer on which it ran just 640 K of RAM at the most. When newer Intel processors with larger address spaces began to appear in new IBM models as early as 1984, software and hardware makers and ordinary users alike would be forced to expend huge amounts of time and effort on ugly, inefficient hacks to get around the problem.

Infamous though the 640 K barrier would become, memory was just one of the problems that would dog MS-DOS programmers throughout the operating system’s lifetime. True to its post-quick-and-dirty moniker of the Microsoft Disk Operating System, most of its 27 function calls involved reading and writing to disks. Otherwise, it allowed programmers to read the keyboard and put text on the screen — and not much of anything else. If you wanted to show graphics or play sounds, or even just send something to the printer, the only way to do it was to manually manipulate the underlying hardware. Here the huge amount of flexibility and expandability that had been designed into the IBM PC’s hardware architecture became a programmer’s nightmare. Let’s say you wanted to put some graphics on the screen. Well, a given machine might have an MDA monochrome video card or a CGA color card, or, soon enough, a monochrome Hercules card or a color EGA card. You the programmer had to build into your program a way of figuring out which one of these your host had, and then had to write code for dealing with each possibility on its own terms.

An example of how truly ridiculous things could get is provided by WordPerfect, the most popular business word processor from the mid-1980s on. WordPerfect Corporation maintained an entire staff of programmers whose sole job function was to devour the technical specifications and command protocols of each new printer that hit the market and write drivers for it. Their output took the form of an ever-growing pile of disks that had to be stuffed into every WordPerfect box, even though only one of them would be of any use to any given buyer. Meanwhile another department had to deal with the constant calls from customers who had purchased a printer for which they couldn’t find a driver on their extant mountain of disks, situations that could be remedied in the era before widespread telecommunications only by shipping off yet more disks. It made for one hell of a way to run a software business; at times the word processor itself could almost feel like an afterthought for WordPerfect Printer Drivers, Inc.

But the most glaringly obvious drawback to MS-DOS stared you in the face every time you turned on the computer and were greeted with that blinking, cryptic “C:\>” prompt. Hackers might have loved the command line, but it was a nightmare for a secretary or an executive who saw the computer only as an appliance. MS-DOS contrived to make everything more difficult through its sheer primitive minimalism. Think of the way you work with your computer today. You’re used to having several applications open at once, used to being able to move between them and cut and paste bits and pieces from one to the other as needed. With MS-DOS, you couldn’t do any of this. You could run just one application at a time, which would completely fill the screen. To do something else, you had to shut down the application you were currently using and start another. And if what you were hoping to do was to use something you had made in the first application inside the second, you could almost always forget about it; every application had its own proprietary data formats, and MS-DOS didn’t provide any method of its own of moving data from one to another.

Of course, the drawbacks of MS-DOS spelled opportunity for those able to offer ways to get around them. Thus Lotus Corporation became one of the biggest software success stories of the 1980s by making Lotus 1-2-3, an unwieldy colossus that integrated a spreadsheet, a database manager, and a graph- and chart-maker into a single application. People loved the thing, bloated though it was, because all of its parts could at least talk to one another.

Other solutions to the countless shortcomings of MS-DOS, equally inelegant and partial, were rampant by the time Lotus 1-2-3 hit it big. Various companies published various types of hacks to let users keep multiple applications resident in memory, switching between them using special arcane key sequences. Various companies discussed pacts to make interoperable file formats for data transfer between applications, although few of them got very far. Various companies made a cottage industry out of selling pre-packaged printer drivers to other developers for use in their applications. People wrote MS-DOS startup scripts that brought up easy-to-choose-from menus of common applications on bootup, thereby insulating timid secretaries and executives alike from the terrifying vagueness of the command line. And everybody seemed to be working a different angle when it came to getting around the 640 K barrier.

All of these bespoke solutions constituted a patchwork quilt which the individual user or IT manager would have to stitch together for herself in order to arrive at anything like a comprehensive remedy for MS-DOS’s failings. But other developers had grander plans, and much of their work quickly coalesced around various forms of the graphical user interface. Initially, this fixation may sound surprising if not inexplicable. A GUI built using a mouse, menus, icons, and windows would seem to fix only one of MS-DOS’s problems, that being its legendary user-unfriendliness. What about all the rest of its issues?

As it happens, when we look closer at what a GUI-based operating environment does and how it does it, we find that it must or at least ought to carry with it solutions to MS-DOS’s other issues as well. A windowed environment ideally allows multiple applications to be open at one time, if not actually running simultaneously. Being able to copy and paste pieces from one of those open applications to another requires interoperable data formats. Running or loading multiple applications also means that one of them can’t be allowed to root around in the machine’s innards indiscriminately, lest it damage the work of the others; this, then, must mark the end of the line for bare-metal programming, shifting the onus onto the system software to provide a proper layer of high-level function calls insulating applications from a machine’s actual or potential hardware. And GUIs, given that they need to do all of the above and more, are notoriously memory-hungry, which obligated many of those who made such products in the 1980s to find some way around MS-DOS’s memory constraints. So, a GUI environment proves to be much, much more than just a cutesy way of issuing commands to the computer. Implementing one on an IBM PC or one of its descendants meant that the quick-and-dirty minimalism of MS-DOS had to be chucked forever.

Some casual histories of computing would have you believe that the entire software industry was rigidly fixated on the command line until Steve Jobs came along to show them a better way with the Apple Macintosh, whereupon they were dragged kicking and screaming into computing’s necessary future. Such histories generally do acknowledge that Jobs himself got the GUI religion after a visit to the Xerox Palo Alto Research Center in December of 1979, but what tends to get lost is the fact that he was hardly alone in viewing PARC’s user-interface innovations as the natural direction for computing to go in the more personal, friendlier era of high technology being ushered in by the microcomputer. Indeed, by 1981, two years before a GUI made its debut on an Apple product in the form of the Lisa, seemingly everyone was already talking about them, even if the acronym itself had yet to be invented. This is not meant to minimize the hugely important role Apple really would play in the evolution of the GUI; as we’ll see to a large extent in the course of this very series of articles, they did much original formative work that has made its way into the computer you’re probably using to read these words right now. It’s rather just to say that the complete picture of how the GUI made its way to the personal computer is, as tends to happen when you dig below the surface of any history, more variegated than a tidy narrative of “A caused B which caused C” allows for.

In that spirit, we can note that the project destined to create the MS-DOS world’s first GUI was begun at roughly the same time that a bored and disgruntled Steve Jobs over at Apple, having been booted off the Lisa project, seized control of something called the Macintosh, planned at the time as an inexpensive and user-friendly computer for the home. This other pioneering project in question, also started during the first quarter of 1981, was the work of a brief-lived titan of business software called VisiCorp.

VisiCorp had been founded by one Dan Fylstra under the name of Personal Software in 1978, at the very dawn of the microcomputer age, as one of the first full-service software publishers, trafficking mostly in games which were submitted to him by hobbyists. His company became known for their comparatively slick presentation in a milieu that was generally anything but; MicroChess, one of their first releases, was quite probably the first computer game ever to be packaged in a full-color box rather than a Ziploc baggie. But their course was changed dramatically the following year when a Harvard MBA student named Dan Bricklin contacted Fylstra with a proposal for a software tool that would let accountants and other businesspeople automate most of the laborious financial calculations they were accustomed to doing by hand. Fylstra was intrigued enough to lend the microcomputer-less Bricklin one of his own Apple IIs — whereupon, according to legend at least, the latter proceeded to invent the electronic spreadsheet over the course of a single weekend. He hired a more skilled programmer named Bob Frankston and formed a company called Software Arts to develop that rough prototype into a finished application, which Fylstra’s Personal Software published in October of 1979.

Up to that point, early microcomputers like the Apple II, Radio Shack TRS-80, and Commodore PET had been a hard sell as practical tools for business — even for their most seemingly obvious business application of all, that of word processing. Their screens could often only display 40 columns of big, blocky characters, often only in upper case — about as far away from the later GUI ideal of “what you see is what you get” as it was possible to go — while their user interfaces were arcane at best and their minuscule memories could only accommodate documents of a few pages in length. Most potential business users took one look at the situation, added on the steep price tag for it all, and turned back to their typewriters with a shrug.

VisiCalc, however, was different. It was so clearly, manifestly a better way to do accounting that every accountant Fylstra showed it to lit up like a child on Christmas morning, giggling with delight as she changed a number here or there and watched all of the other rows and columns update automagically. VisiCalc took off like nothing the young microcomputer industry had ever seen, landing tens of thousands of the strange little machines in corporate accounting departments. As the first tangible proof of what personal computing could mean to business, it prompted people to begin asking why IBM wasn’t a part of this new party, doing much to convince the latter to remedy that absence by making a microcomputer of their own. It’s thus no exaggeration to say that the entire industry of business-oriented personal computing was built on the proof of concept that was VisiCalc. It would sell 500,000 copies by January of 1983, an absolutely staggering figure for that time. Fylstra, seeing what was buttering his bread, eventually dropped all of the games and other hobbyist-oriented software from his catalog and reinvented Personal Software as VisiCorp, the first major publisher of personal-computer business applications.

But all was not quite as rosy as it seemed at the new VisiCorp. Almost from the moment of the name change, Dan Fylstra found his relationship with Dan Bricklin growing strained. The latter was suspicious of his publisher’s rebranding themselves in the image of his intellectual property, feeling they had been little more than the passive beneficiaries of his brilliant stroke. This point of view was by no means an entirely fair one. While it may have been true that Fylstra had been immensely lucky to get his hands on Bricklin’s once-in-a-lifetime innovation, he’d also made it possible by loaning Bricklin an Apple II in the first place, then done much to make VisiCalc palatable for corporate America through slick, professional packaging and marketing that projected exactly the right conservative, businesslike image, consciously eschewing the hippie ethos of the Homebrew Computer Club. Nevertheless, Bricklin, perhaps a bit drunk on all the praise of his genius, credited VisiCorp’s contribution to VisiCalc’s success but little. And so Fylstra, nervous about continuing to stake his entire company on Bricklin, set up an internal development team to create more products for the business market.

By the beginning of 1981, the IBM PC project which VisiCalc had done so much to prompt was in full swing, with the finished machine due to be released before the end of the year. Thanks to their status as publisher of the hottest application in business software, VisiCorp had been taken into IBM’s confidence, one of a select number of software developers and publishers given access to prototype hardware in order to have products ready to go on the day the new machine shipped. It seems that VisiCorp realized even at this early point how underwhelming the new machine’s various operating paradigms were likely to be, for even before they had actual IBM hardware to hand, they started mocking up the GUI environment that would become known as Visi On using Apple II and III machines. Already at this early date, it reflected a real, honest, fundamental attempt to craft a more workable model for personal computing than the nightmare that MS-DOS alone could be. William Coleman, the head of the development team, later stated in reference to the project’s founding goals that “we wanted users to be able to have multiple programs on the screen at one time, ease of learning and use, and simple transfer of data from one program to another.”

Visi On seemed to have huge potential. When VisiCorp demonstrated an early version, albeit far later than they had expected to be able to, at a trade show in December of 1982, Dan Fylstra remembers a rapturous reception, “competitors standing in front of [the] booth at the show, shaking their heads and wondering how the company had pulled the product off.” It was indeed an impressive coup; well before the Apple Macintosh or even Lisa had debuted, VisiCorp was showing off a full-fledged GUI environment running on hardware that had heretofore been considered suitable only for ugly old MS-DOS.

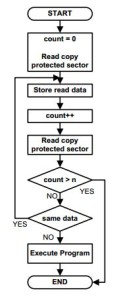

Still, actually bringing a GUI environment to market and making a success out of it was a much taller order than it might have first appeared. As even Apple would soon be learning to their chagrin, any such product trying to make a go of it within the increasingly MS-DOS-dominated culture of mainstream business computing ran headlong into a whole pile of problems which lacked clearly best solutions. Visi On, like almost all of the GUI products that would follow for the IBM hardware architecture, was built on top of MS-DOS, using the latter’s low-level function calls to manage disks and files. This meant that users could install it on their hard drive and pop between Visi On and vanilla MS-DOS as the need arose. But a much thornier question was that of running existing MS-DOS applications within the Visi On environment. Those which assumed they had full control of the system — which was practically all of them, because why wouldn’t they? — would flame out as soon as they tried to directly access some piece of hardware that was now controlled by Visi On, or tried to put something in some specific place inside what was now a shared pool of memory, or tried to do any number of other now-forbidden things. VisiCorp thus made the hard decision to not even try to get existing MS-DOS applications to run under Visi On. Software developers would have to make new, native applications for the system; Visi On would effectively be a new computing platform onto itself.

This decision was questionable in commercial if not technical terms, given how hard it must be to get a new platform accepted in an MS-DOS-dominated marketplace. But VisiCorp then proceeded to make the problem even worse. It would only be possible to program Visi On, they announced, after purchasing an expensive development kit and installing it on a $20,000 DEC PDP-11 minicomputer. They thus opted for an approach similar to one Apple was opting for with the Lisa: to allow that machine to be programmed only by yoking it up to a second Lisa. In thus betraying the original promise of the personal computer as an anything machine which ordinary users could program to do their will, both Visi On and the Lisa operating system arguably removed their hosting hardware from that category entirely, converting it into a closed electronic appliance more akin to a game console. Taxonomical debates aside, the barriers to entry even for one who wished merely to use Visi On to run store-bought applications were almost as steep: when this first MS-DOS-based GUI finally shipped on December 16, 1983, after a long series of postponements, it required a machine with 512 K of memory and a hard drive to run and cost more than $1000 to buy.

Visi On was, as the technology pundits like to say, “ahead of the hardware market.” In quite a number of ways it was actually far more ambitious than what would emerge a month or so after it as the Apple Macintosh. Multiple Visi On applications could be open at the same time (although they didn’t actually run concurrently), and a surprisingly sophisticated virtual-memory system was capable of swapping out pages to hard disk if software tried to allocate more memory than was physically available on the computer. Similar features wouldn’t reach MacOS until 1987’s System 5 and 1991’s System 7 respectively.

In the realm of usability, however, Visi On unquestionably fell down in comparison to Apple’s work. The user interfaces for the Lisa and the Macintosh made almost all the right choices right from the beginning, expanding upon the work done at Xerox PARC in all the right ways. Many of the choices made by VisiCorp, on the other hand, feel far more dubious today — and, one has to believe, not just out of the contempt bred by all those intervening decades of user interfaces modeled on Apple’s. Consider the task of moving and sizing windows on the screen, which was implemented so elegantly on the original Lisa and Macintosh that it’s been changed not at all in all the decades since. While Visi On too allows windows to be sized and placed where you will, and allows them to overlay one another — something by no means true of all of the MS-DOS GUI systems that would follow — doing so is a clumsy process involving picking options out of menus rather than simply dragging title bars or sizing widgets. In fact, Visi On uses no icons whatsoever. For anyone still enamored with the old saw that Apple just ripped off the Xerox PARC interface in its entirety and stuck it on the Lisa and Mac, Visi On, being much more slavishly based on the PARC model, provides an instructive demonstration of how far the likes of the Xerox Alto still was from the intuitive ease of Apple’s interface.

A Quick Tour of Visi On

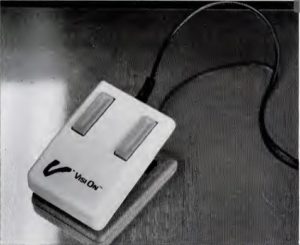

With mice still exotic creatures, VisiCorp provided their own to work with Visi On. Many other early GUI-makers, Microsoft among them, would follow their lead.

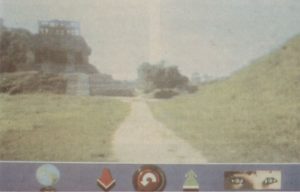

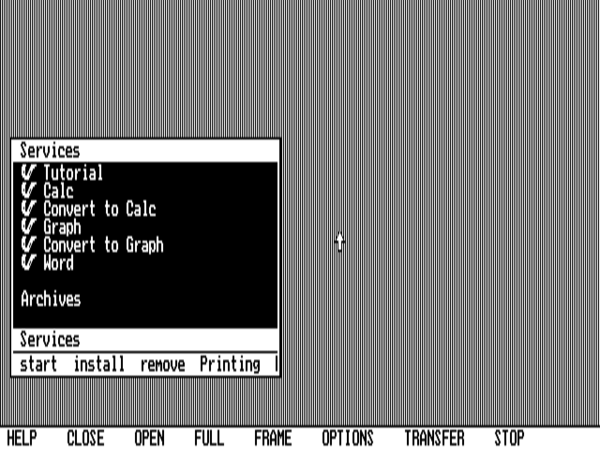

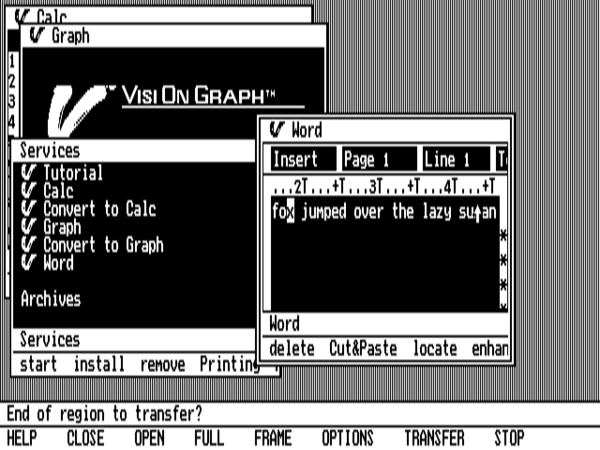

Visi On looks like this upon booting up on an original IBM PC with 640 K of memory and a CGA video card, running in high-resolution monochrome mode at 640 X 200. “Services” is Visi On’s terminology for installed applications. The list of them which you see here, all provided by VisiCorp themselves, are the only ones that would ever exist, thanks to Visi On’s complete commercial failure.

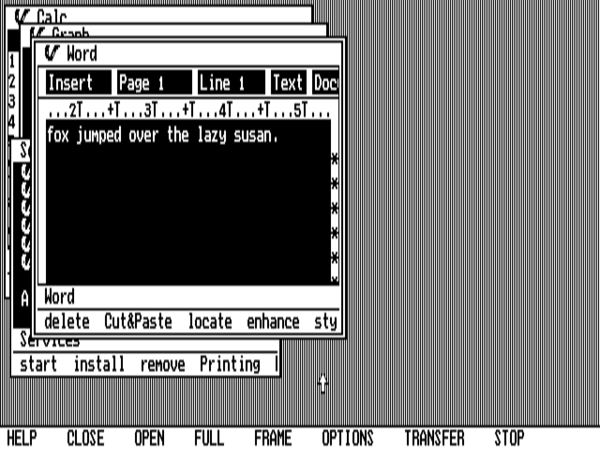

We’ve started up a spreadsheet, a graphing application, and a word processor at the same time. These don’t actually run concurrently, as they would under a true multitasking operating system, but are visible onscreen in their separate windows, becoming active when we click them. (Something similar would not have been possible under MacOS prior to 1987.)

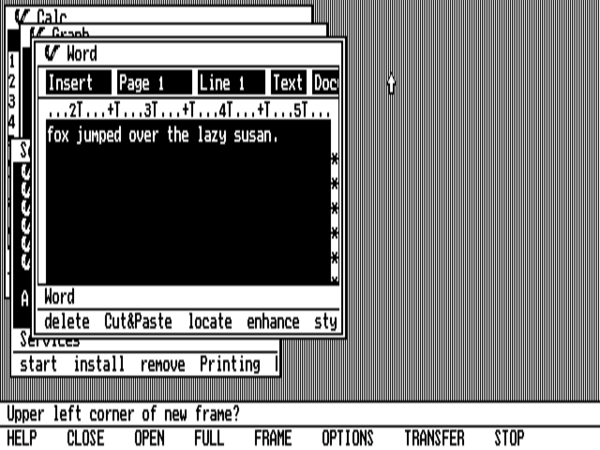

Although Visi On does sport windows that can be sized and placed anywhere and can overlap one another, arranging them is made extremely tedious by its lack of any concept of mouse-dragging; the mouse can only be used for single clicks. So, you have to click the “Frame” menu option and see its instructions through step by step. Note also the lack of pull-down menus, another of Apple’s expansions upon the work down at Xerox PARC. Menus here are just one-shot commands, akin to what a modern GUI user would call a button.

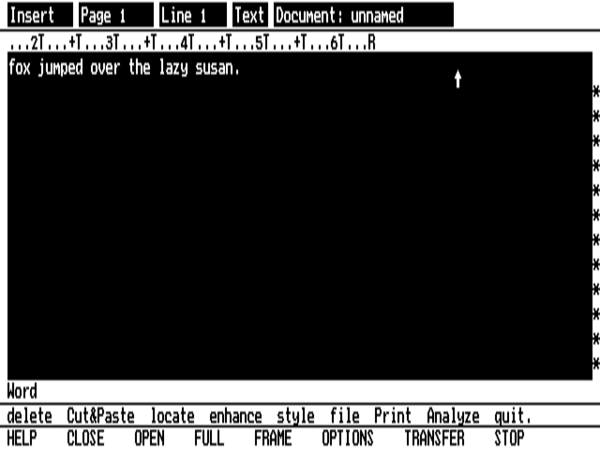

Fortunately, you can make a window full-screen with just a couple of clicks. Unfortunately, you then have to laboriously re-“Frame” it when you want to shrink it again; it doesn’t remember where it used to be.

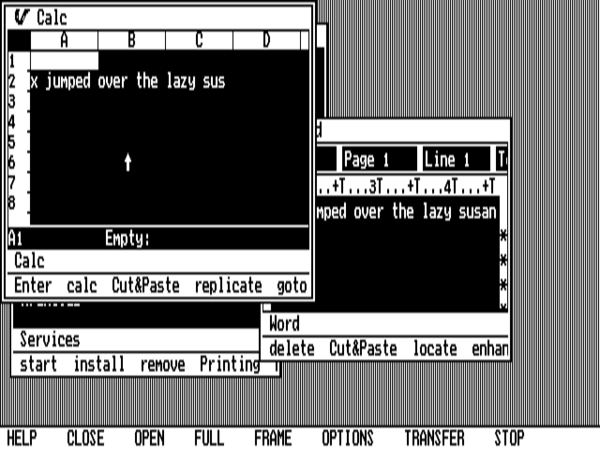

The lack of a mouse-drag affordance makes the “Transfer” function — Visi On’s version of copy-and-paste — extremely tedious.

And, as with most things in Visi On, transferring data is also slow. Moving that little snippet of text from the word processor to the spreadsheet took about ten seconds.

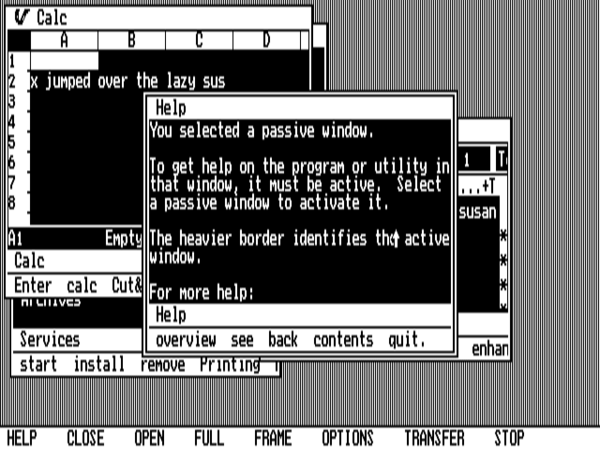

On the plus side, Visi On sports a help system that’s crazily comprehensive for its time — much more so than the one that would ship with MacOS or, for that matter, Microsoft Windows for quite some years.

As if it didn’t have enough intrinsic problems working against it, extrinsic ones also contrived to undo Visi On in the marketplace. By the time it shipped, VisiCorp was a shadow of what they had so recently been. VisiCalc sales had collapsed over the past year, going from nearly 40,000 units in December of 1982 alone to fewer than 6000 units in December of 1983 in the face of competing products — most notably the burgeoning juggernaut Lotus 1-2-3 — and what VisiCorp described as Software Arts’s failure to provide “timely upgrades” amidst a relationship that was growing steadily more tense. With VisiCorp’s marketplace clout thus dissipating like air out of a balloon, it was hardly the ideal moment for them to ask for the sorts of commitments from users and developers required by Visi On.

The very first MS-DOS-based GUI struggled along with no uptake whatsoever for nine months or so; the only applications made for it were the word processor, spreadsheet, and graphing program VisiCorp made themselves. In September of 1984, with VisiCorp and Software Arts now embroiled in a court battle that would benefit only their competitors, the Visi On technology was sold to a veteran manufacturer of mainframes and supercomputers called Control Data Corporation, who proceeded to do very little if anything with it. VisiCorp went bankrupt soon after, while Lotus bought out Software Arts for a paltry $800,000, thus ending the most dramatic boom-and-bust tale of the early business-software industry. “VisiCorp’s auspicious climb and subsequent backslide,” wrote InfoWorld magazine, “will no doubt become a ‘how-not-to’ primer for software companies of the future.”

Visi On’s struggles may have been exacerbated by the sorry state of its parent company, but time would prove them to be by no means atypical of MS-DOS-based GUI systems in general. Already in February of 1984, PC Magazine could point to at least four other GUIs of one sort or another in the works from other third-party developers: Concurrent CP/M with Windows by Digital Research, VisuALL by Trillian Computer Corporation, DesqView by Quarterdeck Office Systems, and WindowMaster by Structured Systems. All of these would make different choices in trying to balance the seemingly hopelessly competing priorities of reasonable speed and reasonable hardware requirements, compatibility with MS-DOS applications and compatibility with post-MS-DOS philosophies of computing. None would find the sweet spot. Neither they nor the still more GUI environments that followed them would be able to offer a combination of features, ease of use, and price that the market found compelling, so much so that by 1985 the whole field of MS-DOS GUIs was coming to be viewed with disdain by computer users who had been disappointed again and again. If you wanted a GUI, went the conventional wisdom, buy a Macintosh and live with the paltry software selection and the higher price. The mainstream of business computing, meanwhile, continued to truck along with creaky old MS-DOS, a shaky edifice made still more unstable by all of the hacks being grafted onto it to expand its memory model or to force it to load more than one application at a time. “Windowing and desktop environments are a solution looking for a problem,” said Robert Lefkowits, director of software services for Infocorp, in the fall of 1985. “Users aren’t really looking for any kind of windowing environment to solve problems. Users are not expressing a need or desire for it.”

The reason they weren’t, of course, was because they hadn’t yet seen a GUI in which the pleasure outweighed the pain. Entrenched as users were in the old way of doing things, accepting as they had become of all of MS-DOS’s discontents as simply the way computing was, it was up to software developers to show them why a GUI was something they had never known they couldn’t live without. Microsoft at least, the very people who had saddled their industry with the MS-DOS albatross, were smart enough to realize that mainstream business computing must be remade in the image of the much-scoffed-at Macintosh at some point. Further, they understood that it behooved them to do the remaking if they didn’t want to go the way of VisiCorp. By the time Lefkowits said his words, the long, winding tale of dogged perseverance in the face of failure and frustration that would become the story of Microsoft Windows had already been playing out for several years. One of these days, the GUI was going to make its breakthrough in one way or another, and it was going to do so with a Microsoft logo on its box — even if Bill Gates had to personally ram it down his customers’ throats.

(Sources: the books The Making of Microsoft: How Bill Gates and His Team Created the World’s Most Successful Software Company by Daniel Ichbiah and Susan L. Knepper and Computer Wars: The Fall of IBM and the Future of Global Technology by Charles H. Ferguson and Charles R. Morris; InfoWorld of October 31 1983, November 14 1983, April 2 1984, July 2 1984, and October 7 1985; Byte of June 1983, July 1983; PC Magazine of February 7 1984, and October 2 1984; the episode of the Computer Chronicles television program called “Integrated Software.” Finally, I owe a lot to Nathan Lineback for the histories, insights, comparisons, and images found at his wonderful online “GUI Gallery.”)

Footnotes

| ↑1 | MS-DOS was known as PC-DOS when sold directly under license by IBM. Its functionality, however, was almost or entirely identical to the Microsoft-branded version. For simplicity’s sake, I will just refer to “MS-DOS” whenever speaking about either product — or, more commonly, both — in the course of this series of articles. |

|---|