Unless you’re an extremely patient and/or nostalgic sort, most of the games I’ve been writing about on this blog for over two years now are a hard sell as something to just pick up and play for fun. There have been occasional exceptions: M.U.L.E., probably my favorite of any game I’ve written about so far, remains as magical and accessible as it was the day it was made; some or most of the Infocom titles remain fresh and entertaining as both fictions and games. Still, there’s an aspirational quality to even some of the most remarkable examples of gaming in this era. Colorful boxes and grandiose claims of epic tales of adventure often far exceeded the minimalist content of the disks themselves. In another era we might levy accusations of false advertising, but that doesn’t feel quite like what’s going on here. Rather, players and developers entered into a sort of partnership, a shared recognition that there were sharp limits to what developers could do with these simple computers, but that players could fill in many of the missing pieces with determined imaginings of what they could someday actually be getting on those disks.

Which didn’t mean that developers weren’t positively salivating after technological advances that could turn more of their aspirations into realities. And progress did come. Between the Trinity of 1977 and 1983, the year we’ve reached as I write this, typical memory sizes on the relatively inexpensive 8-bit machines typical in homes increased from as little as 4 K to 48 K, with 64 K set to become the accepted minimum by 1984. The arrival of the Atari 400 and 800 in 1979 and the Commodore 64 in 1982 each brought major advances in audiovisual capabilities. Faster, more convenient disks replaced cassettes as the accepted standard storage medium, at least in North America. But other parts of the technological equation remained frozen, perhaps surprisingly so given the modern accepted wisdom about the pace of advancement in computing. Home machines in 1983 were still mostly based around one of the two CPUs found in the Trinity of 1977, the Zilog Z80 and the MOS 6502, and these chips were still clocked at roughly the same speeds as in 1977. Thus, Moore’s Law notwithstanding, the processing potential that programmers had to work with remained for the moment frozen in place.

To find movement in this most fundamental part of a microcomputer we have to look to the more expensive machines. The IBM PC heralded the beginning of 16-bit microcomputing in 1981. The Apple Lisa of 1983 became the first mass-produced PC to use the state-of-the-art Motorola 68000, a chip which would have a major role to play in computing for the rest of the decade and beyond. Both the Lisa and an upgraded model of the IBM PC introduced in 1983, the PC/XT, also sported hard drives, which let them store several megabytes of data in constantly accessible form, and to retrieve it much more quickly and reliably than could be done from floppy disks. Still, these machines carried huge disadvantages to offset their technical advancements. The IBM PC and especially the PC/XT were, as noted, expensive, and had fairly atrocious graphics and sound even by the standards of 1983. The Lisa was really, really expensive, lacked color and sound, and was consciously designed to be as inaccessible to the hackers and bedroom coders who built the games industry as the Apple II was wide open. The advancements of the IBM PC and the Lisa would eventually be packaged into forms more useful to gamers and game developers, but for now for most gamers it was 8 bits, floppy disks, and (at best) 64 K.

Developers and engineers — and, I should note, by no means just those in the games industry and by no means just those working with the 8-bit machines — were always on the lookout for a secret weapon that might let them leapfrog some steps in what must have sometimes seemed a plodding pace of technological change, something that might let them get to that aspirational future faster. They found one that looked like it might just have potential in a surprising place: in the world of ordinary consumer electronics. Or perhaps by 1983 it was not so surprising, for by then they had already been waiting for, speculating about, and occasionally tinkering with the technology in question for quite some years.

At the end of the 1960s, with the home-videocassette boom still years away, the American media conglomerate MCA and the Dutch electronics giant Philips coincidentally each began working separately on a technology to encode video onto album-like discs using optical storage. The video would be recorded as a series of tiny pits in the plastic surface of the disc, which could be read by the exotic technology of a laser beam scanning it as the disc was rotated. The two companies decided to combine their efforts after learning of one another’s existence a few years later, and by the mid-1970s they were holding regular joint demonstrations of the new technology, to which they gave the perfect name for the era: DiscoVision.

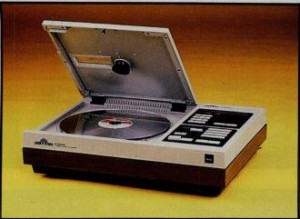

Yet laser discs, as they came to be more commonly called, were painfully slow to reach the market. A few pilots and prototype programs aside, the first consumer-grade players didn’t reach stores in numbers until late 1980.

By that time VCRs were selling in huge numbers. Laser discs offered far better video and audio than VCRs, but, at least from the standpoint of most consumers, had enough disadvantages to more than outweigh that advantage. For starters, they were much more expensive. And they could only hold about 30 minutes of video on a side; thus the viewer had to get up and flip or change the disc, album-style, three times over the course of a typical movie. This was a hard sell indeed to a couch-loving nation that was falling in love with its new remote controls as quickly as its VCRs. Yet it was likely the very thing that the movie and television industry found most pleasing about the laser disc that really turned away consumers: the discs were read only, meaning it was impossible to use them to record from the television, or to copy and swap movies and other programs with friends. Some (admittedly anecdotal) reports claim that up to half of the laser-disc players sold in the early years of the format were returned when their purchasers realized they couldn’t use them to record.

Thus the laser-disc format settled into a long half-life in which it never quite performed up to expectations but never flopped so badly as to disappear entirely. It became the domain of the serious cineastes and home-theater buffs who were willing to put up with its disadvantages in return for the best video and audio quality you could get in the home prior to the arrival of the DVD. Criterion appeared on the scene in 1984 to serve this market with a series of elaborate special editions of classic films loaded with the sorts of extras that other publishers wouldn’t begin to offer until the DVD era: cast and crew interviews, “making of” documentaries, alternate cuts, unused footage, and of course the ubiquitous commentary track (like DVDs, laser discs had the ability to swap and mix audio streams). Even after DVDs began to replace VCRs en masse and thus to change home video forever circa 2000, a substratum of laser-disc loyalists soldiered on, some unwilling to give up on libraries they’d spent many years acquiring, others convinced, like so many vinyl-album boosters, that laser discs simply looked better than the “colder” digital images from DVDs or Blu-ray discs. (Although all of these mediums store data using the same basic optical techniques, in a laser disc the data is analog, and is processed using analog rather than digital circuitry.) Pioneer, who despite having nothing to do with the format’s development became its most consistent champion — they were responsible for more than half of all players sold — surprised those who already thought the format long dead in January of 2009 when they announced that they were discontinuing the last player still available for purchase.

The technology developed for the laser disc first impacted the lives of those of us who didn’t subscribe to Sound and Vision in a different, more tangential way. Even as DiscoVision shambled slowly toward completion during the late 1970s, a parallel project was initiated at Philips to adapt optical-storage technology to audio only. Once again Philips soon discovered another company working on the same thing, this time Sony of Japan, and once again the two elected to join forces. Debuting in early 1983, the new compact disc was first a hit mainly with the same sorts of technophiles and culture vultures who were likely to purchase laser-disc players. Unlike the laser disc, however, the CD’s trajectory didn’t stop there. By 1988, 400 million CDs were being pressed each year, by which time the format was on the verge of its real explosion in popularity; nine years later that number was 5 billion, close to one CD for every person on the planet.

But now let’s back up and relate this new optical audiovisual technology to the computer technologies with which we’re more accustomed to spending our time around these parts. Many engineers and programmers have a specific sort of epiphany after working with electronics in general or computers in particular for a certain amount of time. Data, they realize, is ultimately just data, whether it represents an audio recording, video, text, or computer code. To a computer in particular it’s all just a stream of manipulable numbers. The corollary to this fact is that a medium developed for the storage of one sort of data can be re-purposed to store something else. Microcomputers in particular already had quite a tradition of doing just that even in 1983. The first common storage format for these machines was ordinary cassette tapes, playing on ordinary cassette players wired up to Altairs, TRS-80s, or Apple IIs. The data stored on these tapes, which when played back for human ears just sounded like a stream of discordant noise, could be interpreted by the computer as the stream of 0s and 1s which it encoded. It wasn’t the most efficient of storage methods, but it worked — and it worked with a piece of cheap equipment found lying around in virtually every household, a critical advantage in those do-it-yourself days of hobbyist hackers.
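To make the cassette idea concrete, here is a minimal sketch of how bytes can become sound and back again. It is an illustration only, not a reconstruction of any particular machine's tape format: the tone frequencies, bit rate, sample rate, and the function names `encode` and `decode` are all assumptions chosen for simplicity, and real formats added framing such as start and stop bits that is left out here.

```python
import math

# All of these values are assumptions picked for illustration.
SAMPLE_RATE = 9600                      # audio samples per second
BAUD = 300                              # bits per second
FREQ_ZERO = 1200                        # tone (Hz) standing for a 0 bit
FREQ_ONE = 2400                         # tone (Hz) standing for a 1 bit
SAMPLES_PER_BIT = SAMPLE_RATE // BAUD   # 32 samples per bit


def encode(data):
    """Turn bytes into a list of audio samples in the range -1.0..1.0."""
    samples = []
    for byte in data:
        for i in range(8):              # least significant bit first
            bit = (byte >> i) & 1
            freq = FREQ_ONE if bit else FREQ_ZERO
            for n in range(SAMPLES_PER_BIT):
                samples.append(math.sin(2 * math.pi * freq * n / SAMPLE_RATE))
    return samples


def decode(samples):
    """Recover bytes by counting sign changes in each bit-length window."""
    bits = []
    for start in range(0, len(samples), SAMPLES_PER_BIT):
        window = samples[start:start + SAMPLES_PER_BIT]
        crossings = sum(
            1 for a, b in zip(window, window[1:]) if (a < 0) != (b < 0)
        )
        # The higher tone changes sign about twice as often as the lower
        # one, so a threshold between the two counts separates 1s from 0s.
        bits.append(1 if crossings > 11 else 0)
    out = bytearray()
    for i in range(0, len(bits) - 7, 8):
        out.append(sum(bit << j for j, bit in enumerate(bits[i:i + 8])))
    return bytes(out)
```

Feeding the output of `encode` straight into `decode` returns the original bytes. A real tape adds noise, speed wobble, and volume drift, which is a large part of why cassette loading was so temperamental in practice.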

If a cassette could be used to store a program, so could a laser disc. Doing so had one big disadvantage compared to other storage methods, the very same that kept so many consumers away from the format: unless you could afford the complicated, specialized equipment needed to write to them yourself, discs had to be stamped out at a special factory with their contents already in place, and afterward those contents could only be read, not altered. But the upside… oh, what an upside! A single laser-disc side may have been good for only about 30 minutes of analog video, but could store about 1 to 1.5 GB of digital computer code or data. The possibility of so much storage required a major adjustment of the scale of one’s thinking; articles even in hardcore magazines like Byte that published the figure had to include a footnote explaining what a gigabyte actually was.

Various companies initiated programs in the wake of the laser disc’s debut to adapt the technology to computers, resulting in a plethora of incompatible media and players. Edward Rothchild wrote in Byte in March of 1983 that “discs are being made now in 12- and 14-inch diameters, with 8-, 5 1/4-, 3-, and possibly 2-inch discs likely in the near future.”

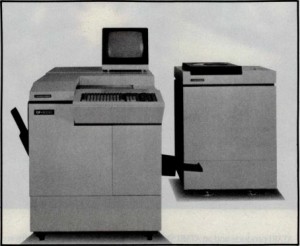

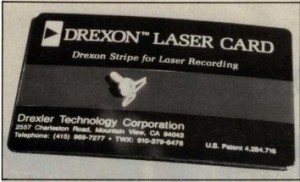

The Toshiba DF-2000, a typically elaborate optical-storage-based institutional archiving system of the 1980s

Others moved beyond discs entirely to try cards, slides, even optical versions of old-fashioned reel-to-reel or cassette tapes. Some of the ideas that swirled around were compelling enough that you have to wonder why they never took off. A company called Drexler came out with the Drexon Laser Card, a card the size of a driver’s license or credit card with a strip at the back that was optical rather than magnetic and could store some 2 MB of data. They anticipated PCs of the future being equipped with a little slot for reading the cards. Among other possibilities, a complete operating system could be installed on a card, taking the place of ROM chips or an operating system loaded from disk. Updates would become almost trivial; the cards were cheap and easy to manufacture, and the end user would need only swap the new card for the old to “install” the new operating system. Others anticipated Laser Cards becoming personal identification cards, with everything anyone could need to know about you, from citizenship status to credit rating, right there on the optical strip, a helpful boon indeed in the much less interconnected world of the early 1980s. (From the department of things that never change: the privacy concerns raised by such a scheme were generally glossed over or ignored.)

Some of these new technologies (the Laser Card, alas, not among them) did end up living on for quite some years. Optical storage was ideal for large, static databases like public records, especially in institutions that could afford the technology needed to create the discs as well as read them. IBM and others who served the institutional-computing market therefore came out with various products for this purpose, some of which persist to this day. In the world of PCs, however, progress was slow. It could be a bit hard to say what all that storage might actually be good for on a machine like, say, an Apple II. Today we fill our CDs and DVDs mostly with graphics and sound resources, but if you’ve seen the Apple II screenshots that litter this blog you know that home computers just didn’t have the video (or audio) hardware to make much direct use of such assets. Nor could they manipulate more than the most minuscule chunk of the laser disc’s cavernous capacity; connecting an Apple II to optical mass storage would be like trying to fill the family cat’s water bowl with a high-pressure fire hose. Optical discs therefore took a long time to arrive on PCs as a data-storage medium. When they did, they piggybacked not on the laser disc but on the newer, more successful format of the audio CD. The so-called “High Sierra” standard for the storage of data on CDs, named after the Lake Tahoe casino hotel where it was hashed out by a consortium of major industry players, was devised in 1985, accompanied by the first trickle of pioneering disc-housed encyclopedias and the like, and Microsoft hosted the first big conference devoted to CD-ROM in March of 1986. It took several more years to really catch on with the mass market, but by the early years of the 1990s CD-ROM was one of the key technologies at the heart of the “multimedia PC” boom. By this time processor speeds, memory sizes, and video and sound hardware had caught up and were able to make practical use of all that storage at last.

Still, even in the very early 1980s laser discs were not useless to even the most modest of 8-bit PCs. They could in fact be used with some effectiveness in a way that hewed much closer to their original intended purpose. Considered strictly as a video format, the most important property of the laser disc to understand beyond the upgrade in quality it represented over videotape is that it was a random-access medium. Videocassettes and all other, older mediums for film and video were by contrast linear formats. One could only unspool their contents sequentially; finding a given bit of content could only be accomplished via lots of tedious rewinding and fast forwarding. But with a laser disc one could jump to any scene, any frame, immediately; freeze a frame on the screen; advance forward or backward frame by frame or at any speed desired. The soundtrack could be similarly manipulated. This raised the possibility of a new generation of interactive video, which could be controlled by a computer as cheap and common as an Apple II or TRS-80. After all, all the computer had to do was issue commands to the player. All of the work of displaying the video on the screen, so far beyond the capabilities of any extant computer’s graphics hardware, was neatly sidestepped. For certain applications at least it really did feel like leapfrogging about ten years of slow technological progress. By manipulating laser-disc players, computers could deliver graphics and sound that they wouldn’t be able to produce natively until the next decade.
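The division of labor described here, with the computer sending a few short commands and the player doing all of the picture work, can be sketched in a few lines. Everything below is hypothetical: `SimulatedPlayer`, its method names, and `show_clip` are invented for illustration and do not correspond to any real player's command set. The frame count assumes roughly 30 frames per second over a 30-minute side.

```python
# Assumed for illustration: 30 minutes per side at about 30 frames per second.
FRAMES_PER_SIDE = 30 * 60 * 30


class SimulatedPlayer:
    """A stand-in for a laser-disc player; the commands are hypothetical."""

    def __init__(self, total_frames=FRAMES_PER_SIDE):
        self.total_frames = total_frames
        self.frame = 0  # the frame currently frozen on screen

    def seek(self, frame):
        """Jump straight to a frame, skipping everything in between."""
        if not 0 <= frame < self.total_frames:
            raise ValueError(f"frame {frame} is not on this disc side")
        self.frame = frame

    def step(self, delta=1):
        """Move forward or backward frame by frame, clamped to the disc."""
        self.frame = max(0, min(self.total_frames - 1, self.frame + delta))

    def play_to(self, last_frame):
        """Play forward to last_frame and freeze there.

        Returns the number of frames shown, counting both endpoints.
        """
        if last_frame < self.frame:
            raise ValueError("play_to only runs forward")
        shown = last_frame - self.frame + 1
        self.seek(last_frame)
        return shown


def show_clip(player, first_frame, last_frame):
    """The host computer's whole job: two commands and no pixel work."""
    player.seek(first_frame)
    return player.play_to(last_frame)
```

A call such as `show_clip(SimulatedPlayer(), 12000, 12299)` stands for ten seconds of full-motion video under these assumptions, which the host requests without ever touching a pixel. On a linear medium like tape, the same request would first mean winding past everything that came before the clip.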

The people who worked on the DiscoVision project were not blind to the potential here. Well before the laser disc became widely available to consumers in 1980 they were already making available pre-release and industrial-grade models to various technology companies and research institutions. These were used for the occasional showcase, such as the exhibition at Chicago’s Museum of Science and Industry in 1979 which let visitors pull up an image of the front page of any issue of the Chicago Tribune ever published. Various companies continued to offer throughout the 1980s pricey professional-level laser-disc setups that came equipped with a CPU and a modest amount of RAM. These could take instructions from their controlling computers and talk back to them as well: telling what frame was currently playing, notifying the host when a particular snippet was finished, etc. The host computer could even load a simple program into the player’s memory and let it run unattended. Consumer-grade devices were more limited, but virtually all did come equipped with one key feature: a remote-control sensor, which could be re-purposed to let a computer control the player. Such control was more limited than was possible with the more expensive players, since the player had no way of talking back. Still, it was intriguing stuff. Magazines like Byte and Creative Computing started publishing schematics and software to let home users take control of their shiny new laser-disc players just months after the devices started becoming available to purchase in the first place. But, given all of the complications and the need to shoot video as well as write code and hack hardware to really create something, much of the most interesting work with interactive video was done by larger institutions. Consider, for example, the work done by the American Heart Association’s Advanced Technology Development Division.

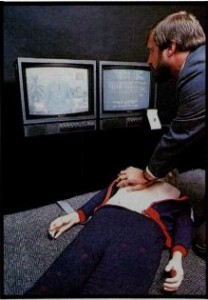

The AHA was eager to find a way to increase the quantity and quality of CPR training in the United States. They had a very good reason for doing so: in 1980 it was estimated that an American stricken with a sudden heart attack faced odds of 18 to 1 against there being someone on hand who could use CPR to save her life. Yet CPR training from a human instructor is logistically complicated and expensive. Many small-town governments and/or hospitals simply couldn’t manage to provide it. David Hon of the AHA believed that interactive video could provide the answer. The system his research group developed consisted of an Apple II interfaced to a laser-disc player as well as a mannequin equipped with a variety of sensors. An onscreen instructor taught the techniques of CPR step by step. After each step the system quizzed the student on what she had just learned; she could select her answers by touching the screen with a light pen. It then let her try out her technique on the mannequin until she had it down. The climax of the program came with a simulation of an actual cardiac emergency, complete with video and audio, explicitly designed to be exciting and dramatic. Hon:

We had learned something from games like Space Invaders: if you design a computer-based system in such a way that people know the difference between winning and losing, virtually anyone will jump in and try to win. Saving a life is a big victory and a big incentive. We were sure that if we could build a system that was easy to use and engaging trainees would use it and learn from it willingly.

The trainee’s “coach” provides instruction and encouragement on the left monitor; the right shows the subject’s vital signs as the simulation runs

At a cost of about $15,000 per portable system, the scheme turned out to be a big success, and probably saved more than a few lives.

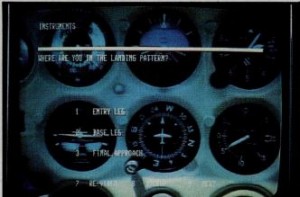

One limitation of many early implementations of interactive video like this was the fact that the computer controller and the laser disc itself each had its own video display, with no way to mix the two on one screen, as you can clearly see in the photos above. In time, however, engineers developed the genlock, a piece of hardware which allowed a computer to overlay its own signal onto a video display. How might this be useful? Well, consider the very simple case of an educational game which quizzes children on geography. The computer could play some looping video associated with a given country from the laser disc, while asking the player what country is being shown in text generated by the computer. Once the player answers, more text could be generated telling whether she got it right or not. Yet many saw even this scenario as representing the merest shadow of interactive video’s real potential. A group at the University of Nebraska developed a flight-training system which helped train prospective pilots by combining video and audio from actual flights with textual quizzes asking, “What’s happening here?” or “What should be done next?” or “What do these instruments seem to indicate?”
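The geography quiz mentioned above makes a handy illustration of how the two signal sources split the work once a genlock is in the picture. The sketch below is purely hypothetical: the clip table, the frame numbers, and the `quiz_round` function are invented, and the "player" and "overlay" entries simply record what would be sent to the disc player and what the computer would draw over the video.

```python
# Hypothetical clip table: country -> (first_frame, last_frame) on the disc.
CLIPS = {
    "France": (1000, 1450),
    "Japan": (1451, 1900),
    "Brazil": (1901, 2400),
}


def quiz_round(country, answer):
    """Return (correct, actions) for one question of the quiz.

    Each action is a (destination, payload) pair: "player" entries are
    commands for the disc player, "overlay" entries are text the computer
    would superimpose on the video by way of the genlock.
    """
    first, last = CLIPS[country]
    actions = [
        ("player", f"loop {first}-{last}"),    # moving pictures from the disc
        ("overlay", "What country is this?"),  # text from the computer
    ]
    correct = answer.strip().lower() == country.lower()
    verdict = "Correct!" if correct else f"No, this is {country}."
    actions.append(("overlay", verdict))
    return correct, actions
```

The video itself never passes through the computer. It only chooses which frames to loop and what words to lay on top of them.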

Another University of Nebraska group developed a series of educational games meant to teach problem-solving to hearing-impaired children. They apparently played much like the full-motion-video adventure games of a decade later, combining video footage of real actors with puzzles and conversation menus to let the child find her own way through the story and solve the case.

The Minnesota Educational Computing Consortium (the same organization that distributed The Oregon Trail) developed a high-school economics course:

Three types of media are used in each session. A booklet introduces the lesson and directs the student to use the other pieces of equipment. At the same time, it provides space for note taking and record keeping. A microcomputer [an Apple II] contributes tutorial, drill, and practice dimensions to the lesson. And a videodisc player presents information, shows examples, and develops concepts which involve graphics or motion.

Apple built commands for controlling laser-disc players into their SuperPILOT programming language, a rival to BASIC designed specifically for use in schools.

There was a widespread sense among these experimenters that they were pioneering a new paradigm of education and of computing, even if they themselves couldn’t quite put their fingers on what it was, what it meant, or what it should be called. In March of 1976, an amazingly early date when laser discs existed merely as crude prototypes, Alfred M. Bork envisioned what laser discs could someday mean to educational computing in an article that reads like a dispatch from the future:

I envision that each disc will contain a complete multimedia teaching package. Thus, a particular disc might be an elaborate teaching sequence for physics, having on the disc the computer code for that sequence (including possible microcode to make the stand-alone system emulate the particular machine that material was originally developed for), slides, audio messages, and video sequences of arbitrary length, all of these in many different segments. Thus, a teaching dialog stored on a videodisc would have full capability of handling very complex computer logic, and making sizable calculations, but it also could, at an appropriate point, show video sequences of arbitrary length or slides, or present audio messages. Another videodisc might have on it a complete language, such as APL, including a full multimedia course for learning APL interactively. Another might have relatively little logic, but very large numbers of slides in connection with an art-history or anatomy course. For the first time control of all the important audiovisual media would be with the student. The inflexibility of current film and video systems could be overcome too, because some videodiscs might have on them simply nothing but a series of film clips, with the logic for students to pick which ones they wanted to see at a particular time.

Bork uses a critical word in his first sentence above, possibly for the first time in relation to computing: “multimedia.” Certainly it’s a word that wouldn’t become commonplace until many years after Bork wrote this passage. Tony Feldman provided perhaps the most workable and elegant definition in 1994: “[Multimedia is] a method of designing and integrating computer technologies on a single platform that enables the end user to input, create, manipulate, and output text, graphics, audio, and video utilizing a single user interface.” This new paradigm of multimedia computing is key to almost all of the transformations that computers have made in people’s everyday lives in the thirty years that have passed since the pioneering experiments I just described. The ability to play back, catalog, combine, and transform various types of media, many or most of them sourced from the external world rather than being generated within the computer itself, is the bedrock at the root of the World Wide Web, of your iPod and iPhone and iPad (or equivalent). Computers today can manipulate all of that media internally, with no need for the kludgy plumbing together of disparate devices that marks these early experiments, but the transformative nature of the concept itself remains. With these experiments with laser-disc-enabled interactive video we see the beginning of the end of the old analog world of solid-state electronics, to be superseded by a digital world of smart, programmable media devices. That, much more than gigabytes of storage, is the real legacy of DiscoVision.

But of course these early experiments were just that, institutional initiatives seen by very few. There simply weren’t enough people with laser-disc players wired to their PCs for a real commercial market to develop. The process of getting a multimedia-computing setup working in the home was just too expensive and convoluted. It would be six or seven more years before “multimedia” would become the buzzword of the age — only to be quickly replaced in the public’s imagination by the World Wide Web, that further advance that multimedia enabled.

In the meantime, most people of the early 1980s had their first experience with this new paradigm of computing outside the home, in the form of — what else? — a game. We’ll talk about it next time.

(The most important sources for this article were: Byte magazines from June 1982, March 1983, and October 1984; Creative Computing from March 1976 and January 1982; Multimedia, a book by Tony Feldman; Interactive Video, a volume from 1989 in The Educational Technology Anthology Series; and various laser-disc-enthusiast sites on the Internet. I also lifted some of these ideas from my own book about the Amiga, The Future Was Here. The lovely picture that begins this article was on the cover of the June 1982 Byte. All of the other images were also taken from the various magazines listed above.)

Felix

May 28, 2013 at 3:13 pm

That’s a fascinating topic. A friend of mine is a big fan of laser disc (and other retro technology), and I have to agree it’s fascinating what people back then could do with limited means, simply because they put their minds to it.

Also, you have given me the answer to a question that has been bothering me, namely why did people want video and audio in the web browser so much? The answer, which was eluding me, is that it makes sense. Text + pictures + audio/video + interactivity — all the media in one — is what we recreated again and again, as CD encyclopedias, Hypercard, Powerpoint…

Jimmy Maher

May 29, 2013 at 5:55 am

Yeah. It’s taken for granted today, but this was a big deal back in the day. Multimedia computing changed the world, to such an extent that it can be difficult for us living in that changed world and surrounded by it every day to remark its presence and influence.

S. John Ross

May 29, 2013 at 12:17 am

“…but we can fill in all of the missing pieces with determined imaginings of what we could someday actually be getting on those disks.”

For my own perspective, I feel this sentence would have been more universally truthful had it ended at the word “imaginings,” since that covers both those that did … the thing you described … and those that did (and do) play these games as a willful and joyful exercise in the willing suspension of disbelief, without depending (or even considering) some unspoken promise of what technology might otherwise offer.

Jimmy Maher

May 29, 2013 at 5:52 am

On the one hand I hear you, but on the other I do think that most of the games released up to 1983 can be quite hard to get into today — even (especially?) the ones that are doing something kind of fascinating, like The Prisoner. But as we move into the middle years of the decade games are quickly becoming richer, deeper, and friendlier. (I kind of see the first games from EA and Infocom’s stable of 1983 as harbingers of this change.) They’re still going to be a hard sell to most people, but with these titles I’m more comfortable with your version of the statement.

Gilles Duchesne

May 29, 2013 at 2:13 pm

(Reads the article.)

“Hmm, I wonder if this’ll lead to…”

(Checks the tags.)

“Yup.”

Rowan Lipkovits

May 30, 2013 at 5:55 pm

The corollary to this fact is that a medium developed for the storage of one sort of data can be repurposed to store something else. Microcomputers in particular already had quite a tradition of doing just that even in 1983.

Then you go on to discuss audiocassette storage, the “winner” in this generation — but at this point the industry also had the delightful curios of programs disseminated through the other standard audio storage means such as records and flexi-discs and indeed of course also radio transmission.

Admittedly you could not fit video images into such formats, at least not more than a second or two of very low-resolution images (… which raises the spectre of videocassette games, a wart on the back of the laserdisc games.)

Jimmy Maher

May 31, 2013 at 8:40 am

The first draft of this article actually included a mention of the use of radio to transmit programs in Europe (http://arstechnica.com/business/2012/08/experiments-in-airborne-basic-buzzing-computer-code-over-fm-radio/). But it felt like kind of a forced digression, and so I nixed it…

Ron Newcomb

June 2, 2013 at 2:28 am

So that’s what happened. Now I know. thx

Cargo Cult

June 3, 2013 at 7:59 am

I imagine you’ve seen it already, but if not – you might be interested in the BBC Domesday Project – a mid-eighties multimedia project to mark the 900th anniversary of the original Domesday Book.

Involving laserdiscs, BBC Micros and custom interface hardware – the thing had up to a million people involved in its production, but became effectively unreadable just a few years later.

Jimmy Maher

June 4, 2013 at 5:45 am

Yeah, I was aware of that. Amazing, no? I think it’s likely to find a place here a bit later, and in the context of the parallel history of British computing I’ve been writing.

DZ-Jay

February 18, 2017 at 7:19 pm

:: serious cinestas and home-theater buffs

I think you meant “cineasts” or “cinéastes”.

Jimmy Maher

February 19, 2017 at 8:09 am

God, what a mess this article was. Cleaned it up a bit. Thanks for making me look at it again. Did I actually read the stuff I published back in the day?

Ben

January 15, 2021 at 7:23 pm

Phillips -> Philips (twice)

CD-ROMl -> CD-ROM

sensor,which -> sensor, which

work dome -> work done

to be to be -> to be

Jimmy Maher

January 17, 2021 at 10:15 am

Thanks!

Will Moczarski

September 12, 2021 at 11:54 am

the work dome

-> Should this be the work done? Or is it a dome I’m unaware of? Have I missed another Domesday? ;-)

Jimmy Maher

September 13, 2021 at 9:40 am

:) Thanks!