Come August of 1984, Microsoft Windows had missed its originally announced release date by four months and was still nowhere near ready to go. That month, IBM released the PC/AT, a new model of their personal computer based around the more powerful Intel 80286 processor. Amidst the hoopla over that event, they invited Microsoft and other prominent industry players to a sneak preview of something called TopView, and Bill Gates got an answer at last to the fraught question of why IBM had been so uninterested in his own company’s Windows operating environment.

TopView had much in common with Windows and the many other attempts around the industry, whether already on the market or still in the works, to build a more flexible and user-friendly operating environment upon the foundation of MS-DOS. Like Windows and so many of its peers, it would offer multitasking, along with a system of device drivers to isolate applications from the underlying hardware and a toolkit for application developers that would allow them to craft software with a consistent look and feel. Yet one difference made TopView stand out from the pack — and not necessarily in a good way. While it did allow the use of a mouse and offered windows of a sort, it ran in text rather than graphics mode. The end result was a long, long way from the Macintosh-inspired ideal of intuitiveness and attractiveness which Microsoft dreamed of reaching with their own GUI environment.

At the interface level, TopView resembled something IBM might have produced for the mainframe market back in the day more than it resembled Windows and the other microcomputer GUI environments that were its ostensible competitors. Like IBM’s mainframe system software, it was a little stodgy, not terribly pretty, and not notably forgiving toward users who hadn’t done their homework, yet had a lot to offer under the hood to anyone who could accept its way of doing business. It was a tool that seemed designed to court power users and office IT administrators, even as its competitors advertised their ease of use to executives and secretaries.

Within its paradigm, though, TopView was a more impressive product than it’s generally given credit for being even today. It sported, for example, true preemptive multitasking[1] months before the arrival of the Commodore Amiga, the first personal computer to ship with such a feature right out of the box. Even ill-behaved vanilla MS-DOS applications could be coerced into multitasking under TopView. Indeed, while IBM hoped, like everyone else making extended operating environments, to tempt third-party programmers into making native applications just for them, they were willing to go to heroic lengths to get existing MS-DOS applications working inside TopView in the meantime. They provided special specifications files, known as “Program Information Files,” or PIFs, for virtually all popular MS-DOS software. These told TopView exactly how and when their subjects would try to access the computer’s hardware, whereupon TopView would step in to process those calls itself, transparently to the ill-behaved application. It was an admittedly brittle solution to a problem which seemed to have no unadulteratedly good ones; it required IBM to research the technical underpinnings of every major new piece of MS-DOS software that entered the market in order to keep up with an endless game of whack-a-mole that was exhausting just to think about. Still, it was presumably better than punting on the whole problem of MS-DOS compatibility, as Visi On had done. Whatever else one could say about IBM’s approach to extending MS-DOS, they had apparently learned at least a little something from the travails of their competitors.

Even the decision to run in character mode sounds far more defensible when you consider that up to two-thirds of MS-DOS computers at the time of TopView’s release were equipped only with a monochrome screen capable of no other mode.

[1] This is perhaps a good point to introduce a quick primer on multitasking techniques for those of you who may not be familiar with their vagaries. The first thing to understand is that multitasking during this period was fundamentally an illusion. The CPUs in the computers of this era were actually only capable of doing one task at a time. Multitasking was the art of switching the CPU’s attention between tasks quickly enough that several things seemed to be happening at once, that several applications seemed to be running at once. There are two basic approaches to creating this illusory but hugely useful form of multitasking.

Cooperative multitasking (found in systems like the Apple Lisa, the Apple Macintosh between 1987’s System 5 and the introduction of OS X in 2001, and early versions of Microsoft Windows) is so named because it relies on the cooperation of the applications themselves. A well-behaved, well-programmed application is expected to periodically relinquish control of the computer voluntarily to the operating system, which can then see if any of its own tasks need to be completed or any other applications have something to do. A cooperative-multitasking operating system is easier to program and less resource-intensive than the alternative, but its most important drawback becomes clear to the user as soon as she tries to use an application that isn’t terribly well-behaved or well-programmed. In particular, an application that goes into an infinite loop of some sort, a very common sort of bug, will lock up the whole computer, bringing the whole operating system down with it.

Preemptive multitasking (found in the Commodore Amiga, Mac OS X, Unix and Linux, and later versions of Microsoft Windows) is so named because it gives the operating system the authority to wrest control from, or preempt, individual applications. Thus even a looping program can only slow down the system as a whole, not kill it entirely. For this reason, it’s by far the more desirable approach to multitasking, but also the more complicated to implement.
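The difference between the two approaches can be made concrete with a toy simulation in Python. This is a sketch under loud assumptions: the functions `polite`, `hog`, `cooperative`, and `preemptive` are hypothetical, each "application" is modeled as a generator whose every step is one unit of CPU work, and real preemption depends on a hardware timer interrupt, which this model only imitates by having the scheduler count units of work. It is not how TopView, the Amiga, or any other real system was implemented.

```python
from collections import deque
from typing import Iterator, List

# Toy model (hypothetical): each "application" is a generator, and each
# next() call is one unit of CPU work. Yielding True means "I'm willing to
# give up the CPU now"; yielding False means "I'm still busy".
Task = Iterator[bool]


def polite(name: str, steps: int, log: List[str]) -> Task:
    """A well-behaved task: does one unit of work, then offers to yield."""
    for i in range(steps):
        log.append(f"{name}{i}")
        yield True


def hog(name: str, log: List[str]) -> Task:
    """An ill-behaved task stuck in an infinite loop; it never offers to yield."""
    while True:
        log.append(name)
        yield False


def cooperative(tasks: List[Task], budget: int) -> bool:
    """Round-robin scheduling that switches only when a task volunteers.

    `budget` caps the total units of work simulated. Returns False if the
    budget runs out before every task finishes (with a looping task, that
    means the machine has frozen); returns True if every task finished.
    """
    queue = deque(tasks)
    while queue:
        task = queue.popleft()
        while True:
            if budget == 0:
                return False  # nobody else will ever get a turn
            budget -= 1
            try:
                if next(task):  # the task voluntarily relinquished control
                    queue.append(task)
                    break
            except StopIteration:
                break  # the task finished; drop it from the queue
    return True


def preemptive(tasks: List[Task], budget: int, quantum: int = 2) -> None:
    """Round-robin scheduling that forcibly switches after `quantum` units.

    The value a task yields is ignored: the scheduler, standing in for a
    timer interrupt, takes the CPU back whether or not the task offers it.
    """
    queue = deque(tasks)
    while queue and budget > 0:
        task = queue.popleft()
        for _ in range(quantum):
            if budget == 0:
                break  # out of budget: the simulation ends
            budget -= 1
            try:
                next(task)
            except StopIteration:
                break  # the task finished; don't requeue it
        else:
            queue.append(task)  # time slice used up: preempt and requeue


log: List[str] = []
cooperative([hog("H", log), polite("p", 3, log)], budget=20)
print("p0" in log)  # False: the hog never let the polite task run

log = []
preemptive([hog("H", log), polite("p", 3, log)], budget=20)
print([x for x in log if x.startswith("p")])  # ['p0', 'p1', 'p2']
```

In both runs the looping `hog` is scheduled first. Under the cooperative scheduler it monopolizes the simulated CPU until the budget runs out, which stands in for a frozen machine; under the preemptive scheduler it merely shares time, and the polite task still completes all three of its steps.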

Unfortunately, TopView failed to overcome many of the other issues that dogged its competitors. Having been so self-consciously paired with the pricey PC/AT, it was still a bit out in front of the sweet spot of hardware requirements, requiring a 512 K machine to do much of anything at all. And it was still constrained by the 640 K barrier, that most troublesome of all aspects of MS-DOS’s primitiveness. With hacks to get around the barrier still in their relative infancy, TopView didn’t even try to support more memory, and this inevitably limited the appeal of its multitasking capability. With applications continuing to grow in complexity and to gobble up ever more memory, it wouldn’t be long before 640 K would no longer suffice to run even two pieces of heavyweight business software at the same time, especially once one had factored in the overhead of the operating environment itself.

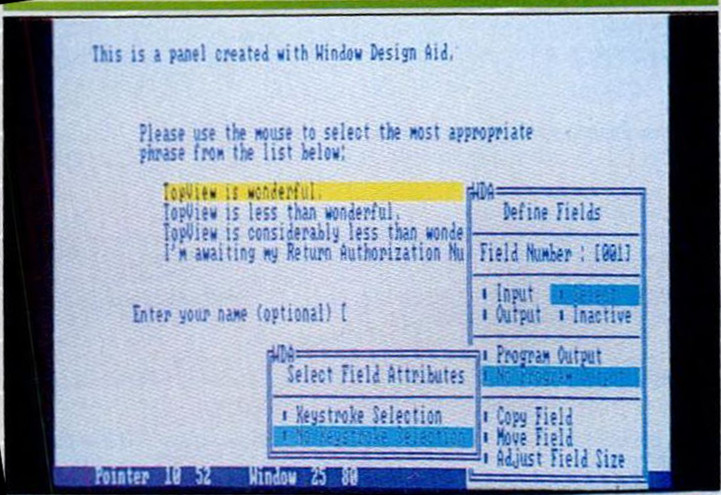

A Quick Tour of TopView

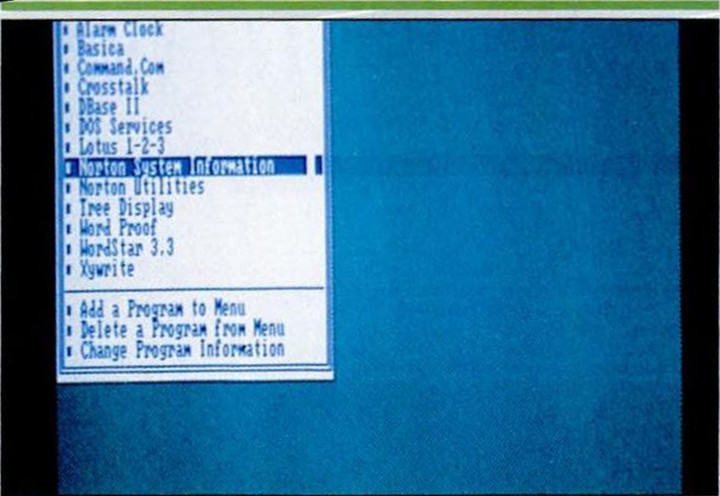

While it isn’t technically a graphical user interface, TopView shares many features with contemporaneous products like Visi On and Microsoft Windows. Here we’re choosing an application to launch from a list of those that are installed. The little bullet to the left of each name on the list is important; it indicates that we have enough memory free to run that particular application. With no more than 640 K available in this multitasking environment and no virtual-memory capability, memory usage is a constant concern.

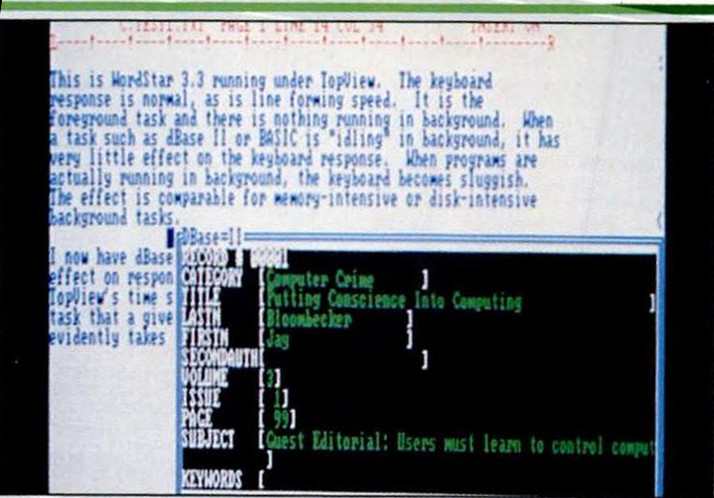

Here we see TopView’s multitasking capabilities. We’re running the WordStar word processor and the dBase database, two of the most popular MS-DOS business applications, at the same time. Note the “windows” drawn purely out of text characters. Preemptive multitasking of the kind TopView is doing here wouldn’t come to native applications on Microsoft’s consumer Windows line until Windows 95, and wouldn’t reach the Macintosh until OS X was released in 2001.

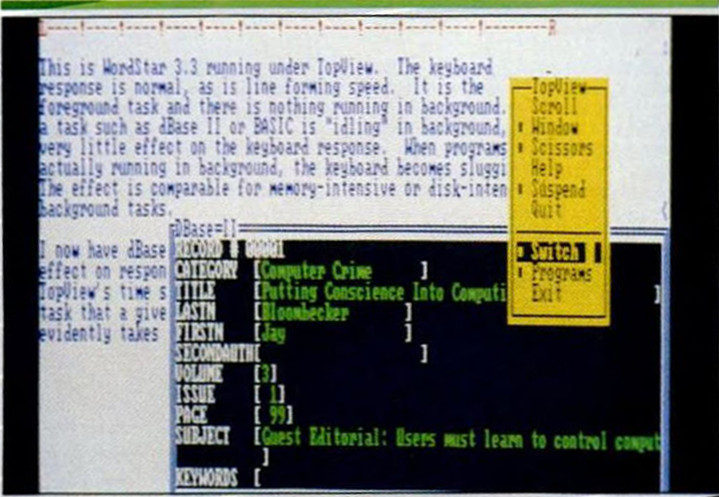

We bring up a TopView context window by hitting the third (yes, third) button on IBM’s official mouse. Here we can switch between tasks, adjust window sizes and positions (albeit somewhat awkwardly, given the limitations of pure text), and even cut and paste between many MS-DOS applications that never anticipated the need for such a function. No other operating environment would ever jump through more hoops to make MS-DOS applications work as if they had been designed for a multitasking, windowed paradigm from the start.

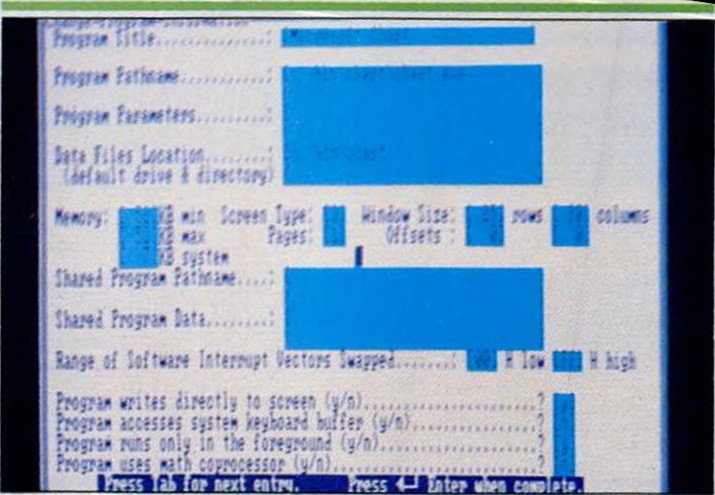

Some of those hoops are seen above. Users make MS-DOS applications run inside TopView by defining a range of parameters explaining just what the application in question tries to do and how it does it. Thankfully, pre-made definition files for a huge range of popular software shipped with the environment. Brittle as heck though this solution might be, you certainly can’t fault IBM’s determination. Microsoft would adopt TopView’s “Program Information File,” or PIF, for use in Windows as well. It would thereby become the one enduring technical legacy of TopView, persisting in Windows for years after the IBM product was discontinued in 1988.

One of the hidden innovations of TopView is its “Window Design Aid,” which lets programmers of native applications define their interface visually, then generates the appropriate code to create it. Such visually oriented time-savers wouldn’t become commonplace programming aids for another decade at least. It all speaks to a product that’s more visionary than its reputation (and its complete lack of graphics) might suggest.

TopView shipped in March of 1985: later than planned, but nowhere near as late as Microsoft Windows, which was now almost a full year behind schedule. It met a divided reception. Some pundits called it the most important product to come out of IBM since the release of the original IBM PC, while others dismissed it as a bloated white elephant that hadn’t a prayer of winning mainstream acceptance, not even with the IBM logo on its box and a surprisingly cheap suggested list price of just $149. For many IBM watchers, not least those watching with concern inside Microsoft, TopView was most interesting not so much as a piece of technology as a sign of IBM’s strategic direction. “TopView is the subject of fevered whispers throughout the computer industry not because of what it does but because of what it means,” wrote PC Magazine. It had “sent shivers through the PC universe and generated watchfulness” and “possibly even paranoia. Many experts think, and some fear, that TopView is the first step in IBM’s lowering of the skirt over the PC — the beginning of a closed, proprietary operating system.”

Many did indeed see TopView as a sign that IBM was hoping to return to the old System/360 model of computing, seizing complete control of the personal-computing market by cutting Microsoft out of the system-software side. According to this point of view, the MS-DOS compatibility IBM had bent over backward to build into TopView needed last only as long as it took third-party developers to write native TopView applications. Once a critical mass of same had been built up, it shouldn’t be that difficult to decouple TopView from MS-DOS entirely, turning it into a complete, self-standing operating system in its own right. For Bill Gates, this was a true nightmare scenario, one that could mean the end of his business.

But such worries about a TopView-dominated future, to whatever extent he had them, proved unfounded. A power-user product with mostly hacker appeal in a market that revolved around the business user just trying to get her work done, TopView quickly fizzled into irrelevance, providing in the process an early warning sign to IBM, should they choose to heed it, that their omnipotence in the microcomputer market wasn’t as complete as it had been for so long in the mainframe market. IBM, a company that didn’t abandon products easily, wouldn’t officially discontinue TopView until 1988. By that time, though, the most common reaction to the news would be either “Geez, that old thing was still around?” or, more likely, “What’s TopView?”

Of course, all of this was the best possible news from Microsoft’s perspective. IBM still needed the MS-DOS that Microsoft provided as much as ever, and, whatever else happened, TopView wasn’t going to be the as-yet-unreleased Windows’s undoing.

In the meantime, Bill Gates had Windows itself to worry about, and that was becoming more than enough to contend with. Beginning in February of 1984, when the planned Windows release date was given a modest push from April to May of that year, Microsoft announced delay after delay after delay. The constant postponements made the project an industry laughingstock. It became the most prominent target for a derisive new buzzword that had been coined by a software developer named Ann Winblad in 1983: “vaporware.”

Inside Microsoft, Windows’s reputation was little better. As 1984 wore on, the project seemed to be regressing rather than progressing, becoming a more and more ramshackle affair that ran more and more poorly. Microsoft’s own application developers kicked and screamed when asked to consider writing something for Windows; they all wanted to write for the sexy Macintosh.

Neil Konzen, a Microsoft programmer who had been working with the Macintosh since almost two years before that machine’s release, was asked to take a hard look at the state of Windows in mid-1984. He told Bill Gates that it was “a piece of crap,” “a total disaster.” Partially in response to that verdict, Gates pushed through a corporate reorganization, placing Steve Ballmer, his most trusted lieutenant, in charge of system software and thus of Windows. He reportedly told Ballmer to get Windows done or else find himself a job at another company. And in corporate America, of course, shit rolls downhill; Ballmer started burning through Windows project managers at a prodigious pace. The project acquired a reputation inside Microsoft as an assignment to be avoided at all costs, a place where promising careers went to die. Observers inside and outside the project’s orbit were all left with the same question: just what the hell was preventing all these smart people from getting Windows done?

The fact was that Windows was by far the biggest thing Microsoft had ever attempted from the standpoint of software engineering, and it exposed the limitations of the development methodology that had gotten them this far. Ever since the days when Gates himself, together with Paul Allen, had cranked out their very first product, a version of BASIC to be distributed on paper tape for the Altair kit computer, Microsoft had functioned as a nested set of cults of personality, each project driven by, if not belonging solely to, a single smart hacker who called all the shots. For some time now, the cracks in this edifice had been showing; even when working on the original IBM PC, Gates was reportedly shocked and nonplussed at the more structured approach to project management that was the norm at IBM, a company that had already brought to fruition some of the most ambitious projects in the history of the computer industry. And IBM’s project managers felt the same way upon encountering Microsoft. “They were just a bunch of nerds, just kids,” remembers one. “They had no test suites, nothing.” Or, as another puts it:

They had a model where they just totally forgot about being efficient. That blew our minds. There we were watching all of these software tools that were supposed to work together being built by totally independent units, and nobody was talking to each other. They didn’t use any of each other’s code and they didn’t share anything.

With Windows, the freelancing approach to software development finally revealed itself to be clearly, undeniably untenable. Scott MacGregor, the recent arrival from Xerox who was Windows’s chief technical architect in 1984, remembers his frustration with this hugely successful young company — one on whose products many of the Fortune 500 elite of the business world were now dependent — that persisted in making important technical decisions on the basis of its employees’ individual whims:

I don’t think Bill understood the magnitude of doing a project such as Windows. All the projects Bill had ever worked on could be done in a week or a weekend by one or two different people. That’s a very different kind of project than one which takes multiple people more than a year to do.

I don’t think of Bill as having a lot of formal management skills, not in those days. He was kind of weak on managing people, so there was a certain kind of person who would do well in the environment. There were a lot of people at that time with no people skills whatsoever, people who were absolutely incompetent at managing people. It was the Peter Principle: very successful technical people would get promoted to management roles. You’d get thirty people reporting to one guy who was not on speaking terms with the rest of the group, which is inconceivable.

One has to suspect that MacGregor had one particular bête noir in mind when talking about his “certain kind of person.” In the eyes of MacGregor and many others inside Microsoft, Steve Ballmer combined most of Bill Gates’s bad qualities with none of his good ones. Like Gates, he had a management style that often relied on browbeating, but he lacked the technical chops to back it up. He was a yes man in a culture that didn’t suffer fools gladly, a would-be motivational speaker who too often failed to motivate, the kind of fellow who constantly talked at you rather than with you. One telling anecdote has him visiting the beleaguered Windows team to deliver the sort of pep talk one might give to a football team at halftime, complete with shouts and fist pumps. He was greeted by… laughter. “You don’t believe in this?” Ballmer asked, more than a little taken aback. The team just stood there uncomfortably, uncertain how to respond to a man that MacGregor and many of the rest of them considered almost a buffoon, a “non-tech cheerleader.”

And yet MacGregor had problems of his own in herding the programmers who were expected to implement his grand technical vision. Many of them saw said vision as an overly slavish imitation of the Xerox Star office system, whose windowing system he had previously designed. He seemed willfully determined to ignore the further GUI innovations of the Macintosh, a machine with which much of Microsoft, not least Bill Gates, was deeply enamored. The most irritating aspect of his stubbornness was his insistence that Windows should employ only “tiled windows” that always stretched across the full width of the screen and couldn’t overlap one another or be dragged about freely like their equivalents on the Macintosh.

All of this created a great deal of discord inside the project, especially given that much of MacGregor’s own code allegedly didn’t work all that well. Eventually Gates and Ballmer brought in Neil Konzen to rework much of MacGregor’s code, usurping much of his authority in the process. As Windows began to slip through MacGregor’s fingers, it began to resemble the Macintosh more and more; Konzen was so intimately familiar with Apple’s dream machine that Steve Jobs had once personally tried to recruit him. According to Bob Belleville, another programmer on the Windows team, Konzen gave to Windows “the same internal structure” as the Macintosh operating system; “in fact, some of the same errors were carried across.” Unfortunately, the tiled-windows scheme was judged to be too deeply embedded by this point to change.

In October of 1984, Microsoft announced that Windows wouldn’t ship until June of 1985. Gates sent Ballmer on an “apology tour” of the technology press, where Ballmer prostrated himself before journalist after journalist. It didn’t seem to help much; the press continued to pile on with glee. Stewart Alsop II, the well-respected editor of InfoWorld magazine, wrote that “buyers probably believe the new delivery date for Windows with the same fervor that they believe in Santa Claus.” Then he got downright nasty: “If you’ve got something to sell, deliver. Otherwise, see to the business of creating the product instead of hawking vaporware.”

If the technology press was annoyed with Microsoft’s constant delays and prevarications, the third parties who had decided or been pressured into supporting Windows were getting even more impatient. One by one, the clone makers who had agreed to ship Windows with their machines backed out of their deals. Third-party software developers, meanwhile, kept getting different versions of the same letter from Microsoft: “We’ve taken the wrong approach, so everything you’ve done you need to trash and start over.” They too started dropping off the Windows bandwagon one by one. The most painful defection of all was that of Lotus, who now reneged on their promise of a Windows version of Lotus 1-2-3. The latter was the most ubiquitous single software product in corporate America, excepting only MS-DOS, and Microsoft had believed that a Windows Lotus 1-2-3 would all but guarantee their new GUI environment’s success. The question now was whether its absence would have the opposite effect.

In January of 1985, Steve Ballmer brought in Microsoft’s fifth Windows project manager: Tandy Trower, a three-year veteran with the company who had recently been managing Microsoft BASIC. Trower was keenly aware of Bill Gates’s displeasure at recent inroads being made into Microsoft’s traditional BASIC-using demographic by a new product called Turbo Pascal, from a new industry player called Borland. The Windows project’s reputation inside Microsoft was such that he initially assumed he was being set up to fail, thereby giving Gates an excuse to fire him. “Nobody wanted to touch Windows,” remembers Trower. “It was like the death project.”

Trower came in just as Scott MacGregor, the Xerox golden boy who had arrived amidst such high expectations a year and a half before, was leaving amidst the ongoing discord and frustration. Ballmer elected to replace MacGregor with… himself as Windows’s chief technical architect. Not only was he eminently unqualified for such a role, but the arrangement also placed Trower in the awkward position of having the same person as both his boss and his underling.

As it happened, though, there wasn’t a lot of need for new technical architecting. In that respect at least, Trower’s brief was simple. There were to be no new technical or philosophical directions explored, no more debates over the merits of tiled versus overlapping windows or any of the rest. The decisions that had already been made would remain made, for better or for worse. Trower was just to get ‘er done, thereby stemming the deluge of mocking press and keeping Ballmer from having to go on any more humiliating apology tours. He did an admirable job, all things considered, of bringing some sort of coherent project-management methodology to a group of people who desperately needed one.

What could get all too easily lost amidst all the mockery and all the very real faults of the Windows project as a functioning business unit was the sheer difficulty of building a GUI environment without abandoning the legacy of MS-DOS. Unlike Apple, Microsoft didn’t enjoy the luxury of starting with a clean slate; they had to keep one foot in the past as well as one in the future. Nor did they enjoy their competitor’s advantage of controlling the hardware on which their GUI environment must run. The open architecture of the IBM PC, combined with a market for clones that was by now absolutely exploding, meant that Microsoft was forced to contend with a crazy quilt of different hardware configurations. All those different video cards, printers, and memory configurations that could go into an MS-DOS machine required Microsoft to provide drivers for them, while all of the popular existing MS-DOS applications had at the very least to be launchable from Windows. Apple, by contrast, had been able to build the GUI environment of their dreams with no need to compromise with what had come before, and had released exactly two Macintosh models to date, with an architecture so closed that opening their cases required a special screwdriver only available to Authorized Apple Service Providers.

In the face of all the challenges, some thirty programmers under Trower “sweated blood trying to get this thing done,” as one of them later put it. It soon became clear that they weren’t going to make the June 1985 deadline (thus presumably disappointing those among Stewart Alsop’s readers who still believed in Santa Claus). Yet they did manage to move forward in far more orderly fashion than had been seen during all of the previous year. Microsoft was able to bring to the Comdex trade show in May of 1985 a version of Windows which looked far more complete and polished than anything they had shown before, and on June 28, 1985, a feature-complete “Preview Edition” was sent to many of the outside developers who Microsoft hoped would write applications for the new environment. But the official first commercial release of Windows, known as Windows 1.01, didn’t ship until November of 1985, timed to coincide with that fall’s Comdex show.

In marked contrast to the inescapable presence Windows had been at its first Comdex of two years before, the premiere of an actual shipping version of Windows that November was a strangely subdued affair. But then, the spirit of the times as well was now radically different. In the view of many pundits, the bloom was rather off the rose for GUIs in general. Certainly the GUI-mania of the Fall 1983 Comdex and Apple’s “1984” advertisement now seemed like the distant past. IBM’s pseudo-GUI TopView had already failed, as had Visi On, while the various other GUI products on offer for MS-DOS machines were at best struggling for marketplace acceptance. Even the Macintosh had fallen on hard times, such that many were questioning its very survival. Steve Jobs, the GUI’s foremost evangelist, had been ignominiously booted from Apple the previous summer — rendered, as the conventional wisdom would have it, a has-been at age thirty. Was the GUI itself doomed to suffer the same fate? What, asked the conventional-wisdom spouters, was really so bad about MS-DOS’s blinking command prompt? It was good enough to let corporate America get work done, and that was the important thing. Surely it wouldn’t be Windows, an industry laughingstock for the better part of two years now, that turned all this GUI hostility back in the market’s face. Windows was launching into a headwind fit to sink the Queen Mary.

It was a Microsoft public-relations specialist named Pam Edstrom who devised the perfect way of subverting the skepticism and even ridicule that was bound to accompany the belated launch of the computer industry’s most infamous example of vaporware to date. She did so by stealing a well-worn page from the playbook of media-savvy politicians and celebrities who have found themselves embroiled in controversy. How do you stop people making fun of you? Why, you beat them to the punch by making fun of yourself first.

Edstrom invited everybody who was anybody in technology to a “Microsoft Roast” that Comdex. The columnist John C. Dvorak became master of ceremonies, doing a credible job with a comedic monologue to open the affair. (Sample joke about the prematurely bald Ballmer: “When Windows was first announced, Ballmer still had hair!”) Gates and Ballmer themselves then took the stage, where Stewart Alsop presented them with an InfoWorld “Golden Vaporware Award.” The two main men of Microsoft then launched into a comedy routine of their own that was only occasionally cringe-worthy, playing on their established reputations as the software industry’s enfant terrible and his toothy-but-not-overly-bright guard dog. Gates said that Ballmer had wanted to cut features: “He came up with this idea that we could rename this thing Microsoft Window; we would have shipped that a long time ago.” Ballmer told how Gates had ordered him to “ship this thing before the snow falls, or you’ll end your career here doing Windows!”; the joke here was that in Seattle, where the two lived and worked, snow almost never falls. Come the finale, they sang “The Impossible Dream” together as a giant shopping cart containing the first 500 boxed copies of Windows rolled onto the stage amidst billows of dry ice.

All told, it was a rare display of self-deprecating humanity and showmanship from two people not much known for either. From a PR perspective, it was about the best lemonade Microsoft could possibly have made out of a lemon of a situation. The press was charmed enough to start writing about Windows in more cautiously positive terms than they had in a long, long time. “The future of integration [can] be perceived through Windows,” wrote PC World. Meanwhile Jim Seymour, another respected pundit, wrote a column for the next issue of PC Week that perfectly parroted the message Microsoft was trying to get across:

I am a Windows fan, not because of what it is today but what it almost certainly will become. I think developers who don’t build Windows compatibility into new products and new releases of successful products are crazy. The secret of Windows in its present state is how much it offers program developers. They don’t have to write screen drivers [or] printer drivers; they can offer their customers a kind of two-bit concurrency and data exchange.

The most telling aspect of even the most sympathetic early reviews is their future orientation: they always emphasize what Windows will become, not what it is, because what Windows actually was in November of 1985 was something highly problematic, if not utterly superfluous.

The litany of problems began with that same old GUI bugaboo: performance. Two years before, Bill Gates had promised an environment that would run on any IBM PC or clone with at least 192 K of memory. Technically speaking, Microsoft had come very close to meeting that target: Windows 1.01 would run even on the original IBM PC from 1981, as long as it had at least 256 K of memory. It didn’t even absolutely require a hard drive. But running and running well — or, perhaps better put, running usably — were two very different matters. Windows could run on a floppy-based system, noted PC Magazine dryly, “in the same sense that you can bail a swimming pool dry with a teaspoon.” To have a system that wasn’t so excruciatingly slow as to outweigh any possible benefit it might deliver, you really needed a hard drive, 640 K or more of memory, and an 80286 processor like that found in the IBM PC/AT. Even on a hot-rod machine like this, Windows was far from snappy. “Most people will say that any screen refresh that can be watched takes too long,” wrote PC Magazine. “Very little happens too quickly to see in Windows.” One of Microsoft’s own Windows programmers would later offer a still more candid assessment: even at this late date, he would say, “Windows was a pig,” the result of a project that had passed through too many hands and had too many square chunks of code hammered into too many round holes.

Subjectively, Windows felt like it had been designed and programmed by a group of people who had read a whole lot about the Macintosh but never actually seen or used one. “I use a Macintosh enough to know what a mouse-based point-and-click interface should feel like,” wrote John C. Dvorak after the goodwill engendered by the Microsoft Roast had faded. “Go play with a Mac and you’ll see what I mean. Windows is clunky by comparison. Very clunky.” This reputation for endemic clunkiness — for being a Chrysler minivan pitted against Apple’s fine-tuned Porsche of a GUI — would continue to dog Windows for decades to come. In this first release, it was driven home most of all by the weird and unsatisfying system of “tiled” windows.

All of which was a shame because in certain ways Windows was actually far more technically ambitious than the contemporary Macintosh. It offered a cooperative-multitasking system that, if not quite the preemptive multitasking of TopView or the new Commodore Amiga, was more than the single-tasking Mac could boast. It also offered a virtual-memory scheme which let the user run more applications than would fit inside 640 K. Additional RAM beyond the 640 K barrier or a hard drive, if either or both were present, could be used as swap space when the user tried to open more applications than there was room for in conventional memory. Windows would then automatically copy data back and forth between main memory and the swap space as needed in order to keep things running. The user was thus freed from having to constantly worry about her memory usage, as she did in TopView, although performance problems quickly reared their heads if she went too crazy. In that circumstance, “the thrashing as Windows alternately loads one application and then the other brings the machine to its knees,” wrote PC Magazine, describing another trait destined to remain a Windows totem for years to come.
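Why swapping degrades into thrashing can be shown with a toy model. This is a minimal sketch, not Windows 1.01's actual algorithm: it assumes conventional memory holds a fixed number of whole applications (`capacity`), and that the least-recently-used one is copied out to swap space whenever another must be brought in. The function name `count_loads` and the application names are hypothetical.

```python
from collections import OrderedDict


def count_loads(capacity, switches):
    """Count how often an application must be (re)loaded into conventional
    memory, given a fixed number of resident slots and LRU eviction.

    Toy model (an assumption, not Microsoft's design): each application fills
    exactly one slot, and `switches` is the sequence of applications the user
    brings to the foreground.
    """
    resident = OrderedDict()  # application -> None, least recently used first
    loads = 0
    for app in switches:
        if app in resident:
            resident.move_to_end(app)  # already in memory: mark most recently used
            continue
        loads += 1  # copied in from swap space (or from disk the first time)
        if len(resident) >= capacity:
            resident.popitem(last=False)  # copy the least recently used app out
        resident[app] = None
    return loads


# Two applications that fit together: only the two initial loads.
print(count_loads(2, ["Write", "Paint"] * 5))  # 2
# Two applications that don't fit together: every single switch reloads.
print(count_loads(1, ["Write", "Paint"] * 5))  # 10
```

The second case is the situation PC Magazine described: once two programs can no longer share memory, alternating between them costs a full copy out and a full copy in on every switch. Treating each application as one indivisible unit is a simplification made only for illustration.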

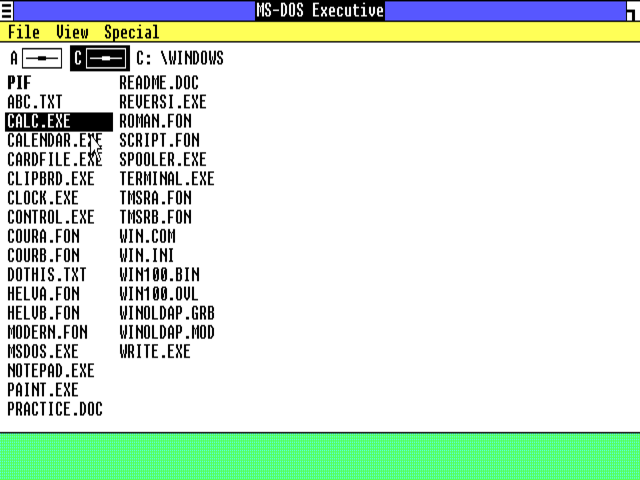

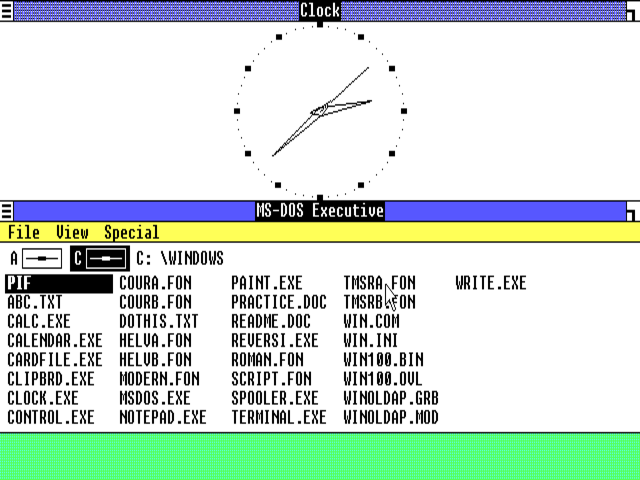

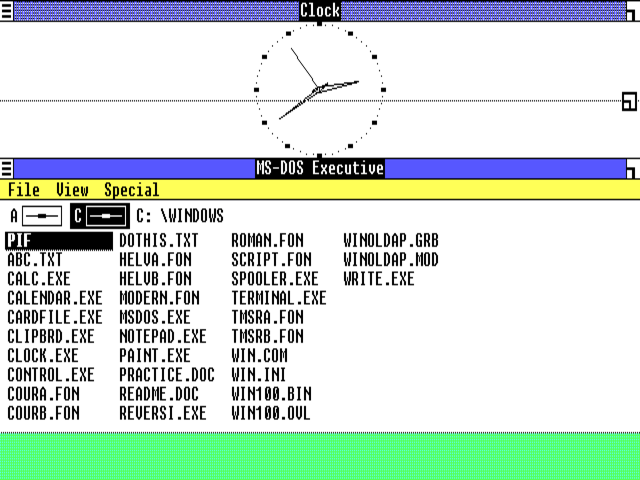

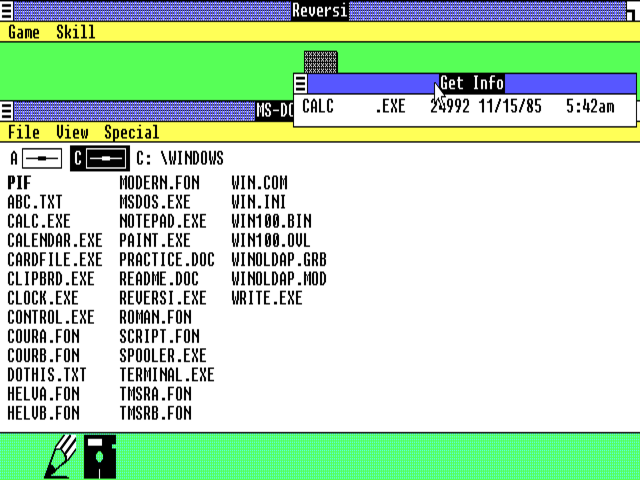

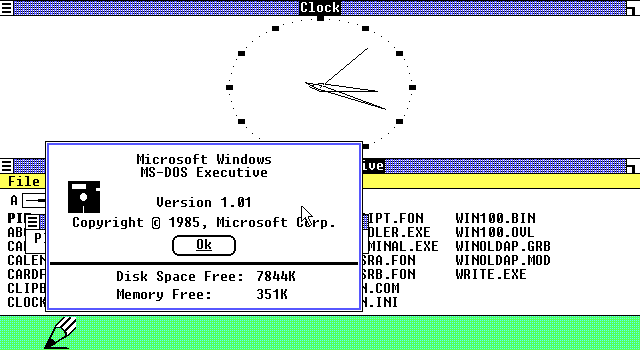

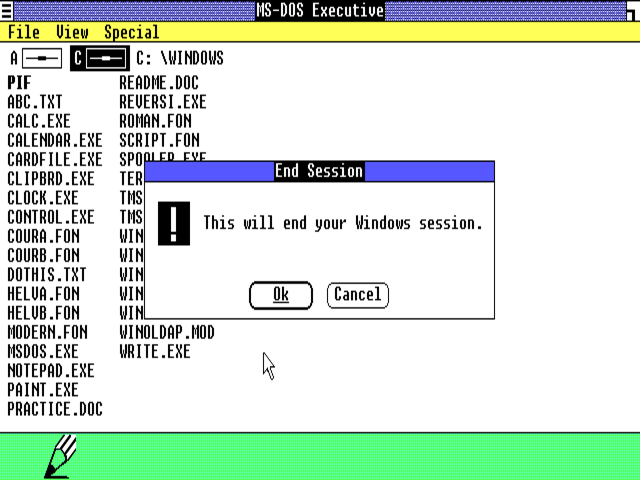

A Quick Tour of Windows 1.01

Windows 1.01 boots into what it calls the “MS-DOS Executive,” which resembles one of the many popular aftermarket file managers of the MS-DOS era, such as Norton Commander. Applications are started from here by double-clicking on their actual .exe files. This version of Windows does nothing to insulate users from the file-level contents of their hard drives; it has no icons representing installed applications and, indeed, no concept of installation at all. Using Windows 1.01 is thus akin to using Windows 10 if the Start Menu, Taskbar, Quick-Launch Toolbar, etc. didn’t exist, and all interactions happened at the level of File Explorer windows.

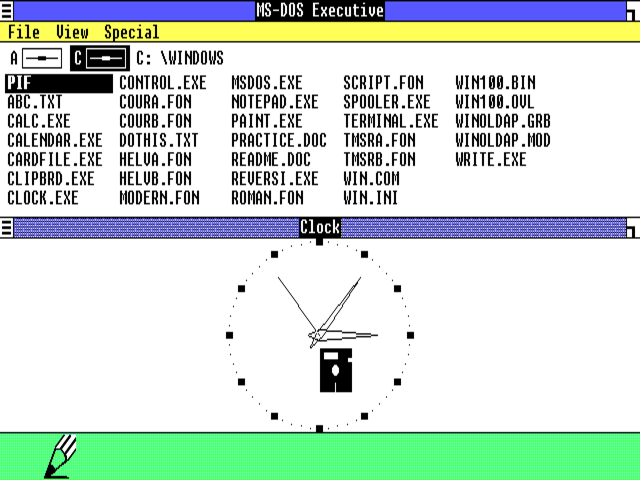

Under Microsoft’s “tiled windows” approach, windows always fill the width of the screen but can be tiled vertically. They’re never allowed to overlap one another under any circumstances, and taken as a group they always fill the screen. One window, the MS-DOS Executive, is always open, and thus fills the screen by itself if nothing else is running. There is no concept of a desktop “beneath” the windows.

Windows can be sized to suit in vertical terms by grabbing the widget at their top right and dragging. Here we’re making the MS-DOS Executive window larger. When we release the mouse button, the Clock window will automatically be made smaller in proportion to its companion’s growth. Remember, overlapping windows aren’t allowed, no matter how hard you try to trick the software…

…with one exception. Sub-windows opened by applications can be dragged freely around the screen and can overlay other windows. Go figure!
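The tiling rule described above (full-width windows stacked vertically that together always fill the screen exactly) can be expressed as a small layout function. This is a hypothetical sketch, not Microsoft's code: heights are measured in abstract rows, the name `resize_tile` is invented, and when more than two windows are open it assumes the others give up space in proportion to their current heights. In the two-window case from the screenshots, the other window simply shrinks by exactly as much as its companion grows.

```python
def resize_tile(heights, index, new_height, min_height=1):
    """Resize one tiled window and return the new list of heights.

    The other windows absorb the difference in proportion to their current
    heights, so the total never changes: tiles can neither overlap nor leave
    a gap. (Proportional sharing is an assumption for the 3+ window case.)
    """
    if len(heights) == 1:
        return list(heights)  # a lone window always fills the whole screen
    total = sum(heights)
    others = [i for i in range(len(heights)) if i != index]
    # Clamp so every other window keeps at least min_height rows.
    new_height = max(min_height, min(new_height, total - min_height * len(others)))
    spare = total - new_height - min_height * len(others)  # rows above the minimums
    old_other = sum(heights[i] for i in others)
    result = list(heights)
    result[index] = new_height
    given = 0
    for i in others:
        share = spare * heights[i] // old_other  # proportional share, rounded down
        result[i] = min_height + share
        given += share
    result[others[-1]] += spare - given  # rounding leftovers go to the last window
    return result


# MS-DOS Executive (12 rows) grows to 18; the Clock window drops from 13 to 7.
print(resize_tile([12, 13], 0, 18))  # [18, 7]
# Trying to push the Clock off the screen entirely is refused.
print(resize_tile([12, 13], 0, 30))  # [24, 1]
```

Because the heights always sum to the same total, the model has no room for a desktop behind the windows and no way for two tiles to overlap, which is exactly the behavior described above.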

If we try to drag a window around by its title bar, an interesting philosophical distinction is revealed between Windows 1.01 and more recent versions. We wind up swapping the contents of one window with those of another. Applications, in other words, aren’t intrinsically bound to their windows, but can be moved among them. In the screenshot above, the disk icon is actually our mouse cursor, representing the MS-DOS Executive window’s contents, which we’re about to swap with the contents of what is currently the Clock window.

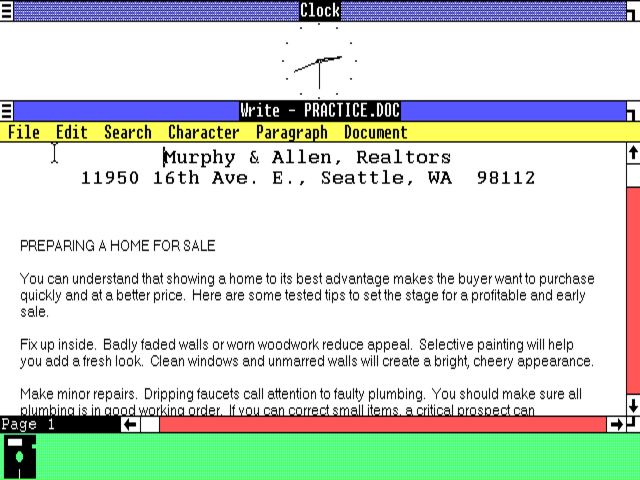

Windows 1.01 shipped with Write, a fairly impressive minimalist word processor — arguably the most impressive application ever made for the little-used operating environment.

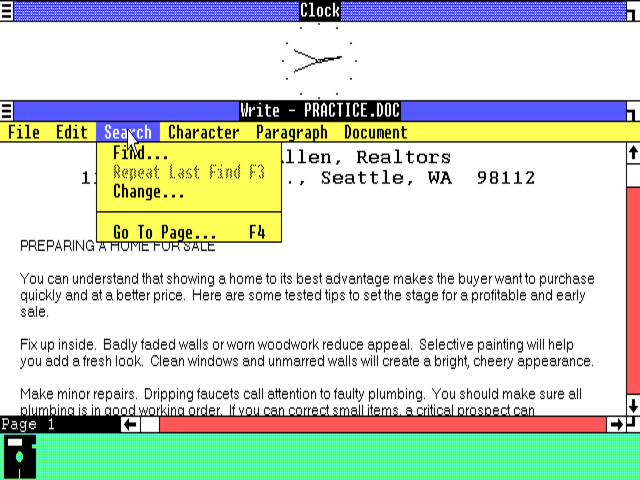

In contrast to the weirdness of other aspects of Windows 1.01, working within an application like Write feels reassuringly familiar, what with its scroll bars and Macintosh-like pull-down menus. Interestingly, the latter use the click-and-hold approach of the Mac rather than the click-once approach of later versions of Windows.

Windows 1.01 doesn’t have a great way of getting around the 640 K barrier, but it does implement a virtual-memory scheme — no mean feat in itself on a processor without built-in memory protection — which uses any memory beyond 640 K as essentially a RAM disk — or, as Microsoft called it, a “Smart Drive.” In the absence of extra memory, or if it too is filled up, the hard disk becomes the swap area.

By the time Windows was ready, all of the clone makers whom Bill Gates had cajoled and threatened into shipping it with their computers had jumped off the bandwagon, telling him that it had taken far too long to arrive, and that the finished product was too slow on most hardware for them to foist it on their customers in good conscience. With that path to acceptance closed, Microsoft was forced to push Windows as a boxed add-on sold through retail channels, a first for the company in the context of a piece of system software. In a measure of just how badly Gates wanted Windows to succeed, Microsoft elected to price it at only $99 (one-tenth of what VisiCorp had tried to ask for Visi On two years before), despite its huge development cost.

Unfortunately, the performance problems, the awkwardness of the tiled windows, and the almost complete lack of native Windows applications beyond those that shipped with the environment outweighed the low price; almost nobody bought the thing. Microsoft was trapped by the old chicken-or-the-egg conundrum that comes with the launch of any new computing platform, a problem that is solved only with difficulty even in the best of circumstances. Buyers wanted to see Windows applications before they bought the operating environment, while software developers wanted to see a market full of eager buyers before they invested in the platform. The fact that Windows could run most vanilla MS-DOS applications with some degree of felicity only made it easier for software developers to stay away unless and until the market started screaming for Windows-native versions of their products. Thus the MS-DOS compatibility Microsoft had built into Windows, intended as a mere bridge to the Windows-native world of the future, proved something of a double-edged sword.

When you add up all of the hard realities, it comes as little surprise that Microsoft’s first GUI sparked a brief run of favorable press notices, a somewhat longer run of more skeptical commentary, and then disappeared without a trace. Already by the spring of 1986, it was a non-factor, appearing for all the world to be just one more gravestone in the GUI graveyard, likely to be remembered only as a pundit’s punch line. Bill Gates could comfort himself only with the fact that IBM’s own big system-software innovation had landed with a similar splat.

IBM and Microsoft had each tried to go it alone, had each tried to build something better upon the foundation of MS-DOS, and had each struck out swinging. What now? Perhaps the odd couple still needed one another, loath though either was to admit it. In fact, by that spring of 1986 a gradual rapprochement had already been underway for a year, despite deep misgivings from both parties. TopView and Windows 1 had both been a bust, but neither company had gotten where they were by giving up easily. If they pooled their forces once again, who knew what they might achieve. After all, it had worked out pretty well the first time around.

(Sources: the books The Making of Microsoft: How Bill Gates and His Team Created the World’s Most Successful Software Company by Daniel Ichbiah and Susan L. Knepper, Hard Drive: Bill Gates and the Making of the Microsoft Empire by James Wallace and Jim Erickson, Gates: How Microsoft’s Mogul Reinvented an Industry and Made Himself the Richest Man in America by Stephen Manes and Paul Andrews, Computer Wars: The Fall of IBM and the Future of Global Technology by Charles H. Ferguson and Charles R. Morris, and Apple Confidential 2.0: The Definitive History of the World’s Most Colorful Company by Owen W. Linzmayer; PC Magazine of April 30 1985, February 25 1986, April 18 1987, and April 12 1988; Byte of February 1985, May 1988, and the special issue of Fall 1985; InfoWorld of May 7 1984 and November 19 1984; PC World of December 1985; Tandy Trower’s “The Secret Origins of Windows” on the Technologizer website. Finally, I owe a lot to Nathan Lineback for the histories, insights, comparisons, and images found at his wonderful online “GUI Gallery.”)

Footnotes

1. This is perhaps a good point for a quick primer on multitasking techniques, for those of you who may not be familiar with their vagaries. The first thing to understand is that multitasking during this period was fundamentally an illusion. The CPUs in the computers of this era were actually capable of doing only one task at a time. Multitasking was the art of switching the CPU’s attention between tasks quickly enough that several things, and thus several applications, seemed to be happening at once. There are two basic approaches to creating this illusory but hugely useful form of multitasking.

Cooperative multitasking, found in systems like the Apple Lisa, the Apple Macintosh between 1987’s System 5 and the introduction of OS X in 2001, and early versions of Microsoft Windows, is so named because it relies on the cooperation of the applications themselves. A well-behaved, well-programmed application is expected to periodically relinquish control of the computer voluntarily to the operating system, which can then see whether any of its own tasks need to be completed or any other applications have something to do. A cooperative-multitasking operating system is easier to program and less resource-intensive than the alternative, but its most important drawback becomes clear to the user as soon as she tries to use an application that isn’t terribly well-behaved or well-programmed. In particular, an application that goes into an infinite loop, a very common kind of bug, will lock up the computer, bringing the whole operating system down with it.

Preemptive multitasking, found in the Commodore Amiga, Mac OS X, Unix and Linux, and later versions of Microsoft Windows, is so named because it gives the operating system the authority to wrest control from (to preempt) individual applications. Thus even a looping program can only slow down the system as a whole, not kill it entirely. For this reason, it’s by far the more desirable approach to multitasking, but also the more complicated to implement.
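The difference between the two approaches can be seen in a toy round-robin scheduler. This is a hedged sketch, not how any of the systems named above were actually implemented: each Python generator stands for a task, each `yield` stands for one unit of CPU time, and the yielded flag says whether the task is voluntarily offering to give up the CPU. A real preemptive system relies on a hardware timer interrupt rather than on the task reporting in after each unit; here the optional `quantum` parameter merely simulates that timer. The task and function names are hypothetical.

```python
from collections import deque


def polite(units):
    """A well-behaved task: does `units` units of work, offering to yield after each."""
    for _ in range(units):
        yield True  # one unit done; voluntarily relinquish the CPU


def hog():
    """A buggy task stuck in an infinite loop that never offers to yield."""
    while True:
        yield False  # one unit done; keeps the CPU


def run(tasks, ticks, quantum=None):
    """Simulate `ticks` units of CPU time; return who held the CPU at each tick.

    quantum=None: cooperative, switch only when the running task offers to.
    quantum=N:    preemptive, also switch after N units (a simulated timer).
    """
    ready = deque(tasks.items())  # (name, generator) pairs in round-robin order
    trace = []
    while ready and len(trace) < ticks:
        name, task = ready.popleft()
        used = 0
        for offered in task:  # resume the task one unit at a time
            trace.append(name)
            used += 1
            if len(trace) >= ticks:
                return trace
            if offered or (quantum is not None and used >= quantum):
                ready.append((name, task))  # switch: back of the queue
                break
        # If the loop ran to completion, the task finished and is not re-queued.
    return trace


def make_tasks():
    return {"Write": polite(3), "Hog": hog(), "Clock": polite(3)}


print(run(make_tasks(), 8))
# ['Write', 'Hog', 'Hog', 'Hog', 'Hog', 'Hog', 'Hog', 'Hog']
print(run(make_tasks(), 8, quantum=2))
# ['Write', 'Hog', 'Hog', 'Clock', 'Write', 'Hog', 'Hog', 'Clock']
```

In the cooperative run the Clock task never gets a turn once the looping task takes over, which is the lock-up described above; in the preemptive run the same task merely slows everyone else down.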

Aula

July 6, 2018 at 3:38 pm

“then generats the appropriate code”

Should be “generates”.

“who been working with the Macintosh since almost almost two years”

Missing a “had” but has double “almost”.

“shit roles downhill”

Should be “rolls”.

“with shouts and fist pumps”

Or more likely “bumps”.

“Jim Symour”

Are you sure it’s not “Seymour”?

Jimmy Maher

July 6, 2018 at 7:27 pm

Thanks!

Aula

July 7, 2018 at 10:40 am

You fixed the others, but “fist pumps” is still there.

Jimmy Maher

July 7, 2018 at 1:32 pm

“Fist pump” was actually as intended: https://en.wikipedia.org/wiki/Fist_pump. ;)

Brian

July 6, 2018 at 4:34 pm

Brings back memories… I actually wrote a batch file for our computer that automatically loaded up a menu option when our Tandy booted from the hard drive. It let you select a program to load automatically. Overall, it was pretty primitive. Later we had a commercial program that did the same thing in a somewhat more elegant package.

I also remember playing around with some early task-switching programs too… it’s hard to think that there was a time when you were basically locked into a single program! It was interesting, but you were limited in that your 640k had a chunk taken up by the program and then you had to estimate how much each program needed to run.

Jimmy Maher

July 6, 2018 at 7:31 pm

That’s essentially what you had to do to run vanilla MS-DOS applications in TopView and early versions of Windows as well, using PIF files.

Lefty

August 12, 2021 at 1:48 am

I used automenu which was freeware. Push 1 for WP51, 2 for 123, 3 for dBase and 4 for Dazzle a screen fractals program or screen saver.

Casey Muratori

July 6, 2018 at 6:57 pm

I had trouble parsing, “More so than it did Windows and the other microcomputer GUI environments that were its ostensible competitors”. I think maybe there is a typo in there?

– Casey

Jimmy Maher

July 6, 2018 at 7:29 pm

Thanks!

tedder

July 7, 2018 at 12:29 am

PIFs! Norton Commander! Text mode mouse! Wow. Memories.

Hope you are going to talk more about PIFs.

This is the first time I’ve seen anything before Windows 3.0, which was my first post-MS-DOS GUI OS.

Brian

July 7, 2018 at 3:28 pm

Dad decided we needed a mouse about 1988… it had 3 buttons and we finally gave up on it having any practical use for our old Tandy. It simply didn’t have the power to run any graphical programs that used a mouse, even retrofitted with a 10 MHz 8086 processor.

I remember starting college and preferring to drop to the DOS prompt rather than use Windows 3.0+ due to being so familiar with it. Although, I was recently depressed when I tried to use DOSBox for the first time in 15 years and could barely recall the “dir” command!

Ditto here too… I don’t recall seeing Windows 1 or 2 ever! just retrospectives like this. I do remember seeing the early OS/2 computers in action at the workplace of one of my friend’s parents.

tedder

July 8, 2018 at 2:15 am

I get stuck in DOS when / and `ls` don’t work.

_RGTech

July 11, 2025 at 11:11 pm

It also works the other way… my first use of the System Rescue Linux DVD was a complete disaster. I was like Catweazle: “nothing works!”

Then I printed the “Redhat 6.2 Dos-to-Linux Cheat Sheet”: one page (if properly scaled), priceless help on the basics.

https://ftp.kh.edu.tw/Linux/Redhat/en_6.2/doc/gsg/ch-doslinux.htm

Keith Palmer

July 7, 2018 at 1:28 am

In the old computer magazines I’ve read, I’ve seen news items and column entries about the approach of Visi On and TopView, but not so much about their actual arrivals and swift aftermaths. The beginning of this series is therefore quite interesting for me.

(I don’t want this to come across as a “demand for revision,” but when I noticed you mentioning “the special screwdriver” needed to open a Macintosh case I did remember seeing case-opening tools, both extremely long Torx screwdrivers and prying irons meant to separate the front from the back, advertised in the back of old Macworld issues, along with articles about the hardware being hacked before the arrival of the Macintosh Plus to hook up internal hard drives and additional memory… So far as the rapprochement between IBM and Microsoft you mention at the end of this piece, though, I also remember a news item in the second-to-last issue of Creative Computing on that subject where Bill Gates made the barbed comment he’d expected Apple to have done “a good job” with the Macintosh.)

Anonymous

July 7, 2018 at 7:18 am

Yes, long torx drivers were never hard to get once there was a demand for them, and you really don’t need a tool to crack the case open.

While people often laugh about this, there is an awfully good reason to make the Mac difficult to get into: the CRT and power supply guts are all completely exposed in there, and it’s quite easy to get a dangerous shock if you touch the wrong thing. Besides, there was no internal expansion for ages, so unless you were upgrading via swapping out the entire motherboard (and potentially the disk drive), there wasn’t any point in opening it. Some people soldered in parts for expansion, but it was rare and obviously the torx driver was no barrier for them.

It really wasn’t until the last compact Macs — the Color Classics in the early 90s — that the CRT was separately shielded, whereupon an easy to slide out motherboard was provided and regular screws were used.

whomever

July 7, 2018 at 6:57 pm

My father beta tested Windows 1. On an XT clone with CGA. It was horrible, unusable.

Interesting to read all this, I’ve been learning stuff. When you get to that point, don’t forget the Very Famous MS Ad for Windows 386 (https://www.youtube.com/watch?v=noEHHB6rnMI)

Tomber

July 7, 2018 at 11:49 pm

I went looking, and I can’t find anything that would let stand the claim that TopView is a pre-emptive multitasker on an 8088. It looks like it patched itself into the interrupt tables, but the numerous retreats it makes depending on what ways a program is not ‘well-behaved’ belies that it is pre-emptive in any way that wouldn’t also make that true for Win16 apps prior to NT. (I do not say Win95, because even that could be jammed up by a badly written Win16 app, while NT side-stepped that problem a couple years earlier.)

In my opinion, the references to TopView as a pre-emptive multitasker are from somebody with a different definition than what was used in the time-frame when the difference mattered. Or by a sales guy. If there is another perspective here, let it be heard.

Jimmy Maher

July 9, 2018 at 7:37 am

Sorry for the delay in getting back to you. I wanted to look back over my sources, and didn’t have a chance to do that until this morning.

So, the most detailed examination of TopView which I’ve found in the contemporary literature is in the April 30 1985 PC Magazine. Its fairly detailed description of TopView multitasking certainly sounds more preemptive than cooperative to me:

Tomber

July 11, 2018 at 11:45 pm

It’s basically a glorified TSR. In 1985, these distinctions were pretty esoteric, and slick PR (from IBM, no less) would have produced the quoted text, which sounds accurate to me. What it doesn’t say is “pre-emptive.” Since there is no identified hardware support for tasks (and therefore enforced pre-emptive task switching) in real mode on x86, TopView can not be pre-emptive multitasking. It is relying on running code to be obeying standard conventions of interrupt handling (and intercepting interrupt calls to DOS/BIOS) to achieve co-operative multitasking. Any program that decides it is going to disable interrupts and/or circumvent the interrupt vector table would expose it. That’s why I say it is no more pre-emptive than Win16 apps on Win 3.x: It works great until an app has a bug or intentionally ruins the illusion.

Maybe they called it pre-emptive because there was nothing better in DOS land, maybe they didn’t know better, or maybe it is marketing deception. But five years later, in 1990, nobody would call that pre-emptive because there were tangible examples of actual 386 protected mode task switching.

Jimmy Maher

July 12, 2018 at 7:55 am

TopView obviously wasn’t a terribly sophisticated form of preemptive multitasking even in comparison to something like AmigaOS — it has, for example, no apparent notion of priority — and perhaps a sufficiently determined task could indeed circumvent its controls entirely. Yet I’m not quite willing to call it non-preemptive for that reason, as it does largely satisfy the definition I provide in the article above. The 68000 as well lacked “identified hardware support for tasks” — if by that you mean features like an MMU — and yet AmigaOS is generally considered the first consumer-oriented PC operating system to support preemptive multitasking. And there are other, even older examples of preemptive multitasking on CPUs without these features, such as Microsoft Xenix on the 8086/8088.

A TSR as I understand it generally wedges itself into the interrupt tables in order to run its own code. TopView, on the other hand, has a kernel managing not just itself but the tasks running under it. It would seem to me to be a categorically different thing.

It strikes me that you’re applying a different definition of preemptive multitasking from the one I’m using, as explained in a footnote to the article above. Your definition may very well have validity — possibly more validity than mine — in many computer-science contexts, but it’s a little hard for me to address or understand it because you haven’t explained clearly what said definition *is*. If you’d like to continue the discussion, it might be helpful to provide such a definition so I can clearly see where you’re coming from. Do you consider AmigaOS 1.x to be a preemptive-multitasking operating system?

Tomber

July 12, 2018 at 5:02 pm

I’m not familiar enough with Amiga, but I would say it could be pre-emptive because the 68000 does have a hardware concept of privilege level for code. System, or user. That permits the operating system to keep app code from interfering in mechanisms that could implement pre-emptive multitasking. On x86, real mode does not have that. The 286 introduced task structures (in protected mode) that are implemented in hardware for doing similar enforcement. The MMU of the 386 was a great improvement and increased granularity of control, but it actually is not the key piece, although it is critical if you want to get pre-existing real-mode DOS apps to pre-emptively multitask. Protected-mode apps on the 286 simply weren’t allowed to do things that real-mode apps did all the time. You end up with OS/2 1.x.

TopView can be considered pre-emptive if you look at the ability of it to hook a timer interrupt, and therefore perform tasks switches during the handling of those interrupts independent of what the app is doing. TopView can be considered co-operative if you consider that any application can stop that from happening, and certain apps would have. There is no doubt that in 1985, the ability of it to window multiple DOS apps and see them all updating the screen at the same time would have been impressive. I do think you can call TopView the best that was possible on an 8088.

Sniffnoy

July 8, 2018 at 8:17 am

Having never used any of these, I’m very confused about one thing. You mention that a number of these graphical operating systems were single-tasking. How does that work? I mean, single-tasking text-mode, that’s straightforward. Single-tasking GUI… that doesn’t make sense to me unless, like, each program takes up the entire screen. I don’t see how it makes sense with windows. How on earth does a single-tasking windowed environment work? What are the windows adding in such a case?

Jimmy Maher

July 8, 2018 at 1:23 pm

The Macintosh worked that way until Multi-Finder became an official part of MacOS in 1987. An application can open multiple windows, which can be useful in many circumstances, but you can’t have multiple applications running at one time. I believe this is the source of the longstanding Mac convention, still seen in OS X today, of menus appearing at the top of the screen rather than the top of individual windows. This is also the reason that Lotus could believe a product like Jazz — integrating a spreadsheet, a database manager, a word processor, and other stuff in one huge (not to say bloated) application — could have a lot of appeal on the Mac.

That said, there is almost always some concurrency going on even with a single-tasking GUI. Printer drivers, storage and memory management, input drivers, etc., run as background “tasks.” But only one *application* at a time is allowed.

Sniffnoy

July 8, 2018 at 1:33 pm

Oh, I see; I didn’t consider multiple windows for the same program. Thanks!

Veronica Connor

July 8, 2018 at 3:57 pm

There was an HCI reason for the menus being at the top of the screen. It makes them quicker to hit with the mouse, because the top edge gives you a backstop to hit with the pointer. This also helps people with more limited physical abilities to use the system. Being used to this, other systems (Windows, NeXTStep, etc) that place menus in floating areas feel fiddly and cumbersome.

xxx

July 9, 2018 at 5:51 am

Veronica is correct about the top menu bar being a deliberate interface decision. Jef Raskin’s book The Humane Interface goes into more detail about the rationale for putting the Macintosh menu bar at the top, as I recall.

anonymous

July 13, 2018 at 5:29 am

Yes, the thing to look for is Fitts’s Law. Bigger targets are easier to hit with the mouse, basically. Since the mouse cursor can’t go past the edge of the screen, edges are essentially infinitely big targets in one direction — you can just slam the cursor that way and never overshoot.

Likewise, corners are even bigger targets than edges, since they work in two directions and the mouse can be funneled toward the corners. Apple never much relied on that, though, other than that the Apple menu is in a corner and when the Applications menu came along, it was also in a corner.

And yet despite also originating on the Xerox Alto, most people ignored contextual menus until the 90s, even though they sometimes have the advantage of not needing to move the mouse at all to reach the menu.

_RGTech

July 11, 2025 at 11:02 pm

And that explains the reason for immediately changing the Windows 11 taskbar behavior back to left-side-oriented.

-> It has an edge!! :)

Laertes

July 10, 2018 at 6:05 pm

Reading the description of Windows 1.01 reminded me that there are several window managers for Unix/Linux that work that way: https://en.m.wikipedia.org/wiki/Tiling_window_manager

Jimmy Maher

July 11, 2018 at 8:24 am

These are probably also drawing from Xerox PARC. One of the Lisa and Macintosh’s major, oft-overlooked innovations was the free-dragging, overlapping window model.

Ross

July 11, 2018 at 9:39 pm

Over the years I recall reading that overlapping windows were avoided in case Apple (or others) came knocking on Microsoft’s door with lawyers in tow. I wonder if that’s always been a myth?

Jimmy Maher

July 12, 2018 at 7:43 am

No, I’ve seen no sign that that was a motivation. Microsoft was working very closely with Apple at the time, and Bill Gates and others weren’t shy about saying publicly during 1984 that Windows would be “the Mac on PC hardware” — statements they would later come to regret. Tiled windows were the norm at Xerox PARC, whose dogma insisted that they were neater and better even in the face of users expressing preferences for overlapping, free-draggable windows. Scott MacGregor brought that dogma with him to Microsoft.

Much more on the legal stuff to come…

Iggy Drougge

November 23, 2018 at 10:46 pm

That certainly was the case for GEM; the initial release had freely moving windows, but that was disabled after Apple cautioned Digital Research. On the Atari ST, the original GEM (Mac-style) window handling was kept, but disappeared on the PC version.

cliffton

July 24, 2018 at 8:16 pm

“Gates sent Ballmer out *ON* an “apology tour””

Jimmy Maher

July 25, 2018 at 8:17 am

Thanks!

Jonathan O

February 1, 2021 at 7:49 pm

“…the odd couple still needed one another, loathe though either was to admit it.”

I think you mean loath (or loth, though this spelling is very rare in the US).

Jimmy Maher

February 2, 2021 at 6:20 am

Thanks!

Simon N Goodwin

January 7, 2023 at 5:11 pm

The Sinclair QL had preemptive multitasking long before the Amiga, and with practical advantages too. I was multitasking on mine in May 1984, using only features of the device-independent ROM-based QDOS developed by Tony Tebby in Cambridge the year before.

Amstrad put the QL back into production to satisfy retail orders when they bought Sinclair in 1986, though they continued development only of the single-tasking ZX Spectrum. QDOS lived on into the 1990s in multi-tasking business computers like the ICL One-per-Desk, BT Merlin and CST Thor, totalling sales in the hundreds of thousands (though only a few thousand QLs made under contract by Samsung reached the US market) and was ported to the ST and Amiga. As a fellow Amiga enthusiast I recommend Aminet’s free QDOS4Amiga to you, Jimmy.

Given his general awareness of non-US computing, and the influence of QDOS on Linux, which had the same scheduler priority system as QL initially (unsurprisingly as Linus Torvalds went from Vic-20 to QL while waiting for the 386 to unscrew the worst aspects of Intel’s architecture), I am surprised Jimmy missed this. But that’s why we have comments!

Daniel

October 12, 2023 at 7:30 am

Love these pieces – super interesting. Thanks for writing them!

Ben

June 3, 2025 at 9:45 pm

rather with you -> rather than with you

the “The Impossible Dream” -> “The Impossible Dream”

Jimmy Maher

June 4, 2025 at 7:12 am

Thanks!

_RGTech

July 11, 2025 at 11:01 pm

Yes… I have a few comments again, sorry.

“Preemptive multitasking like TopView is doing here wouldn’t come to Microsoft Windows until Windows 95” -> that depends on what you want to multitask. Native applications? Then you’re right. DOS applications? Then Windows/386 already did that.

“No other operating environment would ever jump through more hoops to make MS-DOS applications work like they had been designed for a multitasking windowed paradigm from the start.” Okay, I have the impression that the Windows 95 development team explicitly introduced bugfixes in form of bug-emulation so that common software, be it written for Windows 3 or DOS, worked as expected… which was the reason for nearly one year of delay. Can’t really compare it to TopView though.

————-

Strangely, Windows 1 could have been a bargain. I think some text in PC Magazine also pointed it out: where else could you get a decent drawing program, a good barebones word processor (with kind of WYSIWYG, which was much harder to achieve under DOS), a clock, calendar, and cardfile for under 100 Dollars?