Apple + IBM = ?

I think [the] Macintosh accomplished everything we set out to do and more, even though it reaches most people these days as Windows.

— Andy Hertzfeld (original Apple Macintosh systems programmer), 1994

When rumors first began to circulate early in 1991 that IBM and Apple were involved in high-level talks about a major joint initiative, most people dismissed them outright. It was, after all, hard to imagine two companies in the same industry with more diametrically opposed corporate cultures. IBM was Big Blue, a bedrock of American business since the 1920s. Conservative and pragmatic to a fault, it was a Brylcreemed bastion of tradition where casual days meant that employees might remove their jackets to reveal the starched white shirts they wore underneath. Apple, on the other hand, had been founded just fifteen years before by two long-haired children of the counterculture, and its campus still looked more like Woodstock than Wall Street. IBM placed great stock in the character of its workforce; Apple, as journalist Michael S. Malone would later put it in his delightfully arch book Infinite Loop, “seemed to have no character, but only an attitude, a style, a collection of mannerisms.” IBM talked about enterprise integration and system interoperability; Apple prattled on endlessly about changing the world. IBM played Lawrence Welk at corporate get-togethers; Apple preferred the Beatles. (It was an open secret that the name the company shared with the Beatles’ old record label wasn’t coincidental.)

Unsurprisingly, the two companies didn’t like each other very much. Apple in particular had been self-consciously defining itself for years as the sworn enemy of IBM and everything it represented. When Apple had greeted the belated arrival of the IBM PC in 1981 with a full-page magazine advertisement bidding Big Blue “welcome, seriously,” it had been hard to read as anything other than snarky sarcasm. And then, and most famously, had come the “1984” television advertisement to mark the debut of the Macintosh, in which Apple was personified as a hammer-throwing freedom fighter toppling a totalitarian corporate titan — Big Blue recast as Big Brother. What would the rumor-mongers be saying next? That cats would lie down with dogs? That the Russians would tell the Americans they’d given up on the whole communism thing and would like to be friends… oh, wait. It was a strange moment in history. Why not this too, then?

Indeed, when one looked a little harder, a partnership began to make at least a certain degree of sense. Apple’s rhetoric had actually softened considerably since those heady early days of the Macintosh and the acrimonious departure of Steve Jobs which had marked their ending. In the time since, more sober minds at the company had come to realize that insulting conservative corporate customers with money to spend on Apple’s pricey hardware might be counter-productive. Most of all, though, both companies found themselves in strikingly similar binds as the 1990s got underway. After soaring to rarefied heights during the early and middle years of the previous decade, they were now being judged by an increasing number of pundits as the two biggest losers of the last few years of computing history. In the face of the juggernaut that was Microsoft Windows, that irresistible force which nothing in the world of computing could seem to defy for long, it didn’t seem totally out of line to ask whether there even was a future for IBM or Apple. Seen in this light, the pithy clichés practically wrote themselves: “the enemy of my enemy is my friend”; “any port in a storm”; etc. Other, somewhat less generous commentators just talked about an alliance of losers.

Each of the two losers had gotten to this juncture by a uniquely circuitous route.

When IBM released the IBM PC, their first mass-market microcomputer, in August of 1981, they were as surprised as anyone by the way it took off. Even as hackers dismissed it as boring and unimaginative, corporate America couldn’t get enough of the thing; a boring and unimaginative personal computer — i.e., a safe one — was exactly what they had been waiting for. IBM’s profits skyrocketed during the next several years, and the pundits lined up to praise the management of this old, enormous company for having the flexibility and wherewithal to capitalize on an emerging new market; a tap-dancing elephant became the metaphor of choice.

And yet, like so many great successes, the IBM PC bore the seeds of its downfall within it from the start. It was a simple, robust machine, easy to duplicate by plugging together readily available commodity components — a process made even easier by IBM’s commitment to scrupulously documenting every last detail of its design for all and sundry. Further, IBM had made the mistake of licensing its operating system from a small company known as Microsoft rather than buying it outright or writing one of their own, and Bill Gates, Microsoft’s Machiavellian CEO, proved more than happy to license MS-DOS to anyone else who wanted it as well. The danger signs could already be seen in 1983, when an upstart company called Compaq released a “portable” version of IBM’s computer — in those days, this meant a computer which could be packed into a single suitcase — before IBM themselves could get around to it. A more dramatic tipping point arrived in 1986, when the same company made a PC clone built around Intel’s hot new 80386 CPU before IBM managed to do so.

In 1987, IBM responded to the multiplying ranks of the clone makers by introducing the PS/2 line, which came complete with a new, proprietary bus architecture, locked up tight this time inside a cage of patents and legalese. A cynical move on the face of it, it backfired spectacularly in practice. Smelling the overweening corporate arrogance positively billowing out of the PS/2 lineup, many began to ask themselves for the first time whether the industry still needed IBM at all. And the answer they often came to was not the one IBM would have preferred. IBM’s new bus architecture slowly died on the vine, while the erstwhile clone makers put together committees to define new standards of their own which evolved the design IBM had originated in more open, commonsense ways. In short, IBM lost control of the very platform they had created. By 1990, the words “PC clone” were falling out of common usage, to be replaced by talk of the “Wintel Standard.” The new standard bearer, the closest equivalent to IBM in this new world order, was Microsoft, who continued to license MS-DOS and Windows, the software that allowed all of these machines from all of these diverse manufacturers to run the same applications, to anyone willing to pay for it. Meanwhile OS/2, IBM’s mostly-compatible alternative operating system, was struggling mightily; it would never manage to cross the hump into true mass-market acceptance.

Apple’s fall from grace had been less dizzying in some ways, but the position it had left them in was almost as frustrating.

After Steve Jobs walked away from Apple in September of 1985, leaving behind the Macintosh, his twenty-month-old dream machine, the more sober-minded caretakers who succeeded him did many of the reasonable, sober-minded things which their dogmatic predecessor had refused to allow: opening the Mac up for expansion, adding much-requested arrow keys to its keyboard, toning down the revolutionary rhetoric that spooked corporate America so badly. These things, combined with the Apple LaserWriter laser printer, Aldus PageMaker software, and the desktop-publishing niche they spawned between them, saved the odd little machine from oblivion. Yet something did seem to get lost in the process. Although the Mac remained a paragon of vision in computing in many ways — HyperCard alone proved that! — Apple’s management could sometimes seem more interested in competing head-to-head with PC clones for space on the desks of secretaries than nurturing the original dream of the Macintosh as the creative, friendly, fun personal computer for the rest of us.

In fact, this period of Apple’s history must strike anyone familiar with the company of today — or, for that matter, with the company that existed before Steve Jobs’s departure — as just plain weird. Quibbles about character versus attitude aside, Apple’s most notable strength down through the years has been a peerless sense of self, which they have used to carve out their own uniquely stylish image in the ofttimes bland world of computing. How odd, then, to see the Apple of this period almost willfully trying to become the one thing neither the zealots nor the detractors have ever seen them as: just another maker of computer hardware. They flooded the market with more models than even the most dutiful fans could keep up with, none of them evincing the flair for design that marks the Macs of earlier or later eras. Their computers’ bland cases were matched with bland names like “Performa” or “Quadra” — names which all too easily could have come out of Compaq or (gasp!) IBM rather than Apple. Even the tight coupling of hardware and software into a single integrated user experience, another staple of Apple computing before and after, threatened to disappear, as CEO John Sculley took to calling Apple a “software company” and intimated that he might be willing to license MacOS to other manufacturers in the way that Microsoft did MS-DOS and Windows. At the same time, in a bid to protect the software crown jewels, he launched a prohibitively expensive and ethically and practically ill-advised lawsuit against Microsoft for copying MacOS’s “look and feel” in Windows.

Apple’s attempts to woo corporate America by acting just as bland and conventional as everyone else bore little fruit; the Macintosh itself remained too incompatible, too expensive, and too indelibly strange to lure cautious purchasing managers into the fold. Meanwhile Apple’s prices remained too high for any but the most well-heeled private users. And so the Mac soldiered on with a 5 to 10 percent market share, buoyed by a fanatically loyal user base who still saw revolutionary potential in it, even as they complained about how many of its ideas Microsoft and others had stolen. Admittedly, their numbers were not insignificant: there were about 3 and a half million members of the Macintosh family by 1990. They were enough to keep Apple afloat and basically profitable, at least for now, but already by the early 1990s most new Macs were being sold “within the family,” as it were. The Mac became known as the platform where the visionaries tried things out; if said things proved promising, they then reached the masses in the form of Windows implementations. CD-ROM, the most exciting new technology of the early 1990s, was typical. The Mac pioneered this space; Mediagenic’s The Manhole, the very first CD-ROM entertainment product, shipped first on that platform. Yet most of the people who heard the hype and went out to buy a “multimedia PC” in the years that followed brought home a Wintel machine. The Mac was a sort of aspirational showpiece platform; in defiance of the Mac’s old “computer for the rest of us” tagline, Windows was the place where the majority of ordinary people did ordinary things.

The state of MacOS added weight to these showhorse-versus-workhorse stereotypes. Its latest incarnation, known as System 6, had fallen alarmingly behind the state of the art in computing by 1990. Once one looked beyond its famously intuitive and elegant user interface, one found that it lacked robust support for multitasking; lacked for ways to address memory beyond 8 MB; lacked the virtual memory that would allow users to open more and larger applications than the physical memory allowed; lacked the memory protection that could prevent errant applications from taking down the whole system. Having been baked into many of the operating system’s core assumptions from the start — MacOS had originally been designed to run on a machine with no hard drive and just 128 K of memory — these limitations were infuriatingly difficult to remedy after the fact. Thus Apple struggled mightily with the creation of a System 7, their attempt to do just that. When System 7 finally shipped in May of 1991, two years after Apple had initially promised it would, it still lagged behind Windows under the hood in some ways: for example, it still lacked comprehensive memory protection.

The problems which dogged the Macintosh were typical of any computing platform that attempts to survive beyond the technological era which spawned it. Keeping up with the times means hacking and kludging the original vision, as efficiency and technical elegance give way to the need just to make it work, by hook or by crook. The original Mac design team had been given the rare privilege of forgetting about backward compatibility — given permission to build something truly new and “insanely great,” as Steve Jobs had so memorably put it. That, needless to say, was no longer an option. Every decision at Apple must now be made with an eye toward all of the software that had been written for the Mac in the past seven years or so. People depended on it now, which sharply limited the ways in which it could be changed; any new idea that wasn’t compatible with what had come before was an ipso-facto nonstarter. Apple’s clever programmers doubtless could have made a faster, more stable, all-around better operating system than System 7 if they had only had free rein to do so. But that was pie-in-the-sky talk.

Yet the most pressing of all the technical problems confronting the Macintosh as it aged involved its hardware rather than its software. Back in 1984, the design team had hitched their wagon to the slickest, sexiest new CPU in the industry at the time: the Motorola 68000. And for several years, they had no cause to regret that decision. The 68000 and its successor models in the same family were wonderful little chips — elegant enough to live up to even the Macintosh ideal of elegance, an absolute joy to program. Even today, many an old-timer will happily wax rhapsodic about them if given half a chance. (Few, for the record, have similarly fond memories of Intel’s chips.)

But Motorola was both a smaller and a more diversified company than Intel, the international titan of chip-making. As time went on, they found it more and more difficult to keep up with the pace set by their rival. Lacking the same cutting-edge fabrication facilities, it was hard for them to pack as many circuits into the same amount of space. Matters began to come to a head in 1989, when Intel released the 80486, a chip for which Motorola had nothing remotely comparable. Motorola’s response finally arrived in the form of the roughly-equivalent-in-horsepower 68040 — but not until more than a year later, and even then their chip was plagued by poor heat dissipation and heavy power consumption in many scenarios. Worse, word had it that Motorola was getting ready to give up on the whole 68000 line; they simply didn’t believe they could continue to compete head-to-head with Intel in this arena. One can hardly overstate how terrifying this prospect was for Apple. An end to the 68000 line must seemingly mean the end of the Macintosh, at least as everyone knew it; MacOS, along with every application ever written for the platform, was inextricably bound to the 68000. Small wonder that John Sculley started talking about Apple as a “software company.” It looked like their hardware might be going away, whether they liked it or not.

Motorola was, however, peddling an alternative to the 68000 line, embodying one of the biggest buzzwords in computer-science circles at the time: “RISC,” short for “Reduced Instruction Set Computer.” Both the Intel x86 line and the Motorola 68000 line were what had been retroactively named “CISC,” or “Complex Instruction Set Computers”: CPUs whose set of core opcodes — i.e., the set of low-level commands by which they could be directly programmed — grew constantly bigger and more baroque over time. RISC chips, on the other hand, pared their opcodes down to the bone, to only those commands which they absolutely, positively could not exist without. This made them less pleasant for a human programmer to code for — but then, the vast majority of programmers were working by now in high-level languages rather than directly controlling the CPU in assembly language anyway. And it made programs written to run on them by any method bigger, generally speaking — but then, most people by 1990 were willing to trade a bit more memory usage for extra speed. To compensate for these disadvantages, RISC chips could be simpler in terms of circuitry than CISC chips of equivalent power, making them cheaper and easier to manufacture. They also demanded less energy and produced less heat — the computer engineer’s greatest enemy — at equivalent clock speeds. As of yet, only one RISC chip was serving as the CPU in mass-market personal computers: the ARM chip, used in the machines of the British PC maker Acorn, which weren’t even sold in the United States. Nevertheless, Motorola believed RISC’s time had come. By switching to RISC, they wouldn’t need to match Intel in terms of transistors per square millimeter to produce chips of equal or greater speed. Indeed, they’d already made a RISC CPU of their own, called the 88000, in which they were eager to interest Apple.

They found a receptive audience among Apple’s programmers and engineers, who loved Motorola’s general design aesthetic. Already by the spring of 1990, Apple had launched two separate internal projects to study the possibilities for RISC in general and the 88000 in particular. One, known as Project Jaguar, envisioned a clean break with the past, in the form of a brand new computer that would be so amazing that people would be willing to accept that none of their existing software would run on it. The other, known as Project Cognac, studied whether it might be possible to port the existing MacOS to the new architecture, and then — and this was the really tricky part — find a way to make existing applications which had been compiled for a 68000-based Mac run unchanged on the new machine.

At first, the only viable option for doing so seemed to be a sort of Frankenstein’s monster of a computer, containing both an 88000- and a 68000-series CPU. The operating system would boot and run on the 88000, but when the user started an application written for an older, 68000-based Mac, it would be automatically kicked over to the secondary CPU. Within a few years, so the thinking went, all existing users would upgrade to the newer models, all current software would get recompiled to run natively on the RISC chip, and the 68000 could go away. Still, no one was all that excited by this approach; it seemed the worst Macintosh kludge yet, the very antithesis of what the machine was supposed to be.

A eureka moment came in late 1990, with the discovery of what Cognac project leader Jack McHenry came to call the “90/10 Rule.” Running profilers on typical applications, his team found that in the case of many or most of them it was the operating system, not the application itself, that consumed 90 percent or more of the CPU cycles. This was an artifact — for once, a positive one! — of the original MacOS design, which offered programmers an unprecedentedly rich interface toolbox meant to make coding as quick and easy as possible and, just as importantly, to give all applications a uniform look and feel. Thus an application simply asked for a menu containing a list of entries; it was then the operating system that did all the work of setting it up, monitoring it, and reporting back to the application when the user chose something from it. Ditto buttons, dialog boxes, etc. Even something as CPU-intensive as video playback generally happened through the operating system’s QuickTime library rather than the application actually employing it.

All of this meant that it ought to be feasible to emulate the 68000 entirely in software. The 68000 code would necessarily run slowly and inefficiently through emulation, wiping out all of the speed advantages of the new chip and then some. Yet for many or most applications the emulator would only need to be used about 10 percent of the time. The other 90 percent of the time, when the operating system itself was doing things at native speed, would more than make up for it. In due course, applications would get recompiled and the need for 68000 emulation would largely go away. But in the meanwhile, it could provide a vital bridge between the past and the future — a next-generation Mac that wouldn’t break continuity with the old one, all with a minimum of complication, for Apple’s users and for their hardware engineers alike. By mid-1991, Project Cognac had an 88000-powered prototype that could run a RISC-based MacOS and legacy Mac applications together.
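The arithmetic behind the 90/10 Rule can be sketched in a few lines of Python. The figures used here are purely illustrative assumptions, not measurements from Apple: a hypothetical threefold speedup for operating-system code running natively on the new chip, and a hypothetical fivefold slowdown for application code running through the 68000 emulator. The function name and parameters are likewise invented for this sketch.

```python
def relative_runtime(os_fraction, native_speedup, emulation_slowdown):
    """Estimate runtime on the new machine relative to the old one.

    os_fraction        -- share of CPU time the old machine spent in OS code
    native_speedup     -- assumed speedup of native OS code on the new chip
    emulation_slowdown -- assumed slowdown of emulated 68000 application code

    A result below 1.0 means the program finishes sooner on the new machine.
    """
    app_fraction = 1.0 - os_fraction
    native_part = os_fraction / native_speedup        # OS work, now faster
    emulated_part = app_fraction * emulation_slowdown  # app work, now slower
    return native_part + emulated_part


# Compare an OS-heavy application with two hypothetical lighter ones.
for os_fraction in (0.9, 0.5, 0.1):
    ratio = relative_runtime(os_fraction, native_speedup=3.0,
                             emulation_slowdown=5.0)
    print(f"OS share {os_fraction:.0%}: relative runtime {ratio:.2f}")
```

Under these assumed numbers, an application that spends 90 percent of its time inside the operating system comes out ahead despite the emulation penalty, while one that spends most of its time in its own code would run several times slower. That asymmetry is why the profiling result mattered so much.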

And yet this wasn’t to be the final form of the RISC-based Macintosh. For, just a few months later, Apple and IBM made an announcement that the technology press billed — sometimes sarcastically, sometimes earnestly — as the “Deal of the Century.”

Apple had first begun to talk with IBM in early 1990, when Michael Spindler, the former’s president, had first reached out to Jack Kuehler, his opposite number at IBM. It seemed that, while Apple’s technical rank and file were still greatly enamored with Motorola, upper management was less sanguine. Having been burned once with the 68000, they were uncertain about Motorola’s commitment and ability to keep evolving the 88000 over the long term.

It made a lot of sense in the abstract for any company interested in RISC technology, as Apple certainly was, to contact IBM; it was actually IBM who had invented the RISC concept back in the mid-1970s. Not all that atypically for such a huge company with so many ongoing research projects, they had employed the idea for years only in limited, mostly subsidiary usage scenarios, such as mainframe channel controllers. Now, though, they were just introducing a new line of “workstation computers” — meaning extremely high-powered desktop computers, too expensive for the consumer market — which used a RISC chip called the POWER CPU that was the heir to their many years of research in the field. Like the workstations it lay at the heart of, the chip was much too expensive and complex to become the brain of Apple’s next generation of consumer computers, but it might, thought Spindler, be something to build upon. And he knew that, with IBM’s old partnership with Microsoft slowly collapsing into bickering acrimony, Big Blue might just be looking for a new partner.

The back-channel talks were intermittent and hyper-cautious at first, but, as the year wore on and the problems both of the companies faced became more and more obvious, the discussions heated up. The first formal meeting took place in February of 1991 or shortly thereafter, at an IBM facility in Austin, Texas. The Apple people, knowing IBM’s ultra-conservative reputation and wishing to make a good impression, arrived neatly groomed and dressed in three-piece suits, only to find their opposite numbers, having acted on the same motivation, sitting there in jeans and denim shirts.

That anecdote illustrates how very much both sides wanted to make this work. And indeed, the two parties found it much easier to work together than anyone might have imagined. John Sculley, the man who really called the shots at Apple, found that he got along smashingly with Jack Kuehler, to the extent that the two were soon talking almost every day. After beginning as a fairly straightforward discussion of whether IBM might be able and willing to make a RISC chip suitable for the Macintosh, the negotiations just kept growing in scale and ambition, spurred on by both companies’ deep-seated desire to stick it to Microsoft and the Wintel hegemony in any and all possible ways. They agreed to found a joint subsidiary called Taligent, staffed initially with the people from Apple’s Project Jaguar, which would continue to develop a brand new operating system that could be licensed by any hardware maker, just like MS-DOS and Windows (and for that matter IBM’s already extant OS/2). And they would found another subsidiary called Kaleida Labs, to make a cross-platform multimedia scripting engine called ScriptX.

Still, the core of the discussions remained IBM’s POWER architecture — or rather the PowerPC, as the partners agreed to call the cost-reduced, consumer-friendly version of the chip. Apple soon pulled Motorola into these parts of the talks, thus turning a bilateral into a trilateral negotiation, and providing the name for their so-called “AIM alliance” — “AIM” for Apple, IBM, and Motorola. IBM had never made a mass-market microprocessor of their own before, noted Apple, and Motorola’s experience could serve them well, as could their chip-fabrication facilities once actual production began. The two non-Apple parties were perhaps less excited at the prospect of working together — Motorola in particular must have been smarting at the rejection of their own 88000 processor which this new plan would entail — but made nice and got along.

Jack Kuehler and John Sculley brandish what they call their “marriage certificate,” looking rather disturbingly like Neville Chamberlain declaring peace in our time. The marriage would not prove an overly long or happy one.

On October 2, 1991 — just six weeks after the first 68040-based Macintosh models had shipped — Apple and IBM made official the rumors that had been swirling around for months. At a joint press briefing held inside the Fairmont Hotel in downtown San Francisco, they trumpeted all of the initiatives I’ve just described. The Deal of the Century, they said, would usher in the next phase of personal computing. Wintel must soon give way to the superiority of a PowerPC-based computer running a Taligent operating system with ScriptX onboard. New Apple Macintosh models would also use the PowerPC, but the relationship between them and these other, Taligent-powered machines remained vague.

Indeed, it was all horribly confusing. “What Taligent is doing is not designed to replace the Macintosh,” said Sculley. “Instead we think it complements and enhances its usefulness.” But what on earth did that empty corporate speak even mean? When Apple said out of the blue that they were “not going to do to the Macintosh what we did to the Apple II” — i.e., orphan it — it rather made you suspect that that was exactly what they meant to do. And what did it all mean for IBM’s OS/2, which Big Blue had been telling a decidedly unconvinced public was also the future of personal computing for several years now? “I think the message in those agreements for the future of OS/2 is that it no longer has a future,” said one analyst. And then, what were Kaleida and this ScriptX thing actually supposed to do?

So much of the agreement seemed so hopelessly vague. Compaq’s vice president declared that Apple and IBM must be “smoking dope. There’s no way it’s going to work.” One pundit called the whole thing “a con job. There’s no software, there’s no operating system. It’s just a last gasp of extinction by the giants that can’t keep up with Intel.” Apple’s own users were baffled and consternated by this sudden alliance with the company which they had been schooled to believe was technological evil incarnate. A grim joke made the rounds: what do you get when you cross Apple and IBM? The answer: IBM.

While the journalists reported and the pundits pontificated, it was up to the technical staff at Apple, IBM, and Motorola to make PowerPC computers a reality. Like their colleagues who had negotiated the deal, they all got along surprisingly well; once one pushed past the surface stereotypes, they were all just engineers trying to do the best work possible. Apple’s management wanted the first PowerPC-based Macintosh models to ship in January of 1994, to commemorate the platform’s tenth anniversary by heralding a new technological era. The old Project Cognac team, now with the new code name of “Piltdown Man” after the famous (albeit fraudulent) “missing link” in the evolution of humanity, was responsible for making this happen. For almost a year, they worked on porting MacOS to the PowerPC, as they’d previously done to the 88000. This time, though, they had no real hardware with which to work, only specifications and software emulators. The first prototype chips finally arrived on September 3, 1992, and they redoubled their efforts, pulling many an all-nighter. Thus MacOS booted up to the desktop for the first time on a real PowerPC-based machine just in time to greet the rising sun on the morning of October 3, 1992. A new era had indeed dawned.

Their goal now was to make a PowerPC-based Macintosh work exactly like any other, only faster. MacOS wouldn’t even get a new primary version number for the first PowerPC release; this major milestone in Mac history would go under the name of System 7.1.2, a name more appropriate to a minor maintenance release. It looked so identical to what had come before that its own creators couldn’t spot the difference; they wound up lighting up a single extra pixel in the PowerPC version just so they could know which was which.

Their guiding rule of an absolutely seamless transition applied in spades to the 68000 emulation layer, duly ported from the 88000 to the PowerPC. An ordinary user should never have to think about — should not even have to know about — the emulation that was happening beneath the surface. Another watershed moment came in June of 1993, when the team brought a PowerPC prototype machine to the MacHack, a coding conference and competition. Without telling any of the attendees what was inside the machine, the team let them use it to demonstrate their boundary-pushing programs. The emulation layer performed beyond their most hopeful prognostications. It looked like the Mac’s new lease on life was all but a done deal from the engineering side of things.

But alas, the bonhomie exhibited by the partner companies’ engineers and programmers down in the trenches wasn’t so marked in their executive suites after the deal was signed. The very vagueness of so many aspects of the agreement had papered over what were in reality hugely different visions of the future. IBM, a company not usually given to revolutionary rhetoric, had taken at face value the high-flown words spoken at the announcement. They truly believed that the agreement would mark a new era for personal computing in general, with a new, better hardware architecture in the form of PowerPC and an ultra-modern operating system to run on it in the form of Taligent’s work. Meanwhile it was becoming increasingly clear that Apple’s management, who claimed to be changing the world five times before breakfast on most days, had in reality seen Taligent largely as a hedge in case their people should prove unable to create a PowerPC Macintosh that looked like a Mac, felt like a Mac, and ran vintage Mac software. As Project Piltdown Man’s work proceeded apace, Apple grew less and less enamored with those other, open-architecture ideas IBM was pushing. The Taligent people didn’t help their cause by falling headfirst into a pit of airy computer-science abstractions and staying mired there for years, all while Project Piltdown Man just kept plugging away, getting things done.

The first two and a half years of the 1990s were marred by a mild but stubborn recession in the United States, during which the PC industry had a particularly hard time of it. After the summer of 1992, however, the economy picked up steam and consumer computing eased into what would prove its longest and most sustained boom of all time, borne along on a wave of hype about CD-ROM and multimedia, along with the simple fact that personal computers in general had finally evolved to a place where they could do useful things for ordinary people in a reasonably painless way. (A bit later in the boom, of course, the World Wide Web would come along to provide the greatest impetus of all.)

And yet the position of both Apple and IBM in the PC marketplace continued to get steadily worse while the rest of their industry soared. At least 90 percent of the computers that were now being sold in such impressive numbers ran Microsoft Windows, leaving OS/2, MacOS, and a few other oddballs to divide the iconoclasts, the hackers, and the non-conformists of the world among themselves. While IBM continued to flog OS/2, more out of stubbornness than hope, Apple tried a little bit of everything to stop the slide in market share and remain relevant. Still not entirely certain whether their future lay with open architectures or their own closed, proprietary one, they started porting selected software to Windows, including most notably QuickTime, their much-admired tool for encoding and playing video. They even shipped a Mac model that could also run MS-DOS and Windows, thanks to an 80486 housed in its case alongside its 68040. And they entered into a partnership with the networking giant Novell to port MacOS itself to Intel hardware — a partnership that, like many Apple initiatives of these years, petered out without ultimately producing much of anything. Perhaps most tellingly of all, this became the only period in Apple’s history when the company felt compelled to compete solely on price. They started selling Macs in department stores for the first time, where a stream of very un-Apple-like discounts and rebates greeted prospective buyers.

While Apple thus toddled along without making much headway, IBM began to annihilate all previous conceptions of how much money a single company could possibly lose, posting oceans of red that looked more like the numbers found in macroeconomic research papers than entries in an accountant’s books. The PC marketplace was in a way one of their smaller problems. Their mainframe business, their real bread and butter since the 1950s, was cratering as customers fled to the smaller, cheaper computers that could often now do the jobs of those hulking giants just as well. In 1991, when IBM first turned the corner into loss, they did so in disconcertingly convincing fashion: they lost $2.82 billion that year. And that was only the beginning. Losses totaled $4.96 billion in 1992, followed by $8.1 billion in 1993. IBM lost more money during those three years alone than any other company in the history of the world to that point; their losses exceeded the gross domestic product of Ecuador.

The employees at both Apple and IBM paid the toll for the confusions and prevarications of these years: both companies endured rounds of major layoffs. Those at IBM marked the very first such in the long history of the company. Big Blue had for decades fostered a culture of employment for life; their motto had always been, “If you do your job, you will always have your job.” This, it was now patently obvious, was no longer the case.

The bloodletting at both companies reached their executive suites as well within a few months of one another. On April 1, 1993, John Akers, the CEO of IBM, was ousted after a seven-year tenure which one business writer called “the worst record of any chief executive in the history of IBM.” Three months later, following a terrible quarterly earnings report and a drop in share price of 58 percent in the span of six months, Michael Spindler replaced John Sculley as the CEO of Apple.

These, then, were the storm clouds under which the PowerPC architecture became a physical reality.

The first PowerPC computers to be given a public display bore an IBM rather than an Apple logo on their cases. They arrived at the Comdex trade show in November of 1993, running a port of OS/2. IBM also promised a port of AIX — their version of the Unix operating system — while Sun Microsystems announced plans to port their Unix-based Solaris operating system and, most surprisingly of all, Microsoft talked about porting over Windows NT, the more advanced, server-oriented version of their world-conquering operating environment. But, noted the journalists present, “it remains unclear whether users will be able to run Macintosh applications on IBM’s PowerPC” — a fine example of the confusing messaging the two alleged allies constantly trailed in their wake. Further, there was no word at all about the status of the Taligent operating system that was supposed to become the real PowerPC standard.

Meanwhile over at Apple, Project Piltdown Man was becoming that rarest of unicorns in tech circles: a major software-engineering project that is actually completed on schedule. The release of the first PowerPC Macs was pushed back a bit, but only to allow the factories time to build up enough inventory to meet what everyone hoped would be serious consumer demand. Thus the “Power Macs” made their public bow on March 14, 1994, at New York City’s Lincoln Center, in three different configurations clocked at speeds between 60 and 80 MHz. Unlike IBM’s machines, which were shown six months before they shipped, the Power Macs were available for anyone to buy the very next day.

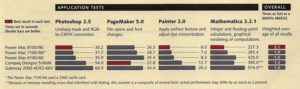

This speed test, published in MacWorld magazine, shows how all three of the Power Mac machines dramatically outperform top-of-the-line Pentium machines when running native code.

They were greeted with enormous excitement and enthusiasm by the Mac faithful, who had been waiting anxiously for a machine that could go head-to-head with computers built around Intel’s new Pentium chip, the successor to the 80486. This the Power Macs could certainly do; by some benchmarks at least, the PowerPC doubled the overall throughput of a Pentium. World domination must surely be just around the corner, right?

Predictably enough, the non-Mac-centric technology press greeted the machines’ arrival more skeptically than the hardcore Mac-heads. “I think Apple will sell [a] million units, but it’s all going to be to existing Mac users,” said one market researcher. “DOS and Windows running on Intel platforms is still going to be 85 percent of the market. [The Power Mac] doesn’t give users enough of a reason to change.” Another noted that “the Mac users that I know are not interested in using Windows, and the Windows users are not interested in using the Mac. There has to be a compelling reason [to switch].”

In the end, these more guarded predictions proved the most accurate. Apple did indeed sell an impressive spurt of Power Macs in the months that followed, but almost entirely to the faithful. One might almost say that they became a victim of Project Piltdown Man’s success: the Power Mac really did seem exactly like any other Macintosh, except that it ran faster. And even this fact could be obscured when running legacy applications under emulation, as most people were doing in the early months: despite Project Piltdown Man’s heroic efforts, applications like Excel, Word, and Photoshop actually ran slightly slower on a Power Mac than on a top-of-the-line 68040-based machine. So, while the transition to PowerPC allowed the Macintosh to persist as a viable computing platform, it ultimately did nothing to improve upon its small market share. And because the PowerPC MacOS was such a direct and literal port, it still retained all of the shortcomings of MacOS in general. It remained a pretty interface stretched over some almost laughably archaic plumbing. The new generation of Mac hardware wouldn’t receive an operating system truly, comprehensively worthy of it until OS X arrived seven long years later.

Still, these harsh realities shouldn’t be allowed to detract from how deftly Apple — and particularly the unsung coders of Project Piltdown Man — executed the transition. No one before had ever picked up a consumer-computing platform bodily and moved it to an entirely new hardware architecture at all, much less done it so transparently that many or most users never really had to think about what was happening at all. (There would be only one comparable example in computing’s future. And, incredibly, the Mac would once again be the platform in question: in 2006, Apple would move from the fading PowerPC line to Intel’s chips — if you can’t beat ’em, join ’em, right? — relying once again on a cleverly coded software emulator to see them through the period of transition. The Macintosh, it seems, has more lives than Lazarus.)

Although the briefly vaunted AIM alliance did manage to give the Macintosh a new lease on life, it succeeded in very little else. The PowerPC architecture, which had cost the alliance more than $1 billion to develop, went nowhere in its non-Mac incarnations. IBM’s own machines sold in such tiny numbers that the question of whether Apple would ever allow them to run MacOS was all but rendered moot. (For the record, though: they never did.) Sun Solaris and Microsoft Windows NT did come out in PowerPC versions, but their sales couldn’t justify their existence, and within a year or two they went away again. The bold dream of creating a new reference platform for general-purpose computing to rival Wintel never got off the ground, as it became painfully clear that said dream had been taken more to heart by IBM than by Apple. Only after the millennium would the PowerPC architecture find a measure of mass-market success outside the Mac, when it was adopted by Nintendo, Microsoft, and Sony for use in videogame consoles. In this form, then, it finally paid off for IBM; far more PowerPC-powered consoles than even Macs were sold over the lifetime of the architecture. PowerPC also eventually saw use in other specialized applications, such as satellites and planetary rovers employed by NASA.

Success, then, is always relative. But not so the complete lack thereof, as Kaleida and Taligent proved. Kaleida burned through $200 million before finally shipping its ScriptX multimedia-presentation engine years after other products, most notably Macromedia’s Director, had already sewn up that space; it was disbanded and harvested for scraps by Apple in November of 1995. Taligent burned through a staggering $400 million over the same period of time, producing only some tepid programming frameworks in lieu of the revolutionary operating system that had been promised, before being absorbed back into IBM.

There is one final fascinating footnote to this story of a Deal of the Century that turned out to be little more than a strange anecdote in computing history. In the summer of 1994, IBM, having by now stopped the worst of the bleeding and begun settling into their new life as a smaller, far less dominant company, offered to buy Apple outright for a premium of $5 over their current share price. In IBM’s view, the synergies made sense: the Power Macs were selling extremely well, which was more than could be said for IBM’s PowerPC models. Why not go all in?

Ironically, it was those same healthy sales numbers that scuppered the deal in the end. If the offer had come a year earlier, when a money-losing Apple was just firing John Sculley, they surely would have jumped at it. But now Apple was feeling their oats again, and by no means entirely without reason; sales were up more than 20 percent over the previous year, and the company was once more comfortably in the black. So, they told IBM thanks, but no thanks. The same renewed taste of success also caused them to reject serious inquiries from Philips, Sun Microsystems, and Oracle. Word had it that new CEO Michael Spindler was convinced not only that the Power Mac had saved Apple, but that it had fundamentally altered their position in the marketplace.

The following year revealed how misguided that thinking really was; the Power Mac had fixed none of Apple’s fundamental problems. That year it was Microsoft who cemented their world domination instead, with the release of Windows 95, while Apple grappled with the reality that almost all of those Power Mac sales of the previous year had been to existing members of the Macintosh family, not to the new customers they so desperately needed to attract. What happened now that everyone in the family had dutifully upgraded? The answer to that question wasn’t pretty: Apple plunged off a financial cliff as precipitous in its own way as the one which had nearly destroyed IBM a few years earlier. Now, nobody was interested in acquiring them anymore. The pundits smelled the stink of death; it’s difficult to find an article on Apple written between 1995 and 1998 which doesn’t include the adjective “beleaguered.” Why buy now when you can sift through the scraps at the bankruptcy auction in just a little while?

Apple didn’t wind up dying, of course. Instead a series of improbable events, beginning with the return of prodigal-son Steve Jobs in 1997, turned them into the richest single company in the world — yes, richer even than Microsoft. These are stories for other articles. But for now, it’s perhaps worth pausing for a moment to think about an alternate timeline where the Macintosh became an IBM product, and the Deal of the Century that got that ball rolling thus came much closer to living up to its name. Bizarre, you say? Perhaps. But no more bizarre than what really happened.

(Sources: the books Insanely Great: The Life and Times of Macintosh by Steven Levy, Apple Confidential 2.0: The Definitive History of the World’s Most Colorful Company by Owen W. Linzmayer, Infinite Loop: How the World’s Most Insanely Great Computer Company Went Insane by Michael S. Malone, Big Blues: The Unmaking of IBM by Paul Carroll, and The PowerPC Macintosh Book by Stephan Somogyi; InfoWorld of September 24 1990, October 15 1990, December 3 1990, April 8 1991, May 13 1991, May 27 1991, July 1 1991, July 8 1991, July 15 1991, July 22 1991, August 5 1991, August 19 1991, September 23 1991, September 30 1991, October 7 1991, October 21 1991, November 4 1991, December 30 1991, January 13 1992, January 20 1992, February 3 1992, March 9 1992, March 16 1992, March 23 1992, April 27 1992, May 11 1992, May 18 1992, June 15 1992, June 29 1992, July 27 1992, August 3 1992, August 10 1992, August 17 1992, September 7 1992, September 21 1992, October 5 1992, October 12 1992, October 19 1992, December 14 1992, December 21 1992, December 28 1992, January 11 1993, February 1 1993, February 22 1993, March 8 1993, March 15 1993, April 5 1993, April 12 1993, May 17 1993, May 24 1993, May 31 1993, June 21 1993, June 28 1993, July 5 1993, July 12 1993, July 19 1993, August 2 1993, August 9 1993, August 30 1993, September 6 1993, September 27 1993, October 4 1993, October 11 1993, October 18 1993, November 1 1993, November 15 1993, November 22 1993, December 6 1993, December 13 1993, December 20 1993, January 10 1994, January 31 1994, March 7 1994, March 14 1994, March 28 1994, April 25 1994, May 2 1994, May 16 1994, June 6 1994, June 27 1994; MacWorld of September 1992, February 1993, July 1993, September 1993, October 1993, November 1993, February 1994, and May 1994; Byte of November 1984. Online sources include IBM’s own corporate-history timeline and a vintage IBM lecture on the PowerPC architecture.)

Andrew Plotkin

February 7, 2020 at 6:45 pm

You’re describing the era when I first had a real job and could buy my own computers. Switching from a 68k Mac to a PowerMac was an exciting upgrade!

“The Macintosh, it seems, has more lives than Lazarus.”

Indeed, the smart money is on Apple undertaking *another* transition in the next few years, shifting from Intel to that obscure British “Acorn Risc Machine” architecture.

Not really relevant to the 90s, except to say that Apple now regards this sort of platform shift as a solved problem. Not easy, but manageable.

JP

February 7, 2020 at 9:32 pm

They also seem to have shed their preference to ease their customers’ transitions via backward compatibility; they’re now among the most ruthless deprecators in all of computing. 32 bit, gone! OpenGL support, gone! So if they do switch to ARM I’m sure it will be swift and brutal, yet the faithful will roll with it as they always do.

Andrew Plotkin

February 8, 2020 at 3:41 pm

Nope, that’s a serious misreading of history.

Apple has always worked hard to make the transition period as seamless as possible for users — for a fixed interval. After six or seven years, the transition is over and the support layer is axed. The burden is entirely on developers (not consumers) to keep up.

– PPC: Introduced in 1994 with 68k emulation; 68k emulator killed in 2001 (native OSX apps had to be PPC-native).

– OSX: Introduced in 2001 with Classic emulation; emulator killed in 2007.

– Intel: Introduced in 2006 with PPC emulation; emulator killed in 2011.

– 64-bit Intel: Introduced in 2012 alongside 32-bit support; 32-bit killed in 2019.

– Metal API: introduced in 2014 alongside OpenGL; OpenGL is now deprecated but still works.

The timelines are startlingly consistent if you map them out this way. If you take it as a guide, OpenGL will be dropped entirely in the next couple of years. (But it may not be. If you looked at Apple’s deprecation history of APIs, as opposed to application platforms, I think you’d see more variability.)

Anyway, my point is, an ARM transition will certainly be run the same way. They’ll roll it out with a “Rosetta” layer for Intel support; then about five years later, they’ll announce end-of-life for that. Active developers will shrug. People using out-of-date apps will write angry blog posts, just like they do every cycle.

Jimmy Maher

February 7, 2020 at 9:44 pm

Interesting. I assume the ultimate goal is to unify iOS and MacOS into one operating system…

JP

February 7, 2020 at 9:54 pm

Longer term, perhaps. In the nearer term they might try to move the Mac + macOS to their own ARM chips. “Vertical integration” is their strategy these days; as your article reminds us, Apple only collaborates with others when they’re in a position of weakness. Tight control of their platforms has given them their success in this era.

Then you’d have appliances (iPhones, iPads) running the same ARM chips as the general purpose computers (Macs), and the main differences iOS vs macOS serve are to consume and produce, respectively.

dsparil

February 8, 2020 at 5:59 pm

If Apple switches to ARM for Macs, it’s because they’re tired of having to deal with Intel being unable to supply new processors in a timely fashion, the same problem they once had with IBM. It should be noted that Apple switched to Intel because IBM was never able to produce a G5 with a low enough power draw to be usable in a laptop despite assurances, and that was the direction the market was moving. Similar cracks are forming now, since every computer line has run into issues with processor updates because of Intel in recent years. I honestly would not be surprised if the groundwork for a switch is already in place, considering the gains their ARM processors have made in recent years.

iOS and macOS are already basically the same operating system but running different UIs and with different security restrictions. Pretty much the entire stack excluding the base UI libraries and interface itself is the same. A new library was released in the last major version for creating unified iPad/macOS UIs, but it is feature incomplete and the results have been middling and unnatural at best. It might get some simpler iPad apps to have Mac versions, but I can’t see much else coming from it.

Joshua Buergel

February 7, 2020 at 9:13 pm

Starting with my first serious job in the computer industry, my first development machine was a PowerPC-based Windows NT machine, with my test machine being an Alpha-based NT box. This is what passed for “test coverage” at the two-person shop I started at, writing device driver toolkits in 1996. Both machines were egregiously bad, of course, but I did learn more than I wanted to about the vagaries of the Alpha compiler that I had to work with.

Alex Smith

February 7, 2020 at 9:28 pm

“The PowerPC architecture, which had cost the alliance more than $1 billion to develop, went nowhere in its non-Mac incarnations.”

This is actually not strictly true, as PowerPCs powered a couple hundred million game consoles. This trend started when Nintendo contracted with IBM in 1999 to create a CPU for what became the GameCube. The Gekko that forms the heart of the system uses a PowerPC architecture.

In the next console generation, all three major console companies, Microsoft, Sony, and Nintendo, contracted with IBM to create processors based on the PowerPC architecture for the Xbox 360, PS3, and Wii. An engineer at the company even wrote a book about the difficulties of working with Sony and Microsoft at the same time. Nintendo went PowerPC one more time with the Wii U. This is the last console as of now to use the architecture.

Jimmy Maher

February 7, 2020 at 9:43 pm

I didn’t realize that. That’s what comes of relying on books written in the 1990s. Thanks!

Lt. Nitpicker

February 8, 2020 at 12:03 am

Also, PowerPC has had a lot of embedded use outside of game consoles, like NASA rovers and satellites, networking gear (under the names PowerQUICC and QorIQ from the [ex]Motorola side of things), etc. Since you’re reading, it’s worth noting that Windows NT and Solaris never got ported to a Power Mac of any kind, running only on IBM’s oddball PowerPC machines, as shown in this comment from the OS/2 museum: https://www.os2museum.com/wp/os2-history/os2-warp-powerpc-edition/#comment-362778

Jimmy Maher

February 8, 2020 at 8:32 am

Although they weren’t officially supported, I *thought* it was possible to get them running on Mac hardware, in a sort of reversal of the Hackintosh scene of today. But I can’t find a definite reference, so I cut it. Thanks on all points!

Kaitain

February 8, 2020 at 2:17 am

Yep. Spent five years of my life developing for PowerPC consoles. In a masochistic way I enjoyed designing for the PS3’s PowerPC-based PPE units; it was a totally different approach to problem-solving, designing everything around the deferred execution of key calculations, and working out what jobs you could start now and then collect the results later.

Lt. Nitpicker

February 8, 2020 at 2:41 am

Cell’s weird but powerful parallel processing units were called SPEs, not PPE (which referred to the main processor), and they weren’t really PowerPC based, being a custom architecture created by Sony, Toshiba, and IBM for PS3 and other stuff. Still, considering how long it’s been by now since you developed for it, and the fact that the main processors in the SPEs were called SPUs, I’m not faulting you for forgetting it over time.

Andrew Pam

February 7, 2020 at 11:36 pm

“executive suits” looks like a typo for “executive suites”, but it kind of works as written!

Jimmy Maher

February 8, 2020 at 8:47 am

Sometimes my wit surprises even me. ;) Thanks!

Keith Palmer

February 7, 2020 at 11:46 pm

I’d known from your “1993 preview” you’d be discussing “setting the PC standard in an era ignoring IBM,” and had wondered if “Apple in the early 1990s” might lead into your look at Myst, but this piece was a surprise for me. It took a close look to turn up you mentioning “the only period in Apple’s history when the company felt compelled to compete solely on price”; before then, I’d been thinking of Apple beginning to introduce “low-end Macs” in 1990 with the Classic and LC… although when my family moved up from our Tandy Color Computer 3 to an LC II, that was several years after my father had managed to get an SE/30 with the company he worked for paying for it, so we probably amounted to one more example of “selling to the fixed base.” I have to admit to not noticing too many criticisms in the early 1990s about “the limitations of System 7”; the needling then seemed more the immemorial divide between “command lines as your underpinning” and “graphical user interface or bust.”

Other than that, I’d had the impression from Owen Linzmayer’s Apple Confidential “Piltdown Man” was just the codename for one of the first three “Power Macs,” the other two being codenamed “Cold Fusion” and “Carl Sagan” (at least until Sagan took umbrage at being lumped in with two dodgy bits of science, only for the codename saga to get yet more controversial…)

Jimmy Maher

February 8, 2020 at 8:44 am

According to Steven Levy’s Insanely Great, Piltdown Man was the initial project where all of the hard work of getting MacOS running under PowerPC, with emulation for legacy apps, was done. Only in the latter stages was it split three ways, as you say. The direct (and amusing) quote:

I preferred not to get too far down in the weeds on this in the article.

Apple did lower their prices with the introduction of the low-end Macs; the Mac Classic line in particular proved unexpectedly successful. But price still wasn’t the major selling point; you still got more bang for your buck with Wintel. By a few years later, however, Apple was making direct price/performance claims — a really odd thing to see for anyone who knows anything about the company. This was actually what did in John Sculley. They really couldn’t compete with Wintel in this way in the long term; their R&D costs were too high and their more specialized hardware was just too expensive to make. (Apple’s R&D budget alone generally ran at around 10 percent of gross sales, whereas that figure was more like 1 or 2 percent for a maker of Wintel machines. Maintaining your own operating system is expensive…) They were selling at break-even prices or worse, which put their bottom line in the red even as sales did increase somewhat.

Sean Barrett

February 8, 2020 at 1:42 pm

“No one before had ever picked up a computing platform bodily and moved it to an entirely new hardware architecture at all, much less done it so transparently that many or most users never really had to think about what was happening at all.”

Within the consumer space, no, but in fact in 1992 DEC began transitioning from VAX to RISC with the release of CPUs implementing their Alpha architecture, as well as binary translators that could convert VAX programs to Alpha programs. I don’t know how transparent the process was for VAX/VMS users, though.

Jimmy Maher

February 8, 2020 at 2:41 pm

I was wondering if someone would come up with another example. Blanket statements are always good for that. ;) Will add a “consumer” to cover myself. Thanks!

Casey Muratori

February 9, 2020 at 8:03 pm

Not really relevant because it didn’t become popular, but it’s also interesting to note that the DEC Alpha’s binary translation software (called “FX” if I remember correctly?) could actually run x86 code natively on Alpha by doing translation on the machine code. This worked surprisingly well considering x86 uses variable-length encoding. Thus, a person who had an Alpha running Windows NT could fire up an application never compiled for Alpha, and it would run alongside their Alpha-native programs.

Sadly, as with most things DEC, they had the best technology but couldn’t figure out how to actually capitalize on it, and DEC Alpha didn’t become a popular Windows NT platform. But if somehow that had actually happened, they would have outdone Apple twice over by your metric: picked up and moved _someone else’s_ ecosystem onto their platform, _and_ done it by direct translation rather than emulation, so the speed was mostly as good as native.

They succeeded in doing it, but not in convincing anyone to buy it :(

– Casey

David Boddie

February 9, 2020 at 10:08 pm

You jogged my memory there. You’re thinking of FX!32. I remembered that HP did something similar with their Dynamo project. Other examples can be found on Wikipedia’s Binary translation page.

calvin*

February 13, 2020 at 1:43 am

Another example: IBM’s own AS/400s to their own PowerPC processors – but it’s also easy when you intentionally designed the platform to be as CPU-neutral as possible…

Other examples: Pretty much all the other vendors (SGI and numerous others did 68k->MIPS, Sun did 68k->SPARC, HP did 68k and custom HP3000 processors to PA-RISC, even POWER existed to transition over AIX from its older, more primitive ROMP (also a RISC!) roots…)

whomever

February 13, 2020 at 2:42 pm

calvin: This is really getting in the weeds, but I’m not sure most of those examples are the same as they aren’t binary compatible; for example you can’t run a Sun 3 app on a Sun 4, or an HP9000 3x (68k based) on a PA-RISC (And having dealt with both these transitions, trust me, they aren’t totally code compatible either). The AS400 example is probably valid.

Mike

February 8, 2020 at 2:28 pm

For the most part, System 7’s multitasking didn’t lag behind Windows. Microsoft introduced full preemptive multitasking with Windows NT (released 1993). Earlier versions of Windows, starting with Windows/386 (released 1988), only used it to run MS-DOS applications, with native Windows software still using cooperative multitasking.

Jimmy Maher

February 8, 2020 at 2:38 pm

Ah, I’d forgotten that, despite writing a ten-part series on such things. Thanks!

Andrew Plotkin

February 8, 2020 at 4:38 pm

Not sure what you’re saying. System 7 didn’t have preemptive multitasking; Macs didn’t have it until OSX in 2001.

Jimmy Maher

February 8, 2020 at 4:46 pm

In the time frame of the article, Windows 3.x was current. It didn’t have preemptive multitasking either, except for MS-DOS applications. (Basically, the Windows GUI and all applications running under it ran as one task, each MS-DOS application — if any were running — as another task.) That changed with Windows NT in 1993, and then (and more significantly for our purposes) Windows 95 in 1995. From that point, Windows was clearly more advanced than MacOS in this respect, but not quite so much before, as I originally implied. That’s what he’s saying. ;)

sylvestrus

February 15, 2020 at 4:24 am

One of the things I find most confusing about Mac history is that a small number of Macs did have preemptive multitasking well before 2001: those running A/UX, a GUI nearly identical to System 6 and later System 7 running atop a kernel based on System V UNIX. It could run standard Mac applications (albeit without memory protection or preemptive multitasking), standard UNIX applications, or “hybrid” applications that presented a Mac interface but made use of memory protection and preemptive multitasking. The first version of A/UX shipped in 1988!

I often wonder why Apple did not end up using A/UX as the foundation of its new consumer operating system rather than embarking on the Copland project. Perhaps the licensing fees to AT&T were too high for a consumer operating system?

Thorsten

March 26, 2020 at 10:45 pm

Sorry, but I feel _very_ differently about this. My first encounter with Macs was with System 7-era PowerMacs, and the thing that literally shocked me about them was the complete lack of multitasking when it came to I/O. When you were copying files off those SyQuest cartridges to the Netware file server, you were bound to look at the copy progress meter for minutes and couldn’t do anything else with the machine. We had dedicated “Copying Macs” because of that – we had work to do and couldn’t block our working machines with single-task copying. At the same time, the Windows 95 machines attached to our inkjet poster printers were happily copying away multiple folders to multiple locations at a multiple of the speed while not missing a beat RIPping PostScript data and printing it at the same time.

I remember this so un-fondly because it drove me mad that a machine that cost a fraction of the Mac and was supposedly so much less advanced than it, as my coworkers kept telling me, was so obviously outperforming said Macs.

It was that cognitive dissonance on display that started me becoming platform agnostic, actually.

tedder

February 8, 2020 at 7:41 pm

This is getting into the era I remember, but not the storylines I was familiar with then; I didn’t start considering Apple seriously until OSX and it was much later I finally came on board. The 1981 ad wasn’t something I’d ever seen.

Now with the benefit of hindsight the story is much more compelling, not just because of Apple but the variation in processors and declining importance of consumer OSes generally.

I still want to put a SPARC on my homelab rack.

PJN

February 10, 2020 at 12:25 am

“if can’t beat ’em, join ’em, right?”. Should be “if you can’t” ?

Jimmy Maher

February 10, 2020 at 8:13 am

Yes. Thanks!

Brendan

February 21, 2020 at 7:27 am

For more info about the Mac’s RISC transition, the Computer History Museum’s oral history with Gary Davidian is fascinating. He worked at Apple on the Mac ROM and low-level OS, and was instrumental in writing the 68K emulator (first for the 88K; he then evaluated other chips, and then the executives came up with PPC). Fun tidbit: after the Loma Prieta earthquake (Oct. 1989), he couldn’t go to work for a week because of roads and the office building being damaged. He had just finished up other projects (IIci, Portable, IIfx), so he spent that week reading the 88K docs and thinking about how to emulate the 68K.

The very end of part 1 is when the RISC work starts. There are also transcripts online; the only real reason to watch the video is that he shows off the “RLC” prototype, a Mac LC with an 88K logic board.

https://www.youtube.com/watch?v=l_Go9D1kLNU

James

February 23, 2020 at 6:53 am

Looking back, Apple’s desperation in the mid-to-late ’90s spawned a curious initiative to license the MacOS. I know because I spent 1997-1998 working for a Mac clone company called UMAX Computer Corp. UMAX, Motorola, and a company called Power Computing all had their own lines of Mac clones. The licensing program sounded interesting but was still highly restrictive. The CPU boards were all pretty much identical to Apple’s own hardware, but there was minor freedom to use more off-the-shelf PC parts like the power supply. UMAX at least tried to design some uniqueness into the exterior bezel, while Motorola and Power Computing’s bezels were more generic and PC-looking. I have a lot of fond memories of working at UCC with a lot of great people.

Of course it all came to a screeching halt when Steve Jobs returned to Apple and decided that the MacOS licensing program was a horrible idea.

Jonathan O

December 26, 2021 at 9:10 am

Just spotted this:

“Conservative and pragamatic to a fault…”

Jimmy Maher

December 27, 2021 at 7:37 am

Thanks!

_RGTech

August 27, 2025 at 10:08 pm

“The danger signs could already be seen in 1982, when an upstart company called Compaq”

Errr… 1983?

Jimmy Maher

September 4, 2025 at 5:44 pm

Thanks!