In 1994, the Consumer Electronics Show was a seemingly inviolate tradition among the makers of videogame consoles and game-playing personal computers. Name a landmark product, and chances were there was a CES story connected with it. The Atari VCS had been shown for the first time there in 1977; the Commodore 64 in 1982; the Amiga in 1984; the Nintendo Entertainment System in 1985; Tetris in 1988; the Sega Genesis in 1989; John Madden Football in 1990; the Super NES in 1991, just to name a few. In short, CES was the first and most important place for the videogame industry to show off its latest and greatest to an eager public.

For all that, though, few inside the industry had much good to say about the experience of actually exhibiting at the show. Instead of getting the plum positions these folks thought they deserved, their cutting-edge, transformative products were crammed into odd corners of the exhibit hall, surrounded by the likes of soft-porn and workout videos. The videogame industry’s patently second-class status may have been understandable once upon a time, when it was a tiny upstart on the media landscape with a decidedly uncertain future. But now, with it approaching the magic mark of $5 billion in annual revenues in the United States alone, its relegation at the hands of CES’s organizers struck its executives as profoundly unjust. Wherever and whenever they got together, they always seemed to wind up kibitzing about the hidebound CES people, who still lived in a world where toasters, refrigerators, and microwave ovens were the apex of technological excitement in the home.

They complained at length as well to Gary Shapiro, the man in charge of CES, but his cure proved worse than the disease. He and the other organizers of the 1993 Summer CES, which took place as usual in June in Chicago, promised to create some special “interactive showcase pavilions” for the industry. When the exhibitors arrived, they saw that their “pavilions” were more accurately described as tents, pitched in the middle of an unused parking lot. Pat Ferrell, then the editor-in-chief of GamePro magazine, recalls that “they put some porta-potties out there and a little snack stand where you could pick up a cookie. Everybody was like, ‘This is bullshit. This is like Afghanistan.’” It rained throughout the show, and the tents leaked badly, ruining several companies’ exhibits. Tom Kalinske, then the CEO of Sega of America, remembers that he “turned to my team and said, ‘That’s it. We’re never coming back here again.’”

Kalinske wasn’t true to his word; Sega was at both the Winter and Summer CES of the following year. But he was now ready and willing to listen to alternative proposals, especially after his and most other videogame companies found themselves in the basement of Chicago’s McCormick Place in lieu of the parking lot in June of 1994.

In his office at GamePro, Pat Ferrell pondered an audacious plan for getting his industry out of the basement. Why not start a trade show all his own? It was a crazy idea on the face of it — what did a magazine editor know about running a trade show? — but Ferrell could be a force of nature when he put his mind to something. He made a hard pitch to the people who ran his magazine’s parent organization: the International Data Group (IDG), who also published a wide range of other gaming and computing magazines, and already ran the Apple Macintosh’s biggest trade show under the banner of their magazine Macworld. They were interested, but skeptical whether he could really convince an entire industry to abandon its one proven if painfully imperfect showcase for such an unproven one as this. It was then that Ferrell started thinking about the brand new Interactive Digital Software Association. The latter would need money to fund the ratings program that was its first priority, then would need more money to do who knew what else in the future. Why not fund the IDSA with a trade show that would be a vast improvement over the industry’s sorry lot at CES?

Ferrell’s negotiations with the IDSA’s members were long and fraught, not least because CES seemed finally to be taking the videogame industry’s longstanding litany of complaints a bit more seriously. In response to a worrisome decline in attendance in recent editions of the summer show, Shapiro had decided to revamp his approach dramatically for 1995. After the usual January CES, there would follow not one but four smaller shows, each devoted to a specific segment of the larger world of consumer electronics. The videogame and consumer-computing industries were to get one of these, to take place in May in Philadelphia. So, the IDSA’s members stood at a fork in the road. Should they give CES one more chance, or should they embrace Ferrell’s upstart show?

The battle lines over the issue inside the IDSA were drawn, as usual, between Sega and Nintendo. Thoroughly fed up as he was with CES, Tom Kalinske climbed aboard the alternative train as soon as it showed up at the station. But Howard Lincoln of Nintendo, very much his industry’s establishment man, wanted to stick with the tried and true. To abandon a known commodity like CES, which over 120,000 journalists, early adopters, and taste-makers were guaranteed to visit, in favor of an untested concept like this one, led by a man without any of the relevant organizational experience, struck him as the height of insanity. Yes, IDG was willing to give the IDSA a five-percent stake in the venture, which was five percent more than it got from CES, but what good was five percent of a failure?

In the end, the majority of the IDSA decided to place their faith in Ferrell in spite of such reasonable objections as these — such was the degree of frustration with CES. Nintendo, however, remained obstinately opposed to the new show. Everyone else could do as they liked; Nintendo would continue going to CES, said Lincoln.

And so Pat Ferrell, a man with a personality like a battering ram, decided to escalate. He didn’t want any sort of split decision; he was determined to win. He scheduled his own show — to be called the Electronic Entertainment Expo, or E3 — on the exact same days in May for which Shapiro had scheduled his. Bet hedging would thus be out of the question; everyone would have to choose one show or the other. And E3 would have a critical advantage over its rival: it would be held in Los Angeles rather than Philadelphia, making it a much easier trip not only for those in Silicon Valley but also for those Japanese hardware and software makers thinking of attending with their latest products. He was trying to lure one Japanese company in particular: Sony, who were known to be on the verge of releasing their first ever videogame console, a cutting-edge 32-bit machine which would use CDs rather than cartridges as its standard storage medium.

Sony finally cast their lot with E3, and that sealed the deal. Pat Ferrell:

My assistant Diana comes into my office and she goes, “Gary Shapiro’s on the phone.” I go, “Really?” So she transfers it. Gary says, “Pat, how are you?” I say, “I’m good.” He says, “You win.” And he hangs up.

E3 would go forward without any competition in its time slot.

Howard Lincoln, a man accustomed to dictating terms rather than begging favors, was forced to come to Ferrell and ask what spots on the show floor were still available. He was informed that Nintendo would have to content themselves with the undesirable West Hall of the Los Angeles Convention Center, a space that hadn’t been remodeled in twenty years, instead of the chic new South Hall where Sony and Sega’s big booths would have pride of place. Needless to say, Ferrell enjoyed every second of that conversation.

Michael Jackson, who was under contract to Sony’s music division, made an appearance at the first E3 to lend his star power to the PlayStation.

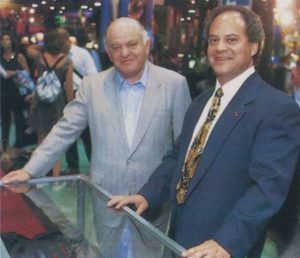

Jack and Sam Tramiel of Atari were also present. Coming twelve years after the Commodore 64’s commercial breakout, this would be one of Jack’s last appearances in the role of a technology executive. Suffice to say that it had been a long, often rocky road since then.

Lincoln’s doubts about Ferrell’s organizational acumen proved misplaced; the first E3 went off almost without a hitch from May 11 through 13, 1995. There were no fewer than 350 exhibitors — large, small, and in between — along with almost 38,000 attendees. There was even a jolt of star power: Michael Jackson could be seen in Sony’s booth one day. The show will be forever remembered for the three keynote addresses that opened proceedings — more specifically, for the two utterly unexpected bombshell announcements that came out of them.

First up on that first morning was Tom Kalinske, looking positively ebullient as he basked in the glow of having forced Howard Lincoln and Nintendo to bend to his will. Indeed, one could make the argument that Sega was now the greatest single power in videogames, with a market share slightly larger than Nintendo’s and the network of steadfast friends and partners that Nintendo’s my-way-or-the-highway approach had prevented them from acquiring to the same degree. Settling comfortably into the role of industry patriarch, Kalinske began by crowing about the E3 show itself:

E3 is a symbol of the changes our industry is experiencing. Here is this great big show solely for interactive entertainment. CES was never really designed for us. It related better to an older culture. It forced some of the most creative media companies on earth to, at least figuratively, put on gray flannel suits and fit into a TV-buying, furniture-selling mold. Interactive entertainment has become far more than just an annex to the bigger electronics business. Frankly, I don’t miss the endless rows of car stereos and cellular phones.

We’ve broken out to become a whole new category — a whole new culture, for that matter. This business resists hard and fast rules; it defies conventional wisdom.

After talking at length about the pace at which the industry was growing (the threshold of $5 billion in annual sales would be passed that year, marking a quintupling in size since 1987) and the demographic changes it was experiencing (only 51 percent of Sega’s sales had been to people under the age of eighteen in 1994, compared with 62 percent in 1992), he dropped his bombshell at the very end of his speech: Sega would be releasing their own new 32-bit, CD-based console, the Saturn, right now instead of on the previously planned date of September 2. In fact, the very first units were being set out on the shelves of four key retailers — Toys “R” Us, Babbage’s, Electronics Boutique, and Software Etc. — as he spoke, at a price of $399.

Halfhearted claps and cheers swept the assembly, but the dominant reaction was a palpable consternation. Many of the people in the audience were working on Saturn games, yet had been told nothing of the revised timetable. Questions abounded. Why the sudden change? And what games did Sega have to sell alongside the console? The befuddlement would soon harden into anger in many cases, as studios and publishers came to feel that they’d been cheated of the rare opportunity that is the launch of a new console, when buyers are excited and have already opened their wallets wide, and are much less averse than usual to opening them a little wider and throwing a few extra games into their bag along with their shiny new hardware. Just like that, the intra-industry goodwill which Sega had methodically built over the course of years evaporated like air out of a leaky tire. Kalinske would later claim that he knew the accelerated timetable was a bad idea, but was forced into it by Sega’s Japanese management, who were desperate to steal the thunder of the Sony PlayStation.

Speaking of which: next up was Sony, the new rider in this particular rodeo, whose very presence on this showcase stage angered such other would-be big wheels in the console space as Atari, 3DO, and Philips, none of whom were given a similar opportunity to speak. Sony’s keynote was delivered by Olaf Olafsson, a man of extraordinary accomplishment by any standard, one of those rare Renaissance Men who still manage to slip through the cracks of our present Age of the Specialist: in addition to being the head of Sony’s new North American console operation, he was a trained physicist and a prize-winning author of literary novels and stories. His slick presentation emphasized Sony’s long history of innovation in consumer electronics, celebrating such highlights as the Walkman portable cassette player and the musical compact disc, whilst praising the industry his company was now about to join with equal enthusiasm: “We are not in a tent. Instead we are indoors at our own trade show. Industry momentum, accelerating to the tune of $5 billion in annual sales, has moved us from the CES parking lot.”

Finally, Olafsson invited Steve Race, the president of Sony Computer Entertainment of America, to deliver a “brief presentation” on the pricing of the new console. Race stepped onstage and said one number: “$299.” Then he dropped the microphone and walked offstage again, as the hall broke out in heartfelt, spontaneous applause. A $299 PlayStation — i.e., a PlayStation $100 cheaper than the Sega Saturn, its most obvious competitor — would transform the industry overnight, and everyone present seemed to realize this.

The Atari VCS had been the console of the 1970s, the Nintendo Entertainment System the console of the 1980s. Now, the Sony PlayStation would become the console of the 1990s.

“For a company that is so new to the industry, I would have hoped that Sony would have made more mistakes by now,” sighed Trip Hawkins, the founder of the luckless 3DO, shortly after Olafsson’s dramatic keynote. Sam Tramiel, president of the beleaguered Atari, took a more belligerent stance, threatening to complain to the Federal Trade Commission about Sony’s “dumping” on the American market. (It would indeed later emerge that Sony sold the PlayStation essentially at cost, relying on game-licensing royalties for their profits. The question of whether doing so was actually illegal, however, was another matter entirely.)

Nintendo was unfortunate enough to have to follow Sony’s excitement. And Howard Lincoln’s keynote certainly wouldn’t have done them any favors under any circumstances: it had a downbeat, sour-grapes vibe about it, which stood out all the more in contrast to what had just transpired. Lincoln had no big news to impart, and spent the vast majority of his time on an interminable, hectoring lecture about the scourge of game counterfeiting and piracy. His tone verged on the paranoid: there should be “no safe haven for pirates, whether they board ships as in the old days or manufacture fake products in violation of somebody else’s copyrights”; “every user is a potential illegal distributor”; “information wants to be free [is an] absurd rationalization.” He was like the parent who breaks up the party just as it’s really getting started — or, for that matter, like the corporate lawyer he was by training. The audience yawned and clapped politely and waited for him to go away.

The next five years of videogame-console history would be defined by the events of this one morning. The Sega Saturn was a perfectly fine little machine, but it would never recover from its botched launch. Potential buyers were as confused as developers by its premature arrival, those retailers who weren’t among the initial four chosen ones were deeply angered, and the initial library of games was as paltry as everyone had feared — and then there was the specter of the $299 PlayStation close on the horizon for any retail-chain purchasing agent or consumer who happened to be on the fence. That Christmas season, the PlayStation was launched with a slate of games and an advertising campaign that were masterfully crafted to nudge the average age of the videogame demographic that much further upward, by drawing heavily from the youth cultures of rave and electronica, complete with not-so-subtle allusions to their associated drug cultures. The campaign said that, if Nintendo was for children and Sega for adolescents, the PlayStation was the console for those in their late teens and well into their twenties. Keith Stuart, a technology columnist for the Guardian, has written eloquently of how Sony “saw a future of post-pub gaming sessions, saw a new audience of young professionals with disposable incomes, using their formative working careers as an extended adolescence.” It was an uncannily prescient vision.

Sony’s advertising campaign for the PlayStation leaned heavily on heroin chic. No one had ever attempted to sell videogames in this way before.

The PlayStation outsold the Saturn by more than ten to one. Thus did Sony eclipse Sega at the top of the videogame heap; they would remain there virtually unchallenged until the launch of Microsoft’s Xbox in 2001. By that time, Sega was out of the console-hardware business entirely, following a truly dizzying fall from grace. Meanwhile Nintendo just kept trucking along in their own little world, much as Howard Lincoln had done at that first E3, subsisting on Mario and Donkey Kong and their lingering family-friendly reputation. When their own next-generation console, the Nintendo 64, finally appeared in 1996, the PlayStation outsold it by a margin of three to one.

In addition to its commercial and demographic implications, the PlayStation wrought a wholesale transformation in the very nature of console-based videogames themselves. It had been designed from the start for immersive 3D presentations, rather than the 2D, sprite-based experiences that held sway on the 8- and 16-bit console generations. When paired with its commercial success, the sheer technical leap the PlayStation represented over what had come before made it easily the most important console since the NES. In an alternate universe, one might have made the same argument for the Sega Saturn or even the Nintendo 64, both of which had many of the same capabilities — but it was the PlayStation that sold to the tune of more than 100 million units worldwide over its lifetime, and that thus gets the credit for remaking console gaming in its image.

The industry never gave CES another glance after the success of that first E3 show; even those computer-game publishers who were partisans of the Software Publishers Association and the Recreational Software Advisory Council rather than the IDSA and ESRB quickly made the switch to a venue where they could be the main attraction rather than a sideshow. Combined with the 3D capabilities of the latest consoles, which allowed them to run games that would previously have been possible only on computers, this change in trade-show venues marked the beginning of a slow convergence of computer games and console-based videogames. By the end of the decade, more titles than ever before would be available in versions for both computers and consoles. Thus computer gamers learned anew how much fun simple action-oriented games could be, even as console gamers developed a taste for the more extended, complex, and/or story-rich games that had previously been the exclusive domain of personal computers. Today, even most hardcore gamers make little to no distinction between the terms “computer game” and “videogame.”

It would be an exaggeration to claim that all of these disparate events stemmed from one senator’s first glimpse of Mortal Kombat in late 1993. And yet at least the trade show whose first edition set the ball rolling really does owe its existence to that event by a direct chain of happenstance; it’s very hard to imagine an E3 without an IDSA, and hard to imagine an IDSA at this point in history without government pressure to come together and negotiate a universal content-rating system. Within a few years of the first E3, IDG sold the show in its entirety to the IDSA, which has run it ever since. It has continued to grow in size and glitz and noise with every passing year, remaining always the place where deals are made and directions are plotted, and where eager gamers look for a glimpse of some of their possible futures. How strange to think that E3’s stepfather is Joseph Lieberman. I wonder if he’s aware of his accomplishment. I suspect not.

Postscript: Violence and Videogames

Democracy is often banal to witness up-close, but it has an odd way of working things out in the end. It strikes me that this is very much the case with the public debate begun by Senator Lieberman. As allergic as I am to all of the smarmy “Think of the children!” rhetoric that was deployed on December 9, 1993, and as deeply as I disagree with many of the political positions espoused by Senator Lieberman in the decades since, it was high time for a rating system — not in order to censor games, but to inform parents and, indeed, all of us about what sort of content each of them contained. The industry was fortunate that a handful of executives were wise enough to recognize and respond to that need. The IDSA and ESRB have done their work well. Everyone involved with them can feel justifiably proud.

There was a time when I imagined leaving things at that; it wasn’t necessary, I thought, to come to a final conclusion about the precise nature of the real-world effects of violence in games in order to believe that parents needed and deserved a tool to help them make their own decisions about what games were appropriate for their children. I explained this to my wife when I first told her that I was planning to write this series of articles — explained to her that I was more interested in recording the history of the 1993 controversy and its enormous repercussions than in taking a firm stance on the merits of the arguments advanced so stridently by the “expert panel” at that landmark first Senate hearing. But she told me in no uncertain terms that I would be leaving the elephant in the room unaddressed, leaving Chekhov’s gun unfired on the mantel… pick your metaphor. She eventually brought me around to her point of view, as she usually does, and we agreed to dive into the social-science literature on the subject.

Having left academia behind more than ten years ago, I’d forgotten how bitterly personal its feuds could be. Now, I was reminded: we found that the psychological community is riven with if anything even more dissension on this issue than is our larger culture. The establishment position in psychology is that games and other forms of violent media do have a significant effect on children’s and adolescents’ levels of aggressive behavior. (For better or for worse, virtually all of the extant studies focus exclusively on young people.) The contrary position, of course, is that they do not. A best case for the anti-establishmentarians would have them outnumbered three to one by their more orthodox peers. A United States Supreme Court case from 2011 provides a handy hook for summarizing the opposing points of view.

The case in question actually goes back to 2005, when a sweeping law was enacted in California which made it a crime to sell games that were “offensive to the community” or that depicted violence of an “especially heinous, cruel, or depraved” stripe to anyone under the age of eighteen. The law required that manufacturers and sellers label games that fit these rather subjective criteria with a large sticker that showed “18” in numerals at least two inches square. In an irony that plenty of people noted at the time, the governor who signed the bill into law was Arnold Schwarzenegger, who was famous for starring in a long string of ultra-violent action movies.

The passage of the law touched off an extended legal battle which finally reached the Supreme Court six years later. Opponents of the law charged that it was an unconstitutional violation of the right to free speech, and that it was particularly pernicious in light of the way it targeted one specific form of media, whilst leaving, for example, the sorts of films to which Governor Schwarzenegger owed his celebrity unperturbed. Supporters of the law countered that the interactive nature of videogames made them fundamentally different — fundamentally more dangerous — than those older forms of media, and that they should be treated as a public-health hazard akin to cigarettes rather than like movies or books. The briefs submitted by social scientists on both sides provide an excellent prism through which to view the ongoing academic debate on videogame violence.

In a brief submitted in support of the law, the California Chapter of the American Academy of Pediatrics and the California Psychological Association stated without hesitation that “scientific studies confirm that violent video games have harmful effects [on] minors”:

Viewing violence increases aggression and greater exposure to media violence is strongly linked to increases in aggression.

Playing a lot of violent games is unlikely to turn a normal youth with zero, one, or even two other risk factors into a killer. But regardless of how many other risk factors are present in a youth’s life, playing a lot of violent games is likely to increase the frequency and the seriousness of his or her physical aggression, both in the short term and over time as the youth grows up. These long-term effects are a consequence of powerful observational learning and desensitization processes that neuroscientists and psychologists now understand to occur automatically in the human child. Simply stated, “adolescents who expose themselves to greater amounts of video game violence were more hostile, reported getting into arguments with teachers more frequently, were more likely to be involved in physical fights, and performed more poorly in school.”

In a recent book, researchers once again concluded that the “active participation” in all aspects of violence: decision-making and carrying out the violent act [sic], result in a greater effect from violent video games than a violent movie. Unlike a passive observer in movie watching, in first-person shooter and third-person shooter games, you’re the one who decides whether to pull the trigger or not and whether to kill or not. After conducting three very different kinds of studies (experimental, a cross-sectional correlational study, and a longitudinal study) the results confirmed that violent games contribute to violent behavior.

The relationship between media violence and real-life aggression is nearly as strong as the impact of cigarette smoking and lung cancer: not everyone who smokes will get lung cancer, and not everyone who views media violence will become aggressive themselves. However, the connection is significant.

One could imagine these very same paragraphs being submitted in support of Senator Lieberman’s videogame-labeling bill of 1994; the rhetoric of the videogame skeptics hasn’t changed all that much since then. But, as I noted earlier, there has emerged a coterie of other, usually younger researchers who are less eager to assert such causal linkages as proven scientific realities.

Thus another, looser amalgamation of “social scientists, medical scientists, and media-effects scholars” countered in their own court brief that the data didn’t support such sweeping conclusions, and in fact pointed in the opposite direction in many cases. They unspooled a long litany of methodological problems, researcher biases, and instances of selective data-gathering which, they claimed, their colleagues on the other side of the issue had run afoul of, and cited studies of their own that failed to prove or even disproved the linkage the establishmentarians believed was so undeniable.

In a recent meta-analytic study, Dr. John Sherry concluded that while there are researchers in the field who “are committed to the notion of powerful effects,” they have been unable to prove such effects; that studies exist that seem to support a relationship between violent video games and aggression but other studies show no such relationship; and that research in this area has employed varying methodologies, thus “obscuring clear conclusions.” Although Dr. Sherry “expected to find fairly clear, compelling, and powerful effects,” based on assumptions he had formed regarding video game violence, he did not find them. Instead, he found only a small relationship between playing violent video games and short-term arousal or aggression, and further found that this effect lessened the longer one spent playing video games.

Such small and inconclusive results prompted Dr. Sherry to ask: “[W]hy do some researchers continue to argue that video games are dangerous despite evidence to the contrary?” Dr. Sherry further noted that if violent video games posed such a threat, then the increased popularity of the games would lead to an increase in violent crime. But that has not happened. Quite the opposite: during the same period that video game sales, including sales of violent video games, have risen, youth violence has dramatically declined.

“The causation research can be done, and, indeed, has been done,” the brief concludes, and “leaves no empirical foundation for the assertion that playing violent video games causes harm to minors.”

The Supreme Court ruled against California, striking down the law as a violation of the First Amendment by a vote of seven to two. I’m more interested today, however, in figuring out what to make of these two wildly opposing views of the issue, both from credentialed professionals.

Before going any further, I want to emphasize that I came to this debate with what I honestly believe to have been an open mind. Although I enjoy many types of games, I have little personal interest in the most violent ones. It didn’t — and still doesn’t — strike me as entirely unreasonable to speculate that a steady diet of ultra-violent games could have some negative effects on some impressionable young minds. If I had children, I would — still would — prefer that they play games that don’t involve running around as an embodied person killing other people in the most visceral manner possible. But the indisputable scientific evidence that might give me a valid argument for imposing my preferences on others under any circumstances whatsoever just isn’t there, despite decades of earnest attempts to collect it.

The establishmentarians’ studies are shot through with biases that I will assume do not distort the data itself, but that can distort interpretations of that data. Another problem, one which I didn’t fully appreciate until I began to read some of the studies, is the sheer difficulty of conducting scientific experiments of this sort in the real world. The subjects of these studies are not mice in a laboratory whose every condition and influence can be controlled, but everyday young people living their lives in a supremely chaotic environment, being bombarded with all sorts of mediated and non-mediated influences every day. How can one possibly filter out all of that noise? The answer is, imperfectly at best. Bear with me while I cite just one example of (what I find to be) a flawed study. (For those who are interested in exploring further, a complete list of the studies which my wife and I examined can be found at the bottom of this article. The Supreme Court briefs from 2011 are also full of references to studies with findings on both sides of the issue.)

In 2012, Ontario’s Brock University published a “Longitudinal Study of the Association Between Violent Video Game Play and Aggression Among Adolescents.” It followed 1492 students, chosen as a demographic reflection of Canadian society as a whole, through their high-school years — i.e., from age fourteen or fifteen to age seventeen or eighteen. They filled out a total of four annual questionnaires over that period, which asked them about their social, familial, and academic circumstances, asked how likely they felt they were to become violent in various hypothetical real-world situations, and asked about their videogame habits: i.e., what games they liked to play and how much time they spent playing them each day. For purposes of the study, “action fighting” games like God of War and our old friend Mortal Kombat were considered violent, but strategy games with “some violent aspects” like Rainbow Six and Civilization were not; ditto sports games. The study’s concluding summary describes a “small” correlation between aggression and the playing of violent videogames: a Pearson correlation coefficient “in the .20 range.” (On this scale, a perfect, one-to-one positive or negative correlation would be 1.0 or -1.0 respectively.) Surprisingly, it also describes a “trivial” correlation between aggression and the playing of nonviolent videogames: “mostly less than .10.”
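For readers who want a feel for what a Pearson coefficient of this size means, here is a minimal Python sketch. It is not the study's code: the function names `pearson_r` and `shared_variance` are my own, and the small data set is invented purely to exercise the formula. The one piece of real arithmetic is that squaring a coefficient gives the share of variance the two measures have in common, so an r of .20 corresponds to about 4 percent and an r of .10 to about 1 percent.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length, at least 2 items")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of co-deviations and sums of squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return cov / math.sqrt(var_x * var_y)


def shared_variance(r):
    """Proportion of variance two measures share, given their coefficient r."""
    return r * r


if __name__ == "__main__":
    # Invented numbers, NOT from the Brock study: a perfectly linear pairing
    # and its mirror image, to show the two ends of the scale.
    hours = [0, 1, 2, 3, 4]
    print(pearson_r(hours, [2 * h + 1 for h in hours]))
    print(pearson_r(hours, [10 - h for h in hours]))

    # The two magnitudes the study's summary reports.
    for r in (0.20, 0.10):
        print(f"r = {r:.2f} -> shared variance = {shared_variance(r):.2f}")
```

The squared figure is only a rough yardstick, but it makes plain how much of the variation in reported aggression is left unaccounted for even by the larger of the two coefficients.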

On the surface, it seems a carefully worked-out study which reveals an appropriately cautious conclusion. When we dig in a bit further, however, we can see a few significant methodological problems. The first is its reliance on subjective, self-reported questionnaire answers, which are as dubious here as they were under the RSAC rating system. And the second is the researchers’ subjective assignment of games to the categories of violent and non-violent. Nowhere is it described what specific games were put where, beyond the few examples I cited in my last paragraph. This opacity about exactly what games we’re really talking about badly confuses the issue, especially given that strange finding of a correlation between aggression and “nonviolent” games. Finally, that oft-forgotten truth that correlation is not causation must be considered. When we add third variables to the mix — a statistical method of filtering out non-causative correlations from a data set — the Pearson coefficient between aggressive behavior and violent games drops to around 0.06 — i.e., well below what the researchers themselves describe as “trivial” — and that for nonviolent games drops below the threshold of statistical noise; in fact, the coefficient for violent games is just one one-hundredth above that same threshold. For reasons which are perhaps depressingly obvious, the researchers choose to base their conclusions around their findings without third variables in the mix — just one of several signs of motivated reasoning to be found in their text.
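For readers who would like to see what adding a third variable can do to a correlation, here is a minimal sketch in Python. It runs on made-up data, not the Brock study's, and it uses the textbook first-order partial correlation, which may well differ from the statistical method the researchers actually applied. The variable names and the 0.5 weights are my own assumptions, chosen so that the raw coefficient lands near .20 even though neither quantity influences the other.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def partial_corr(xs, ys, zs):
    """Correlation of xs and ys after controlling for one third variable zs."""
    r_xy = pearson(xs, ys)
    r_xz = pearson(xs, zs)
    r_yz = pearson(ys, zs)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Synthetic (hypothetical) data: a shared background factor drives both
# game play and aggression, which have no direct link to each other.
rng = random.Random(0)
n = 5000
background = [rng.gauss(0, 1) for _ in range(n)]            # assumed third variable
game_play = [0.5 * b + rng.gauss(0, 1) for b in background]
aggression = [0.5 * b + rng.gauss(0, 1) for b in background]

raw = pearson(game_play, aggression)
controlled = partial_corr(game_play, aggression, background)

print(f"raw correlation:        {raw:.3f}")
print(f"with third variable:    {controlled:.3f}")
```

By construction, the raw coefficient should come out in the neighborhood of .20, while the controlled one should hover around zero. Real data is never this tidy, but the sketch shows how a "small" correlation can shrink to nearly nothing once a common cause is accounted for.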

In the interest of not belaboring the point, I’ll just say that other studies I looked at had similar issues. Take, for example, a study of 295 students in Germany with an average age of thirteen and a half years, who were each given several scenarios that could lead to an aggressive response in the real world and asked how they would react, whilst also being asked which of a list of 40 videogames they played and how often they did so. Two and a half years later, they were surveyed again — but by now the researchers’ list of popular videogames was hopelessly out of date. They were thus forced to revamp the questionnaire with a list of game categories, and to retrofit the old, more specific list of titles to match the new approach. I’m sympathetic to their difficulties; again, conducting experiments like this amidst the chaos of the real world is hard. Nevertheless, I can’t help but ask how worthwhile an experiment which was changed so much on the fly can really be. And yet for all the researchers’ contortions, it too reveals only the most milquetoast of correlations between real-world aggression and videogame violence.

Trying to make sense of the extant social-science literature on this subject can often feel like wandering a hall of mirrors. “Meta-analyses” — i.e., analyses that attempt to draw universal findings from aggregations of earlier studies — are everywhere, with conclusions seemingly dictated more by the studies that the authors have chosen to analyze and the emphasis they have chosen to place on different aspects of them than by empirical truth. Even more dismaying are the analyses that piggyback on an earlier study’s raw data set, from which they almost invariably manage to extract exactly the opposite conclusions. This business of studying the effects of videogame violence begins to feel like an elaborate game in itself, one that revolves around manipulating numbers in just the right way, one that is entirely divorced from the reality behind those numbers. The deeper I fell into the rabbit hole, the more one phrase kept ringing in my head: “Garbage In, Garbage Out.”

Both sides of the debate are prone to specious reasoning. Still, the burden of proof ultimately rests with those making the affirmative case: those who assert that violent videogames lead their players to commit real-world violence. In my judgment, they have failed to make that case in any sort of thoroughgoing, comprehensive way. Even after all these years and all these studies, the jury is still out. This may be because the assertion they are attempting to prove is incorrect, or it may just be because this sort of social science is so darn hard to do. Either way, the drawing of parallels between violent videogames and an indubitably proven public hazard like cigarettes is absurd.

Some of the more grounded studies do tell us that, if we want to find places where videogames can be genuinely harmful to individuals and by extension to society, we shouldn’t be looking at their degree of violence so much as the rote but addictive feedback loops so many of them engender. Videogame addiction, in other words, is probably a far bigger problem for society than videogame violence. So, as someone who has been playing digital games for almost 40 years, I’ll conclude by offering the following heartfelt if unsolicited advice to all other gamers, young and old:

Play the types of games you enjoy, whether they happen to be violent or nonviolent, but not for more than a couple of hours per day on average, and never at the expense of a real-world existence that can be so much richer and more rewarding than any virtual one. Make sure to leave plenty of space in your life as well for other forms of creative expression which can capture those aspects of the human experience that games tend to overlook. And make sure the games you play are ones which respect your time and are made for the right reasons — the ones which leave you feeling empowered and energized instead of enslaved and drained. Lastly, remember always the wise words of Dani Bunten Berry: “No one on their death bed ever said, ‘I wish I had spent more time alone with my computer!’” Surely that statement at least remains as true of Pac-Man as it is of Night Trap.

(Sources: the book The Ultimate History of Video Games by Steven L. Kent; Edge of August 1995; GameFan of July 1995; GamePro of June 1995 and August 1995; Next Generation of July 1995; Video Games of July 1995; Game Developer of August/September 1995. Online sources include Blake J. Harris’s “Oral History of the ESRB” at VentureBeat, “How E3 1995 Changed Gaming Forever” at Syfy Games, “The Story of the First E3” at Polygon, Game Zero’s original online coverage of the first E3, “Sega Saturn: How One Decision Destroyed PlayStation’s Greatest Rival” by Keith Stuart at The Guardian, an interview with Olaf Olafsson at The Nervous Breakdown, and a collection of vintage CES photos at The Verge. Last but certainly not least, Anthony P’s self-shot video of the first E3, including the three keynotes, is a truly precious historical document.

I looked at the following studies of violence and gaming: “Differences in Associations Between Problematic Video-Gaming, Video-Gaming Duration, and Weapon-Related and Physically Violent Behaviors in Adolescents” by Zu Wei Zhai, et al.; “Aggressive Video Games are Not a Risk Factor for Future Aggression in Youth: A Longitudinal Study” by Christopher J. Ferguson and C.K. John Wang; “Growing Up with Grand Theft Auto: A 10-Year Study of Longitudinal Growth of Violent Video Game Play in Adolescents” by Sarah M. Coyne and Laura Stockdale; “Aggressive Video Games are Not a Risk Factor for Mental Health Problems in Youth: A Longitudinal Study” by Christopher J. Ferguson and C.K. John Wang; “A Preregistered Longitudinal Analysis of Aggressive Video Games and Aggressive Behavior in Chinese Youth” by Christopher J. Ferguson; “Social and Behavioral Health Factors Associated with Violent and Mature Gaming in Early Adolescence” by Linda Charmaraman, et al.; “Reexamining the Findings of the American Psychological Association’s 2015 Task Force on Violent Media: A Meta-Analysis” by Christopher J. Ferguson, et al.; “Do Longitudinal Studies Support Long-Term Relationships between Aggressive Game Play and Youth Aggressive Behavior? A Meta-analytic Examination” by Aaron Drummond, et al.; “Technical Report on the Review of the Violent Video Game Literature” by the American Psychological Association; “Exposure to Violent Video Games and Aggression in German Adolescents: A Longitudinal Study” by Ingrid Möller and Barbara Krahé; “Metaanalysis of the Relationship between Violent Video Game Play and Physical Aggression over Time” by Anna T. Prescott, et al.; “The Effects of Reward and Punishment in Violent Video Games on Aggressive Affect, Cognition, and Behavior” by Nicholas L. Carnagey and Craig A. Anderson; “Violent Video Game Effects on Aggression, Empathy, and Prosocial Behavior in Eastern and Western Countries: A Meta-Analytic Review” by Craig A. Anderson, et al.; “Violent Video Games: The Effects of Narrative Context and Reward Structure on In-Game and Postgame Aggression” by James D. Sauer, et al.; “Internet and Video Game Addictions: Diagnosis, Epidemiology, and Neurobiology” by Clifford J. Sussman, et al.; “A Longitudinal Study of the Association Between Violent Video Game Play and Aggression Among Adolescents” by Teena Willoughby, et al. My wife dug these up for me with the help of her university hospital’s research librarian here in Denmark, but some, perhaps most, can be accessed for free via organs like PubMed. I would be interested in the findings of any readers who care to delve into this literature, particularly any of you who possess the social-science training I lack.)