The world’s first digital network actually predates the world’s first computer, in the sense that we understand the word “computer” today.

It began with a Bell Labs engineer named George Stibitz, who worked on the electro-mechanical relays that were used to route telephone calls. One evening in late 1937, he took a box of parts home with him and started to put together on his kitchen table a contraption that distinctly resembled the one that Claude Shannon had recently described in his MIT master’s thesis. By the summer of the following year, it worked well enough that Stibitz took it to the office to show it around. In a testament to the spirit of freewheeling innovation that marked life at Bell Labs, his boss promptly told him to take a break from telephone switches and see if he could turn it into a truly useful calculating machine. The result emerged fifteen months later as the Complex Computer, made from some 450 telephone relays and many other off-the-shelf parts from telephony’s infrastructure. It was slow, as all machines of its electro-mechanical ilk inevitably were: it took about one minute to multiply two eight-digit numbers together. And it was not quite as capable as the machine Shannon had described in print: it had no ability to make decisions at branch points, only to perform rote calculations. But it worked.

It is a little unclear to what extent the Complex Computer was derived from Shannon’s paper. Stibitz gave few interviews during his life. To my knowledge he never directly credited Shannon as his inspiration, but neither was he ever quizzed in depth about the subject. It strikes me as reasonable to grant that his initial explorations may have been entirely serendipitous, but one has to assume that he became aware of the Shannon paper after the Complex Computer became an official Bell Labs project; the paper was, after all, being widely disseminated and discussed at that time, and even the most cursory review of existing literature would have turned it up.

At any rate, another part of the Complex Computer project most definitely was completely original. Stibitz’s managers wanted to make the machine available to Bell and AT&T employees working all over the country. At first glance, this would have to entail making a lot more Complex Computers, at considerable cost, even though the individual offices that received them would only need to make use of them occasionally. Might there be a better way, Stibitz wondered. Might it be possible to let the entire country share a single machine instead?

Stibitz enlisted a more experienced switching engineer named Samuel B. Williams, who figured out how to connect the Complex Computer to a telegraph line. By this point, telegraphy’s old manually operated Morse keys had been long since replaced by teletype machines that looked and functioned like typewriters, doing the grunt work of translating letters into Morse Code for the operator; similarly, the various arcane receiving mechanisms of old had been replaced by a teleprinter.

The world’s first digital network made its debut in September of 1940, at a meeting of the American Mathematical Society that was held at Dartmouth College in New Hampshire. The attendees were given the chance to type out mathematical problems on the teletype, which sent them up the line as Morse Code to the Complex Computer installed at Bell Labs’s facilities in New York City. The latter translated the dots and dashes of Morse Code into numbers, performed the requested calculations, and sent the results back to Dartmouth, where they duly appeared on the teleprinter. The tectonic plates subtly shifted on that sunny September afternoon, while the assembled mathematicians nodded politely, with little awareness of the importance of what they were witnessing. The computer networks of the future would be driven by a binary code known as ASCII rather than Morse Code, but the principle behind them would be the same.

As it happened, Stibitz and Williams never took their invention much further; it never did become a part of Bell’s everyday operations. The war going on in Europe was already affecting research priorities everywhere, and was soon to make the idea of developing a networked calculating device simply for the purpose of making civilian phone networks easier to install and repair seem positively quaint. In fact, the Complex Computer was destined to go down in history as the last of its breed: the last significant blue-sky advance in American computing for a long time to come that wasn’t driven by the priorities and the funding of the national-security state.

That reality would give plenty of the people who worked in the field pause, for their own worldviews would not always be in harmony with those of the generals and statesmen who funded their projects in the cause of winning actual or hypothetical wars, with all the associated costs in human suffering and human lives. Nevertheless, as a consequence of this (Faustian?) bargain, the early-modern era of computers and computer networks in the United States is almost the polar opposite of that of telegraphy and telephony in an important sense: rather than being left to the private sphere, computing at the cutting edge became a non-profit, government-sponsored activity. The ramifications of this were and remain enormous, yet have become so embedded in the way we see computing writ large that we seldom consider them. Government funding explains, for example, why the very concept of a modern digital computer was never locked up behind a patent like the telegraph and the telephone were. Perhaps it even explains in a roundabout way why the digital computer has no single anointed father figure, no equivalent to a Samuel Morse or Alexander Graham Bell — for the people who made computing happen were institutionalists, not lone-wolf inventors.

Most of all, though, it explains why the World Wide Web, when it finally came to be, was designed to be open in every sense of the word, easily accessible from any computer that implements its well-documented protocols. Even today, long after the big corporations have moved in, a spirit of egalitarianism and idealism underpins the very technical specifications that make the Internet go. Had the moment when the technology was ripe to create an Internet not corresponded with the handful of decades in American history when the federal government was willing and able to fund massive technological research projects of uncertain ultimate benefit, the world we live in would be a very different place.

There is plenty of debate surrounding the question of the first “real” computer in the modern sense of the word, with plenty of fulsome sentiment on display from the more committed partisans. Some point to the machines built by Konrad Zuse in Nazi Germany in the midst of World War II, others to the ones built by the British code breakers at Bletchley Park around the same time. But the consensus, establishment choice has long been and still remains the American “Electronic Numerical Integrator and Computer,” or ENIAC. It was designed primarily by the physicist John Mauchly and the electrical engineer J. Presper Eckert at the University of Pennsylvania, and was funded by the United States Army for the purpose of calculating the ideal firing trajectories of artillery shells. Because building it was largely a process of trial and error from the time that the project was officially launched on June 1, 1943, it is difficult to give a precise date when ENIAC “worked” for the first time. It is clear, however, that it wasn’t able to do the job the Army expected of it until after the war that had prompted its creation was over. ENIAC wasn’t officially accepted by the Army until July of 1946.

ENIAC’s claim to being the first modern computer rests on the fact that it was the first machine to combine two key attributes: it was purely electrical rather than electro-mechanical — no clanking telephone relays here! — and it was Turing complete. The latter quality requires some explanation.

First defined by the British mathematician and proto-computer scientist Alan Turing in the 1930s, the phrase “Turing complete” describes a machine that is able to store numerical data in internal memory of some sort, perform calculations and transformations upon that data, and make conditional jumps in the program it is running based upon the results. Anyone who has ever programmed a computer of the present day is familiar with branching decision points such as BASIC’s “if, then” construction — if such-and-such is the case, then do this — as well as loops such as its “for, next” construction, which are used to repeat sections of a program multiple times. The ability to write such statements and see them carried out means that one is working on a Turing-complete computer. ENIAC was the first purely electrical computer that could deal with the contemporary equivalent of “if, then” and “for, next” statements, and thus the patriarch of the billions more that would follow.
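To make those three ingredients concrete, here is a minimal sketch in Python rather than BASIC. It is only an illustration, not a reconstruction of how ENIAC worked: the function name `sum_to` and the step numbering are my own inventions. It shows that a “for, next” loop needs nothing more than stored data, arithmetic, and a single conditional jump.

```python
def sum_to(n):
    """Add 1 + 2 + ... + n using only memory, arithmetic, and a conditional jump."""
    memory = {"total": 0, "i": 1, "n": n}  # numerical data held in internal memory
    pc = 0  # hypothetical "program counter": which numbered step runs next
    while True:
        if pc == 0:
            # The conditional jump: choose the next step based on the data.
            pc = 1 if memory["i"] <= memory["n"] else 3
        elif pc == 1:
            # The loop body: a calculation performed on the stored data.
            memory["total"] += memory["i"]
            pc = 2
        elif pc == 2:
            # The "next": bump the counter, then jump back to the test.
            memory["i"] += 1
            pc = 0
        else:
            # Step 3: the loop has finished, so hand back the result.
            return memory["total"]


print(sum_to(10))
```

Calling `sum_to(10)` yields 55. Take away the test at step 0 and the machine can only run straight through its steps once, which is roughly the position the Complex Computer was in.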

That said, there are ways in which ENIAC still fails to match our expectations of a computer — not just quantitatively, in the sense that it was 80 feet long, 8 feet tall, weighed 30 tons, and yet could manage barely one half of one percent of the instructions per second of an Apple II from the dawn of the personal-computing age, but qualitatively, in the sense that ENIAC just didn’t function like we expect a computer to do.

For one thing, it had no real concept of software. You “programmed” ENIAC by physically rewiring it, a process that generally consumed far more time than did actually running the program thus created. The room where it was housed looked like nothing so much as a manual telephone exchange from the old days, albeit on an enormous scale; it was a veritable maze of wires and plugboards. Perhaps we shouldn’t be surprised to learn, then, that its programmers were mostly women, next-generation telephone operators who wandered through the machine’s innards with clipboards in their hands, remaking their surroundings to match the schematics on the page.

Another distinction between ENIAC and what came later is more subtle, but in its way even more profound. If you were to ask the proverbial person on the street what distinguishes a computer program from any other form of electronic media, she would probably say something about its “interactivity.” The word has become inescapable, the defining adjective of the computer age: “interactive fiction,” “interactive learning,” “interactive entertainment,” etc. And yet ENIAC really wasn’t so interactive at all. It operated under what would later become known as the “batch-processing” model. After programming it — or, if you like, rewiring it — you fed it a chunk of data, then sat back and waited however long it took for the result to come out the metaphorical other side of the pipeline. And then, if you wished, you could feed it some more data, to be massaged in exactly the same way. Ironically, this paradigm is much closer to the literal meaning of the word “computer” than the one with which we are familiar; ENIAC was a device for computing things. No more and no less. This made it useful, but far from the mind-expanding anything machine that we’ve come to know as the computer.

Thus the story of computing in the decade or two after ENIAC is largely that of how these two paradigms — programming by rewiring and batch processing — were shattered to yield said anything machine. The first paradigm fell away fairly quickly, but the second would persist for years in many computing contexts.

In November of 1944, when ENIAC was still very much a work in progress, it was visited by John von Neumann. After immigrating to the United States from Hungary more than a decade earlier, von Neumann had become one of the most prominent intellectuals in the country, an absurdly accomplished mathematician and all-around genius for all seasons, with deep wells of knowledge in everything from atomic physics to Byzantine history. He was, writes computer historian M. Mitchell Waldrop, “a scientific superstar, the very Hollywood image of what a scientist ought to be, up to and including that faint, delicious touch of a Middle European accent.” A man who hobnobbed routinely with the highest levels of his adopted nation’s political as well as scientific establishment, he was now attached to the Manhattan Project that was charged with creating an atomic bomb before the Nazis could manage to do so. He came to see ENIAC in that capacity, to find out whether it or a machine like it might be able to help him and his colleagues with the fiendishly complicated calculations that were part and parcel of their work.

Truth be told, he was somewhat underwhelmed by what he saw that day. He was taken aback by the laborious rewiring that programming ENIAC entailed, and judged the machine to be far too balky and inflexible to be of much use on the Manhattan Project.

But discussion about what the next computer after ENIAC ought to be like was already percolating, so much so that Mauchly and Eckert had already given the unfunded, entirely hypothetical machine a catchy acronym: EDVAC, for “Electronic Discrete Variable Automatic Computer.” Von Neumann decided to throw his own hat into the ring, to offer up his own proposal for what EDVAC should be. Written betwixt and between his day job in the New Mexico desert, the resulting document laid out five abstract components of any computer. There must be a way of inputting data and a way of outputting it. There must be memory for storing the data, and a central arithmetic unit for performing calculations upon it. And finally, there must be a central control unit capable of executing programmed instructions and making conditional jumps.

But the paper’s real stroke of genius was its description of a new way of carrying out this programming, one that wouldn’t entail rewiring the computer. It should be possible, von Neumann wrote, to store not only the data a program manipulated in memory but the program itself. This way new programs could be input just the same way as other forms of data. This approach to computing — the only one most of us are familiar with — is sometimes called a “von Neumann machine” today, or simply a “stored-program computer.” It is the reason that, writes M. Mitchell Waldrop, the anything machine sitting on your desk today “can transform itself into the cockpit of a fighter jet, a budget projection, a chapter of a novel, or whatever else you want” — all without changing its physical form one iota.
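The stored-program idea is easier to appreciate with a toy in hand. What follows is a rough sketch of a machine in that spirit, not EDVAC’s actual design: the opcodes, the two-cell instruction format, and the names `run` and `multiply_image` are all assumptions of mine, made up for illustration. The point to notice is that the program and its data sit side by side in one list of numbers.

```python
# Hypothetical opcodes for a toy machine; each instruction fills two memory cells.
HALT, LOAD, ADD, SUB, STORE, JZ, JMP, OUT = range(8)


def run(memory):
    """Execute the program held in `memory` and return whatever it outputs."""
    memory = list(memory)  # the input: a memory image holding program AND data
    output = []            # the output device
    acc = 0                # the arithmetic unit's single working register
    pc = 0                 # the control unit's pointer to the next instruction
    while True:
        op, arg = memory[pc], memory[pc + 1]
        pc += 2
        if op == HALT:
            return output
        elif op == LOAD:
            acc = memory[arg]
        elif op == ADD:
            acc += memory[arg]
        elif op == SUB:
            acc -= memory[arg]
        elif op == STORE:
            memory[arg] = acc
        elif op == JZ:      # the conditional jump: taken only if acc is zero
            if acc == 0:
                pc = arg
        elif op == JMP:
            pc = arg
        elif op == OUT:
            output.append(memory[arg])


def multiply_image(a, b):
    """Build a memory image that multiplies a by b through repeated addition."""
    A, B, ONE, RESULT = 20, 21, 22, 23  # addresses of the data cells
    program = [
        LOAD, B,        # 0: fetch the countdown
        JZ, 16,         # 2: finished once it reaches zero
        SUB, ONE,       # 4: count down by one
        STORE, B,       # 6
        LOAD, RESULT,   # 8: add a to the running total
        ADD, A,         # 10
        STORE, RESULT,  # 12
        JMP, 0,         # 14: go around again
        OUT, RESULT,    # 16: report the answer
        HALT, 0,        # 18
    ]
    return program + [a, b, 1, 0]


print(run(multiply_image(6, 7)))
```

Running the image built by `multiply_image(6, 7)` produces `[42]`. Handing `run` a different list of numbers turns the same machine into a different calculator, with no rewiring required.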

Von Neumann began to distribute his paper, labeled a “first draft,” in late June of 1945, just three weeks before the Manhattan Project conducted the first test of an atomic bomb. The paper ignited a brouhaha that will ring all too familiar to readers of earlier articles in this series. Mauchly and Eckert had already resolved to patent EDVAC in order to exploit it for commercial purposes. They now rushed to do so, whilst insisting that the design had included the stored-program idea from the start, that von Neumann had in fact picked it up from them. Von Neumann himself begged to differ, saying it was all his own conception and filing a patent application of his own. Then the University of Pennsylvania entered the fray as well, saying it automatically owned any invention conceived by its employees as part of their duties. The whole mess was yet further complicated by the fact that the design of ENIAC, from which much of EDVAC was derived, had been funded by the Army, and was still considered classified.

Thus the three-way dispute wound up in the hands of the Army’s lawyers, who decided in April of 1947 that no one should get a patent. They judged that von Neumann’s paper constituted “prior disclosure” of the details of the design, effectively placing it in the public domain. The upshot of this little-remarked decision was that, in contrast to the telegraph and telephone among many other inventions, the abstract design of a digital electronic stored-program computer was to be freely available for anyone and everyone to build upon right from the start.[1]

[1] Inevitably, that wasn’t quite the end of it. Mauchly and Eckert continued their quest to win the patent they thought was their due, and were finally granted it at the rather astonishingly late date of 1964, by which time they were associated with the Sperry Rand Corporation, a maker of mainframes and minicomputers. But this victory only ignited another legal battle, pitting Sperry Rand against virtually every other company in the computer industry, who were not eager to start paying one of their competitors a royalty on every single computer they made. The patent was thrown out once and for all in 1973, primarily on the familiar premise that von Neumann’s paper constituted prior disclosure.

Mauchly and Eckert had left the University of Pennsylvania in a huff by the time the Army’s lawyers made their decision. Without its masterminds, the EDVAC project suffered delay after delay. By the time it was finally done in 1952, it did sport stored programs, but its thunder had been stolen by other computers that had gotten there first.

The Whirlwind computer in testing, circa 1950. Jay Forrester is second from left, Robert Everett the man standing by his side.

The first stored-program computer to be actually built was known as the Manchester Mark I, after the University of Manchester in Britain that was its home. It ran its first program in April of 1949, a landmark moment in the proud computing history of Britain, which stretches back to such pioneers as Charles Babbage and Ada Lovelace. But this series of articles is concerned with how the World Wide Web came to be, and that is primarily an American story prior to its final stages. So, I hope you will forgive me if I continue to focus on the American scene. More specifically, I’d like to turn to the Whirlwind, the first stored-program all-electrical computer to be built in the United States — and, even more importantly, the first to break away from the batch-processing paradigm.

The Whirlwind had a long history behind it by the time it entered regular service at MIT in April of 1951. It had all begun in December of 1944, when the Navy had asked MIT to build it a new flight simulator for its trainees, one that could be rewired to simulate the flight characteristics of any present or future model of aircraft. The task was given to Jay Forrester, a 26-year-old engineering graduate student who would never have been allowed near such a project if all of his more senior colleagues hadn’t been busy with other wartime tasks. He and his team struggled for months to find a way to meet the Navy’s expectations, with little success. Somewhat to his chagrin, the project wasn’t cancelled even after the war ended. Then, one afternoon in October of 1945, in the course of a casual chat on the front stoop of Forrester’s research lab, a representative of the Navy brass mentioned ENIAC, and suggested that a digital computer like that one might be the solution to his problems. Forrester took the advice to heart. “We are building a digital computer!” he barked to his bewildered team just days later.

Forrester’s chief deputy Robert Everett would later admit that they started down the road of what would become known as “real-time computing” only because they were young and naïve and had no clue what they were getting into. For all that it was the product of ignorance as much as intent, the idea was nevertheless an audacious conceptual leap for computing. A computer responsible for running a flight simulator would have to do more than provide one-off answers to math problems at its own lackadaisical pace. It would need to respond to a constant stream of data about the state of the airplane’s controls, to update a model of the world in accord with that data, and provide a constant stream of feedback to the trainee behind the controls. And it would need to do it all to a clock, fast enough to give the impression of real flight. It was a well-nigh breathtaking explosion of the very idea of what a computer could be — not least in its thoroughgoing embrace of interactivity, its view of a program as a constant feedback loop of input and output.

The project gradually morphed from a single-purpose flight simulator to an even more expansive concept, an all-purpose digital computer that would be able to run a variety of real-time interactive applications. Like ENIAC before it, the machine which Forrester and Everett dubbed the Whirlwind was built and tested in stages over a period of years. In keeping with its real-time mission statement, it ended up doing seven times as many instructions per second as ENIAC, mostly thanks to a new type of memory — known as “core memory” — invented by Forrester himself for the project.

In the midst of these years of development, on August 29, 1949, the Soviet Union tested its first atomic bomb, creating panic all over the Western world; most intelligence analysts had believed that the Soviets were still years away from such a feat. The Cold War began in earnest on that day, as all of the post-World War II dreams of a negotiated peace based on mutual enlightenment gave way to one based on the terrifying brinkmanship of mutually assured destruction. The stakes of warfare had shifted overnight; a single bomb dropped from a single Soviet aircraft could now spell the end of millions of American lives. Desperate to protect the nation against this ghastly new reality, the Air Force asked Forrester whether the Whirlwind could be used to provide a real-time picture of American airspace, to become the heart of a control center which kept track of friendlies and potential enemies 24 hours per day. As it happened, the project’s other sponsors had been growing impatient and making noises about cutting their funding, so Forrester had every motivation to jump on this new chance; the likes of flight simulation were entirely forgotten for the time being. On April 20, 1951, as its first official task, the newly commissioned Whirlwind successfully tracked two fighter planes in real time.

Satisfied with that proof of concept, the Air Force offered to lavishly fund a Project Lincoln that would build upon what had been learned from the Whirlwind, with the mission of protecting the United States from Soviet bombers at any cost — almost literally, given the sum of money the Air Force was willing to throw at it. It began in November of 1951, with Forrester in charge.

Whatever its implications about the gloomy state of the world, Project Lincoln was a truly visionary technological project, enough so as to warm the cockles of even a peacenik engineer’s heart. Soviet bombers, if they came someday, were expected to come in at low altitudes in order to minimize their radar exposure. This created a tremendous logistical problem. Even if the Air Force built enough radar stations to spot all of the aircraft before they reached their targets — a task it was willing to undertake despite the huge cost of it — there would be very little time to coordinate a response. Enter the Semi-Automatic Ground Environment (SAGE); it was meant to provide that rapid coordination, which would be impossible by any other means. Data from hundreds of radar stations would pour into its control centers in real time, to be digested by a computer and displayed as a single comprehensible strategic map on the screens of operators, who would then be able to deploy fighters and ground-based antiaircraft weapons as needed in response, with nary a moment’s delay.

All of this seems old hat today, but it was unprecedented at the time. It would require computers whose power must dwarf even that of the Whirlwind. And it would also require something else: each computer would need to be networked to all the radar stations in its sector, and to its peers in other control centers. This was a staggering task in itself. To appreciate why Jay Forrester and his people thought they had a ghost of a chance of bringing it off, we need to step back from the front lines of the Cold War for a moment and check in with an old friend.

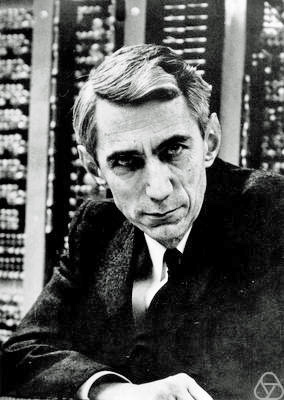

Claude Shannon in middle age, after he had become a sort of all-purpose public intellectual for the press to trot out for big occasions. He certainly looked the part…

Claude Shannon had left MIT to work for Bell Labs on various military projects during World War II, and had remained there after the end of the war. Thus when he published the second earthshaking paper of his career in 1948, he did so in the pages of the Bell System Technical Journal.

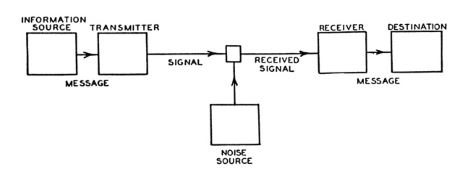

“A Mathematical Theory of Communication” belies its name to some extent, in that it can be explained in its most basic form without recourse to any mathematics at all. Indeed, it starts off so simply as to seem almost childish. Shannon breaks the whole of communication — of any act of communication — into seven elements, six of them proactive or positive, the last one negative. In addition to the message itself, there are the “source,” the person or machine generating the message; the “transmitter,” the device which encodes the message for transport and sends it on its way; the “channel,” the medium over which the message travels; the “receiver,” which decodes the message at the other end; and the “destination,” the person or machine which accepts and comprehends the message. And then there is “noise”: any source of entropy that impedes the progress of the message from source to destination or garbles its content. Let’s consider a couple of examples of Shannon’s framework in action.

One of the oldest methods of human communication is direct speech. Here the source is a person with something to say, the transmitter the mouth with which she speaks, the channel the air through which the resulting sound waves travel, the receiver the ear of a second person, and the destination that second person herself. Noise in the system might be literal background or foreground noise such as another person talking at the same time, or a wind blowing in the wrong direction, or sheer distance.

We can break telegraphy down in the same way. Here the source is the operator with a message to send, the transmitter his Morse key or teletype, the channel the wire over which the Morse Code travels, the receiver an electromagnet-actuated pencil or a teleprinter, and the destination the human operator at the other end of the wire. Noise might be static on the line, or a poor signal caused by a weak battery or something else, or any number of other technical glitches.

But if we like we can also examine the process of telegraphy from a greater remove. We might prefer to think of the source as the original source of the message — say, a soldier overseas who wants to tell his fiancée that he loves her. Here the telegraph operator who sends the message is in a sense a part of the transmitter, while the operator who receives the message is indeed a part of the receiver. The girl back home is of course the destination. When using this scheme, we consider the administration of telegraph stations and networks also to be a part of the overall communications process. In this conception, then, strictly human mistakes, such as a message dropped under a desk and overlooked, become a part of the noise in the system. Shannon provides us, in other words, with a framework for conceptualizing communication at whatever level of granularity might happen to suit our current goals.

Notably absent in all of this is any real concern over the content of the message being sent. Shannon treats content with blithe disinterest, not to say contempt. “The ‘meaning’ of a message is generally irrelevant,” he writes. “The fundamental problem of communication is that of reproducing at one point either exactly or approximately a message selected at another point. [The] semantic aspects of communication are irrelevant to the engineering problem.” Rather than content or meaning, Shannon is interested in what he calls “information,” which is related to the actual meaning of the message but not quite the same thing. It is rather the encoded form the meaning takes as it passes down the channel.

And here Shannon clearly articulated an idea of profound importance, one which network engineers had been groping toward for some time: any channel is ultimately capable of carrying any type of content — text, sound, still or moving images, computer code, you name it. It’s just a matter of having an agreed-upon protocol for the transmitter and receiver to use to package it into information at one end and then unpack it at the other.
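Shannon’s chain of transmitter, channel, and receiver can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the function names are mine, the agreed-upon protocol is simply UTF-8 text spelled out as eight bits per byte, and the noise is modeled as random bit flips.

```python
import random


def transmitter(message: str) -> list[int]:
    """Package a text message into information: a flat list of bits."""
    bits = []
    for byte in message.encode("utf-8"):
        # Most significant bit first, eight bits per byte.
        bits.extend((byte >> shift) & 1 for shift in range(7, -1, -1))
    return bits


def channel(bits, noise=0.0, rng=None):
    """Carry the bits along, flipping each one with probability `noise`."""
    rng = rng or random.Random(0)
    return [bit ^ 1 if rng.random() < noise else bit for bit in bits]


def receiver(bits) -> str:
    """Unpack the bits back into text at the far end."""
    data = bytearray()
    for i in range(0, len(bits), 8):
        byte = 0
        for bit in bits[i:i + 8]:
            byte = (byte << 1) | bit
        data.append(byte)
    return data.decode("utf-8", errors="replace")


print(receiver(channel(transmitter("I love you"))))
```

With `noise` left at zero the message arrives intact. Note that the `channel` function never looks at what the bits mean; swap in a different transmitter and receiver pair, and the same channel would carry sound or pictures just as happily.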

In practical terms, however, some types of content take longer to send over any given channel than others; while a telegraph line could theoretically be used to transmit video, it would take so long to send even a single frame using its widely spaced dots and dashes that it is effectively useless for the purpose, even though it is perfectly adequate for sending text as Morse Code. Some forms of content, that is to say, are denser than others, require more information to convey. In order to quantify this, one needs a unit for measuring quantities of information itself. This Shannon provides, in the form of a single on-or-off state — a yes or a no, a one or a zero. “The units may be called binary digits,” he writes, “or, more briefly, bits.”

And so a new word entered the lexicon. An entire universe of meaning can be built out of nothing but bits if you have enough of them, as our modern digital world proves. But some types of channel can send more bits per second than others, which makes different channels more or less suitable for different types of content.
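A little arithmetic shows how much the difference matters. The figures below are round numbers I have assumed purely for illustration, not measurements of any real telegraph line: a channel that moves 50 bits per second, a 100-character message at 8 bits per character, and a single uncompressed video frame of 640 by 480 pixels at 24 bits per pixel.

```python
def seconds_to_send(bits: int, bits_per_second: float) -> float:
    """Time needed to push a given number of bits down a channel."""
    return bits / bits_per_second


TELEGRAPH_BPS = 50            # assumed channel capacity, for illustration only
text_bits = 100 * 8           # a 100-character message, 8 bits per character
frame_bits = 640 * 480 * 24   # one uncompressed video frame

print(seconds_to_send(text_bits, TELEGRAPH_BPS))           # seconds for the text
print(seconds_to_send(frame_bits, TELEGRAPH_BPS) / 3600)   # hours for the frame
```

Under those assumptions, the text message needs 16 seconds, while the lone video frame needs about 41 hours.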

There is still one more thing to consider: the noise that might come along to corrupt the information as it travels from transmitter to receiver. A message intended for a human is actually quite resistant to noise, for our human minds are very good at filling in gaps and working around mistakes in communication. A handful of garbled characters seldom destroys the meaning of a textual message for us, and we are equally adept at coping with a bad telephone connection or a static-filled television screen. Having a lot of noise in these situations is certainly not ideal, but the amount of entropy in the system has to get pretty extreme before the process of communication itself breaks down completely.

But what of computers? Shannon was already looking forward to a world in which one computer would need to talk directly to another, with no human middleman. Computers cannot use intuition and experience to fill in gaps and correct mistakes in an information stream. If they are to function, they need every single message to reach them in its original, pristine state. But, as Shannon well realized, some amount of noise is a fact of life with any communications channel. What could be done?

What could be done, Shannon wrote, was to design error correction into a communication protocol. The transmitter could divide the information to be sent into packets of fixed length. After sending a packet, it could send a checksum, a number derived from performing a series of agreed-upon calculations on the bits in the packet. The receiver at the other end of the line would then be expected to perform the same set of calculations on the information it had received, and compare it with the transmitter’s checksum. If the numbers matched, all must be well; it could send an “okay” back to the transmitter and wait on the next packet. But if the numbers didn’t match, it knew that noise on the channel must have corrupted the information. So, it would ask the transmitter to try sending the last packet again. It was in essence the same principle as the one that had been employed on Claude Chappe’s optical-telegraph networks of 150 years earlier.
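The send, check, and resend cycle described above can be sketched as follows. This is a simplified illustration rather than any real protocol: the checksum is just the sum of the packet’s bytes modulo 256, the checksum itself is assumed to arrive undamaged, and the function names and the deliberately flaky test channel are all hypothetical.

```python
def checksum(packet: bytes) -> int:
    """An agreed-upon calculation: here, the sum of the bytes modulo 256."""
    return sum(packet) % 256


def split_into_packets(data: bytes, size: int) -> list[bytes]:
    """Divide the information into packets of fixed length."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def send(data: bytes, channel, size: int = 4, max_tries: int = 10):
    """Send data packet by packet, resending any packet that fails its check.

    Returns the bytes as received plus the total number of transmissions.
    """
    received = bytearray()
    transmissions = 0
    for packet in split_into_packets(data, size):
        expected = checksum(packet)  # computed by the transmitter
        for _ in range(max_tries):
            transmissions += 1
            arrived = channel(packet)
            if checksum(arrived) == expected:  # the receiver's "okay"
                received.extend(arrived)
                break
            # Otherwise the receiver asks for the same packet again.
        else:
            raise RuntimeError("channel too noisy to get a packet through")
    return bytes(received), transmissions


def flaky_channel(bad_attempts):
    """Build a test channel that garbles the listed transmission numbers."""
    count = 0

    def channel(packet: bytes) -> bytes:
        nonlocal count
        count += 1
        if count in bad_attempts:
            # Noise: flip the lowest bit of the first byte.
            return bytes([packet[0] ^ 0x01]) + packet[1:]
        return packet

    return channel


message, tries = send(b"HELLO WORLD!", flaky_channel({2}))
print(message, tries)
```

Here the twelve-byte message travels as three packets. The second transmission is garbled, so that packet is sent twice, and the message arrives intact after four transmissions in all.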

To be sure, there were parameters in the scheme to be tinkered with on a situational basis. Larger packets, for example, would be more efficient on a relatively clean channel that gave few problems, smaller ones on a noisy channel where re-transmission was often necessary. Meanwhile the larger the checksum and more intense the calculations done to create it, the more confident one could be that the information really had been received correctly, that the checksums didn’t happen to match by mere coincidence. But this extra insurance came with a price of its own, in the form of the extra computing horsepower required to generate the more complex checksums and the extra time it took to send them down the channel. It seemed that success in digital communications was, like success in life, a matter of making wise compromises.
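The trade-off between a small, cheap checksum and a larger, more thorough one can be made concrete with a short sketch. The one-byte sum below is a hypothetical toy, and CRC-32 (available in Python’s standard `zlib` module) merely stands in for a “larger and more intense” checksum; neither is drawn from Shannon’s paper, and the sample message is invented.

```python
import zlib


def sum8(data: bytes) -> int:
    """One-byte checksum: cheap to compute and send, but easy to fool."""
    return sum(data) % 256


def crc32(data: bytes) -> int:
    """Four-byte checksum: more work and more bytes on the wire, but far harder to fool."""
    return zlib.crc32(data)


original = b"ALTITUDE 1300"
garbled = b"ALTITUDE 3100"  # two adjacent characters swapped in transit

# The simple sum cannot see the swap, because addition ignores order.
weak_checksum_fooled = sum8(original) == sum8(garbled)

# CRC-32 depends on the position of every bit, so the swap is caught.
strong_checksum_fooled = crc32(original) == crc32(garbled)
```

Here `weak_checksum_fooled` comes out `True` and `strong_checksum_fooled` comes out `False`. The price of that extra confidence is three more bytes appended to every packet and a more involved calculation at both ends of the line.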

Two years after Shannon published his paper, another Bell Labs employee by the name of R.W. Hamming published “Error Detecting and Error Correcting Codes” in the same journal. It made Shannon’s abstractions concrete, laying out in careful detail the first practical algorithms for error detection and correction on a digital network, using checksums that would become known as “Hamming codes.”
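To give a flavor of what such a code looks like, here is a minimal sketch of the scheme now usually called Hamming(7,4), which protects four data bits with three parity bits. The function names and the bit layout (parity bits in positions 1, 2, and 4) follow the common textbook presentation and are assumptions on my part, not a transcription of Hamming’s paper. Unlike a simple checksum, this code lets the receiver repair a single flipped bit on its own, without asking for a re-transmission.

```python
def hamming74_encode(data_bits):
    """Encode four data bits as a seven-bit codeword with three parity bits."""
    d1, d2, d3, d4 = data_bits
    p1 = d1 ^ d2 ^ d4  # covers codeword positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers codeword positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # covers codeword positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(codeword):
    """Correct up to one flipped bit, then return the four data bits."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    # Read as a binary number, the three checks give the 1-based position
    # of the damaged bit, or 0 if every check passed.
    error_position = s1 + 2 * s2 + 4 * s3
    if error_position:
        c[error_position - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]
```

Encoding `[1, 0, 1, 1]`, flipping any one of the seven resulting bits, and decoding again gives back `[1, 0, 1, 1]`. Two flipped bits in the same codeword would defeat this particular scheme, which is one reason why real systems tend to layer several techniques on top of one another.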

Even before Hamming’s work came along to complement it, Shannon’s paper sent shock waves through the nascent community of computing, whilst inventing at a stroke a whole new field of research known as “information theory.” The printers of the Bell System Technical Journal, accustomed to turning out perhaps a few hundred copies for internal distribution through the company, were swamped by thousands of requests for that particular issue. Many of those involved with computers and/or communications would continue to speak of the paper and its author with awe for the rest of their lives. “It was like a bolt out of the blue, a really unique thing,” remembered a Bell Labs researcher named John Pierce. “I don’t know of any other theory that came in a complete form like that, with very few antecedents or history.” “It was a revelation,” said MIT’s Oliver Selfridge. “Around MIT the reaction was, ‘Brilliant! Why didn’t I think of that?’ Information theory gave us a whole conceptual vocabulary, as well as a technical vocabulary.” Word soon spread to the mainstream press. Fortune magazine called information theory that “proudest and rarest [of] creations, a great scientific theory which could profoundly and rapidly alter man’s view of the world.” Scientific American proclaimed it to encompass “all of the procedures by which one mind may affect another. [It] involves not only written and oral speech, but also music, the pictorial arts, the theatre, the ballet, and in fact all human behavior.” And that was only the half of it: in the midst of their excitement, the magazine’s editors failed to even notice its implications for computing.

And those implications were enormous. The fact was that all of the countless digital networks of the future would be built from the principles first described by Claude Shannon. Shannon himself largely stepped away from the table he had so obligingly set. A playful soul who preferred tinkering to writing or working to a deadline, he was content to live off the prestige his paper had brought him, accepting lucrative seats on several boards of directors and the like. In the meantime, his theories were about to be brought to vivid life by Project Lincoln.

In their later years, many of the mostly young people who worked on Project Lincoln would freely admit that they had had only the vaguest notion of what they were doing during those halcyon days. Having very little experience with the military or aviation among their ranks, they extrapolated from science-fiction novels, from movies, and from old newsreel footage of the command-and-control posts whence the Royal Air Force had guided defenses during the Battle of Britain. Everything they used in their endeavors had to be designed and made from whole cloth, from the input devices to the display screens to the computers behind it all, which were to be manufactured by a company called IBM that had heretofore specialized in strictly analog gadgets (typewriters, time clocks, vote recorders, census tabulators, cheese slicers). Fortunately, they had effectively unlimited sums of money at their disposal, what with the Air Force’s paranoid sense of urgency. The government paid to build a whole new complex to house their efforts, at Laurence G. Hanscom Airfield, about fifteen miles away from MIT proper. The place would become known as Lincoln Lab, and would long outlive Project Lincoln itself and the SAGE system it made; it still exists to this day.

AT&T — who else? — was contracted to set up the communications lines that would link all of the individual radar stations into control centers scattered all over the country, and in turn link the latter together with one another; it was considered essential not to have a single main control center which, if knocked out of action, could take the whole system down with it. The lines AT&T provided were at bottom ordinary telephone connections, for nothing better existed at the time. No matter; an engineer named John V. Harrington took Claude Shannon’s assertion that all information is the same in the end to heart. He made something called a “modulator/de-modulator”: a gadget which could convert a stream of binary data into a waveform and send it down a telephone line when it was playing the role of transmitter, or convert one of these waveforms back into binary data when it was playing the role of receiver, all at the impressive rate of 1300 bits per second. Its name was soon shortened to “modem,” and bits-per-second to “baud,” borrowing a term that had earlier been applied to the dots and dashes of telegraphy. Combined with the techniques of error correction developed by Shannon and R.W. Hamming, Harrington’s modems would become the basis of the world’s first permanent wide-area computer network.
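Strictly speaking, baud counts signal changes (symbols) per second rather than bits. The two figures coincide only when each symbol can take one of just two states, as is assumed here of the earliest modems. The relationship can be sketched in a few lines; the function name and the four- and eight-state examples are illustrative assumptions, and only the figure of 1300 comes from the text above.

```python
import math


def bits_per_second(baud: float, states_per_symbol: int) -> float:
    """Each symbol carries log2(states) bits, so bit rate = baud * log2(states)."""
    return baud * math.log2(states_per_symbol)


two_state = bits_per_second(1300, 2)    # 1300.0: baud and bits per second agree
four_state = bits_per_second(1300, 4)   # 2600.0: each symbol now carries two bits
eight_state = bits_per_second(1300, 8)  # 3900.0: each symbol now carries three bits
```

This is why later modems could push ever more bits per second down the same telephone line without changing how often the signal itself changed state.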

At a time when the concept of software was just struggling into existence as an entity separate from computer hardware, the SAGE system would demand programs an order of magnitude more complex than anyone had ever attempted before — interactive programs that must run indefinitely and respond constantly to new stimuli, not mere algorithms to be run on static sets of data. In the end, SAGE would employ more than 800 individual programmers. Lincoln Lab created the first tools to separate the act of programming from the bare metal of the machine itself, introducing assemblers that could do some of the work of keeping track of registers, memory locations, and the like for the programmer, to allow her to better concentrate on the core logic of her task. Lincoln Lab’s official history of the project goes so far as to boast that “the art of computer programming was essentially invented for SAGE.”

In marked contrast to later years, programmers themselves were held in little regard at the time; hardware engineers ruled the roost. With no formal education programs in the discipline yet in existence, Lincoln Lab was willing to hire anyone who could get a security clearance and pass a test of basic reasoning skills. A substantial percentage of them wound up being women.

Among the men who came to program for SAGE was Severo Ornstein, a geologist who would go on to a notable career in computing over the following three decades. In his memoir, he captures the bizarre mixture of confusion and empowerment that marked life with SAGE, explaining how he was thrown in at the deep end as soon as he arrived on the job.

It seemed that not only was an operational air-defense program lacking, but the overall system hadn’t yet been fully designed. The OP SPECS (Operational Specifications) which defined the system were just being written, and, with no more background in air defense than a woodchuck, I was unceremoniously handed the task of writing the Crosstelling Spec. What in God’s name was Crosstelling? The only thing I knew about it was that it came late in the schedule, thank heavens, after everything else was finished.

It developed that the country was divided into sectors, and that the sectors were in turn divided into sub-sectors (which were really the operational units) with a Direction Center at the heart of each. Since airplanes, especially those that didn’t belong to the Air Force (or even the U.S.), could hardly be forbidden from crossing between sub-sectors, some coordination was required for handing over the tracking of planes, controlling of interceptors, etc., between the sub-sectors. This function was called Crosstelling, a name inherited from an earlier manual system in which human operators followed the tracks of aircraft on radar screens and coordinated matters by talking to one another on telephones. Now it had somehow fallen to me to define how this coordination should be handled by computers, and then to write it all down in an official OP SPEC with a bright-red cover stamped SECRET.

I was horrified. Not only did I feel incapable of handling the task, but what was to become of a country whose Crosstelling was to be specified by an ignoramus like me? My number-two daughter was born at about that time, and for the first time I began to fear for my children’s future…

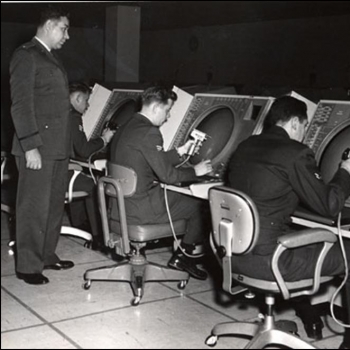

In spite of it all, SAGE more or less worked out in the end. The first control center became officially operational at last in July of 1958, at McGuire Air Force Base in New Jersey. It was followed by 21 more of its kind over the course of the next three and a half years, each housing two massive IBM computers; the second was provided for redundancy, to prevent the survival of the nation from being put at risk by a blown vacuum tube. These computers could communicate with radar stations and with their peers on the network for the purpose of “Crosstelling.” The control centers went on to become one of the iconic images of the Cold War era, featuring prominently in the likes of Dr. Strangelove.[2] SAGE remained in service until the early 1980s, by which time its hardware was positively neolithic but still did the job asked of it.

Thankfully for all of us, the system was never subjected to a real trial by fire. Would it have actually worked? Most military experts are doubtful — as, indeed, were many of the architects of SAGE after all was said and done. Severo Ornstein, for his part, says bluntly that “I believe SAGE would have failed utterly.” During a large-scale war game known as Operation Sky Shield which was carried out in the early 1960s, SAGE succeeded in downing no more than a fourth of the attacking enemy bombers. All of the tests conducted after that fiasco were, some claim, fudged to one degree or another.

But then, the fact is that SAGE was already something of a white elephant on the day the very first control center went into operation; by that point the principal nuclear threat was shifting from bombers to ballistic missiles, a form of attack the designers had not anticipated and against which their system could offer no real utility. For all its cutting-edge technology, SAGE thus became a classic example of a weapon designed to fight the last war rather than the next one. Historian Paul N. Edwards has noted that the SAGE control centers were never placed in hardened bunkers, which he believes constitutes a tacit admission on the part of the Air Force that they had no chance of protecting the nation from a full-on Soviet first nuclear strike. “Strategic Air Command,” he posits, “intended never to need SAGE warning and interception; it would strike the Russians first. After SAC’s hammer blow, continental air defenses would be faced only with cleaning up a weak and probably disorganized counter-strike.” There is by no means a consensus that SAGE could have managed to coordinate even that much of a defense.

But this is not to say that SAGE wasn’t worth it. Far from it. Bringing so many smart people together and giving them such an ambitious, all-encompassing task to accomplish in such an exciting new field as computing could hardly fail to yield rich dividends for the future. Because so much of it was classified for so long, not to mention its association with passé Cold War paranoia, SAGE’s role in the history of computing — and especially of networked computing — tends to go underappreciated. And yet many of our most fundamental notions about what computing is and can be were born here. Paul N. Edwards credits SAGE and its predecessor the Whirlwind computer with inventing:

- magnetic-core memory

- video displays

- light guns [what we call light pens today]

- the first effective algebraic computer language

- graphic display techniques

- simulation techniques

- synchronous parallel logic (digits transmitted simultaneously rather than serially through the computer)

- analog-to-digital and digital-to-analog conversion techniques

- digital data transmission over telephone lines

- duplexing

- multiprocessing

- networks (automatic data exchange among different computers)

Readers unfamiliar with computer technology may not appreciate the extreme importance of these developments to the history of computing. Suffice it to say that much-evolved versions of all of them remain in use today. Some, such as networking and graphic displays, comprise the very backbone of modern computing.

M. Mitchell Waldrop elaborates in a more philosophical mode:

SAGE planted the seeds of a truly powerful idea, the notion that humans and computers working together could be far more effective than either working separately. Of course, SAGE by itself didn’t get us all the way to the modern idea of personal computers being used for personal empowerment; the SAGE computers were definitely not “personal,” and the controllers could use them only for that one, tightly constrained task of air defense. Nonetheless, it’s no coincidence that the basic setup still seems so eerily familiar. An operator watching his CRT display screen, giving commands to a computer via a keyboard and a handheld light gun, and sending data to other computers via a digital communications link: SAGE may not have been the technological ancestor of the modern PC, mouse, and network, but it was definitely their conceptual and spiritual ancestor.

So, ineffective though it probably was as a means of national defense, the real legacy of SAGE is one of swords turning into plowshares. Consider, for example, its most direct civilian progeny.

SAGE in operation. For a quarter of a century, hundreds of Air Force personnel were to be found sitting in antiseptic rooms like this one at any given time, peering at their displays in case something showed up there. It’s one way to make a living…

One day in the summer of 1953, long before any actual SAGE computers had been built, a senior IBM salesman named R. Blair Smith, who was privy to the project, chanced to sit next to another Smith on a flight from Los Angeles to New York City. This other Smith was none other than Cyrus Rowlett Smith, the president of American Airlines.

Blair Smith had caught the computer fever, and believed that computers could be very useful for airline reservations. Being a salesman, he didn’t hesitate to tell his seatmate all about this as soon as he learned who he was. He was gratified to find his companion receptive. “Now, Blair,” said Cyrus Smith just before their airplane landed, “our reservation center is at LaGuardia Airport. You go out there and look it over. Then you write me a letter and tell me what I should do.”

In his letter, Blair Smith envisioned a network that would bind together booking agents all over the country, allowing them to search to see which seats were available on which flights and to reserve them instantly for their customers. Blair Smith:

We didn’t know enough to call it anything. Later on, the word “Sabre” was adopted. By the way, it was originally spelled SABER — the only precedent we had was SAGE. SAGE was used to detect incoming airplanes. Radar defined the perimeter of the United States and then the information was signaled into a central computer. The perimeter data was then compared with what information they had about friendly aircraft, and so on. That was the only precedent we had. When the airline system was in research and development, they adopted the code name SABER for “Semi-Automatic Business Environment Research.” Later on, American Airlines changed it to Sabre.

Beginning in 1960, Sabre was gradually rolled out over the entire country. It became the first system of its kind, an early harbinger of the world’s networked future. Spun off as an independent company in 2000, it remains a key part of the world’s travel infrastructure today, when the vast majority of the reservations it accepts come from people sitting behind laptops and smartphones.

Sabre and other projects like it led to the rise of IBM as the virtually unchallenged dominant force in business computing from the middle of the 1950s until the end of the 1980s. But even as systems like Sabre were beginning to demonstrate the value of networked computing in everyday life, another, far more expansive vision of a networked world was taking shape in the clear blue sky of the country’s research institutions. The computer networks that existed by the start of the 1960s all operated on the “railroad” model of the old telegraph networks: a set of fixed stations joined together by fixed point-to-point links. What about a computer version of a telephone network instead — a national or international network of computers all able to babble happily together, with one computer able to call up any other any time it wished? Now that would really be something…

(Sources: the books A Brief History of the Future: The Origins of the Internet by John Naughton, From Gutenberg to the Internet: A Sourcebook on the History of Information Technology edited by Jeremy M. Norman, The Information by James Gleick, The Dream Machine by M. Mitchell Waldrop, The Closed World: Computers and the Politics of Discourse in Cold War America by Paul N. Edwards, Project Whirlwind: The History of a Pioneer Computer by Kent C. Redmond and Thomas M. Smith, From Whirlwind to MITRE: The R&D Story of the SAGE Air Defense Computer by Kent C. Redmond and Thomas M. Smith, The SAGE Air Defense System: A Personal History by John F. Jacobs, A History of Modern Computing (2nd ed.) by Paul E. Ceruzzi, Computing in the Middle Ages by Severo M. Ornstein, and Robot: Mere Machine to Transcendent Mind by Hans Moravec. Online sources include Lincoln Lab’s history of SAGE and the Charles Babbage Institute’s interview with R. Blair Smith.)

Footnotes

| ↑1 | Inevitably, that wasn’t quite the end of it. Mauchly and Eckert continued their quest to win the patent they thought was their due, and were finally granted it at the rather astonishingly late date of 1964, by which time they were associated with the Sperry Rand Corporation, a maker of mainframes and minicomputers. But this victory only ignited another legal battle, pitting Sperry Rand against virtually every other company in the computer industry, who were not eager to start paying one of their competitors a royalty on every single computer they made. The patent was thrown out once and for all in 1973, primarily on the familiar premise that Von Neumann’s paper constituted prior disclosure. |
|---|---|
| ↑2 | That film’s title character was partially based on John Von Neumann, who after his work on the Manhattan Project and before his untimely death from cancer in 1957 became as strident a Cold Warrior as they came. “I believe there is no such thing as saturation,” he once told his old Manhattan Project boss Robert Oppenheimer. “I don’t think any weapon can be too large.” Many have attributed his bellicosity to his pain at seeing the Iron Curtain come down over his homeland of Hungary, separating him from friends and family forever. |

mycophobia

April 8, 2022 at 6:01 pm

“In the end, SAGE would employee more than”

employ?

Jimmy Maher

April 8, 2022 at 8:19 pm

Thanks!

Jack Brounstein

April 8, 2022 at 7:25 pm

Defining “Turing complete” for a non-technical audience is always difficult, but I’d note that “if/then/else” isn’t sufficient for Turing completeness. Douglas Hofstadter’s BlooP, for instance, has conditional statements but still isn’t Turing-complete.

Also, pedants will tell you that ENIAC isn’t Turing complete because no real-world computer is–it’s a theoretical model of computation that requires unlimited memory, and there’s only a finite amount of space. Personally I don’t think that’s a problem, that outside of a Computer Science textbook “is theoretically Turing-complete assuming infinite resources” is a reasonable short-hand, but it’s something to be aware of.

Jimmy Maher

April 8, 2022 at 8:38 pm

I added a footnote. For obvious reasons, I’d rather not get too down in the weeds on this, and I don’t really think it’s necessary. In most real-world contexts, the “if/then/else” rule of thumb is a sufficient test, especially when we’re applying it to hardware rather than a programming language, and it’s something anyone with just a bit of familiarity with programming can easily grasp. Thanks!

Aula

April 9, 2022 at 5:32 am

For programming languages, a sufficient definition of Turing-completeness is either “if/then/else and loops” or “if/then and goto” (you don’t need either the “else” part or loop statements if you can jump arbitrarily). However, neither definition makes any sense for hardware; in that case, the correct definition is that a piece of hardware is Turing complete if it has conditional jumps.

mb

April 9, 2022 at 9:49 am

Yes, I want to second this. The “if-then-else” definition of Turing completeness is seriously wrong, and risks confusing people. Conditional jumps (“if this, then continue from this instruction”) is a good simple way to explain the key idea.

Jimmy Maher

April 9, 2022 at 10:15 am

Okay. Removed “else” from the equation. Thanks!

Aula

April 9, 2022 at 3:09 pm

That’s still not right. If-then gives you the ability to jump forward, but that’s not good enough; you need to be able to jump backwards as well. If you really want to keep it as simple as possible, remove the if-then thing altogether and say that a Turing-complete programming language needs to have *loops* since that’s the essential distinguishing feature.

Jimmy Maher

April 9, 2022 at 3:19 pm

The thing is, we’re not really so interested in Turing-complete programming languages. We’re rather interested in Turing-complete hardware, which is defined — in my understanding at least — by the ability to do conditional branches (making it hardware which can *support* a Turing-complete programming language). “If, then” is just a way of explaining that to a non-technical reader for whom the phrase “conditional branch” might not immediately cause bells to go off. I’m assuming that most readers have probably dabbled in BASIC or the like at some point in their lives.

Aula

April 10, 2022 at 4:21 am

“in my understanding at least”

Well, that’s the issue; your understanding isn’t quite right. Whether we are talking about programming languages or hardware, Turing-completeness needs the ability to jump back to an earlier point in code, and a non-technical reader will absolutely not grasp that if you just mention if-then. In terms of BASIC, it’s GOTO that makes it Turing-complete, not IF-THEN. Or to put it facetiously, it’s the ability to get stuck in an infinite loop that defines Turing-completeness.

Jimmy Maher

April 10, 2022 at 7:12 am

Okay, that’s helpful. I hope we’ve got it now. I stole your bit about infinite loops. ;) Thanks!

Buck

April 10, 2022 at 8:02 am

In non-technical terms, Turing-complete means that a language/instruction set can compute everything that is computable (and something is computable if it can be computed by a Turing machine, but that is getting technical again). It’s possible to create a Turing-complete, single-instruction computer, with the instruction “subtract and jump if less than zero”. If we assume arithmetic as given, the conditional (non-restricted) jump is indeed the important element.

A machine that always terminates eventually cannot be Turing-complete, but I’m not sure if the reverse is also true (an abstract counterexample would be a language that has just infinite loops, but does nothing else). Your definition of Turing-completeness with conditional branches seems perfectly fine to me. When translating it into BASIC terms, why not just use GOTO in addition to if-then? It’s the programming language equivalent to conditional branches, and I’m sure more people are familiar with GOTO than with FOR NEXT. Who hasn’t written code like 100 PRINT “HELLO” 200 GOTO 100 when starting with BASIC?

(There are perfectly normal applications for infinite loops, btw – webservers, threadpools, the software that keeps a plane flying, …)

Jimmy Maher

April 10, 2022 at 9:45 am

I’m a little reluctant to lean on goto because, while it was indeed a fixture of the 8-bit BASICs many of us grew up with, its use is frowned upon in more recent implementations of the language, if they even allow it at all. I try not to make too many assumptions about readers’ backgrounds. Many readers who think of BASIC may think Visual BASIC first, not Commodore BASIC 2.0 or Applesoft BASIC.

I nixed the parenthetical about infinite loops, since it seems we may not be quite sure about that. Pity; I kind of liked that bit. ;) Thanks!

Peter Olausson

April 8, 2022 at 7:29 pm

“Baud” is frequently misunderstood. It’s not bps but symbols per second. If the symbols transmitted come in two states – which I assume was the case to begin with – they are equal to bits, and indeed baud = bits per second. If, however, a symbol can have four states, it represents 2 bits, and 1 baud = 2 bps. Eight states means 1 baud = 3 bps … And so on.

Jimmy Maher

April 8, 2022 at 8:44 pm

In telegraphy, it simply referred to the number of dots and dashes coming down the wire — generally from a teletype rather than a human-operated Morse key, since any human operator would presumably struggle to transmit at much more than baud. The first modems used simple on/off states effectively equivalent to dots and dashes, so I think this explanation is sufficient for our purposes.

Rowan Lipkovits

April 8, 2022 at 9:54 pm

“enough so as to warm the cackles of even a peacenik engineer’s heart”

It’s an odd expression, but I believe the word usually used there is “cockles”.

Jimmy Maher

April 9, 2022 at 7:09 am

Thanks!

Martin

April 9, 2022 at 8:37 pm

Does the Complex Computer still exist? Are there any pictures of it?

Jimmy Maher

April 10, 2022 at 7:16 am

Not to my knowledge, no. The only place I found it described in any detail is The Dream Machine by M. Mitchell Waldrop (a superb book, by the way).

Ethan Johnson

April 10, 2022 at 6:36 pm

The CHM has a photo purportedly from 1939 which I assume would be the most directly related device to the Complex Computer as such. There are a few photos of the teletype though this may be the only one of the telephone-relay computer.

https://www.computerhistory.org/revolution/birth-of-the-computer/4/85

Goatmeal

April 9, 2022 at 8:48 pm

“After immigrating from Hungary more than a decade earlier, von Neumann…”

Should that be ’emigrating’ ?

Jimmy Maher

April 10, 2022 at 7:21 am

That leaves it a bit too open-ended in a where-did-he-end-up sense, but then so did my original sentence. Fixed it in another way. Thanks!

Andrew Pam

April 10, 2022 at 4:59 am

“the principals first described by Claude Shannon” – typo for “principles”.

Jimmy Maher

April 10, 2022 at 7:22 am

Thanks!

Leo Vellés

April 11, 2022 at 3:54 pm

“If you were the ask the proverbial person on the street what distinguishes a computer program from any other form of electronic media”.

Shouldn’t be “If you were TO ask…”?

By the way, I suppose the delay of one week for this post is justified by the result, it was a long but nevertheless a very entertaining and informative read. Loving this series so far…and learning a lot too (for example, what bit meant)

Jimmy Maher

April 11, 2022 at 3:57 pm

Yes. Thanks!

Gnoman

April 11, 2022 at 11:48 pm

I’ve heard it said that “why didn’t I think of that” is the greatest expression of brilliance, because it means that you’ve not only come up with something genuinely new and clever (else somebody would have thought of it) but have explained it so well that others can grasp the concept very easily (else they wouldn’t think they should have thought of it). I suppose this is a principle of limited applicability, given how overconfident people tend to be in their self analysis, but when that reaction is coming from certified geniuses in their own right it probably fits.

Doug Orleans

April 13, 2022 at 8:12 pm

I’m friends with a few people who have worked at Lincoln Lab, and they will sometimes correct you if you say “Lincoln Labs” (plural). Its correct name is Lincoln Lab, singular (as opposed to Bell Labs, which is correctly plural).

Jimmy Maher

April 14, 2022 at 8:39 am

I didn’t know that. Thanks!

Pauli Ojala

April 14, 2022 at 11:50 pm

The performance comparisons you mention for ENIAC and Whirlwind seem seriously off.

ENIAC had a 5 kHz clock speed and could do 0.00289 MIPS according to this source: https://eniacday.org/eniac-trivia

The MOS 6502 CPU in the Apple II ran at approximately 1 MHz and 0.5 MIPS. That’s 173x faster.

According to Wikipedia, the Whirlwind could do 20k operations per second, or 0.02 MIPS.

The Motorola 68000 in the original Mac was rated at 1.4 MIPS — seventy times faster than the Whirlwind.

Fundamentally it’s not really possible for a computer built out of vacuum tubes to be as fast as a microprocessor. Shorter physical connections are faster.

Jimmy Maher

April 15, 2022 at 6:09 am

I got all of those figures from supplementary online materials for the book Robot: Mere Machine to Transcendent Mind by Hans Moravec: https://frc.ri.cmu.edu/~hpm/book97/ch3/processor.list.txt. He lists .00289 MIPS for ENIAC, .0694 for Whirlwind, .02 for the Apple II, and .52 for the first-generation Macintosh. It looks like only the numbers for ENIAC are correct. I wondered a bit about that when I wrote the article; it did seem awfully generous to the ENIAC and Whirlwind. But I never revisited it. I should have listened to my instinct.

Since there is obviously more that goes into overall computing potential than raw instructions per second, I think Moravec may have been doing some other calculations, perhaps factoring in memory capacity or something. But if so, I can’t find it explained anywhere.

Anyway, thanks!

Pauli Ojala

April 15, 2022 at 1:51 pm

You’re welcome!

I love your blog, have been a reader and supporter for years. These long detours around computing history are such fascinating context for the classic gaming and digital media articles.

Larry Farr

April 22, 2022 at 2:46 pm

The word ‘sense’ should be here instead

‘when it finally came to be, was designed to be open in every since of the word,’

Always enjoy your take on things

Jimmy Maher

April 22, 2022 at 2:57 pm

A post-publication edit gone wrong. ;) Thanks!

Mikko

April 28, 2022 at 3:58 pm

Thank you for this series!

“Presper and Eckert had already given the unfunded, entirely hypothetical machine a catchy”

and several other places should probably be either

“Presper Eckert” or “Mauchly and Eckert”

Jimmy Maher

April 28, 2022 at 4:10 pm

Thanks!

jalapeno_dude

May 29, 2022 at 8:09 am

The acronym SAGE enters abruptly about halfway through the article with no explanation, and in fact I had to go to Wikipedia to learn that it stood for Semi-Automatic Ground Environment. Perhaps a sentence or two got inadvertently deleted?

jalapeno_dude

May 29, 2022 at 8:43 am

I see now that SAGE is defined in the 2012 article on the roots of Infocom which is linked to in the next part of this series, but, uh, while I did read that article, it was closer to 2012 than the present when I did…

Jimmy Maher

May 29, 2022 at 6:27 pm

Woops. That must have gotten lost in editing. Thanks!

Lhexa

February 7, 2023 at 11:02 am

Your description of Turing completeness is incorrect. If you’d like, I can type up a short correction for you to append to this post.

Lhexa

February 9, 2023 at 6:39 pm

Specifically: there are three major computing models, namely finite automata, pushdown automata, and Turing machines. The last of these has the distinction that _any_ sufficiently powerful computing model is equivalent to it. However, the prior two also meet what you call the definition of Turing machine: pushdown automata have access to FILO stacks, and finite automata can implement a crude sort of memory by using the state of the machine itself.

nicolas woollaston

July 8, 2023 at 1:09 am

Something you have glossed over, presumably in the interests of brevity, is Baudot and the other related 5-bit codes. Teletypes didn’t use Morse as it isn’t so regular as to be easily machine readable. The story of the gradual standardisation of the 5-bit teletype codes is interesting and relevant to the later issues of standardisation and internationalisation that the internet has had to grapple with.