Dan Bunten and his little company Ozark Softscape could look back on a tremendous 1984 as that year came to an end. Seven Cities of Gold had been a huge success, Electronic Arts’s biggest game of the year, doing much to keep the struggling publisher out of bankruptcy court by selling well over 100,000 copies. Bunten himself had become one of the most sought-after interviewees in the industry. Everyone who got the chance to speak with him seemed to agree that Seven Cities of Gold was only the beginning, that he was destined for even greater success.

As it turned out, though, 1984 would be the high-water mark for Bunten, at least in terms of that grubbiest but most implacable metric of success in games: quantity of units shifted. The years that followed would be frustrating as often as they would be inspiring, as Bunten pursued a vision that seemed at odds with every trend in the industry, all the while trying to thread the needle between artistic fulfillment and commercial considerations.

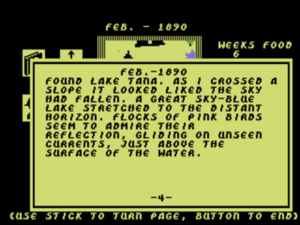

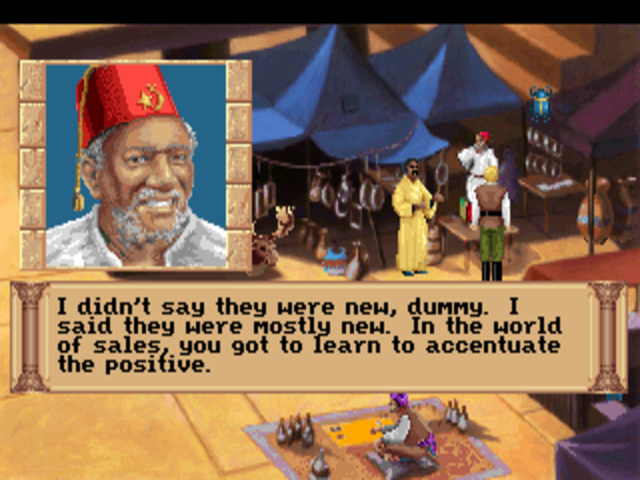

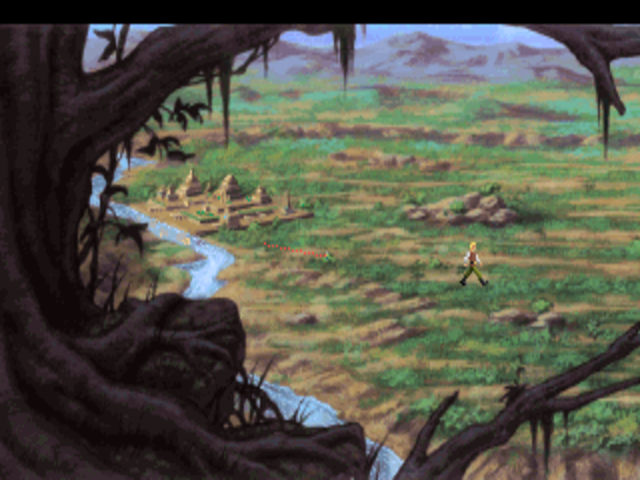

In the wake of Seven Cities of Gold’s success, EA badly wanted a follow-up with a similar theme, so much so that they offered Bunten a personal bonus of $5000 to make it Ozark’s next project. The result was Heart of Africa, a game which at first glance looks like precisely the sequel EA was asking for but that actually plays quite differently. Instead of exploring the Americas as Hernán Cortés during the 1500s, it has you exploring Africa as an intrepid Victorian adventurer (“Livingstone, I presume?”). In keeping with the changed time and location, your goal isn’t to conquer the land for your country — Africa had, for better or for worse, already been thoroughly partitioned among the European nations by 1890, the year in which the game takes place — but simply to discover and to map. In the best tradition of Victorian adventure novels like King Solomon’s Mines, your ultimate goal is to find the tomb of a mythical Egyptian pharaoh. Bunten later admitted that the differences from Heart of Africa’s predecessor weren’t so much a product of original design intent as improvisation after he had bumbled into an historical context that just wouldn’t work as a more faithful sequel.

Indeed, Bunten in later years dismissed Heart of Africa, his most adventure-like game ever and his last ever that was single-player only, as nothing more than “a game done to please EA”: “I honestly didn’t want to do the project.” Its biggest problem hinges on the fact that its environment is randomly generated each time you start a new game, itself an attempt to remedy the most obvious failing of adventure games as a commercial proposition: their lack of replayability. Yet the random maps can never live up to what a hand-crafted map, designed for challenge and dramatic effect, might have been; the “story” in Heart of Africa is all too clearly just a bunch of shifting interchangeable parts. Bunten later acknowledged that “the attempt to make a replayable adventure game made for a shallow product (which seems true in every other case designers have tried it as well). I guess that if elements are such that they can be randomly shifted then they [aren’t] substantive enough to make for a compelling game. So, even though I don’t like linear games, they seem necessary to have the depth a good story needs.”

Heart of Africa did quite well for EA upon its release in 1985 — well enough, in fact, to become Bunten’s third most successful game of all time. Yet the whole experience left a bad taste in his mouth. He came away from the project determined to return to the guiding vision behind his first game for EA, the commercially unsuccessful but absolutely brilliant M.U.L.E.: a vision of computer games that people played together rather than alone. In the future, he would continue to compromise at times on the style and subject matter of his games in order to sell them to his publishers, but he would never again back away from his one great principle. All of his games henceforward would be multiplayer — first, foremost, and in one case exclusively. In fact, that one case would be his very next game.

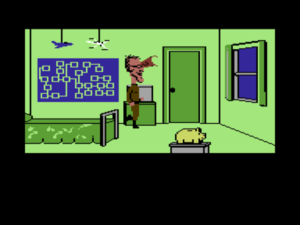

The success of his previous two games having opened something of a window of opportunity with EA, Bunten charged ahead on what he would later describe as his single “most experimental game.” Robot Rascals is a multiplayer scavenger hunt in which two physical decks of cards are integral to the game. Each player controls a robot, and must use it to collect the four items shown on the cards in her hand and return with them to home base in order to win. The game lives on the razor’s edge of pure chaos, the product both of random events generated by the computer and of a second deck of cards — the “specials” — which among other things can force players to draw new item cards, trash their old cards, or trade cards among one another; thus everyone’s goals are shifting almost constantly. As always in a Dan Bunten game, there are lots of thoughtful features here, from ways to handicap the game for players of different ages or skill levels to three selectable levels of overall complexity. He designed it to be “a game that anyone could play” rather than one limited to “special-interest groups like role-playing people or history buffs.” It can be a lot of fun, even if it’s not quite on the level of M.U.L.E. (then again, what is, right?). But this latest bid to make computer games acceptable family entertainment wound up selling hardly at all upon its release in 1986, ending Bunten’s two-game commercial hot streak.

By this point in Bunten’s career, changes in his personal life were beginning to have a major impact on the games he made. In 1985, while still working on Heart of Africa, he had divorced his second wife and married his third, with all the painful complications such disruptions entail when one is leaving children behind with the former spouse. In 1986, he and his new wife moved from Little Rock, Arkansas, to Hattiesburg, Mississippi, so she could complete a PhD. This event marked the effective end of Ozark Softscape as anything but a euphemism for Dan Bunten himself and whatever programmers and artists he happened to contract work out to. The happy little communal house/office where Dan and Bill Bunten, Jim Rushing, and Alan Watson had created games, with a neighborhood full of eager testers constantly streaming through the living room, was no more; only Watson continued to work on Bunten’s games from Robot Rascals on, and then more as just another hired programmer than a valued design voice. Even after moving back to Little Rock in 1988, Bunten would never be able to recapture the communal alchemy of 1982 to 1985.

Coupled with these changes were other, still more ominous ones in Dan Bunten himself. Those who knew him during these years generally refer only vaguely to his “problems,” and this discretion of course does them credit; I too have no desire to psychoanalyze the man. What does seem clear, however, is that he was growing increasingly unhappy as time wore on. He became more demanding of his colleagues, difficult enough to work with that many of them decided it just wasn’t worth it, even as he became more erratic in his own habits, perhaps due to an alcohol intake that struck many as alarming.

Yet Bunten was nothing if not an enigmatic personality. At the same time that close friends were worrying about his moodiness and his drinking, he could show up someplace like The Computer Game Developers Conference and electrify the attendees with his energy and ideas. Certainly his eyes could still light up when he talked about the games he was making and wanted to make. The worrisome questions were how much longer he would be allowed to make those games in light of their often meager sales, and, even more pressingly, why his eyes didn’t seem to light up about much else in his life anymore.

But, to return to the firmer ground of the actual games he was continuing to make: Modem Wars, his next one, marked the beginning of a new chapter in his tireless quest to get people playing computer games together. “We’ve failed at gathering people around the computer,” Bunten said before starting work on it. “We’re going to have to connect them out of the back by connecting their computers to each other.” He would make, in other words, a game played by two people on two separate computers, connected via modem.

Modem Wars was known as Sport of War until just prior to its release by EA in 1988, and in many ways that was a better title. Its premise is a new version of Bunten’s favorite sport of football, played not by individual athletes but by infantry, artillery, and even aircraft, if you can imagine such a thing. One might call it a mashup between two of his early designs for SSI: the strategic football simulator Computer Quarterback and the proto-real-time-strategy game Cytron Masters.

It’s the latter aspect that makes Modem Wars especially visionary. The game was nothing less than an online real-time-strategy death match years before the world had heard of such a thing. While a rudimentary artificial intelligence was provided for single-player play, it was made clear by the game’s very title that this was strictly a tool for learning to play rather than the real point of the endeavor. Daniel Hockman’s review of Modem Wars for Computer Gaming World ironically describes the qualities of online real-time strategy as a potential “problem” and “marketing weakness” — the very same qualities which a later generation would take as the genre’s main attractions:

A sizable number of gamers are not used to thinking in real-time situations. They can spend hours ordering tens of thousands of men into mortal combat, but they wimp out when they have to think under fire. They want to play chess instead of speed chess. They want to analyze instead of act. As the enemy drones zero in on their comcen, they throw up their hands in frustration when it’s knocked out before they can extract themselves from the maelstrom of fire that has engulfed them.

Whether because gamers really were daunted by this need to think on their feet or, more likely, because of the relative dearth of fast modems and stable online connections in 1988, Modem Wars became another crushing commercial disappointment for Bunten. EA declared themselves “hesitant” to keep pursuing this direction in the wake of the game’s failure. Rather than causing Bunten to turn away from multiplayer gaming, this loss of faith caused him to turn away from EA.

In the summer of 1989, MicroProse Software announced that they had signed a five-year agreement with Bunten, giving them first rights to all of the games he made during that period. The great hidden driver behind the agreement was MicroProse’s own star designer Sid Meier, who had never hidden his enormous admiration for Bunten’s work. Bunten doubtless hoped that a new, more supportive publisher would mark the beginning of a new, more commercially successful era in his career. And in the beginning at least, such optimism would, for once, prove well-founded.

Known at first simply as War!, then as War Room, and finally as Command H.Q., Bunten’s first game for MicroProse was aptly described by its designer as being akin to an abstract, casual board game of military strategy, like Risk or Axis & Allies. The big wrinkle was that this beer-and-pretzels game was to be played in real time rather than turns. But, perhaps in response to complaints about his previous game like those voiced by Daniel Hockman above, the pace is generally far less frenetic this time around. Not only can the player select an overall speed, but the program itself actually takes charge to speed up the action when not much is happening and slow it down when things heat up. Although a computer opponent is provided, the designer’s real focus was once more on modem-to-modem play.

But, whatever its designer’s preferences, MicroProse notably de-emphasized the multiplayer component in their advertising upon Command H.Q.’s release in 1990, and this, combined with a more credible artificial intelligence for the computer opponent, gave it more appeal to the traditional wargame crowd than Modem Wars had demonstrated. Ditto a fair measure of evangelizing done by Computer Gaming World, with whom Bunten had always had a warm relationship, having even authored a regular column there for a few years in the mid-1980s. The magazine’s lengthy review concluded by saying, “This is the game we’ve all been waiting for”; they went on to publish two more lengthy articles on Command H.Q. strategy, and made it their “Wargame of the Year” for 1990. For all these reasons, Command H.Q. sold considerably better than had Bunten’s last couple of games; one report places its total sales at around 75,000 units, enough to make it his second most successful game ever.

With that to buoy his spirits, Bunten made big plans for his next game, Global Conquest. “Think of it as Command H.Q. meets Seven Cities of Gold meets M.U.L.E.,” he said. Drawing heavily from Command H.Q. in particular, as well as the old grand-strategy classic Empire, he aimed to make a globe-spanning strategy game where economics would be as important as military maneuvers. He put together a large and vocal group of play testers on CompuServe, and tried to incorporate as many of their suggestions as possible, via a huge options panel that allowed players to customize virtually every aspect of the game, from the rules themselves to the geography and topography of the planet they were fighting over, all the way down to the look of the icons representing the individual units. This time, up to four humans could play against one another in a variety of ways: they could all play together by taking turns on one computer, or they could each play on their own computer via a local-area network, or four players could share two computers that were connected via modem. The game was turn-based, but with an interesting twist designed to eliminate analysis paralysis: when the first player mashed the “next turn” button, everyone else had just twenty seconds to finish up their own turns before the execution phase began.

In later years, Dan Bunten himself had little good to say about what would turn out to be his last boxed game. In fact, he called it his absolute “worst game” of all the ones he had made. While play-testing in general is a wonderful thing, and every designer should do as much of it as possible, a designer also needs to keep his own vision for what kind of game he wants to make at the forefront. In the face of prominent-in-their-own-right, opinionated testers like Computer Gaming World’s longtime wargame scribe Alan Emrich, Bunten failed to do this, and wound up creating not so much a single coherent strategy game as a sort of strategy-game construction set that baffled more than it delighted. “This game was a hodgepodge rather than an integration,” he admitted several years later. “It was just the opposite of the KISS doctrine. It was a kitchen-sink design. It had everything. Build your own game by struggling through several options menus.” He acknowledged as well that the mounting unhappiness in his personal life, which had now led to a divorce from his third wife, was making it harder and harder to do good work.

Released in 1992, Global Conquest under-performed commercially as well. In addition to the game’s intrinsic failings, it didn’t help matters that MicroProse had just five months prior released Sid Meier’s Civilization, another exercise in turn-based grand strategy on a global scale, also heavily influenced by Empire, that managed to be far more thematically and texturally ambitious while remaining more focused and playable as a game — albeit without the multiplayer element that was so important to Bunten.

But of course, there’s more to a game than whether it’s played by one person or more than one, and it strikes me as reasonable to question whether Bunten was beginning to lose his way as a designer in other respects even as he stuck so obstinately to his multiplayer guns. Setting aside their individual strengths and failings, the final three boxed games of Bunten’s career, with their focus on “wars” and “command” and “conquest,” can feel a little disheartening when compared to what came before. Games like M.U.L.E., Robot Rascals, and to some extent even Seven Cities of Gold and Heart of Africa had a different, friendlier, more welcoming personality. This last, more militaristic trio feels like a compromise, the product of a Dan Bunten who said that, if he couldn’t bring multiplayer gaming to the masses, he would settle for the grognard crowd, indulging their love for guns and tanks and bombs. So be it. Now, though, he was about to give that same crowd the shock of their lives.

In November of 1992, just months after completing the supremely masculine wargame Global Conquest, Dan Bunten had sexual-reassignment surgery, becoming the woman Danielle “Dani” Bunten Berry. (For continuity’s sake, I’ll generally continue to refer to her by the shorthand of “Bunten” rather than “Berry” for the remainder of this article.) It’s not for us to speculate about the personal trauma that must have accompanied such a momentous decision. What we can and should take note of, however, is that it was an unbelievably brave decision. For all that we still have a long way to go today when it comes to giving transsexuals the rights and respect they deserve, the early 1990s were a far less enlightened time than even our own on this issue. And it wasn’t as if Bunten could take comfort in the anything-goes anonymity of a New York City or San Francisco. Dan Bunten had lived, and as Dani Bunten now continued to live, in the intensely conservative small-town atmosphere of Little Rock, Arkansas. Many of those closest to her disowned her, including her mother and her ex-wives, making it heartbreakingly difficult for her to maintain a relationship with her children. She had remained in Little Rock all these years, at no small cost to her career prospects, largely because of these ties of blood, which she had believed to be indissoluble. This rejection, then, must have felt like the bitterest of betrayals.

The games industry as well, with its big-breasted damsels in distress and its machine-gun-toting male heroes, wasn’t exactly notable for its enlightened attitudes toward sex and gender. Many of Bunten’s old friends and colleagues would see her for the first time after her surgery and convalescence at the Game Developers Conference scheduled for April of 1993, and they looked forward to that event with almost as much trepidation as Bunten herself must have felt. It was all just so very unexpected. To whatever extent they had carried around a mental image of a man who would choose to become a woman, Dan Bunten didn’t fit the profile at all. He had been the games industry’s own Ozark Mountains boy, a true son of the South, always ready with his “folksy mountain humor” (read, “dirty jokes”). His rangy frame stood six feet two inches tall. He loved nothing more than a rough-and-tumble game of back-lot football, unless it be beer and poker afterward. As his three ex-wives and three children attested, he had certainly seemed to like women, but no one had ever imagined that he liked them enough to want to be one. What were they supposed to say to him — er, to her — now?

They needn’t have worried. Dani Bunten handled her coming-out party with the same low-key grace and humor she would display for the rest of her life as a woman. She said that she had made the switch to do her part to redress the gender imbalance inside the industry, and to help improve the aesthetics of game designers to match the improving aesthetics of their games. The tension dissipated, and soon everyone got into the spirit of the thing. A straw poll named Dani Bunten the game designer most likely to appear on the Oprah Winfrey Show. A designer named Gordon Walton had a typical experience: “I was put off when she made the change to become Dani, until the minute I spoke to her. It was clear to me she was much happier as Dani, and if anything an even more incredible person.” Another GDC regular remembered the “unhappy man” from the 1992 event, “sitting on the hallway floor drinking and smoking,” and contrasted him with the “happy woman” he now saw.

No one with any interest in the inner workings of those strangest of creatures, their fellow humans, could fail to be fascinated by Bunten’s dispatches from both sides of the gender divide. “Aren’t there things you’ve always wanted to know about women but were afraid to ask?” she said. “Well, now’s your chance!”

I had to learn a lot to actually “count” as a woman! I had to learn how to walk, speak, dress as a woman. Those little things which are necessary so that other people don’t [feel] alienated. There’s a little summary someone gave me to make clear what being a woman means: as a woman you have to sing when you speak, dance when you walk, and you have to open your heart… I know how stereotypical that sounds, but it is true! Speech for a man is something completely different: the melody of speech is fast, monotone, and decreases at the end of a sentence. Sometimes, this still happens to me, and people are always irritated. Female speech is a little bit like song – we have a lot more melody and different speech patterns. Walking is really a bit like dancing: slower and connected, with a lot of subtle movements. I enjoyed it at once.

She had few filters when talking about the nitty-gritty details:

One of the saddest changes I had to deal with after my operation was the fact that I couldn’t aim anymore when urinating. Boys — I have two little sons and a daughter — simply love to aim.

Bunten said that, in keeping with her new identity, she didn’t feel much desire to design any more wargames; this led to the end of her arrangement with MicroProse. By way of compensation, Electronic Arts that year released a nicely done “commemorative edition” of Seven Cities of Gold, complete with dramatically upgraded graphics and sound to suit the times. Bunten had little to nothing to do with the project, but it sold fairly well, and perhaps helped to remind her of her roots.

In the same spirit, Bunten’s first real project after her transformation became a new version of M.U.L.E. EA’s founder Trip Hawkins had always named that game as one of his all-time favorites, and had frequently stated how disappointed he was that it had never gotten the attention it deserved. Now, Hawkins had left his day-to-day management role at EA to run 3DO, a spin-off company peddling a multimedia set-top box for the living room. Hawkins thought M.U.L.E. would be perfect for the platform, and recruited Bunten to make it happen. It was a dream project; showing excellent taste, she still regarded M.U.L.E. as the best thing she had ever done. But the dream quickly began to sour.

3DO first requested that, instead of taking turns managing their properties on the map, players all be allowed to do so simultaneously. Bunten somewhat reluctantly agreed. And then:

As soon as I added the simultaneity, it instantly put into their heads, “Why can’t we shoot at each other?” And I said, “No guns.” And they said, “What about bombs? Can we drop a bomb in front of you? It won’t hurt you. It will be a cartoon thing, it will just slow you down.” And I said, “You don’t get it. It’s changing the whole notion of how this thing works!”

[3DO is] staking its future on the idea of a new generation of hardware and therefore, you’d assume, a new generation of software, but they said, “No, our market is still 18 to 35, male. We need something with action, something with intensity.” Chrome and sizzle. Ugh.

In the end, Bunten walked out, disappointed enough that she seriously considered getting out of games altogether, going so far as to apply for jobs as the industrial engineer Dan Bunten had once been before his first personal computer came along.

Instead she found a role with a new company called Mpath as a design and strategy consultant. The goal of that venture was to bring multiplayer gaming to the new frontier of the World Wide Web, and its founders included her fellow game designer Brian Moriarty, of Infocom and LucasArts fame. She also studied the elusive concept of “games for girls” in association with a think tank set up by Microsoft co-founder Paul Allen; some of her proposals would later come to market as the products of Purple Moon, Brenda Laurel’s brief-lived but important publisher of games for girls aged 8 to 14.

Offers to do conventional boxed games as sole designer, however, weren’t forthcoming; how much that was down to lingering personal prejudices against her for her changed sex and how much to the fact that the games she wanted to make just weren’t considered commercially viable must always be open for debate. Refusing as usual to be a victim, Bunten said that her “priorities had shifted” since her change anyway: “I don’t identify myself with the job as strongly as before.” Deciding that, for her, heaven was other people after a life spent programming computers, she devoured anthropology texts and riffed on Carl Jung’s theories of a collective unconscious. “Literature, anthropology, and even dance,” she noted, “have a good deal more to teach designers about human drives and abilities than the technologists of either end of California, who know silicon and celluloid but not much else.” So, she bided her time as a designer, waiting for a more inclusive ludic future to arrive. At the 1997 GDC, she described a prescient vision of “small creative shops” freed from the inherent conservatism of the “distribution trap” by the magic of the Internet.

That future would indeed come to pass — but, sadly, not in time for Dani Bunten Berry to see it. Shortly after delivering that speech, she went to see her doctor about a persistent cough, whereupon she was diagnosed with an advanced case of lung cancer. In one of those cruel ironies which always seem to dog the lives of us poor mortals, she had finally kicked a lifelong habit of heavy smoking just a few months before.

She appeared in public for the last time in May of 1998. The occasion was, once again, the Game Developers Conference, where she had always shone so. She struggled audibly for breath as she gave the last presentation of her life, entitled “Do Online Games Still Suck?,” but her passion carried her through. At the end of the conference, at a special ceremony held aboard the Queen Mary in Long Beach Harbor, she was presented with the first ever GDC Lifetime Achievement Award. The master of ceremonies for that evening was her friend and colleague Brian Moriarty, who knew, like everyone else in attendance, that the end was near. He closed his heartfelt tribute thus:

It is no exaggeration to characterize tonight’s honoree as the world’s foremost authority on multiplayer computer games. Nobody has worked harder to demonstrate how technology can be used to realize one of the noblest of human endeavors: bringing people together. Historians of electronic gaming will find in these eleven boxes the prototypes of the defining art form of the 21st century.

As one of those historians, I can only heartily concur with his assessment.

It would be nice to say that Dani Bunten passed peacefully to her rest. But, as anyone with any experience with lung cancer will recognize, that just isn’t how the disease works. Throughout her life, she had done nothing the easy way, and her death — ugly, painful, and slow — was no exception. On the brighter side, she did reconcile to some extent with her mother and other family members and friends who had rejected her. The end came on July 3, 1998. Rather incredibly in light of the prodigious, multifaceted life she had lived, she was just 49 years old.

It’s a life which resists pigeonholing or sloganeering. Bunten herself explicitly rejected the role of transgender advocate, inside or outside of the games industry. Near the end of her life, she expressed regret for her decision to change her physical sex, saying she could have found ways to live in a more gender-fluid way without taking such a drastic step. Whether this was a reasoned evaluation or a product of the pain and trauma of terminal illness must remain, like so much else about her, an enigma.

What is clear, however, is that Bunten, through the grace and humor with which she handled her transition and through her refusal to go away and hide thereafter as some might have wished, taught others in the games industry who were struggling with similar issues of identity that a new gender need not mean a decisive break with every aspect of one’s past — that a prior life in games could continue to be a life in games even with a different pronoun attached. She did this in a quieter way than the speechifying some might have wished for from her, but, nevertheless, do it she did. Jessica Mulligan, who transitioned from male to female a few years after her, remembers meeting Bunten shortly before her own sexual-reassignment surgery, hoping to hear some “profound words on The Transition”: “While I was looking for spiritual guidance, she was telling me where to shop for shoes. Talk about keeping someone honest! Every change in our personal lives is profound to us. You still have to pay attention to the nuts and bolts or the change is meaningless.”

For some, of course — even for some with generally good intentions — Danielle Bunten Berry’s transgenderism will always be the defining aspect of her life, her career in games a mere footnote to that other part of her story. But that’s not how she would have wanted it. She regarded her games as her greatest legacy after her children, and would doubtless want to be remembered as a game designer above all else.

Back in 1989, after Modem Wars had failed in the marketplace, Electronic Arts decided that the lack of “a network of people to play” was a big reason for its failure. The great what-if question pertaining to Bunten’s career is what she might have done in partnership with an online network like CompuServe, which could have provided stable connectivity along with an eager group of players and all the matchmaking and social intrigue anyone could ask for. She finally began to explore this direction late in her life, through her work with Mpath. But what might have happened if she had made the right connections — forgive the pun! — earlier? We can only speculate.

As it is, though, it’s true that, in terms of units shifted and profits generated, there have been far more impressive careers. She suffered the curse of any pioneer who gets too far out in front of the culture. All of her eleven games combined probably sold no more than 400,000 copies at the outside, a figure some prominent designers’ new games can easily better in their first week today. Certainly her commercial disappointments far outnumber her successes. But then, sales aren’t the only metric by which to measure success.

Dani Bunten, one might say, is the designer’s designer. Greg Costikyan once told what happened when he offered to introduce Warren Spector — one of those designers who can sell more games in a week than Bunten did in a lifetime — to her back in the day: “He regretfully refused; he had loved M.U.L.E. so much he was afraid he wouldn’t know what to say. He would sound like a blithering fanboy and be embarrassed.” Chris Crawford calls the same title simply “the best computer-game design of all time.” Brenda Laurel dedicated Purple Moon’s output to Bunten. Sid Meier was so taken with Seven Cities of Gold that Pirates!, Railroad Tycoon, and Civilization, his trilogy of masterpieces, can all be described as extensions in one way or another of what Bunten first wrought. And Seven Cities of Gold was only Meier’s second favorite Bunten game: he loved M.U.L.E. so much that he was afraid to even try to improve on it.

Ironically, the very multiplayer affordances that Bunten so steadfastly refused to give up on, much to the detriment of her income, continue to make it difficult for her games to be seen at their best today. M.U.L.E. can be played as its designer really intended it only on an Atari 8-bit computer — real or emulated — with four vintage joysticks plugged in and four players holding onto them in a single living room; that is, needless to say, not a trivial thing to arrange in this day and age. Likewise, the need to have the exceedingly rare physical cards to hand has made it impossible for most people to even try out Robot Rascals today. (It took me months to track down a pricey German edition on eBay.) And Bunten’s final run of boxed games, reliant on ancient modem hookups as they are, are even more difficult to play with others today than they were in their own time.

Dani Bunten didn’t have an easy life, internally or externally. She remained always an enigma — the life of the party who goes home alone, the proverbial stranger among her best friends. One person who knew her after she became a woman claimed she still had a “shadowed, slightly haunted look, even when she was smiling.” Given the complicated emotions that are still stirred up in so many of us by transgenderism, that may have been projection. On the other hand, though, it may have been perception. Even Bunten’s childhood had been haunted by the specter of familial discord and possibly abuse, to such an extent that she refused to talk much about it. But she did once tell Greg Costikyan that she grew up loving games mainly because it was only when playing them that her family wasn’t “totally dysfunctional.”

I think that for Dani Bunten games were most of all a means of communication, a way of punching through that bubble of ego and identity that isolates all of us to one degree or another, and that perhaps isolated her more so than most. Thus her guiding vision became, as Sid Meier puts it, “the family gathered around the computer.” After all, it’s a small step to go from communicating to connecting, from connecting to loving. She openly stated that she had made Robot Rascals for her own family most of all: “They’ve never played my games. I think they found them too esoteric or complex. I wanted something that I could enjoy with them, that they’d all be able to relate to.” The tragedy for her — perhaps a key to the essential sadness many felt at Bunten’s core, whether she was living as a man or a woman — is that reality never quite lived up to that Norman Rockwell dream of the happy family gathered around a computer; her daughter, the duly appointed caretaker of her legacy, still calls M.U.L.E. “boring and tedious” today. But the dream remains, and her games have given those of us privileged to discover them great joy and comfort in the midst of lives that have admittedly — hopefully! — been far easier than that of their creator. And so I’ll close, in predictable but unavoidable fashion, with Danielle Bunten Berry’s most famous quote — a quote predictable precisely because it so perfectly sums up her career: “No one on their death bed ever said, ‘I wish I had spent more time alone with my computer!’” Words to live by, my fellow gamers. Words to live by.

(Sources: Compute! of March 1989, December 1989, April 1990, January 1992, and December 1993; Questbusters of May 1986; Commodore Power Play of June/July 1986; Commodore Magazine of July 1987, October 1988, and June 1989; Ahoy! of March 1987; Computer Gaming World of January/February 1987, May 1988, February 1989, February 1990, December 1990, February 1991, March 1991, May 1991, April 1992, June 1992, August 1992, June 1993, August 1993, July 1994, September 1995, and October 1998; Family Computing of January 1987; Compute!’s Gazette of August 1989; The One of April 1991; Game Players PC Entertainment of September 1992; Game Developer of February/March 1995, July 1998, September 1998, and October 1998; Electronic Arts’s newsletter Farther of Winter 1986; Power Play of January 1995; Arkansas Times of February 8 2012. Online sources include the archived contents of the old World of Mule site, the archived contents of a Danielle Bunten Berry tribute site, the Salon article “Get Behind the M.U.L.E.”, and Bunten’s interview at Halcyon Days.)