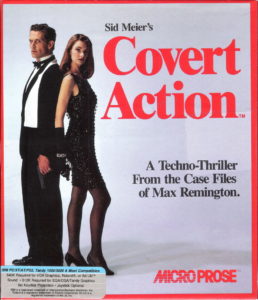

Covert Action’s cover is representative of the thankfully brief era when game publishers thought featuring real models on their boxes would drive sales. The results almost always ended up looking like bad romance-novel covers; this is actually one of the least embarrassing examples. (For some truly cringeworthy examples of artfully tousled machismo, see the Pirates! reissue or Space Rogue.)

In the lore of gaming there’s a subset of spectacular failures that have become more famous than the vast majority of successful games. From E.T.: The Extra-Terrestrial to Daikatana to Godus, this little rogues’ gallery inhabits its own curious corner of gaming history. The stories behind these games, carrying with them the strong scent of excess and scandal, can’t help but draw us in.

But there are also other, less scandalous cases of notable failure to which some of us continually return for reasons other than schadenfreude. One such case is that of Covert Action, Sid Meier and Bruce Shelley’s 1990 game of espionage. Covert Action, while not a great or even a terribly good game, wasn’t an awful game either. And, while it wasn’t a big hit, nor was it a major commercial disaster. By all rights it should have passed into history unremarked, like thousands of similarly middling titles before and after it. The fact that it has remained a staple of discussion among game designers for some twenty years now in the context of how not to make a game is due largely to Sid Meier himself, a very un-middling designer who has never quite been able to get Covert Action, one of his few disappointing games, out of his craw. Indeed, he dwells on it to such an extent that the game and its real or perceived problems still tend to rear their heads every time he delivers a lecture on the art of game design. The question of just what’s the matter with Covert Action — the question of why it’s not more fun — continues to be asked and answered over and over, in the form of Meier’s own design lectures, extrapolations on Meier’s thesis by others, and even the occasional contrarian apology telling us that, no, actually, nothing’s wrong with Covert Action.

What with piling onto the topic having become such a tradition in design circles, I couldn’t bear to let Covert Action’s historical moment go by without adding the weight of this article to the pile. But first, the basics for those of you who wouldn’t know Covert Action if it walked up and invited you to dinner.

As I began to detail in my previous article, Covert Action’s development at MicroProse, the company at which Sid Meier and Bruce Shelley worked during the period in question, was long by the standards of its time, troubled by the standards of any time, and more than a little confusing to track in our own time. Begun in early 1988 as a Commodore 64 game by Lawrence Schick, another MicroProse designer, it was conceived from the beginning as essentially an espionage version of Sid Meier’s earlier hit Pirates! — as a set of mini-games the player engaged in to affect the course of an overarching strategic game. But Schick found that he just couldn’t get the game to work, and moved on to something else. And that would have been that — except that Sid Meier had become intrigued by the idea, and picked it up for his own next project, moving it in the process from the Commodore 64 to MS-DOS, where it would have a lot more breathing room.

In time, though, the enthusiasm of Meier and his assistant designer Bruce Shelley also began to evaporate; they started spending more and more time dwelling on an alternative design. By August of 1989, they were steaming ahead with Railroad Tycoon, and all work on Covert Action for the nonce had ceased.

After Railroad Tycoon was completed and released in April of 1990, Meier and Shelley returned to Covert Action only under some duress from MicroProse’s head Bill Stealey. With the idea that would become Civilization already taking shape in Meier’s head, his enthusiasm for Covert Action was lower than ever, but needs must. As Shelley tells the story, Meier’s priorities were clear in light of the idea he had waiting in the wings. “We’re just getting this game done,” Meier said of Covert Action when Shelley tried to suggest ways of improving the still somehow unsatisfying design. “I’ve got to get this game finished.” It’s hard to avoid the impression that in the end Meier simply gave up on Covert Action. Yet, given the frequency with which he references it to this day, it seems equally clear that that capitulation has never sat well with him.

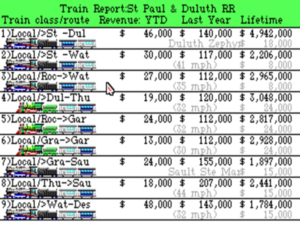

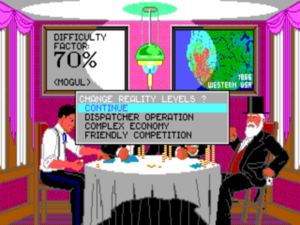

Covert Action casts you as the master spy Max Remington — or, in a nice nod to gender equality that was still unusual in a game of this era, as Maxine Remington. Max is the guy the CIA calls when they need someone to crack the really tough cases. The game presents you with a series of said tough cases, each involving a plot by some combination of criminal and/or terrorist groups to do something very bad somewhere in the world. Your objective is to figure out what group or groups are involved, figure out precisely what they’re up to, and foil their plot before they bring it to fruition. As usual for a Sid Meier game, you can play on any of four difficulty levels to ensure that everyone, from the rank beginner to the most experienced super-sleuth, can be challenged without being overwhelmed. If you do your job well, you will arrest the person at the top of the plot’s org chart, one of the game’s 26 evil masterminds. Once no more masterminds are left to arrest, Max can walk off into the sunset and enjoy a pleasant retirement, confident that he has made the world a safer place. (If only counter-terrorism were that easy in real life, right?)

The game lets Max/Maxine score with progressively hotter members of the opposite sex as he/she cracks more cases.

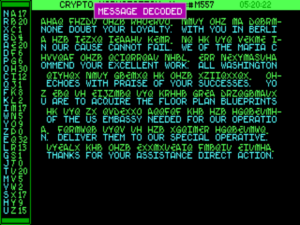

The strategic decisions you make in directing the course of your investigation will lead to naught if you don’t succeed at the various mini-games. These include rewiring a junction box to tap a suspect’s phone (Covert Action presents us with a weirdly low-tech version of espionage, even for its own day); cracking letter-substitution codes to decipher a suspect’s message traffic; tailing or chasing a suspect’s car; and, in the most elaborate of the mini-games, breaking into a group’s hideaway to either collect intelligence or make an arrest.

Covert Action seems to have all the makings of a good game — perhaps even another classic like its inspiration, Pirates!. But, as Sid Meier and most of the people who have played it agree, it doesn’t ever quite come together to become a holistically satisfying experience.

It’s not immediately obvious just why that should be the case; thus all of the discussion the game has prompted over the years. Meier does have his theory, to which he’s returned enough that he’s come to codify it into a universal design dictum he calls “The Covert Action rule.” For my part… well, I have a very different theory. So, first I’ll tell you about Meier’s theory, and then I’ll tell you about my own.

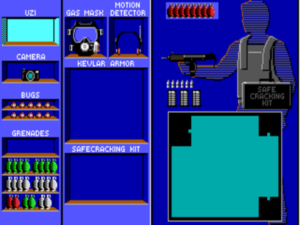

Meier’s theory hinges on the nature of the mini-games. He doesn’t believe that any of them are outright bad by any means, but does feel that they don’t blend well with the overarching strategic game, resulting in a lumpy stew of an experience that the player has trouble digesting. He’s particularly critical of the breaking-and-entering mini-game — a “mini-game” complicated enough that one could easily imagine it being released as a standalone game for the previous generation of computers (or, for that matter, for Covert Action’s contemporaneous generation of consoles). Before you begin the breaking-and-entering game, you must choose what Max will carry with him: depending on your goals for this mission, you can give him some combination of a pistol, a sub-machine gun, a camera, several types of grenades, bugs, a Kevlar vest, a gas mask, a safe-cracking kit, and a motion detector. The underground hideaways and safe houses you then proceed to explore are often quite large, and full of guards, traps, and alarms to avoid or foil as you snoop for evidence or try to spirit away a suspect. You can charge in with guns blazing if you like, but, especially at the higher difficulty levels, that’s not generally a recipe for success. This is rather a game of stealth, of lurking in the shadows as you identify the guards’ patrol patterns, the better to avoid or quietly neutralize them. A perfectly executed mission in many circumstances will see you get in and out of the building without having to fire a single shot.

The aspect of this mini-game which Meier pinpoints as its problem is, somewhat ironically, the very ambition and complexity which make it so impressive when considered alone. A spot of breaking and entering can easily absorb a very tense and intense half an hour of your time. By the time you make it out of the building, Meier theorizes, you’ve lost track of why you went in in the first place — lost track, in other words, of what was going on in the strategic game. Meier codified his theory in what has for almost twenty years been known in design circles as “the Covert Action rule.” In a nutshell, the rule states that “one good game is better than two great ones” in the context of a single game design. Meier believes that the mini-games of Covert Action, and the breaking-and-entering game in particular, can become so engaging and such a drain on the player’s time and energies that they clash with the strategic game; we end up with two “great games” that never make a cohesive whole. This dissonance never allows the player to settle into that elusive sense of total immersion which some call “flow.” Meier believes that Pirates! works where Covert Action doesn’t because the former’s mini-games are much shorter and much less complicated — getting the player back to the big picture, as it were, quickly enough that she doesn’t lose the plot of what the current situation is and what she’s trying to accomplish.

It’s an explanation that makes a certain sense on its face, yet I must say that it’s not one that really rings true to my own experiences with either games in general or Covert Action in particular. Certainly one can find any number of games which any number of players have hugely enjoyed that seemingly violate the Covert Action rule comprehensively. We could, for instance, look to the many modern CRPGs which include “sub-quests” that can absorb many hours of the player’s time, to no detriment to the player’s experience as a whole, at least if said players’ own reports are to be believed. If that’s roaming too far afield from the type of game which Covert Action is, consider the case of the strategy classic X-Com, one of the most frequently cited of the seeming Covert Action rule violators that paradoxically succeed as fun designs. It merges an overarching strategic game with a game of tactical combat that’s far more time-consuming and complicated than even the breaking-and-entering part of Covert Action. And yet it must place high in any ranking of the most beloved strategy games of all time. As we continue to look at specific counterexamples like X-Com or, for that matter, Pirates!, we can only continue to believe in the Covert Action rule by applying lots of increasingly tortured justifications for why this or that seemingly blatant violator nevertheless works as a game. So, X-Com, Meier tells us, works because the strategic game is relatively less complicated than the tactical game, leaving enough of the focus on the tactical game that the two don’t start to pull against one another. And Pirates!, of course, is just the opposite.

I can only say that when the caveats and exceptions to any given rule start to pile up, one is compelled to look back to the substance of the rule itself. As nice as it might be for the designers of Covert Action to believe the game’s biggest problem is that its individual parts were just each too darn ambitious, too darn good, I don’t think that’s the real reason the game doesn’t work.

So, we come back to the original question: just what is the matter with Covert Action? I don’t believe that Covert Action’s core malady can be found in the mini-games, nor for that matter in the strategic game per se. I rather believe the problem is with the mission design and with the game’s fiction — which, as in so many games, are largely one and the same in this one. The cases you must crack in Covert Action are procedurally generated by the computer, using a set of templates into which are plugged different combinations of organizations, masterminds, and plots to create what is theoretically a virtually infinite number of potential cases to solve. My thesis is that it’s at this level — the level of the game’s fiction — where Covert Action breaks down; I believe that things have already gone awry as soon as the game generates the case it will ask you to solve, well before you make your first move. The artificiality of the cases, for lack of a better word, is never hard to detect. Even before you start to learn which of the limited number of templates are which, the stories just feel all wrong.
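To make the idea of template-driven case generation concrete, here is a minimal sketch in Python. It is not a reconstruction of MicroProse's actual generator, whose internals I don't know; the group names, mastermind names, plot templates, and function names are all placeholders invented for illustration.

```python
import itertools
import random

# All names below are illustrative placeholders, not data from the actual game.
ORGANIZATIONS = ["Group A", "Group B", "Group C", "Group D"]
MASTERMINDS = ["Mastermind 1", "Mastermind 2", "Mastermind 3"]
PLOT_TEMPLATES = [
    "{lead} hires {partner} to steal a prototype for {mastermind}",
    "{lead} and {partner} plan a kidnapping ordered by {mastermind}",
]


def generate_case(rng):
    """Fill one randomly chosen template with randomly chosen parts."""
    lead, partner = rng.sample(ORGANIZATIONS, 2)  # two distinct groups
    mastermind = rng.choice(MASTERMINDS)
    template = rng.choice(PLOT_TEMPLATES)
    return template.format(lead=lead, partner=partner, mastermind=mastermind)


def count_possible_cases():
    """Count every distinct case this tiny generator can produce."""
    ordered_pairs = len(list(itertools.permutations(ORGANIZATIONS, 2)))
    return ordered_pairs * len(MASTERMINDS) * len(PLOT_TEMPLATES)


if __name__ == "__main__":
    rng = random.Random(1990)
    for _ in range(3):
        print(generate_case(rng))
    print(count_possible_cases(), "distinct cases in total")
```

Even this toy, with four groups, three masterminds, and two templates, yields 72 distinct cases, and the count multiplies quickly as each list grows. Nothing in the generator knows or cares what the labels stand for, though, which is exactly why any group can end up partnered with any other.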

Literary critics have a special word, “mimesis,” which they tend to deploy when a piece of fiction conspicuously passes or fails the smell test of immersive believability. Dating back to classical philosophy, “mimesis” technically means the art of “showing” a story — as opposed to “diegesis,” the art of telling. It’s been adopted by theorists of textual interactive fiction as well as a stand-in for all those qualities of a game’s fiction that help to immerse the player in the story, that help to draw her in. “Crimes against Mimesis” — the name of an influential Usenet post written in 1996 by Roger Giner-Sorolla — are all those things, from problems with the interface to obvious flaws in the story’s logic to things that just don’t ring true somehow, that cast the player jarringly out of the game’s fiction — that reveal, in other words, the mechanical gears grinding underneath the game’s fictional veneer. Covert Action is full of these crimes against mimesis, full of these gears poking above the story’s surface. Groups that should hate each other ally with one another: the Colombian Cartel, the Mafia, the Palestine Freedom Organization (some names have been changed to protect the innocent or not-so-innocent), and the Stasi might all concoct a plot together. Why not? In the game’s eyes, they’re just interchangeable parts with differing labels on the front; they might as well have been called “Group A,” “Group B,” etc. When they send messages to one another, the diction almost always rings horribly, jarringly wrong in the ears of those of us who know what the groups represent. Here’s an example in the form of the Mafia talking like Jihadists.

If Covert Action had believable, mimetic, tantalizing — or at least interesting — plots to foil, I submit that it could have been a tremendously compelling game, without changing anything else about it. Instead, though, it’s got this painfully artificial box of whirling gears. Writing in the context of the problems of procedural generation in general, Kate Compton has called this the “10,000 Bowls of Oatmeal Problem.”

> I can easily generate 10,000 bowls of plain oatmeal, with each oat being in a different position and different orientation, and mathematically speaking they will all be completely unique. But the user will likely just see a lot of oatmeal. Perceptual uniqueness is the real metric, and it’s darn tough. It is the difference between an actor being a face in a crowd scene and a character that is memorable.

Assuming that we can agree to agree, at least for now, that we’ve hit upon Covert Action’s core problem, it’s not hard to divine how to fix it. I’m imagining a version of the game that replaces the infinite number of procedurally-generated cases with 25 or 30 hand-crafted plots, each with its own personality and its own unique flavor of intrigue. Such an approach would fix another complaint that’s occasionally leveled against Covert Action: that it never becomes necessary to master or even really engage with all of its disparate parts because it’s very easy to rely just on those mini-games you happen to be best at to ferret out all of the relevant information. In particular, you can discover just about everything you need in the files you uncover during the breaking-and-entering game, without ever having to do much of anything in the realm of wire-tapping suspects, tailing them, or cracking their codes. This too feels like a byproduct of the generic templates used to construct the cases, which tend to err on the safe side to ensure that the cases are actually soluble, preferring — justifiably, in light of the circumstances — too many clues to too few. But this complaint could easily be fixed using hand-crafted cases. Different cases could be consciously designed to emphasize different aspects of the game: one case could be full of action, another more cerebral and puzzle-like, etc. This would do yet more to give each case its own personality and to keep the game feeling fresh throughout its length.

The most obvious argument against hand-crafted cases, other than the one, valid only from the developers’ standpoint, of the extra resources it would take to create them, is that it would exchange a game that is theoretically infinitely replayable for one with a finite span. Yet, given that Covert Action isn’t a hugely compelling game in its historical form, one has to suspect that my proposed finite version of it would likely yield more actual hours of enjoyment for the average player than the infinite version. Is a great game that lasts 30 hours and then is over better than a mediocre one that can potentially be played forever? The answer must depend on individual circumstances as well as individual predilections, but I know where I stand, at least as long as this world continues to be full of more cheap and accessible games than I can possibly play.

But then there is one more practical objection to my proposed variation of Covert Action, or rather one ironclad reason why it could never have seen the light of day: this simply isn’t how Sid Meier designs his games. Meier, you see, stands firmly on the other side of a longstanding divide that has given rise to no small dissension over the years in the fields of game design and academic game studies alike.

In academia, the argument has raged for twenty years between the so-called ludologists, who see games primarily as dynamic systems, and the narratologists, who see them primarily as narratives. Yet at its core the debate is actually far older even than that. In the December 1987 issue of his Journal of Computer Game Design, Chris Crawford fired what we might regard as the first salvo in this never-ending war via an article entitled “Process Intensity.” The titular phrase meant, he explained, “the degree to which a program emphasizes processes instead of data.” While all games must have some amount of data — i.e., fixed content, including fixed story content — a more process-intensive game — one that tips the balance further in favor of dynamic code as opposed to static data — is almost always a better game in Crawford’s view. That all games aren’t extremely process intensive, he baldly states, is largely down to the laziness of their developers.

> The most powerful resistance to process intensity, though, is unstated. It is a mental laziness that afflicts all of us. Process intensity is so very hard to implement. Data intensity is easy to put into a program. Just get that artwork into a file and read it onto the screen; store that sound effect on the disk and pump it out to the speaker. There’s instant gratification in these data-intensive approaches. It looks and sounds great immediately. Process intensity requires all those hours mucking around with equations. Because it’s so indirect, you’re never certain how it will behave. The results always look so primitive next to the data-intensive stuff. So we follow the path of least resistance right down to data intensity.

Crawford, in other words, is a ludologist all the way. There’s always been a strongly prescriptive quality to the ludologists’ side of the ludology-versus-narratology debate, an ideology of how games ought to be made. Because processing is, to use Crawford’s words again, “the very essence of what a computer does,” the capability that in turn enables the interactivity that makes computer games unique as a medium, games that heavily emphasize processing are purer than those that rely more heavily on fixed data.

It’s a view that strikes me as short-sighted in a number of ways. It betrays, first of all, a certain programmer and systems designer’s bias against the artists and writers who craft all that fixed data; I would submit that the latter skills are every bit as worthy of admiration and every bit as valuable on most development teams as the former. Although even Crawford acknowledges that “data endows a game with useful color and texture,” he fails to account for the appeal of games where that very color and texture — we might instead say the fictional context — is the most important part of the experience. He and many of his ludologist colleagues are like most ideologues in failing to admit the possibility that different people may simply want different things, in games as in any other realm. Given the role that fixed stories have come to play in even many of the most casual modern games, too much ludologist rhetoric verges on telling players that they’re wrong for liking the games they happen to like. This is not to apologize for railroaded experiences that give the player no real role to play whatsoever and thereby fail to involve her in their fictions. It’s rather to say that drawing the line between process and data can be more complicated than saying “process good, data bad” and proceeding to act accordingly. Different games are at their best with different combinations of pre-crafted and generative content. Covert Action fails as a game because it draws that line in the wrong place. It’s thanks to the same fallacy, I would argue, that Chris Crawford has been failing for the last quarter century to create the truly open-ended interactive-story system he calls Storytron.

Sid Meier is an endlessly gracious gentleman, and thus isn’t so strident in his advocacy as many other ludologists. But despite his graciousness, there’s no doubt on which side of the divide he stands. Meier’s games never, ever include rigid pre-crafted scenarios or fixed storylines of any stripe. In most cases, this has been fine because his designs have been well-suited to the more open-ended, generative styles of play he favors. Covert Action, however, is the glaring exception, revealing one of the few blind spots of this generally brilliant game designer. Ironically, Meier had largely been drawn to Covert Action by what he calls the “intriguing” problem of its dynamic case generator. The idea of being able to use the computer to do the hard work of generating stories, and thereby to be able to churn out infinite numbers of the things at no expense, has always enticed him. He continues to muse today about a Sherlock Holmes game built using computer-generated cases, working backward from the solution of a crime to create a trail of clues for the player to follow.
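For the curious, the general idea of working backward from a solution might look something like the following Python sketch. This is purely my own illustration of the technique, not anything Meier has described in detail; the suspects, weapons, rooms, and function names are all invented.

```python
import random

# Hypothetical sketch: every name here is invented for illustration.
CATEGORIES = {
    "culprit": ["the butler", "the governess", "the colonel"],
    "weapon": ["a poker", "a revolver", "a hatpin"],
    "room": ["the library", "the conservatory", "the cellar"],
}


def generate_mystery(rng):
    """Pick the solution first, then derive clues that point back to it."""
    solution = {name: rng.choice(options) for name, options in CATEGORIES.items()}
    # One clue per wrong answer: each clue rules out a single option,
    # so the full set of clues leaves exactly one consistent solution.
    clues = [
        (name, option)
        for name, options in CATEGORIES.items()
        for option in options
        if option != solution[name]
    ]
    rng.shuffle(clues)  # the player meets the clues in no particular order
    return solution, clues


def solve(clues):
    """Eliminate every ruled-out option and return what remains."""
    remaining = {name: set(options) for name, options in CATEGORIES.items()}
    for name, ruled_out in clues:
        remaining[name].discard(ruled_out)
    return {name: next(iter(left)) for name, left in remaining.items()}


if __name__ == "__main__":
    solution, clues = generate_mystery(random.Random(221))
    for name, ruled_out in clues:
        print(f"Clue: the {name} was not {ruled_out}")
    print("Deduced:", solve(clues))
```

Building the clues from the solution guarantees that every generated mystery can be solved. It does nothing to make one mystery feel different from the next.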

Meier is hardly alone in the annals of computer science and game design in finding the problem of automated story-making intriguing. Like his Sherlock Holmes idea, many experiments with procedurally-generated narratives have worked with mystery stories, that most overtly game-like of all literary genres; Covert Action’s cases as well can be considered variations on the mystery theme. As early as 1971, Sheldon Klein, a professor at the University of Wisconsin, created something he called an “automatic novel writer” for auto-generating “2100-word murder-mystery stories.” In 1983, Electronic Arts released Jon Freeman and Paul Reiche III’s Murder on the Zinderneuf as one of their first titles; it allowed the player to solve an infinite number of randomly generated mysteries occurring aboard its titular Zeppelin airship. That game’s flaws feel oddly similar to those of Covert Action. As in Covert Action, in Murder on the Zinderneuf the randomized cases never have the resonance of a good hand-crafted mystery story. That, combined with their occasional incongruities and the patterns that start to surface as soon as you’ve played a few times, means that you can never forget their procedural origins. These tales of intrigue never manage to truly intrigue.

Suffice to say that generating believable fictions, whether in the sharply delimited realm of a murder mystery taking place aboard a Zeppelin or the slightly less delimited realm of a contemporary spy thriller, is a tough nut to crack. Even one of the most earnest and concentrated of the academic attempts at tackling the problem, a system called Tale-Spin created by James Meehan at Yale University, continued to generate more unmimetic than mimetic stories after many years of work — and this system was meant only to generate standalone static stories, not interactive mysteries to be solved. And as for Chris Crawford’s Storytron… well, as of this writing it is, as its website says, in a “medically induced coma” for the latest of many massive re-toolings.

In choosing to pick up Covert Action primarily because of the intriguing problem of its case generator and then failing to consider whether said case generator really served the game, Sid Meier may have run afoul of another of his rules for game design, one that I find much more universally applicable than what Meier calls the Covert Action rule. A designer should always ask, Meier tells us, who is really having the fun in a game — the designer/programmer/computer or the player? The procedurally generated cases may have been an intriguing problem for Sid Meier the designer, but they don’t serve the player anywhere near as well as hand-crafted cases might have done.

The model that comes to mind when I think of my ideal version of Covert Action is Killed Until Dead, an unjustly obscure gem from Accolade which, like Murder on the Zinderneuf, I wrote about in an earlier article. Killed Until Dead is very similar to Murder on the Zinderneuf in that it presents the player with a series of mysteries to solve, all of which employ the same cast of characters, the same props, and the same setting. Unlike Murder on the Zinderneuf, however, the mysteries in Killed Until Dead have all been lovingly hand-crafted. They not only hang together better as a result, but they’re full of wit and warmth and the right sort of intrigue — they intrigue the player. If you ask me, a version of Covert Action built along similar lines, full of exciting plotlines with a ripped-from-the-headlines feel, could have been fantastic — assuming, of course, that MicroProse could have found writers and scenario designers with the chops to bring the spycraft to life.

It’s of course possible that my reaction to Covert Action is hopelessly subjective, inextricably tied to what I personally value in games. As my longtime readers are doubtless aware by now, I’m an experiential player to the core, more interested in lived experiences than twiddling the knobs of a complicated system just exactly perfectly. In addition to guaranteeing that I’ll never win any e-sports competitions — well, that and my aging reflexes that were never all that great to begin with — this fact colors the way I see a game like Covert Action. The jarring qualities of Covert Action’s fiction may not bother some of you one bit. And thus the debate about what really is wrong with Covert Action, that strange note of discordance sandwiched between the monumental Sid Meier masterpieces Railroad Tycoon and Civilization, can never be definitively settled. Ditto the more abstract and even more longstanding negotiation between ludology and narratology. Ah, well… if nothing else, it ensures that readers and writers of blogs like this one will always have something to talk about. So, let the debate rage on.

(Sources: the books Expressive Processing by Noah Wardrip-Fruin and On Interactive Storytelling by Chris Crawford; Game Developer of February 2013. Links to online sources are scattered through the article.

If you’d like to enter the Covert Action debate for yourself, you can buy it from GOG.com.)