In letting the March 31, 1984, deadline slip away without signing a licensing agreement with Atari, David Morse was taking a crazy risk. If he couldn’t find some way of scraping together $500,000 plus interest to repay Atari’s loan, Atari could walk away with the Amiga chipset for nothing, and Amiga would almost certainly go bust. All activity at Amiga therefore centered on getting the Lorraine ready for the Summer Consumer Electronics Show in Chicago, scheduled to begin on June 3. Summer CES was to be Amiga’s Hail Mary, their last chance to interest somebody — anybody — in what they had to offer enough to plunk down over half a million dollars just for openers, just to keep Atari from making the whole point moot.

By the time Summer CES arrived the Lorraine was a much more refined contraption than the one they had shown at Winter CES back in January, if still a long, long way from being a finished computer. Jay Miner’s custom chips had now been miniaturized and stamped into silicon, improving the machine’s reliability as much as it reduced its size. The Lorraine’s longstanding identity crisis was also now largely a thing of the past, the videogame crash and the example of the Macintosh having convinced everyone that what it ultimately needed to be was a computer, not a game console. Programmers like Carl Sassenrath, Dale Luck, and R.J. Mical had thus already started work on a proper operating system. Amiga’s computer was planned to be capable of doing everything the Mac could, but in spectacular color and with multitasking. That dream was, however, still a long way from fruition; the Lorraine could still be controlled only via a connected Sage IV workstation.

Led by software head Bob Pariseau as master of ceremonies, Amiga put on the best show they possibly could inside their invitation-only booth at Summer CES. The speech-synthesis library the software folks had put together was a big crowd-pleaser; spectators delighted in shouting out off-the-cuff phrases for the Lorraine to repeat, in either a male or female voice. But their hands-down favorite once again proved to be Boing, now dramatically enhanced: the ball now bounced side to side instead of just up and down, and a dramatic coup de grâce had been added in the form of sampled booms that moved from speaker to speaker to create a realistic soundscape. This impressive demonstration of Paula’s stereo-sound capabilities leaked beyond the confines of Amiga’s closed booth and out onto the crowded show floor, causing attendees to look around in alarm for the source of the noise.

Whatever the merits of their new-and-improved dog-and-pony show, Amiga also improved their credibility enormously by demonstrating that their chipset could work as actual computer chips and, indeed, simply by having survived and returned to CES once again. A bevy of industry heavyweights traipsed through Amiga’s booth that June: Sony, Hewlett-Packard, Philips, Silicon Graphics, Apple. (Steve Jobs, ever the minimalist, allegedly scoffed at the Lorraine as over-engineered, containing too much fancy hardware for its own good.) The quantity and quality of Amiga’s write-ups in the trade press also increased significantly. Compute!, the biggest general-interest computing magazine in the country, raved that the Lorraine was “possibly the most advanced personal computer ever,” “the beginning of a completely new generation,” and “enough to make an IBM PC look like a four-function calculator.” Still, Amiga left the show without the thing they needed most: a viable alternative to Atari. With just a few weeks to go, their future looked grim. And then Commodore called.

To understand the reasons behind that phone call, we have to return to January 13, 1984, the day of that mysterious board meeting at Commodore that outraged their CEO Jack Tramiel so egregiously as to send him storming out of the building and burning rubber out of the parking lot, never to return. In his noncommittal statements to the press immediately after the divorce was made official, Tramiel said he planned to take some time to consider his next move. For now, he and his wife were going to spend a year traveling the world, to make up for all the vacations they had skipped over the course of his long career.

At the time that he said it, he seems to have meant it. He and wife Helen made it as far as Sri Lanka by April. But by that point he’d already had all he could take of the life of leisure. He and Helen returned to the United States so Jack could start a new venture to be called simply Tramel Technology. (The spelling of the name was changed to reflect the proper pronunciation of Tramiel’s last name; most Americans’ habit of mispronouncing the last syllable had always driven him crazy.) His plan was to scrape together funding and a team and build the mass-market successor to the Commodore 64. In the process, he hoped to stick it to Commodore and especially to its chairman, with whom he had always had a — to put it mildly — fraught relationship. Business had always been war to Tramiel, but now this war was personal.

To get Tramel Technology off the ground, he needed people, and almost all of the people he knew and had confidence in still worked at Commodore. Tramiel therefore started blatantly poaching his old favorites. That April and May at Commodore were marked by a mass exodus, as suddenly seemingly every other employee was quitting, all headed to the same place. Jack’s son Sam was the first; many felt it was likely Jack’s desire to turn Commodore into the Tramiel family business that had precipitated his departure in the first place. Then Tony Takai, the mastermind of Commodore’s Japanese branch; John Feagans, who was supposed to be finishing up the built-in software for Commodore’s new Plus/4 computer; Neil Harris, programmer of many of the most popular VIC-20 games; Ira Velinsky, a production designer; Lloyd “Red” Taylor, a president of technology; Bernie Witter, a vice president of finance; Sam Chin, a manager of finance; Joe Spiteri and David Carlone, manufacturing experts; Gregg Pratt, a vice president of operations. The most devastating defectors of all were Commodore’s head of engineering Shiraz Shivji and three of his key hardware engineers: Arthur Morgan, John Heonig, and Douglas Renn.

The mass exodus amounted to a humiliating vote of no-confidence in Irving Gould’s hand-picked successor to Tramiel, a former steel executive named Marshall Smith who was as bland as his name. The loss of engineering talent in particular left Commodore, who had already been in a difficult situation, even worse off. As Commodore’s big new machine for 1984, the Plus/4, amply demonstrated, there just wasn’t a whole lot left to be done with the 8-bit technology that had gotten Commodore this far. Trouble was, their engineers had experience with very little else. Tramiel had always kept Commodore’s engineering staff to the bare minimum, a fact which largely explains why they had nothing compelling in the pipeline now beyond the underwhelming Plus/4 and its even less impressive little brother the Commodore 16. And now, having lost four more key people… well, the situation didn’t look good.

And that was what made Amiga so attractive. At first Commodore, like Atari before them, envisioned simply licensing the Amiga chipset, in the process quite probably — again like Atari — using Amiga’s position of weakness to extort from them a ridiculously good deal. But within days of opening negotiations their thinking began to change. Here was not only a fantastic chipset but an equally fantastic group of software and hardware engineers, intimately familiar with exactly the sort of next-generation 16-bit technology with which Commodore’s own remaining engineers were so conspicuously unacquainted. Why not buy Amiga outright?

On June 29, David Morse walked unexpectedly into the lobby of Atari’s headquarters and requested to see his primary point of contact there, one John Farrand. Farrand already had an inkling that something was up; Morse had been dodging his calls and finding excuses to avoid face-to-face meetings for the last two weeks. Still, he wasn’t prepared for what happened next. Morse told him that he was here to pay back the $500,000, plus interest, and sever their business relationship. He then proceeded to practically shove a check into the hands of a very confused and, soon, very irate John Farrand. Two minutes later he was gone.

The check had of course come from Commodore, given as a gesture of good faith in their negotiations with Amiga and, more practically, to keep Atari from walking away with the technology they’d now decided they’d very much like to have for themselves. Six weeks later negotiations between Commodore and Amiga ended with the purchase by the former of the latter for $27 million. David Morse had his miracle. His investors and employees got a nice payday in return for their faith. And, most importantly, his brilliant young team would get the chance to turn Miner’s chipset into a real computer all their own, designed — for the most part — their way.

It’s worth dwelling for just a moment here on the sheer audacity of the feat Morse had just pulled off. Backed against the wall by an Atari that smelled blood in the water, he had taken their money, used it to finish the chipset and the Lorraine well enough to get him a deal with their arch-rival, then paid Atari back and walked away. It all added up to a long con worthy of The Sting. No wonder Atari, who had gotten as far as starting to design the motherboard for the game console destined to house the chipset, was pissed. And yet the Atari that would soon seek its revenge would not be the same Atari as the one he had negotiated with in March. Confused yet? To understand we must, once again, backtrack just slightly.

Atari may have been a relative Goliath in contrast to Amiga’s David in early 1984, but that’s not to say that they were financially healthy. Far from it. The previous year had been a disastrous one, marked by losses of over half a billion thanks to the Great Videogame Crash. CEO Ray Kassar had left under a cloud of accusations of insider trading, mismanagement, and general incompetence; no one turns faster on a wonder boy than Wall Street. Now his successor, a once and future cigarette mogul named James Morgan, was struggling to staunch the bleeding by laying off employees and closing offices almost by the week. Parent company Warner Communications, figuring that the videogame bubble was well and truly burst, just wanted to be rid of Atari as quickly and painlessly as possible.

Jack Tramiel, meanwhile, was becoming a regular presence in Silicon Valley, looking for facilities and technologies he could buy to get Tramel Technology off the ground. In fact, he was one of the many who visited Amiga during this period, although negotiations didn’t get very far. Then one day in June he got a call from a Warner executive, asking if he’d be interested in taking Atari off their hands.

A deal was reached in remarkably little time. Tramiel would buy not the company Atari itself but the assets of its home-computer and game-console divisions; he had no interest in its other branch, standup arcade games. Said assets included property, trademarks and copyrights, equipment, product inventories, and, not least, employees. He would pay, astonishingly, nothing upfront for it all, instead agreeing to $240 million in long-term notes and giving Warner a 32 percent stake in Tramel Technology. Warner literally sold the company — or, perhaps better said, gave away the company — out from under Morgan, who was talking new products and turnaround plans one day and arrived the next to be told to clear out his executive suite to make room for Tramiel. On July 1, just two days after Morse had given back that $500,000, the biggest chunk of Atari, a company which just a couple of years before had been the fastest growing in the history of American business, became the possession of tiny Tramel Technology, which was still being run at the time out of a vacant apartment in a dodgy neighborhood. Within days Tramiel renamed Tramel Technology to Atari Corporation. For years to come there would be two Ataris: Tramiel’s Atari Corporation, maker of home computers and game consoles, and Atari Games, maker of standup arcade games. It would take quite some time to disentangle the two; even the headquarters building would be shared for some time to come.

Legal trouble between Commodore and Jack Tramiel’s new Atari started immediately. Commodore fired the first salvo, suing Shiraz Shivji and his fellow engineers. When they had decamped to join Tramiel, Commodore claimed, they had taken with them a whole raft of technical documents under the guise of “personal goods.” A court injunction issued at Commodore’s request effectively barred them from doing any work at all for Tramiel, paralyzing his plans to start working on a new computer for several weeks. Shivji and company eventually returned a set of backup tapes taken from Commodore engineering’s in-house central server, full of schematics and other documents. Perhaps tellingly in light of the computer they would soon begin to build, many of the documents related to the Commodore 900, a prototyped but never manufactured Unix workstation to be built around the 16-bit Zilog Z8000 CPU.

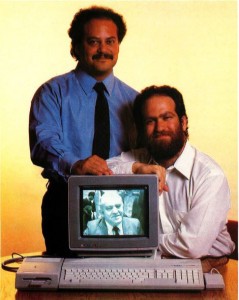

Sam and Leonard Tramiel, who would play a larger and larger role in the running of Atari as time went on.

If Tramiel was looking for a way to get revenge, he was soon to find what looked like a pretty good opportunity. Whilst going through files of documents in early August, Jack’s son Leonard discovered the Amiga agreement, complete with the cashed $500,000 check from Atari to Amiga, and brought it to his father’s attention. Jack Tramiel, who had long made a practice of treating the courts as merely another field of battle in keeping with his “business is war” philosophy, thought they just might have something. But it wasn’t immediately obvious to whom the cancelled contract should belong: to Atari Games (i.e., the coin-op people), to Warner, or to his own new Atari Corporation. Some hasty negotiating secured him clear title; Warner didn’t seem to know anything about the old agreement or what it might have meant for Atari’s future had it gone off according to plan. On August 13, as Commodore and Amiga were signing the contracts and putting the bow on the Amiga acquisition and as Shivji’s engineers were starting up work again on what was now to be the next-generation Atari computer, Atari filed suit against Amiga and against David Morse personally in Santa Clara Superior Court, alleging contract fraud. In their first motion they sought an injunction, to last while the case was being resolved, that would have stopped the work of Commodore’s newly minted Amiga division in its tracks, and for a much longer period of time than Commodore’s more straightforward suit against Shivji and company.

Thankfully for Commodore, they didn’t get the injunction. However, the legal battle thus sparked would drag on for more than two-and-a-half years. In early 1985 Atari expanded their suit dramatically, adding Commodore, who had of course been footing the legal bill for Amiga and Morse’s defense anyway, as a co-defendant and alleging in effect that they had been co-conspirators with Morse and Amiga in the fraud. They also added on a bunch of patent claims, one very important one in particular relating back to a patent Atari held on the old Atari 400 and 800 designs that Jay Miner had been responsible for in the late 1970s; those designs did indeed share a lot of attributes with the chipset he had developed at Amiga. For this sin Miner personally was added to the suit as yet another co-defendant. The whole thing was finally wrapped up only in March of 1987, in a sealed settlement whose full details have never come to light. Scuttlebutt of then and now, though, would have it that Commodore came out on the losing end, forced to pay Atari’s legal costs and some amount of additional restitution — although, again, exactly how much remains unknown.

What to make of this? A careful analysis of that March 1984 document shows that Morse and Amiga abided entirely by the letter of the agreement, that they were perfectly within their rights to return Atari’s loan to them and walk away from any further business arrangements. Atari’s argument rather lay in the spirit of the deal. At its heart is a single line in the agreement to which Morse signed his name that could easily be overlooked as boilerplate, a throwaway amidst all the carefully constructed legalese: “Amiga and Atari agree to negotiate in good faith regarding the license agreement.”

Atari’s contention, which is difficult to deny, was that Morse had at no time been acting in good faith from the moment he put pen to paper on the agreement. The agreement had rather been a desperate gambit to secure enough operating capital to keep Amiga in business for a few more months and find another suitor — nothing more, nothing less. Morse had stalled and obfuscated and dissembled for almost three months, whilst he sought that better suitor. Atari alleged that he had even verbally agreed to a “will not sell to” list of companies not allowed to acquire Amiga under any circumstances even as he was negotiating with one of the most prominent entries on that list, Commodore. And when he had forced a check into Farrand’s hands to terminate the relationship, they claimed, he had done so with the shabby excuse that the chips didn’t work properly, even though the whole world had seen them in action just a few weeks before at Summer CES. No, there wasn’t a whole lot of “good faith” going on there.

That said, the ethics of Morse’s actions, or lack thereof, strike me as far from cut and dried. It’s hard for me to get too morally outraged about Morse screwing over a company that was manifestly bent on screwing him in his position of weakness by saddling him with a terrible licensing proposal, an absurd deadline, and legal leverage that effectively destroyed any hope he might have had to get a reasonable, fair licensing agreement out of them. The letter of intent he felt compelled to sign reads more like an ultimatum than a starting point for negotiations. John Farrand as well as others from Atari claimed in court that they had had no intention of exercising their legal right to go into escrow to build the Amiga chipset without paying anything else at all for it had Morse not delivered that loan repayment in the nick of time. Still, these claims must be read skeptically, especially given Atari’s own desperate business position. Certainly Morse would have been an irresponsible executive indeed to base the fate of his company on their word. If Atari had really wished to acquire the chipset and make an equitable, money-making deal for both parties, they could best have achieved that by not opening negotiations with an absurd three-week deadline that put Morse over a barrel from day one.

That, anyway, is my view. Opinions of others who have studied the issue can and do vary. I would merely caution anyone eager to read too much into the fact that Atari by relative consensus won this legal battle in the end to consider the full picture. Even leaving aside the fact that legal right does not always correspond to moral right, we should remember that other issues eventually got bound up into the case. It strikes me particularly that Atari had quite a strong argument to make for Jay Miner having violated their patents, which covered display hardware uncomfortably similar to that in the Amiga chipset, even down to a graphics co-processor very similar in form and function to the so-called “copper” housed inside Agnus. Without knowing more about the contents of the final settlement, I really can’t say more than that.

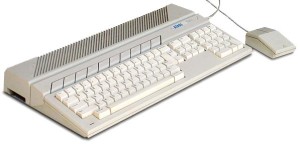

As the court battle began, the effort to build the computer that would become known as the Atari ST was also heating up. Shivji had initially been enamored with an oddball series of CPUs from National Semiconductor called the NS32000s, the first fully 32-bit CPUs to hit the industry. When they proved less impressive in reality than they were on paper, however, he quickly shifted to the Motorola 68000 that was already found in the Apple Lisa and Macintosh and the Amiga Lorraine. Generally described as a 16-bit chip, the 68000 was in some ways a hybrid 16- and 32-bit design, a fact which gave the new computer its name: “ST” stands for “Sixteen/Thirty-two Bit.” Shivji had had a very good idea even before Tramiel’s acquisition of Atari of just what he wanted to build:

There was going to be a windowing system, it was going to have bitmapped graphics, we knew roughly speaking what the [screen] resolutions were going to be, and so on. All those parameters were decided before the takeover. The idea was an advanced computer, 16/32-bit, good graphics, good sound, MIDI, the whole thing. A fun computer — but with the latest software technology.

Jack Tramiel and his sons descended on Atari and began with brutal efficiency to separate the wheat from the chaff. Huge numbers of employees got the axe from this company that had already been wracked by layoff after layoff over the past year. The latest victims were often announced impersonally by reading from a list of names in a group meeting, sometimes on the basis of impressions culled from an interview lasting all of five minutes. The bottom line was simple: who could help in an all-out effort to build a sophisticated new computer from the ground up in a matter of months? Those judged wanting in the skills and dedication that would be required were gone. Tramiel sold the equipment, even the desks, they had left behind to make quick cash to throw into the ST development effort. With Amiga’s computer and who knew what else in the offing from other companies, speed was his priority. He expected his engineers, starting in August with virtually nothing other than Shivji’s rough design parameters, to build him a prototype ready to be demonstrated at the next CES in January.

Decent graphics capabilities had to be a priority for the type of computer Shivji envisioned. Therefore the hardware engineers spent much of their time on a custom video chip that would support resolutions of up to 640 × 400, albeit only in black and white; the low-resolution mode of 320 × 200 that would be more typically used by games would allow up to 16 colors onscreen at one time from a palette of 512. That chip aside, to save time and money they would use off-the-shelf components as much as possible, such as a three-voice General Instrument sound chip that had already found a home in the popular Apple II Mockingboard sound card as well as various videogame consoles and standup arcade games. The ST’s most unusual feature would prove to be the built-in MIDI interface that let it control a MIDI-enabled synthesizer without the need for additional hardware, a strange luxury indeed for Tramiel to allow, given that he was famous for demanding that his machines contain only the hardware that absolutely had to be there in the name of keeping production costs down. (For a possible clue to why the MIDI interface was allowed, we can look to a typical ST product demonstration. Pitchmen made a habit of somewhat disingenuously playing MIDI music on the ST that was actually produced by a synthesizer under the table. It was easy — intentionally easy, many suspected — for an observer to miss the mention of the MIDI interface and think the ST was generating the music internally.) And of course in the wake of the Macintosh the ST simply had to ship with a mouse and an operating system to support it.
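A quick back-of-the-envelope calculation shows why those two video modes pair up so naturally. The sketch below is only an illustration built on assumptions the article doesn't spell out: that the black-and-white mode stores one bit per pixel, that 16 simultaneous colors means four bits per pixel, and that the 512-color palette is split evenly among the red, green, and blue channels. The function names are made up for the example.

```python
def bits_per_pixel(colors: int) -> int:
    """Smallest number of bits that can index `colors` distinct values."""
    return max(1, (colors - 1).bit_length())


def framebuffer_bytes(width: int, height: int, colors: int) -> int:
    """Bytes needed for one full screen, assuming tightly packed pixels."""
    return width * height * bits_per_pixel(colors) // 8


def palette_levels_per_channel(palette_size: int) -> int:
    """Intensity levels per channel, ASSUMING an even red/green/blue split."""
    total_bits = (palette_size - 1).bit_length()  # 512 entries -> 9 bits
    return 2 ** (total_bits // 3)                 # 3 bits per channel -> 8 levels


# Resolutions and color counts as given in the text; bit depths are inferred.
modes = {
    "high resolution, black and white": (640, 400, 2),
    "low resolution, 16 colors": (320, 200, 16),
}

for name, (width, height, colors) in modes.items():
    size = framebuffer_bytes(width, height, colors)
    print(f"{name}: {width} x {height}, {size} bytes per screen")

print("levels per color channel:", palette_levels_per_channel(512))
```

Under those assumptions both modes come out to the same 32,000 bytes per screen: quadrupling the pixel count while cutting the bits per pixel to a quarter leaves the memory budget unchanged, which is the kind of trade-off one would expect from a design built to a tight cost.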

It was this latter that presented by far the biggest problem. While the fairly conservative hardware of the ST could be put together relatively quickly, writing a modern, GUI-based operating system for the new computer represented a herculean task. Apple, for instance, had spent years on the Macintosh’s operating system, and when the Mac was released it was still riddled with bugs and frustrations. This time around Tramiel wouldn’t be able to just slap an archaic-but-paid-for old PET BASIC ROM into the thing, as he had in the case of the Commodore 64. He needed a real operating system. Quickly. Where to get it?

He found his solution in a very surprising place: at Digital Research, whose CP/M was busily losing its last bits of business-computing market share to Microsoft’s juggernaut MS-DOS. Digital had adopted an if-you-can’t-beat-em-join-em mentality in response. They were hard at work developing a complete Mac-like window manager that could run on top of MS-DOS or CP/M. It was called GEM, the “Graphical Environment Manager.” GEM was merely one of a whole range of similar shells that were appearing by 1985, struggling with varying degrees of failure to bring that Mac magic to the bland beige world of the IBM clones. Also among them was Microsoft’s original Windows 1.0 — another product that Tramiel briefly considered licensing for the ST. Digital got the nod because they were willing to license both GEM and a CP/M layer to run underneath it fairly cheaply, always music to Jack Tramiel’s ears. The only problem was that it all currently ran only on Intel processors, not the 68000.

The small Atari team that temporarily relocated to Digital Research’s Monterey headquarters to adapt GEM to the ST.

As Shivji and his engineers pieced the hardware together, some dozen of Atari’s top software stars migrated about 70 miles down the California coast from Silicon Valley to the surfer’s paradise of Monterey, home of Digital Research. Working with wire-wrapped prototype hardware that often flaked out for reasons having nothing to do with the software it ran, dealing with the condescension of many on the Digital staff who looked down on their backgrounds as mostly games programmers, wrestling with Digital’s Intel source code that was itself still under development and thus changing constantly, the Atari people managed in a scant few months to port enough of CP/M and GEM to the ST to give Atari something to show on the five prototype machines that Tramiel unveiled at CES in Las Vegas that January. Shivji:

The really exciting thing was that in five months we actually showed the product at CES with real chips, with real PCBs, with real monitors, with real plastic. Five months previous to that there was nothing that existed. You’re talking about tooling for plastic, you’re talking about getting an enormous software task done. And when we went to CES, 85 percent of the machine was done. We had windows, we had all kinds of stuff. People were looking for the VAX that was running all this stuff.

Tramiel was positively gloating at the show, reveling in the new ST and in Atari’s new motto: “Power Without the Price.” Atari erected a series of billboards along the freeway leading from the airport to the Vegas Strip, like the famous Burma-Shave signs of old.

PCjr, $599: IBM, Is This Price Right?

Macintosh, $2195: Does Apple Need This Big A Bite?

Atari Thinks They’re Out Of Sight

Welcome To Atari Country — Regards, Jack

The trade journalists, desperate for a machine to revive the slowing home-computer revolution and with it the various publications they wrote for, ate it up. The ST — or, as the press affectionately dubbed it, the “Jackintosh” — stole the show. “At a glance,” raved Compute! magazine, “it’s hard to tell a GEM screen from a Mac screen” — except for the ST’s color graphics, of course. And one other difference was very clear: an ST with 512 K of memory and monitor would retail for less than $1000 — less than one-third the cost of an equivalent Macintosh.

Rhapsodic press or no, Tramiel’s Atari very nearly went out of business in the months after that CES. The Atari game consoles as well as the Atari 8-bit line of home computers were all but dead as commercial propositions, killed by the Great Videogame Crash and the Commodore 64 respectively. Thus virtually no money was coming in. You can only keep a multinational corporation in business so long by selling its old office furniture. The software team in Monterey, meanwhile, had to deal with a major crisis when they realized that CP/M just wasn’t going to work properly as the underpinning of GEM on the ST. They ended up porting and completing an abandoned Digital project to create GEMDOS, or, as it would become more popularly known, TOS: the “Tramiel Operating System.” With their software now the last hold-up to getting the ST into production and Tramiel breathing down their necks, the pressure on them was tremendous. Landon Dyer, one of the software team, delivers an anecdote that’s classic Jack Tramiel:

Jack Tramiel called a meeting. We didn’t often meet with him, and it was a big deal. He started by saying, “I hear you are unhappy.” Think of a deep, authoritarian voice, a lot like Darth Vader, and the same attitude, pretty much.

“Sorry, Jack, things aren’t going all that hot.” We tried to look humble, but we probably just came across as tired.

“I don’t understand why you are unhappy,” he rumbled. “You should be very happy; I am paying your salary. I am the one who is unhappy. The software is late. Why is it so late?”

Young and idealistic, I piped up: “You know, I don’t think we’re in this for the money. I think we just want to ship the best computer we can –”

Jack shut me down. “Then you won’t mind if I cut your salary in half?”

I got the message. He didn’t even have to use the Force.

Somehow they got it done. STs started rolling down production lines in June of 1985. The very first units went on sale not in the United States, where there were some hang-ups acquiring FCC certification, but rather in West Germany. It was just as well, underscoring as it did Tramiel’s oft-repeated vision of the ST as an international computing platform. Indeed, the ST would go on to become a major success in West Germany and elsewhere in Europe, not only as a home computer and gaming platform but also as an affordable small-business computer, a market it would not manage to penetrate to any appreciable degree in its home country. Initial sales on both continents were gratifying, and the press largely continued to gush.

The praise was by no means undeserved. If the ST showed a few rough edges, inevitable products of its rushed development on a shoestring budget, it was more notable for everything it did well. A group of very smart, practical people put it together, ending up with a very sweet little computer for the money. Certainly GEM worked far, far better than a hasty port from a completely different architecture had any right to — arguably better, in fact, than Amiga’s soon-to-be-released homegrown equivalent, the Workbench. The ST really was exactly what Jack Tramiel had claimed it would be: a ridiculous amount of computing power for the price. That made it easier to forgive this “Jackintosh’s” failings in comparison to a real Macintosh, like its squat all-in-one-box case — no Tramiel computer was ever likely to win the sorts of design awards that Apple products routinely scooped up by the fistful even then — and materials and workmanship that didn’t match the Mac’s as closely as the ST’s raw specs did. The historical legacy of the ST as we remember it today is a rather tragic one in that it has little to do with the machine’s own considerable merits. The tragedy of the ST would be to be merely a very good machine, whereas its two 68000-based points of habitual comparison, the Apple Macintosh and the Commodore Amiga, together pioneered the very paradigm of computing and, one might even say, of living that we know today.

Speaking of which: just where was Commodore in the midst of all this? That’s a question many in the press were asking. Commodore had made an appearance at that January 1985 CES, but only to show off a new 8-bit computer, the last they would ever make: the Commodore 128. An odd, Frankenstein’s monster hybrid of a computer, it seemed a summary of the last ten years of 8-bit development crammed into one machine, sporting both of the microprocessors that made the PC revolution, the Zilog Z80 and the MOS 6502 (the latter was slightly modified and re-badged the 8502). Between them they allowed for three independent operating modes: CP/M, a 99.9 percent compatible Commodore 64 mode, and the machine’s unique new 128 mode. This latter addressed most of the 64’s most notable failings, including its lack of an 80-column display, its atrocious BASIC that gave access to none of the machine’s graphics or sound capabilities (the 128’s BASIC 7.0 in contrast was amongst the best 8-bit BASICs ever released), and its absurdly slow disk drives (the 128 transferred data at six or seven times the speed of the 64). Despite being thoroughly overshadowed by the ST in reports from CES, the 128 would go on to considerable commercial success, to the tune of some 4 million units sold over the next four years.

Still, it was obvious to even contemporary observers that the Commodore 128 represented the past, the culmination of the line that had begun back in 1977 with the Commodore PET. What about the future? What about Amiga? While Tramiel and his sons trumpeted their plans for the ST line to anyone who would listen, Commodore was weirdly silent about goings-on inside its new division. The press largely had to make do with rumor and innuendo: Commodore had sent large numbers of prototypes to a number of major software developers, most notably Electronic Arts; the graphics had gotten even better since those CES shows; Commodore was planning a major announcement for tomorrow, next week, next month. The Amiga computer became the computer industry’s unicorn, oft-discussed but seldom glimpsed. This, of course, only increased its mystique. How would it compare to the Jackintosh and the Macintosh? What would it do? How much would it cost? What would it, ultimately, be? And just why the hell was it taking so long? A month after Atari started shipping STs — that machine had gone from a back-of-a-napkin proposal to production in far less time than it had taken Commodore to merely finish their own 68000-based computer — people would at long last start to get some answers.

(Sources: On the Edge by Brian Bagnall; New York Times of July 3 1984, August 21 1984, and August 29 1984; Montreal Gazette of July 12 1984 and July 14 1984; Compute! of August 1984, February 1985, March 1985, April 1985, July 1985, August 1985, and October 1985; STart of Summer 1988; InfoWorld of September 17 1984 and December 17 1984; Wall Street Journal of March 25 1984; Philadelphia Inquirer of April 19 1985. Landon Dyer’s terrific memories of working as part of Atari’s GEM team can be found on his blog in a part 1 and a part 2. Finally, Marty Goldberg once again shared a lot of insights and information on the legal battle between Atari and Commodore, including some extracts from actual court transcripts, although once again our conclusions about it are quite different. Regardless, my heartfelt thanks to him! Most of the pictures in this article come from STart magazine’s history of the ST, as referenced above.)