Fair warning: although there’s no nudity in the pictures below, the text of this article does contain frank descriptions of the human anatomy and sexual acts.

When you want to know where the zeitgeist is heading, just look to what the punters are lining up to see on Broadway. To wit: the unexpected breakout hit of the 2003 to 2004 season was Avenue Q, a low-budget send-up of Sesame Street where the puppets cursed, drank, and had sex with one another. They were rude, crude, and weirdly relatable — even lovable, what with their habit of breaking into song at the drop of a hat. The most enduring of their songs was a timeless show tune called “The Internet is for Porn.” (Eat your hearts out, Rodgers and Hammerstein!) It became, inevitably, an Internet meme of its own, reflecting an unnerving feeling that the ground was shifting beneath society’s feet, that the most important practical affordance of the World Wide Web, that noble experiment in the unfettered exchange of information, might indeed be to put porn at the fingertips of every human being with a computer on his desk.

And yet the world hadn’t seen anything yet in 2003; the statistics surrounding Internet porn would become truly gob-smacking after streaming video and smartphones became everyday commodities. By 2016, Pornhub, the biggest smut aggregator on the Internet, would be attracting four visits per year for every man, woman, and child on the planet. There was enough material on that site alone to keep a porn hound glued to his screen for five times as long as Homo sapiens have existed, with more fresh porn being uploaded to the site every few months than the entirety of the twentieth century had managed to produce. Needless to say, the pace of neither porn consumption nor production has cooled off a jot in the years since.

On one level, the sheer size of porn’s digital footprint is kind of hilarious. How many images do we really need of an activity which has only a limited number of possible permutations and combinations in the end, despite the fevered efforts of the imaginations behind it to discover… well, not quite virgin territory, but you know what I mean? I’ve long since come to realize that I am, for better or for worse, a member of the last generation of Western humanity to have grown up thinking of images of naked bodies and sexual activity as a scarce commodity. Cue the anecdotes about the lengths to which boys like me once had to go in order to get a glimpse of an actual naked or even partially unclothed woman: sneaking into Dad’s Playboy stash, circumventing the child lockout on the family television’s cable box, perusing Big Sister’s Victoria’s Secret catalog, even resorting when worst came to worst to the sturdy maidens in equally sturdy brassieres that used to be found in the lingerie section of the Sears catalog. Such tales read as quaintly as the courtship rituals of Jane Austen novels to the generation after ours, who just have to pull their phones out of their pockets to see sights that would have shocked the young me to my pubescent core.

Yet lurking behind the farcical absurdity of porn’s present-day popularity are serious questions for which none of us have any concrete answers. What does it do to young people to grow up with virtual if not physical sex at their literal fingertips? For that matter, what does it do to those of us who aren’t so young anymore? Some point hopefully to statistics which seem to show that accessible porn leads to dramatically decreased rates of real-world sexual violence. But even those of us who try our darnedest to be open-minded and sex-positive can’t always suppress the uneasy feeling that turning an act as intimate as making love into a commodity as ubiquitous as toilet paper may come at a cost to our humanity.

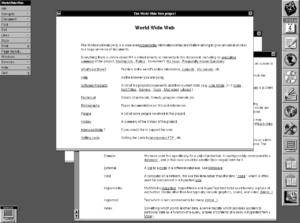

Of course, we won’t be able to resolve these dilemmas here. What we will do today, however, is learn how the song “The Internet is for Porn” may have been more truthy than even its writers were aware of. For if you look at the technologies and practices that make the modern Web go — not the idealistic building blocks provided by J.C.R. Licklider and Tim Berners-Lee and their many storied colleagues, but the ones behind the commercial Web of today — you find that a crazy number of them came straight out of porn: online payment systems, ad trackers, affiliate marketing, streaming video, video conferencing… all of them and more were made for porn.

It was fully eight years before Avenue Q opened that the mainstream media’s attention was captured for the first time by porn on the Internet. On June 14, 1995, Jim Exon, a 74-year-old Democratic senator from Nebraska, stood up inside the United States Capitol Building to lead his colleagues in a prayer.

> Almighty God, lord of all life, we praise you for the advancements in computerized communications that we enjoy in our time. Sadly, however, there are those who are littering this information superhighway with obscene, indecent, and destructive pornography. Now, guide the senators when they consider ways of controlling the pollution of computer communications and how to preserve one of our greatest resources: the minds of our children and the future moral strength of our nation. Amen.

As Exon spoke, he waved a blue binder in front of his face, filled, so he said, with filthy pictures his staff had found online. “I cannot and would not show these pictures to the Senate,” he thundered. “I would not want our cameras to pick them up. If nothing is done now, the pornographers may become the primary beneficiary of the information revolution.”

Most of his audience had no idea what he was on about. An exception was Dan Coats, a Republican senator from Indiana, who had in fact been the one to light a fire under Exon in the first place. “With old Internet technology, retrieving and viewing any graphic image on a PC at home could be laborious,” Coats explained in slightly more grounded diction after Exon had finished his righteous call to arms. “New Internet technology, like browsers for the Web, makes all of this easier.” He cited a study that was about to be published in The Georgetown Law Journal, claiming that 450,000 pornographic images could already be found online, and that these had already been downloaded 6.4 million times. What on earth would happen once the Internet became truly ubiquitous, something everyone expected to happen over the next few years? “Think of the children!”

Luckily for that most precious of all social resources, Coats and Exon had legislation ready to go. Their bill would make it a federal crime to “use any interactive computer service to display in a manner available to a person under eighteen years of age, any comment, request, suggestion, proposal, image, or other communication that, in context, depicts or describes, in terms patently offensive as measured by contemporary community standards, sexual or excretory activities or organs.” Nonplussed though they largely were by it all, “few senators wanted to cast a nationally televised vote that might later be characterized as pro-pornography,” as Time magazine put it. The bill passed by a vote of 86 to 14.

On February 8, 1996, President Bill Clinton signed the final version of the Communications Decency Act into law. “Today,” he said, “with the stroke of a pen, our laws will catch up to the future.” Or perhaps not quite today: just as countless legal experts had warned would happen, the new law was immediately tied up in litigation, challenged as an unacceptable infringement on the right to free speech.

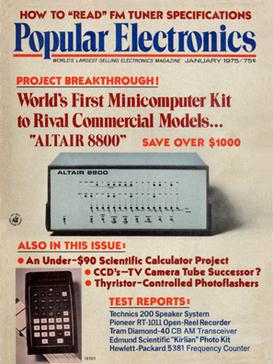

Looking back, the most remarkable thing about this first furor over online porn is just how early it came, before the World Wide Web was more than a vague aspirational notion, if that, in the minds of the large majority of Americans. The Georgetown Law study which had prompted it — a seriously flawed if not outright fraudulent study, written originally as an undergraduate research paper — didn’t focus on the Web at all but rather on Usenet, a worldwide textual discussion forum which had been hacked long ago to foster the exchange of binary files as well, among them dirty pictures.

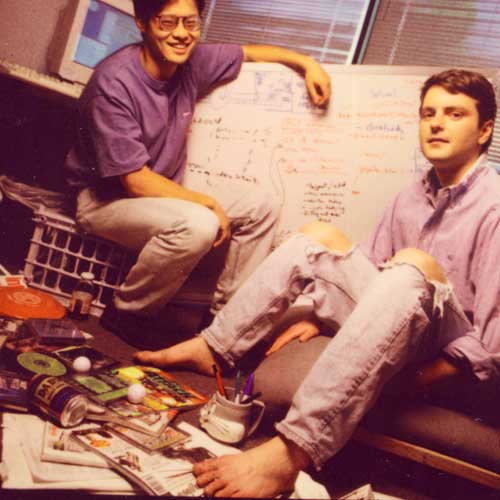

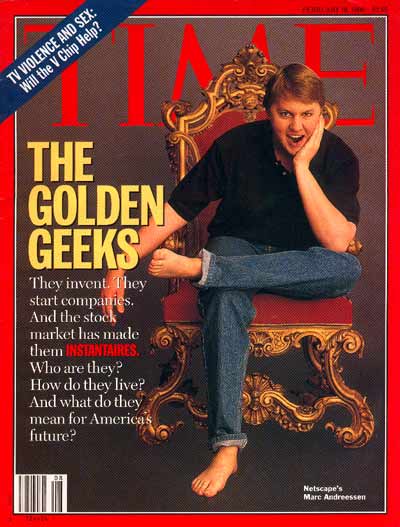

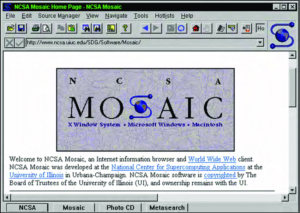

Nevertheless, by the time the Communications Decency Act became one of the shakier laws of the land, the locus of digital porn was migrating quickly from CD-ROM and Usenet to the Web. Like so much else there, porn on the Web began more in a spirit of amateur experimentation than hard-eyed business acumen. During the early days of Mosaic and Netscape and Web 1.0, hundreds of thousands of ordinary folks who could sense a communications revolution in the offing rushed to learn HTML and set up their own little pages on the Web, dedicated to whatever topic they found most interesting. For some of them, that topic was sex. There are far too many stories here for me to tell you today, but we can make space for one of them at least. It involves Jen Peterson and Dave Miller, a young couple just out of high school who were trying to make ends meet in their cramped Baltimore apartment.

In the spring of 1995, Jen got approved for a Sears credit card, whereupon Dave convinced her that they should buy a computer with their windfall, to find out what this Internet thing that they were seeing in the news was really all about. So, they spent $4000 on a state-of-the-art 75 MHz Packard Bell system, complete with monitor and modem, and lugged it back home on the bus.

Dave’s first destinations on the Internet were Simpsons websites. But one day he asked himself, “I wonder if there’s any nudity on this thing?” Whereupon he discovered that there was an active trade in dirty pictures under way on Usenet. Now, it just so happened that Dave was something of a photographer himself, and his favorite subject was the unclothed Jen: “We would look at [the pictures] afterwards, and that would lead to even better sex. I wanted to share them. I wanted people to see Jen’s body.” Jen was game, so the couple started uploading their own pictures to Usenet.

But Usenet was just so baroque and unfriendly. Dave’s particular sexual kink — not an unusual one, on the spectrum of same — made him want to show Jen to as many people as possible, which meant finding a more accessible medium for the purpose. In or around October of 1995, the couple opened “JENnDAVE’s HOME PAGE!” (“I called us Jen and Dave rather than Dave and Jen,” says Dave, “because I knew nobody was there to see me. I wasn’t being sweet; I was being practical.”) At that time, Internet service providers gave you a home page with which to plant your flag on the Web as part of your subscription, so the pair’s initial financial investment in the site was literally zero. This same figure was, not coincidentally, what they charged their visitors.

But within five months, the site was attracting 25,000 visitors every day, and their service provider was growing restless under the traffic load; in fact, the amount of bandwidth Jen and Dave’s dirty pictures were absorbing was single-handedly responsible for the provider deleting the promise of “unlimited traffic” from its contracts. Meanwhile the Communications Decency Act had become law — a law which their site was all too plainly violating, placing them at risk of significant fines or even prison terms if the courts should finally decide that it was constitutional.

Yet just how was one to ensure that one’s porn wasn’t “available to a person under eighteen years of age,” as the law demanded, on the wide-open Web? Some folks, Jen and Dave among them, put up entrance pages which asked the visitor to click a button certifying that, “Yep, I’m eighteen, alright!” It was doubtful, however, whether a judge would construe such an honor system to mean that their sites were no longer “available” to youngsters. Out of this dilemma, almost as much as the pure profit motive, arose the need and desire to accept credit cards in return for dirty pictures over the Internet. For in the United States at least, a credit card, which by law could not be issued to anyone under the age of eighteen, was about as trustworthy a signifier of maturity as you were likely to find.

We’ll return to Jen and Dave momentarily. Right now, though, we must shift our focus to a wheeler and dealer named Richard Gordon, a fellow aptly described by journalist Samantha Cole as “a smooth serial entrepreneur with a grifter’s lean.” Certainly he had a sketchy background by almost anyone’s terms. In the late 1970s, he’d worked in New York in insurance and financial planning, and had gotten into the habit of dipping into his customers’ accounts to fund his own lavish lifestyle. He attempted to flee the country after being tipped off that he was under investigation by the feds, only to be dragged out of the closet of a friend’s apartment with a Concorde ticket to Paris in his hand. He served just two years of his seven-year prison sentence, emerging on parole in 1982 to continue the hustle.

Two years later, President Ronald Reagan’s administration effected a long-in-the-offing final breakup of AT&T, the corporate giant that had for well over half a century held an all but ironclad monopoly over telegraphy, telephony, and computer telecommunications in the United States. Overnight, one ginormous company became 23 smaller ones. There followed just the explosion of innovation that the Reagan administration had predicted, as those companies and other, new players all jockeyed for competitive advantage. Among other things, this led to a dramatic expansion in the leasing of “1-900” numbers: commercial telephone numbers which billed the people who called them by the minute. When it had first rolled them out in the 1970s, AT&T had imagined that they would be used for time and temperature updates, sports scores, movie listings, perhaps for dial-a-joke services, polls, and horoscopes. And indeed, they were used for all of these things and more. But if you’ve read this far, you can probably guess where this is going: they were used most of all for phone sex. The go-go 1980s in the telecom sector turned personalized auditory masturbation aids into a thriving cottage industry.

Still, there was a problem that many of those who wanted to get in on the action found well-nigh intractable: the problem of actually collecting money from their customers. The obvious way of doing so was through a credit card, which was quick and convenient and thus highly conducive to impulse buying, and which could serve as an age guarantee to boot. But the credit-card companies were huge corporations with convoluted application processes for merchants, difficult entities for the average shoestring phone-sex provider teetering on the ragged edge of business-world legitimacy to deal with.

Richard Gordon saw opportunity in this state of affairs. He set up an intermediate credit-card-billing service for the phone-sex folks. They just had to sign up with him, and he would take care of all the rest — for a small cut of the transactions he processed, naturally. His system came with an additional advantage which phone-sex customers greatly appreciated: instead of, say, “1-900-HOT-SEXX” appearing on their credit-card statements, there appeared only the innocuously generic name of “Electronic Card Systems,” which was much easier to explain away to a suspicious spouse. Gordon made a lot of money off phone sex, learning along the way an important lesson: that there was far more money to be made in facilitating the exchange of porn than in making the stuff yourself and selling it directly. The venture even came with a welcome veneer of plausible deniability; there was nothing preventing Gordon from signing up other sorts of 1-900 numbers to his billing service as well. These could be the customers he talked about at polite cocktail parties, even as he made the bulk of his money from telephonic masturbation.

The Web came to his attention in the mid-1990s. “What is the Net?” he asked himself. “It’s just a phone call with pictures.” So, Gordon extended his thriving phone-sex billing service to the purveyors of Internet pornography. In so doing, he would “play a significant role in the birth of electronic commerce,” as The New York Times would diffidently put it twelve years later, “laying the groundwork for electronic transactions conducted with credit cards, opening the doors to the first generation of e-commerce startups.”

In truth, it’s difficult to overstate the importance of this step to the evolution of the Web. Somewhat contrary to The New York Times’s statement, Richard Gordon did not invent e-commerce from whole cloth; it had been taking place on closed commercial services like CompuServe since the mid-1980s. Because those services were run from the top down and, indeed, were billing their customers’ credit cards every month already, they were equipped out of the box to handle online transactions in a way that the open, anarchic Web was not. Netscape provided the necessary core technology for this purpose when they added support for encrypted traffic to their Navigator browser. But it was Gordon and a handful of others like him who actually made commerce on the Web a practical reality, blazing trails that would soon be followed by more respectable institutions; without Gordon’s Electronic Card Systems to show the way, there would never have been a PayPal.

In the meantime, Gordon happily accepted babes in the woods like Jen Peterson and Dave Miller, who wouldn’t have had a clue how to set up a merchant’s account with any one of the credit-card companies, much less all of them for maximum customer convenience. “He was the house for Internet porn in those days,” says one Steven Peisner, who worked for him. “At that time, if you had anything to do with Internet porn, you called Electronic Card Systems.”

Thanks to Gordon, Jen and Dave were able to sign up with a real hosting company and start charging $5 for six months of full access to their site in early 1996. By the turn of the millennium, the price was $15 for one month.

The site lost some of its innocence in another sense as well over the course of time. What had begun with cheesecake nudie pics turned into real hardcore porn, as others came to join in on the fun. “I would be with other girls and Jen would be with other dudes and most of the time, that was in the context of picture taking,” says Dave. “People said, ‘Oh Jen and Dave, you’ve gone away from your roots, you’re no longer the sweet innocent couple that you were. Now, you’ll screw anybody.’”

Their unlikely careers in porn largely ended after they had twins in 2005, by which time their quaint little site was already an anachronism in a sea of cutthroat porn aggregators. Today Dave works in medical administration and runs pub quizzes on the weekends, while Jen maintains their sexy archive and runs a home. They have no regrets about their former lives. “We were just looking to have a good time and spread the ideals of body-positivity and sex-positivity,” says Jen. “Even if we didn’t yet have the words for those things.”

A Pennsylvanian college student named Jennifer Ringley was a trailblazer of a different stripe, billing herself as a “lifecaster.” In 1996, she saw an early webcam, capable of capturing still images only, for sale in the Dickinson College bookstore and just had to have it. “You could become the human version of FishCam,” joked one of her friends, referring to a camera that had been set up in an aquarium in Mountain View, California, to deliver a live feed, refreshed every three to four seconds, to anyone who visited its website. Having been raised in a nudist family, Ringley was no shrinking violet; she found the idea extremely appealing.

The result was JenniCam, which showed what was going on in her dorm room around the clock — albeit, this being the 1990s, in the form of a linear series of still photographs only, snapped at the rather bleary-eyed resolution of 320 × 240. “Whatever you’re seeing isn’t staged or faked,” she said, “and while I don’t claim to be the most interesting person in the world, there’s something compelling about real life that staging it wouldn’t bring to the medium.” She was at pains to point out that JenniCam was a social experiment, one of many that were making the news on the early Web at the time. Whatever else it was, it wasn’t porn; if you happened to catch her changing clothes or making out with a boy she’d brought back to the room or even pleasuring herself alone, that was just another aspect of the life being documented.

One cannot help but feel that she protested a bit too much. After all, her original domain name was boudoir.org, and she wasn’t above performing the occasional striptease for the camera. Even if she hadn’t played for the camera so obviously at times, we would have reason to doubt whether the scenes it captured were the same as they would have been had the camera not been present. For, as documentary-film theory teaches us, the “fly on the wall” is a myth; the camera always changes the scene it captures by the very fact of its presence.

Like Jen and Dave, Ringley first put her pictures online for free, but later she began charging for access. At its peak, her site was getting millions of hits every day. “The peep-show nature of the medium was enough to get viewers turned on,” writes Patchen Barss in The Erotic Engine, a study of pornography. “Just having a window into a real person’s life was plenty — people would pay for the occasional chance to observe Ringley’s non-porn-star-like sex life, or to just catch her walking naked to the shower.”

Ringley inspired countless imitators, some likewise insisting that they were engaged in a social experiment or art project, others leaning more frankly into titillation. Some of the shine went off the experiment for her personally in 2000, when she was captured enjoying a tryst with the fiancé of another “cam girl.” (Ah, what a strange world it was already becoming…) The same mainstream media that had been burning with high-minded questions to ask her a few years earlier now labeled her a “redheaded little minx” and “amoral man-trapper.” Still, she kept her site going until December 31, 2003, making a decent living from a 95-percent male clientele who wanted the thrill of being a Peeping Tom without the dangers.

Sites like Jen and Dave’s and to some extent Jennifer Ringley’s existed on the hazy border between amateur exhibitionism and porn as a business. Much of their charm, if that is a word that applies in your opinion, stems from their ramshackle quality. But other keen minds realized early on that online porn was going to be huge, and set out far more methodically to capitalize on it.

One of the most interesting and unique of them was the stripper and model who called herself Danni Ashe, who marketed herself as a living, breathing fantasy of nerdy males, a “geek with big breasts,” as she put it. Well before becoming an online star, she was a headline attraction at strip clubs all over the country, thanks to skin-magazine “profiles” and soft-core videos. “I ventured onto the Internet and quickly got into the Usenet newsgroups, where I was hearing that my pictures were being posted, and started talking to people,” she said later. “I spent several really intense months in the newsgroups, and it was out of those conversations that the idea for Danni’s Hard Drive was born.” According to her official lore, she learned HTML during a vacation to the Bahamas and coded up her site all by herself from scratch.

In contrast to the sites we’ve already met, Danni’s Hard Drive was designed to make money from the start. It went live with a subscription price of $20 per month, which provided access to hundreds of nude and semi-nude photographs of the proprietor and, soon enough, many other women as well. Ashe dealt only in pictorials not much more explicit than those seen in Playboy, both in the case of her own pictures and those of others. As Samantha Cole writes, “Danni never shot any content with men and never posted images of herself with anything — even a sex toy — inside her.” Despite its defiantly soft-core nature in a field where extremism usually reigns supreme, some accounts claim that Danni’s Hard Drive was the busiest single site on the Internet for a couple of years, consuming more bandwidth each day than the entirety of Central America. It was as innovative as it was profitable, setting into place more building blocks of the post-millennial Web. Most notably, it pioneered online video streaming via a proprietary technology called DanniVision more than half a decade before YouTube came to be.

Danni’s Hard Drive had 25,000 subscribers by the time DanniVision was added to its portfolio of temptations in 1999. It weathered the dot-com crash of the year 2000 with nary a scratch. In 2001, the business employed 45 people behind the cameras — almost all of them women — and turned an $8 million annual profit. Savvy businesswoman that she was, Ashe sold out at the perfect moment, walking away with millions in her pocket in 2004.

Equally savvy was one Beth Mansfield, who realized, like Richard Gordon before her, that the easiest and safest way to earn money from porn was as a facilitator rather than a maker. She was a 36-year-old unemployed accountant and single mother living with her children in a trailer in Alabama when she heard the buzz about the Web and resolved to find a way to make a living online. She decided that porn was the easiest content area in which to do so, even though she had no personal interest in it whatsoever. It was just smart business; she saw a hole in an existing market and figured out how to fill it.

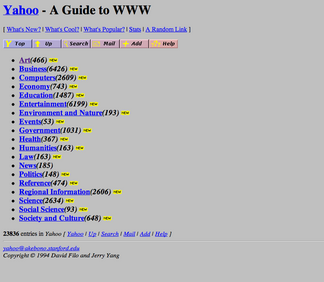

Said hole was the lack of a good way to find the porn you found most exciting. With automated site-indexing Web crawlers still in their infancy, most people’s on-ramp to the Web at the time was the Yahoo! home page, an exhaustive list of hand-curated links, a sort of Internet Yellow Pages. But Yahoo! wasn’t about to risk offending the investors who had just rewarded it with the splashiest IPO this side of Netscape’s by getting into porn curation.

So, Mansfield decided to make her own Yahoo! for porn. She called it Persian Kitty, after the family cat. Anyone could submit a porn site to her to be listed, after which she would do her due diligence by ensuring it was what they said it was and add it to one or more of her many fussily specific categories. She compared her relationship with the sex organs she spent hours per day staring at to that of a gynecologist: “I’m probably the strangest adult cruiser there is. I go and look at the structure [of the site, not the sex organs!], look at what they offer, count the images, and I’m out.” While a simple listing on Persian Kitty was free, she made money — quite a lot of money — by getting the owners of porn sites to pay for banner advertisements and priority placement within the categories, long before such online advertising went mainstream. Like Danni Ashe, she eventually sold her business for a small fortune and walked away.

We’ve met an unexpected number of female entrepreneurs thus far. And indeed, if you’re looking for positives to take away from the earliest days of online porn, one of them must surely be the number of women who took advantage of the low barriers to entry in online media to make or facilitate porn on their own terms — a welcome contrast to the notoriously exploitive old-school porn industry, a morally reprehensible place regardless of your views on sexual mores. “People have an idea of who runs a sexually oriented site on the Web,” said Danni Ashe during a chance encounter with the film critic Roger Ebert at Cannes. “They think of a dirty old man with a cigar. A Mafia type.” However you judged her, she certainly didn’t fit that stereotype.

Sadly, though, the stereotype became more and more the rule as time went on and the money waiting to be made from sex on the Web continued to escalate almost exponentially. By the turn of the millennium, the online porn industry was mostly controlled by men, just like the offline one. In the end, that is to say, Richard Gordon rather than Danni Ashe or Beth Mansfield became the archetypal porn entrepreneur online as well.

Another of the new bosses who were the same as the old was Ron “Fantasy Man” Levi, an imposing amalgamation of muscles, tattoos, and hair tonic who looked and lived like a character out of a mob movie. Having made his first fortune as the owner of a network of phone-sex providers, Levi, like Gordon before him, turned to the Web as the logical next frontier. His programmers developed the technology behind what we now refer to as “online affiliate marketing,” yet another of the mainstays of modern e-commerce, in a package he called the “XXX Counter.”

In a sense, it was just a super-powered version of what Beth Mansfield was already doing on Persian Kitty. By taking advantage of cookies — small chunks of persistent information that a Web browser can be asked to store on a user’s hard drive, that can then be used to track that user’s progress from site to site — the XXX Counter was able to see exactly what links had turned a sex-curious surfer into a paying customer of one or more porn sites.
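For the curious, the general mechanism can be sketched in a few lines of Python. To be clear, this is not a reconstruction of the XXX Counter, whose internals aren't described here; the cookie name `ref`, the 30-day lifetime, the commission rate, and all three function names are assumptions invented purely for illustration. The sketch uses only the standard library's `http.cookies` module.

```python
from http.cookies import SimpleCookie
from typing import Optional

SECONDS_PER_DAY = 24 * 60 * 60


def referral_cookie_header(affiliate_id: str, max_age_days: int = 30) -> str:
    """Build a Set-Cookie value that a pay site could send when a visitor
    arrives via a referring site's link (hypothetical cookie name "ref")."""
    cookie = SimpleCookie()
    cookie["ref"] = affiliate_id
    cookie["ref"]["max-age"] = max_age_days * SECONDS_PER_DAY
    cookie["ref"]["path"] = "/"
    return cookie["ref"].OutputString()


def credited_affiliate(cookie_header: str) -> Optional[str]:
    """Parse the browser's Cookie request header at sign-up time and
    return the referring site to credit, or None if there is none."""
    cookie = SimpleCookie()
    cookie.load(cookie_header)
    morsel = cookie.get("ref")
    return morsel.value if morsel is not None else None


def commission_cents(sale_cents: int, rate_percent: int) -> int:
    """Work out the referrer's cut of a sale, in whole cents."""
    return sale_cents * rate_percent // 100


if __name__ == "__main__":
    # Step 1: the visitor clicks through from a referring site; the pay
    # site answers with this Set-Cookie value, which the browser stores.
    print(referral_cookie_header("site-a"))
    # Step 2: days later the same browser returns and subscribes; the
    # stored cookie tells the pay site which referrer earned the sale.
    print(credited_affiliate("theme=dark; ref=site-a"))
    print(commission_cents(1500, 20))
```

The important design point is that the referral is remembered by the visitor's own browser rather than by the referring site, which is what allows credit to be assigned even when the purchase happens on a later visit.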

This technology is almost as important to the commercial Web of today as the ability to accept credit cards. It’s employed by countless online stores from Amazon on down, being among other things the reason that a profession with the title of “online influencer” exists today. (Oh, what a strange world we live in…) Patchen Barss:

> The esoteric computer technology which originally merely allowed EuroNubiles.com to know when PantyhosePlanet.com had sent some customers their way is today a key part of how Amazon, iTunes, eBay, and thousands of other online retailers work. Each offers a commission system for referring sites that send paying traffic their way. They rarely acknowledge that this key part of their business model was developed and refined by the adult industry.

All of the stories and players we’ve met thus far, along with many, many more, added up to a thriving industry, long before respectable e-commerce was much more than a twinkle in Jeff Bezos’s eye. Wired magazine reported in its December 1997 issue that an extraordinary 28,000 adult sites now existed on the World Wide Web, and that one or more of them were visited by 30 percent of all Internet-connected computers every single month. Estimates of the annual revenues they were bringing in ranged from $100 million to $1.2 billion. The joke in Silicon Valley and Wall Street alike was that porn was the only thing yet making real money on the Web (as opposed to the funny money of IPOs and venture capitalists, a product of aspirations rather than operations). Porn was the only form of online content that people had as yet definitively shown themselves to be willing — eager, in fact — to pay for. From the same Wired article:

> Within the information-and-entertainment category — sales of online content, as opposed to consumer goods and financial services — commercial sex sites are almost the only ones in the black. Other content providers, operating in an environment that puts any offering that doesn’t promise an orgasm at a competitive disadvantage, are still trying to come up with a viable business model. ESPN SportsZone may be one of the most popular content sites on the Web, but most of what it offers is free. Online game developers can’t figure out whether to impose a flat fee or charge by the hour or rely on ad sales. USA Today had to cut the monthly subscription fee on its website from $15 to $13 and finally to nothing. Among major print publications, only The Wall Street Journal has managed to impose a blanket subscription fee.
>
> “Sex and money,” observes Mike Wheeler, president of MSNBC Desktop Video, a Web-based video news service for the corporate market. “Those are the two areas you can charge for.”

The San Francisco Chronicle put it more succinctly at about the same time: “There’s a two-word mantra for people who want to make money on the Internet — sex sells.”

Ironically, the Communications Decency Act — the law that had first prompted so many online porn operators to lock their content behind paywalls — was already history by the time the publications wrote these words. The Supreme Court had struck it down once and for all in June of 1997, calling it a gross violation of the right to free speech. Nevertheless, the paywalled porn sites remained. Too many people were making too much money for it to be otherwise. In attempting to stamp out online porn, Senators Coats and Exon had helped to create a monster beyond their wildest nightmares.

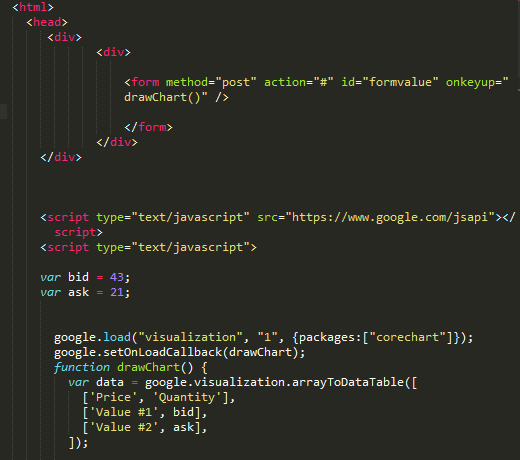

In addition to blazing the trails that the Jeff Bezoses of the world would soon follow in terms of online payments and affiliate marketing, porn sites were embracing new technologies like JavaScript before just about anyone else. As Wired magazine wrote, “No matter how you feel about their content, sex sites are among the most visually dazzling around.” “We’re on the cutting edge of everything,” said one porn-site designer. “If there’s a new technology out there and we want to add it to the site, it’s not hard to convince management.”

I could keep on going, through online technology after online technology. For example, take the video-conferencing systems that have become such a mainstay of business life around the world since the COVID-19 pandemic. Porn was their first killer app, after some enterprising entrepreneurs figured out that the only thing better than phone sex was phone sex with video. The porn mavens even anticipated (ominously, some might say) the business models of modern-day social-media sites. “The consumers are the content!” crowed one of them in the midst of setting up a site for amateur porn stars to let it all hang out. The vast majority of that deluge of new porn that now gets uploaded every day comes from amateurs who expect little or nothing in payment beyond the thrill of knowing that others are getting off on watching them. The people hosting this material understand what Richard Gordon, Beth Mansfield, and Ron Levi knew before them. Allow me to repeat it one more time, just for good measure: the real money is in porn facilitation, not in porn production.

In light of all this, it’s small wonder that nobody talked much about porn on CD-ROM after 1996, and that AdultDex became all about online sex, showcasing products like a “$100,000 turnkey cyberporn system”: a porn site in a box, perfect for those looking to break into the Web’s hottest sector in a hurry. “The whole Internet is being driven by the adult industry,” said one AdultDex exhibitor who asked not to be named. “If all this were made illegal tomorrow, the Internet would go back to being a bunch of scientists discussing geek stuff in email.” That might have been overstating the case just a bit, but there was no denying that virtual sex was at the vanguard of the most revolutionary development in mass communications since the printing press. The World Wide Web had fulfilled the promise of the seedy ROM.

Sources: The books How Sex Changed the Internet and the Internet Changed Sex by Samantha Cole, Obscene Profits: The Entrepreneurs of Pornography in the Cyber Age by Frederick S. Lane III, The Players Ball: A Genius, a Con Man, and the Secret History of the Internet’s Rise by David Kushner, The Erotic Engine by Patchen Barss, and The Pornography Wars: The Past, Present, and Future of America’s Obscene Obsession by Kelsy Burke. Wired of February 1997 and December 1997; Time of July 1995; San Francisco Chronicle of November 19, 1997; San Diego Tribune of May 8, 2017; New York Times of August 1, 2003, and May 1, 2004; Wall Street Journal of May 20, 1997. Online sources include “Sex Sells, Doesn’t It?” by Mark Gimein on Salon, Jen and Dave’s current (porn-free) home page, and “‘I Started Really Getting Into It’: Seven Pioneers of Amateur Porn Look Back” by Alexa Tsoulis-Reay at The Cut.

You can find the 1990s-vintage Jen and Dave, JenniCam, Danni’s Hard Drive, and Persian Kitty at archive.org. Needless to say, you should understand what you are getting into before you visit.

Finally, for a highly fictionalized and sensationalized but entertaining and truthy tale about the early days of online porn, see the 2009 movie Middle Men.