While wide-area computer networking, packet switching, and the Internet were coming of age, all of the individual computers on the wire were becoming exponentially faster, exponentially more capacious internally, and exponentially smaller externally. The pace of their evolution was unprecedented in the history of technology; had automobiles been improved at a similar rate, the Ford Model T would have gone supersonic within ten years of its introduction. We should take a moment now to find out why and how such a torrid pace was maintained.

As Claude Shannon and others realized before World War II, a digital computer in the abstract is an elaborate exercise in boolean logic, a dynamic matrix of on-off switches — or, if you like, of ones and zeroes. The more of these switches a computer has, the more it can be and do. The first Turing-complete digital computers, such as ENIAC and Whirlwind, implemented their logical switches using vacuum tubes, a venerable technology inherited from telephony. Each vacuum tube was about as big as an incandescent light bulb, consumed a similar amount of power, and tended to burn out almost as frequently. These factors made the computers which employed vacuum tubes massive edifices that required as much power as the typical city block, even as they struggled to maintain an uptime of more than 50 percent — and all for the tiniest sliver of one percent of the overall throughput of the smartphones we carry in our pockets today. Computers of this generation were so huge, expensive, and maintenance-heavy in relation to what they could actually be used to accomplish that they were largely limited to government-funded research institutions and military applications.

Computing’s first dramatic leap forward in terms of its basic technological underpinnings also came courtesy of telephony. More specifically, it came in the form of the transistor, a technology which had been invented at Bell Labs in December of 1947 with the aim of improving telephone switching circuits. A transistor could function as a logical switch just as a vacuum tube could, but it was a minute fraction of the size, consumed vastly less power, and was infinitely more reliable. The computers which IBM built for the SAGE project during the 1950s straddled this technological divide, employing a mixture of vacuum tubes and transistors. But by 1960, the computer industry had fully and permanently embraced the transistor. While still huge and unwieldy by modern standards, computers of this era were practical and cost-effective for a much broader range of applications than their predecessors had been; corporate computing started in earnest in the transistor era.

Nevertheless, wiring together tens of thousands of discrete transistors remained a daunting task for manufacturers, and the most high-powered computers still tended to fill large rooms if not entire building floors. Thankfully, a better way was in the offing. Already in 1958, a Texas Instruments engineer named Jack Kilby had come up with the idea of the integrated circuit: a collection of miniaturized transistors and other electrical components embedded in a silicon wafer, the whole being suitable for stamping out quickly in great quantities by automated machinery. Kilby invented, in other words, the soon-to-be ubiquitous computer chip, which could be wired together with its mates to produce computers that were not only smaller but easier and cheaper to manufacture than those that had come before. By the mid-1960s, the industry was already in the midst of the transition from discrete transistors to integrated circuits, producing some machines that were no larger than a refrigerator; among these was the Honeywell 516, the computer which was turned into the world’s first network router.

As chip-fabrication systems improved, designers were able to miniaturize the circuitry on the wafers more and more, allowing ever more computing horsepower to be packed into a given amount of physical space. An engineer named Gordon Moore proposed the principle that has become known as Moore’s Law: he calculated that the number of transistors which can be stamped into a chip of a given size doubles every second year. (When he first stated his law in 1965, Moore actually proposed a doubling every single year, but he revised his calculations in 1975.) In July of 1968, Moore and a colleague named Robert Noyce formed the chip maker known as Intel to make the most of Moore’s Law. The company has remained on the cutting edge of chip fabrication to this day.
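
Stated as a formula, the two-year version of Moore’s Law is a simple exponential. In the worked example below, $N_0$ is the number of transistors on a chip of a given size in some starting year $t_0$, and $N(t)$ is the number in a later year $t$. Treating the doubling period as exactly two years is a simplifying assumption; real chips only ever tracked the trend approximately.

$$
N(t) = N_0 \cdot 2^{(t - t_0)/2},
\qquad
\frac{N(t_0 + 10)}{N_0} = 2^{10/2} = 2^{5} = 32.
$$

Over a single decade, that amounts to a 32-fold increase. Under the one-doubling-per-year rate Moore first proposed in 1965, the same decade would have yielded $2^{10} = 1024$ times as many transistors.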

The next step was perhaps inevitable, but it nevertheless occurred almost by accident. In 1971, an Intel engineer named Federico Faggin put all of the circuits making up a computer’s arithmetic, logic, and control units — the central “brain” of a computer — onto a single chip. And so the microprocessor was born. No one involved with the project at the time anticipated that the Intel 4004 central-processing unit would open the door to a new generation of general-purpose “microcomputers” that were small enough to sit on desktops and cheap enough to be purchased by ordinary households. Faggin and his colleagues rather saw the 4004 as a fairly modest, incremental advancement of the state of the art, which would be deployed strictly to assist bigger computers by serving as the brains of disk controllers and other single-purpose peripherals. Before we rush to judge them too harshly for their lack of vision, we should remember that they are far from the only inventors in history who have failed to grasp the real importance of their creations.

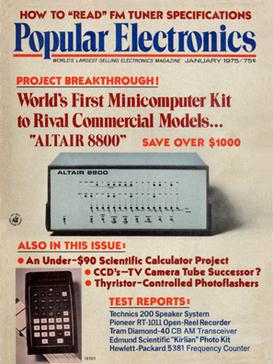

At any rate, it was left to independent tinkerers who had been dreaming of owning a computer of their own for years, and who now saw in the microprocessor the opportunity to do just that, to invent the personal computer as we know it. The January 1975 issue of Popular Electronics sports one of the most famous magazine covers in the history of American technology: it announces the $439 Altair 8800, from a tiny Albuquerque, New Mexico-based company known as MITS. The Altair was nothing less than a complete put-it-together-yourself microcomputer kit, built around the Intel 8080 microprocessor, a successor model to the 4004.

The next milestone came in 1977, when three separate companies announced three separate pre-assembled, plug-em-in-and-go personal computers: the Apple II, the Radio Shack TRS-80, and the Commodore PET. In terms of raw computing power, these machines were a joke compared to the latest institutional hardware. Nonetheless, they were real, Turing-complete computers that many people could afford to buy and proceed to tinker with to their heart’s content right in their own homes. They truly were personal computers: their buyers didn’t have to share them with anyone. It is difficult to fully express today just how extraordinary an idea this was in 1977.

This very website’s early years were dedicated to exploring some of the many things such people got up to with their new dream machines, so I won’t belabor the subject here. Suffice it to say that those first personal computers were, although of limited practical utility, endlessly fascinating engines of creativity and discovery for those willing and able to engage with them on their own terms. People wrote programs on them, drew pictures and composed music, and of course played games, just as their counterparts on the bigger machines had been doing for quite some time. And then, too, some of them went online.

The first microcomputer modems hit the market the same year as the trinity of 1977. They operated on the same principles as the modems developed for the SAGE project a quarter-century before — albeit even more slowly. Hobbyists could thus begin experimenting with connecting their otherwise discrete microcomputers together, at least for the duration of a phone call.

But some entrepreneurs had grander ambitions. In July of 1979, not one but two subscription-based online services, known as CompuServe and The Source, were announced almost simultaneously. Soon anyone with a computer, a modem, and the requisite disposable income could dial them up to socialize with others, entertain themselves, and access a growing range of useful information.

Again, I’ve written about this subject in some detail before, so I won’t do so at length here. I do want to point out, however, that many of J.C.R. Licklider’s fondest predictions for the computer networks of the future first became a reality on the dozen or so of these commercial online services that managed to attract significant numbers of subscribers over the years. It was here, even more so than on the early Internet proper, that his prognostications about communities based on mutual interest rather than geographical proximity proved their prescience. Online chatting, online dating, online gaming, online travel reservations, and online shopping first took hold here, first became a fact of life for people sitting in their living rooms. People who seldom or never met one another face to face or even heard one another’s voices formed relationships that felt as real and as present in their day-to-day lives as any others — a new phenomenon in the history of social interaction. At their peak circa 1995, the commercial online services had more than 6.5 million subscribers in all.

Yet these services failed to live up to the entirety of Licklider’s old dream of an Intergalactic Computer Network. They were communities, yes, but not quite networks in the sense of the Internet. Each of them lived on a single big mainframe, or at most a cluster of them, in a single data center, which you dialed into using your microcomputer. Once online, you could interact in real time with the hundreds or thousands of others who might have dialed in at the same time, but you couldn’t go outside the walled garden of the service to which you’d chosen to subscribe. That is to say, if you’d chosen to sign up with CompuServe, you couldn’t talk to someone who had chosen The Source. And whereas the Internet was anarchic by design, the commercial online services were steered by the iron hands of the companies who had set them up. Although individual subscribers could and often did contribute content and in some ways set the tone of the services they used, they did so always at the sufferance of their corporate overlords.

Through much of the fifteen years or so that the commercial services reigned supreme, many or most microcomputer owners failed to even realize that an alternative called the Internet existed. Which is not to say that the Internet was without its own form of social life. Its more casual side centered on an online institution known as Usenet, which had arrived on the scene in late 1979, almost simultaneously with the first commercial services.

At bottom, Usenet was (and is) a set of protocols for sharing public messages, just as email served that purpose for private ones. What set it apart from the bustling public forums on services like CompuServe was its determinedly non-centralized nature. Usenet as a whole was a network of many servers, each storing a local copy of the network’s many “newsgroups,” or forums for discussions on particular topics. Users could read and post messages using any of the servers, either by sitting in front of the server’s own keyboard and monitor or, more commonly, through some form of remote connection. When a user posted a new message to one server, that server passed it on to several others, which were then expected to pass it further, until the message had propagated through the whole network of Usenet servers. The system’s asynchronous nature could distort conversations; messages reached different servers at different times, which meant you could all too easily find yourself replying to a post that had already been retracted, or making a point someone else had already made before you. But on the other hand, Usenet was almost impossible to break completely — just like the Internet itself.
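
The store-and-forward flooding described above can be sketched in a few lines of Python. This is a simplified model, not Usenet’s actual software: the function name, the server names, and the feed topology are all hypothetical, and the duplicate check stands in for the Message-ID header that real Usenet articles carry so that servers can recognize and discard copies they already hold.

```python
from collections import deque


def propagate(feeds: dict[str, list[str]], origin: str, message_id: str,
              stores: dict[str, set[str]]) -> dict[str, int]:
    """Flood one article outward from `origin` across a feed graph.

    feeds:  server -> servers it forwards new articles to (hypothetical topology)
    stores: server -> set of article IDs that server already holds
    Returns each server's hop distance from `origin` when the article first arrived.
    """
    arrival: dict[str, int] = {}
    queue = deque([(origin, 0)])
    while queue:
        server, hops = queue.popleft()
        held = stores.setdefault(server, set())
        if message_id in held:
            continue  # duplicate copy: already stored, so drop it and do not forward
        held.add(message_id)
        arrival[server] = hops
        for peer in feeds.get(server, []):
            queue.append((peer, hops + 1))  # pass the article along to each peer
    return arrival


if __name__ == "__main__":
    # Hypothetical four-server network whose feeds form a loop back to "a".
    feeds = {"a": ["b", "c"], "b": ["d"], "c": ["d"], "d": ["a"]}
    stores = {server: set() for server in feeds}
    print(propagate(feeds, "a", "<1@a>", stores))
    # prints {'a': 0, 'b': 1, 'c': 1, 'd': 2}
```

Because each server forwards an article only the first time it sees it, the flood terminates even when the feeds form loops, and servers farther from the origin receive the article later than nearer ones. Cutting a single link, such as the feed from "b" to "d", still leaves "d" reachable through "c".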

Strictly speaking, Usenet did not depend on the Internet for its existence. As far as it was concerned, its servers could pass messages among themselves in whatever way they found most convenient. In its first few years, this sometimes meant that they dialed one another up directly over ordinary phone lines and talked via modem. As it matured into a mainstay of hacker culture, however, Usenet gradually became almost inseparable from the Internet itself in the minds of most of its users.

From the three servers that marked its inauguration in 1979, Usenet expanded to 11,000 by 1988. The discussions that took place there didn’t quite encompass the whole of the human experience equally; the demographics of the hacker user base meant that computer programming tended to get more play than knitting, Pink Floyd more play than Madonna, and science-fiction novels more play than romances. Still, the newsgroups were nothing if not energetic and free-wheeling. For better or for worse, they regularly went places the commercial online services didn’t dare allow. For example, Usenet became one of the original bastions of online pornography, first in the form of fevered textual fantasies, then in the somehow even more quaint form of “ASCII art,” and finally, once enough computers had the graphics capabilities to make it worthwhile, as actual digitized photographs. In light of this, some folks expressed relief that it was downright difficult to get access to Usenet and the rest of the Internet if one didn’t teach or attend classes at a university, or work at a tech company or government agency.

The perception of the Internet as a lawless jungle, more exciting but also more dangerous than the neatly trimmed gardens of the commercial online services, was cemented by the Morris Worm, which was featured on the front page of the New York Times for four straight days in November of 1988. Created by a 23-year-old Cornell University graduate student named Robert Tappan Morris, it served as many people’s ironic first notice that a network called the Internet existed at all. The worm, which its creator later insisted had been meant only as a harmless prank, spread by exploiting flaws in some of the core networking programs that ran under Unix, a powerful and flexible operating system that was by far the most popular among Internet-connected computers at the time. The Morris Worm came as close as anything ever has to bringing the entire Internet down when its exponential rate of growth effectively turned it into a network-wide denial-of-service attack — again, accidentally, if its creator is to be believed. (Morris himself came very close to a prison sentence, but escaped with three years of probation, a $10,000 fine, and 400 hours of community service, after which he went on to a lucrative career in the tech sector at the height of the dot-com boom.)

Attitudes toward the Internet in the less rarefied wings of the computing press had barely begun to change even by the beginning of the 1990s. An article from the issue of InfoWorld dated February 4, 1991, encapsulates the contemporary perceptions among everyday personal-computer owners of this “vast collection of networks” which is “a mystery even to people who call it home.”

> It is a highway of ideas, a collective brain for the nation’s scientists, and perhaps the world’s most important computer bulletin board. Connecting all the great research institutions, a large network known collectively as the Internet is where scientists, researchers, and thousands of ordinary computer users get their daily fix of news and gossip.
>
> But it is the same network whose traffic is occasionally dominated by X-rated graphics files, UFO sighting reports, and other “recreational” topics. It is the network where renegade “worm” programs and hackers occasionally make the news.
>
> As with all communities, this electronic village has both high- and low-brow neighborhoods, and residents of one sometimes live in the other.
>
> What most people call the Internet is really a jumble of networks rooted in academic and research institutions. Together these networks connect over 40 countries, providing electronic mail, file transfer, remote login, software archives, and news to users on 2000 networks.
>
> Think of a place where serious science comes from, whether it’s MIT, the national laboratories, a university, or [a] private enterprise, [and] chances are you’ll find an Internet address. Add [together] all the major sites, and you have the seeds of what detractors sometimes call “Anarchy Net.”
>
> Many people find the Internet to be shrouded in a cloud of mystery, perhaps even intrigue.
>
> With addresses composed of what look like contractions surrounded by ‘!’s, ‘@’s, and ‘.’s, even Internet electronic mail seems to be from another world. Never mind that these “bangs,” “at signs,” and “dots” create an addressing system valid worldwide; simply getting an Internet address can be difficult if you don’t know whom to ask. Unlike CompuServe or one of the other email services, there isn’t a single point of contact. There are as many ways to get “on” the Internet as there are nodes.
>
> At the same time, this complexity serves to keep “outsiders” off the network, effectively limiting access to the world’s technological elite.

The author of this article would doubtless have been shocked to learn that within just four or five years this confusing, seemingly willfully off-putting network of scientists and computer nerds would become the hottest buzzword in media, and that absolutely everybody, from your grandmother to your kids’ grade-school teacher, would be rushing to get onto this Internet thing before they were left behind, even as stalwart rocks of the online ecosystem of 1991 like CompuServe would already be well on their way to becoming relics of a bygone age.

The Internet had begun in the United States, and the locus of the early mainstream excitement over it would soon return there. In between, though, the stroke of inventive genius that would lead to said excitement would happen in the Old World confines of Switzerland.

> In many respects, he looks like an Englishman from central casting — quiet, courteous, reserved. Ask him about his family life and you hit a polite but exceedingly blank wall. Ask him about the Web, however, and he is suddenly transformed into an Italian — words tumble out nineteen to the dozen and he gesticulates like mad. There’s a deep, deep passion here. And why not? It is, after all, his baby.

— John Naughton, writing about Tim Berners-Lee

The seeds of the Conseil Européen pour la Recherche Nucléaire — better known in the Anglosphere as simply CERN — were planted amidst the devastation of post-World War II Europe by the great French quantum physicist Louis de Broglie. Possessing an almost religious faith in pure science as a force for good in the world, he proposed a new, pan-European foundation dedicated to exploring the subatomic realm. “At a time when the talk is of uniting the peoples of Europe,” he said, “[my] attention has turned to the question of developing this new international unit, a laboratory or institution where it would be possible to carry out scientific work above and beyond the framework of the various nations taking part. What each European nation is unable to do alone, a united Europe can do, and, I have no doubt, would do brilliantly.” After years of dedicated lobbying on de Broglie’s part, CERN officially came to be in 1954, with its base of operations in Geneva, Switzerland, one of the places where Europeans have traditionally come together for all manner of purposes.

The general technological trend at CERN over the following decades was the polar opposite of what was happening in computing: as scientists attempted to peer deeper and deeper into the subatomic realm, the machines they required kept getting bigger and bigger. Between 1983 and 1989, CERN built the Large Electron-Positron Collider near Geneva. With a circumference of almost seventeen miles, it was the largest single machine ever built in the history of the world. Managing projects of such magnitude, some of them employing hundreds of scientists and thousands of support staff, required a substantial computing infrastructure, along with many programmers and systems architects to run it. Among this group was a quiet Briton named Tim Berners-Lee.

Berners-Lee’s credentials were perfect for his role. He had earned a bachelor’s degree in physics from Oxford in 1976, only to find that pure science didn’t satisfy his urge to create practical things that real people could make use of. As it happened, both of his parents were computer scientists of considerable note; they had both worked on the Ferranti Mark 1, the commercial version of the University of Manchester’s Mark 1, one of the world’s first stored-program computers. So, it was natural for their son to follow in their footsteps, to make a career for himself in the burgeoning new field of microcomputing. Said career took him to CERN for a six-month contract in 1980, then back to Geneva on a more permanent basis in 1984. Because of his background in physics, Berners-Lee could understand the needs of the scientists he served better than many of his colleagues; his talent for devising workable solutions to their problems turned him into something of a star at CERN. Among other projects, he labored long and hard to devise a way of making the thousands upon thousands of pages of documentation that were generated at CERN each year accessible, manageable, and navigable.

But, for all that Berners-Lee was being paid to create an internal documentation system for CERN, it’s clear that he began thinking along bigger lines fairly quickly. The same problems of navigation and discoverability that dogged his colleagues at CERN were massively present on the Internet as a whole. Information was hidden there in out-of-the-way repositories that could only be accessed using command-line-driven software with obscure command sets — if, that is, you knew that it existed at all.

His idea of a better way came courtesy of hypertext theory: a non-linear approach to reading texts and navigating an information space, built around associative links embedded within and between texts. First proposed by Vannevar Bush, the World War II-era MIT giant whom we briefly met in an earlier article in this series, hypertext theory had later proved a superb fit with a mouse-driven graphical computer interface which had been pioneered at Xerox PARC during the 1970s under the astute management of our old friend Robert Taylor. The PARC approach to user interfaces reached the consumer market in a prominent way for the first time in 1984 as the defining feature of the Apple Macintosh. And the Mac in turn went on to become the early hotbed of hypertext experimentation on consumer-grade personal computers, thanks to Apple’s own HyperCard authoring system and the HyperCard-driven laser discs and CD-ROMs that soon emerged from companies like Voyager.

The user interfaces found in HyperCard applications were surprisingly similar to those found in the web browsers of today, but they were limited to the curated, static content found on a single floppy disk or CD-ROM. “They’ve already done the difficult bit!” Berners-Lee remembers thinking. Now someone just needed to put hypertext on the Internet, to allow files on one computer to link to files on another, with anyone and everyone able to create such links. He saw how “a single hypertext link could lead to an enormous, unbounded world.” Yet no one else seemed to see this. So, he decided at last to do it himself. In a fit of self-deprecating mock-grandiosity, not at all dissimilar to J.C.R. Licklider’s call for an “Intergalactic Computer Network,” he named his proposed system the “World Wide Web.” He had no idea how perfect the name would prove.

He sat down to create his World Wide Web in October of 1990, using a NeXT workstation computer, the flagship product of the company Steve Jobs had formed after getting booted out of Apple several years earlier. It was an expensive machine — far too expensive for the ordinary consumer market — but supremely elegant, combining the power of the hacker-favorite operating system Unix with the graphical user interface of the Macintosh.

The NeXT computer on which Tim Berners-Lee created the foundations of the World Wide Web. It then went on to become the world’s first web server.

Progress was swift. In less than three months, Berners-Lee coded the world’s first web server and browser, which also entailed developing the Hypertext Transfer Protocol (HTTP) they used to communicate with one another and the Hypertext Markup Language (HTML) for embedding associative links into documents. These were the foundational technologies of the Web, which still remain essential to the networked digital world we know today.
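
As a rough illustration of how spare the original protocol was, here is a minimal Python sketch of a server answering a request in the style of HTTP/0.9, the form the earliest web servers spoke: the client sent a single GET line naming a document, and the server replied with the HTML itself, with no status line or headers, then closed the connection. The function name, the in-memory `documents` table, and the small HTML error messages are hypothetical simplifications; a real server read files from disk and spoke over a TCP socket.

```python
def handle_http09(request_line: str, documents: dict[str, str]) -> str:
    """Answer one HTTP/0.9-style request line such as 'GET /phonebook.html'.

    `documents` is a hypothetical in-memory stand-in for the server's files,
    mapping a document path to its HTML text.
    """
    parts = request_line.strip().split()
    # The only request form this sketch accepts: the word GET plus one path.
    if len(parts) != 2 or parts[0] != "GET":
        return "<p>Bad request</p>"
    path = parts[1]
    # No status line or headers: the response is just the document's HTML,
    # and a missing page can only be reported in the HTML body itself.
    return documents.get(path, "<p>Not found</p>")


if __name__ == "__main__":
    # Hypothetical phone-list page containing one hypertext link.
    docs = {"/phonebook.html": '<a href="/staff.html">Staff directory</a>'}
    print(handle_http09("GET /phonebook.html\r\n", docs))
    # prints <a href="/staff.html">Staff directory</a>
```

The HTML returned here carries its links as `<a href="...">` anchors; a browser that followed one would simply issue another one-line GET for the linked path, which is all the protocol needed to knit documents on different machines together.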

The first page to go up on the nascent World Wide Web, which belied its name at this point by being available only inside CERN, was a list of phone numbers of the people who worked there. Because clicking through its hypertext links was much easier than entering commands into the database application CERN had previously used for the purpose, the phone list got Berners-Lee’s browser installed on dozens of NeXT computers. But the really big step came in August of 1991, when, having debugged and refined his system as thoroughly as he was able by using his CERN colleagues as guinea pigs, he made his web browser, his web server, and documentation on how to use HTML to create web documents available to all via Usenet. The response was not immediately overwhelming, but it was gratifying in a modest way. Berners-Lee:

> People who saw the Web and realised the sense of unbound opportunity began installing the server and posting information. Then they added links to related sites that they found were complimentary or simply interesting. The Web began to be picked up by people around the world. The messages from system managers began to stream in: “Hey, I thought you’d be interested. I just put up a Web server.”

Tim Berners-Lee’s original web browser, which he named Nexus in honor of its host platform. The NeXT computer actually had quite impressive graphics capabilities, but you’d never know it by looking at Nexus.

In December of 1991, Berners-Lee begged for and was reluctantly granted a chance to demonstrate the World Wide Web at that year’s official Hypertext conference in San Antonio, Texas. He arrived with high hopes, only to be accorded a cool reception. The hypertext movement came complete with more than its fair share of stodgy theorists with rigid ideas about how hypertext ought to work — ideas which tended to have more to do with the closed, curated experiences of HyperCard than the anarchic open Internet. Normally modest almost to a fault, the Berners-Lee of today does allow himself to savor the fact that “at the same conference two years later, every project on display would have something to do with the Web.”

But the biggest factor holding the Web back at this point wasn’t the resistance of the academics; it was rather its being bound so tightly to the NeXT machines, which had a total user base of no more than a few tens of thousands, almost all of them at universities and research institutions like CERN. Although some browsers had been created for other, more popular computers, they didn’t sport the effortless point-and-click interface of Berners-Lee’s original; instead they presented their links like footnotes, whose numbers the user had to type in to visit them. Thus Berners-Lee and the fellow travelers who were starting to coalesce around him made it their priority in 1992 to encourage the development of more point-and-click web browsers. One for the X Window System, the graphical-interface layer which had been developed for the previously text-only Unix, appeared in April. Even more importantly, a Macintosh browser arrived just a month later; this marked the first time that the World Wide Web could be explored in the way Berners-Lee had envisioned on a computer that the proverbial ordinary person might own and use.

Amidst the organization directories and technical papers which made up most of the early Web — many of the latter inevitably dealing with the vagaries of HTTP and HTML themselves — Berners-Lee remembers one site that stood out for being something else entirely, for being a harbinger of the more expansive, humanist vision he had had for his World Wide Web almost from the start. It was a site about Rome during the Renaissance, built up from a traveling museum exhibition which had recently visited the American Library of Congress. Berners-Lee:

> On my first visit, I wandered to a music room. There was an explanation of the events that caused the composer Carpentras to present a decorated manuscript of his Lamentations of Jeremiah to Pope Clement VII. I clicked, and was glad I had a 21-inch colour screen: suddenly it was filled with a beautifully illustrated score, which I could gaze at more easily and in more detail than I could have done had I gone to the original exhibit at the Library of Congress.

If we could visit this site today, however, we would doubtless be struck by how weirdly textual it was for being a celebration of the Renaissance, one of the most excitingly visual ages in all of history. The reality is that it could hardly have been otherwise; the pages displayed by Berners-Lee’s NeXT browser and all of the others could not mix text with images at all. The best they could do was to present links to images, which, when clicked, would lead to a picture being downloaded and displayed in a separate window, as Berners-Lee describes above.

But already another man on the other side of the ocean was working on changing that — working, one might say, on the last pieces necessary to make a World Wide Web that we can immediately recognize today.

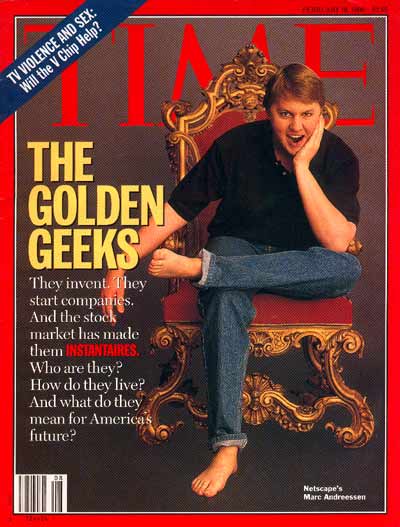

Marc Andreessen barefoot on the cover of Time magazine, creating the archetype of the dot-com entrepreneur/visionary/rock star.

Tim Berners-Lee was the last of the old guard of Internet pioneers. Steeped in an ethic of non-profit research for the abstract good of the human race, he never attempted to commercialize his work. Indeed, he has seemed in the decades since his masterstroke almost to willfully shirk the money and fame that some might say are rightfully his for putting the finishing touch on the greatest revolution in communications since the printing press, one which has bound the world together in a way that Samuel Morse and Alexander Graham Bell could never have dreamed of.

Marc Andreessen, by contrast, was the first of a new breed of business entrepreneurs who have dominated our discussions of the Internet from the mid-1990s until the present day. Yes, one can trace the cult of the tech-sector disruptor, “making the world a better place” and “moving fast and breaking things,” back to the dapper young Steve Jobs who introduced the Apple Macintosh to the world in January of 1984. But it was Andreessen and the flood of similar young men that followed him during the 1990s who well and truly embedded the archetype in our culture.

Before any of that, though, he was just a kid who decided to make a web browser of his own.

Andreessen first discovered the Web not long after Berners-Lee first made his tools and protocols publicly available. At the time, he was a twenty-year-old student at the University of Illinois at Urbana-Champaign who held a job on the side at the National Center for Supercomputing Applications, a research institute with close ties to the university. The name sounded very impressive, but he found the job itself to be dull as ditch water. His dissatisfaction came down to the same old split between the “giant brain” model of computing of folks like Marvin Minsky and the more humanist vision espoused in earlier years by people like J.C.R. Licklider. The NCSA was in pursuit of the former, but Andreessen was a firm adherent of the latter.

Bored out of his mind writing menial code for esoteric projects he couldn’t care less about, Andreessen spent a lot of time looking for more interesting things to do on the Internet. And so he stumbled across the fledgling World Wide Web. It didn’t look like much — just a screen full of text — but he immediately grasped its potential.

In fact, he judged, the Web’s not looking like much was a big part of its problem. Casting about for a way to snazz it up, he had the stroke of inspiration that would make him a multi-millionaire within three years. He decided to add a new tag to Berners-Lee’s HTML specification: “<img>,” for “image.” By using it, one would be able to show pictures inline with text. It could make the Web an entirely different sort of place, a wonderland of colorful visuals to go along with its textual content.

As conceptual leaps go, this one really wasn't that audacious. The biggest buzzword in consumer computing in recent years, bigger even than hypertext, had been "multimedia," a catch-all term for exactly this sort of digital mixing of content types. Such mixing had been growing steadily more practical as the audiovisual capabilities of personal computers improved in the years since those primitive early days of the trinity of 1977. Hypertext and multimedia had actually been sharing many of the same digs for quite some time. The HyperCard authoring system, for example, boasted capabilities much like those which Andreessen now wished to add to HTML, and the Voyager CD-ROMs already existed as compelling case studies in the potential of interactive multimedia hypertext in a non-networked context.

Still, someone had to be the first to put two and two together, and that someone was Marc Andreessen. An only moderately accomplished programmer himself, he convinced a much better one, another NCSA employee named Eric Bina, to help him create his new browser. The pair fell into roles vaguely reminiscent of those of Steve Jobs and Steve Wozniak during the early days of Apple Computer: Andreessen set the agenda and came up with the big ideas — many of them derived from tireless trawling of the Usenet newsgroups to find out what people didn’t like about the current browsers — and Bina turned his ideas into reality. Andreessen’s relentless focus on the end-user experience led to other important innovations beyond inline images, such as the “forward,” “back,” and “refresh” buttons that remain so ubiquitous in the browsers of today. The higher-ups at NCSA eventually agreed to allow Andreessen to brand his browser as a quasi-official product of their institute; on an Internet still dominated by academics, such an imprimatur was sure to be a useful aid. In January of 1993, the browser known as Mosaic — the name seemed an apt metaphor for the colorful multimedia pages it could display — went up on NCSA’s own servers. After that, “it spread like a virus,” in the words of Andreessen himself.

The slick new browser and its almost aggressively ambitious young inventor soon came to the attention of Tim Berners-Lee. He calls Andreessen “a total contrast to any of the other [browser] developers. Marc was not so much interested in just making the program work as in having his browser used by as many people as possible.” But, lest he sound uncharitable toward his populist counterpart, he hastens to add that “that was, of course, what the Web needed.” Berners-Lee made the Web; the garrulous Andreessen brought it to the masses in a way the self-effacing Briton could arguably never have managed on his own.

About six months after Mosaic hit the Internet, Tim Berners-Lee came to visit its inventor. Their meeting brought with it the first palpable signs of the tension that would surround the World Wide Web and the Internet as a whole almost from that point forward. It was the tension between non-profit idealism and the urge to commercialize, to brand, and finally to control. Even before the meeting, Berners-Lee had begun to feel disturbed by the press coverage Mosaic was receiving, helped along by the public-relations arm of NCSA itself: “The focus was on Mosaic, as if it were the Web. There was little mention of other browsers, or even the rest of the world’s effort to create servers. The media, which didn’t take the time to investigate deeper, started to portray Mosaic as if it were equivalent to the Web.” Now, at the meeting, he was taken aback by an atmosphere that smacked more of a business negotiation than a friendly intellectual exchange, even as he wasn’t sure what exactly was being negotiated. “Marc gave the impression that he thought of this meeting as a poker game,” Berners-Lee remembers.

Andreessen’s recollections of the meeting are less nuanced. Berners-Lee, he claims, “bawled me out for adding images to the thing.” Andreessen:

Academics in computer science are so often out to solve these obscure research problems. The universities may force it upon them, but they aren’t always motivated to just do something that people want to use. And that’s definitely the sense that we always had of CERN. And I don’t want to mis-characterize them, but whenever we dealt with them, they were much more interested in the Web from a research point of view rather than a practical point of view. And so it was no big deal to them to do a NeXT browser, even though nobody would ever use it. The concept of adding an image just for the sake of adding an image didn’t make sense [to them], whereas to us, it made sense because, let’s face it, they made pages look cool.

The first version of Mosaic ran only under the X Window System, but, as the above would indicate, Andreessen had never intended for that to be the case for long. He recruited more programmers to write ports for the Macintosh and, most importantly of all, for Microsoft Windows, whose market share of consumer computing in the United States was crossing the threshold of 90 percent. When the Windows version of Mosaic went online in September of 1993, it motivated hundreds of thousands of computer owners to engage with the Internet for the first time; the Internet to them effectively was Mosaic, just as Berners-Lee had feared would come to pass.

The Mosaic browser. It may not look like much today, but its ability to display inline images was a game-changer.

At this time, Microsoft Windows didn’t even include a TCP/IP stack, the software layer that could make a machine into a full-fledged denizen of the Internet, with its own IP address and all the trimmings. In the brief span of time before Microsoft remedied that situation, a doughty Australian entrepreneur named Peter Tattam provided an add-on TCP/IP stack, which he distributed as shareware. Meanwhile other entrepreneurs scrambled to set up Internet service providers to provide the unwashed masses with an on-ramp to the Web — no university enrollment required! — and the shelves of computer stores filled up with all-in-one Internet kits that were designed to make the whole process as painless as possible.

The unabashed elitists who had been on the Internet for years scorned the newcomers, but there was nothing they could do to stop the invasion, which stormed their ivory towers with overwhelming force. Between December of 1993 and December of 1994, the total amount of Web traffic jumped by a factor of eight. By the latter date, there were more than 10,000 separate sites on the Web, thanks to people all over the world who had rolled up their sleeves and learned HTML so that they could get their own idiosyncratic messages out to anyone who cared to read them. If some (most?) of the sites they created were thoroughly frivolous, well, that was part of the charm of the thing. The World Wide Web was the greatest leveler in the history of media; it enabled anyone to become an author and a publisher rolled into one, no matter how rich or poor, talented or talentless. The traditional gatekeepers of mass media have been trying to figure out how to respond ever since.

Marc Andreessen himself abandoned the browser that did so much to make all this happen before it celebrated its first birthday. He graduated from university in December of 1993, and, annoyed by the growing tendency of his bosses at NCSA to take credit for his creation, he decamped for (where else?) Silicon Valley. There he bumped into Jim Clark, a legend in the Valley, who had founded Silicon Graphics twelve years earlier and turned it into the go-to maker of the workstations behind the film industry's digital special effects. Feeling hamstrung by Silicon Graphics's increasing bureaucracy as it settled into corporate middle age, Clark had recently left the company, leading to much speculation about what he would do next. The answer came on April 4, 1994, when he and Marc Andreessen founded Mosaic Communications in order to build a browser even better than the one the latter had built at NCSA. The dot-com boom had begun.

(Sources: the books A Brief History of the Future: The Origins of the Internet by John Naughton, From Gutenberg to the Internet: A Sourcebook on the History of Information Technology edited by Jeremy M. Norman, A History of Modern Computing (2nd ed.) by Paul E. Ceruzzi, Communication Networks: A Concise Introduction by Jean Walrand and Shyam Parekh, Weaving the Web by Tim Berners-Lee, How the Web Was Born by James Gillies and Robert Cailliau, and Architects of the Web by Robert H. Reid. InfoWorld of August 24 1987, September 7 1987, April 25 1988, November 28 1988, January 9 1989, October 23 1989, and February 4 1991; Computer Gaming World of May 1993.)

Footnotes

1. When he first stated his law in 1965, Moore actually proposed a doubling every single year, but in 1975 he revised his calculations to a doubling every two years.

Ishkur

May 20, 2022 at 5:09 pm

The nerd/hacker/researcher/hobbyist culture of the early (pre-90s) Internet was certainly its own thing. Usenet back then had rules, and decorum, and etiquette (netiquette) that everyone seemed to abide by, adopted through osmosis by each newcomer over time. The culture remained firm as long as the flow of newcomers wasn't too overwhelming.

But there was always a period where there would be a rush of newcomers, too many of them to assimilate into the culture immediately, and they would violate the semi-harmonious unwritten rules and decorum for a month or so before they settled down. This usually happened every September, as that was when universities welcomed a new class of freshmen with on-campus access to the Internet for the first time.

In this “September newbie” period Usenet was often rude, chaotic, and full of low quality signal-to-noise posts (not to mention spam), and by the 90s the old guard of Internet regulars noticed it getting longer and more obnoxious, often extending into October and November.

And then in 1993, the “September newbie” era extended well into 1994. And then AOL came out with their free dial-up package, extending it into 1995. And 1996 and beyond. The old “science & research” Internet was quickly swept aside in favor of commercial exploitation.

In Internet lore September 1993 is famously known as the Eternal September: The September that never ended.

Andrew Plotkin

May 20, 2022 at 5:46 pm

This is where I show up, as part of Usenet’s (literal) freshman class of ’88. But from my point of view it wasn’t a *science and research* culture. It was a wild, fermenting discussion group about SF, fantasy, philosophy, math, sex, the SCA, and everything else that (primarily) American college students were obsessed with.

The AOL invasion wasn’t specifically about commercial exploitation, either. (Although it was the same era as the infamous Usenet green-card spam.) It was… just a cultural clash between an existing tribe of people talking about All That and a larger, incoming tribe. The tone of the discussion shifted and everybody hated that for the usual tribal reasons.

Usenet’s actual failure was its simplistic moderation model. There was no middle ground between “free-for-all” and “fully moderated, every post must be approved before it goes out.” Both models were completely unscalable. As “the Internet” got more popular, one Usenet group after another either collapsed, got overrun by spam, or just ossified (by becoming intolerably unpleasant for newcomers).

I hung on in the IF newsgroups until 2010, out of sheer stubbornness, but really the tolerable discussion had switched to web forums much earlier.

Nate

May 21, 2022 at 6:04 am

It wasn’t just AOL that brought endless noise. It was the “IXers” (those using the easy setup Windows package of ix.netcom.com, which appeared earlier). Or the CompuServe or BIX, with their all-caps users. And I’m sure several before.

AOL was barely the final straw. I think Napster was actually the end of an era. People weren’t getting on the Internet to communicate, it was just for free stuff. You could run Napster, get free music, and never talk to anyone.

By that time, the Internet itself (the servers and routers I was fascinated by) was getting extremely homogeneous. Windows at the edge, Linux (and some Sun) at the center.

Yeechang Lee

May 20, 2022 at 7:24 pm

As one who was there, it’s hard to overstate how true this was. Email always seemed to be (to paraphrase Steve Jobs) a feature, not a product. Even if Usenet (intentionally) resembles SMTP email in appearance, protocol, and formatting, it always has been its own thing. As you wrote, it was very much what the Internet *was* to most users until WWW really started to take off. BBSs that advertised “Internet access” meant “access to Usenet”, not necessarily even Internet email.

It’s “X Window”; no hyphen, no plural. *Everyone* gets this wrong. (I won’t even get into the protocol’s idiosyncratic use of “server” versus “client”, but that’s irrelevant here.)

You’re missing a more important reason for choosing Geneva. It’s a Swiss city that is almost completely surrounded by France, so it’s easy (and politically beneficial) for CERN facilities, including the Large Hadron Collider, to sprawl across the border.

Patrick Morris Miller

May 20, 2022 at 7:33 pm

It’s “the X Window System”, or just plain “X”. X is the name and a window system is what it is.

Jimmy Maher

May 21, 2022 at 8:02 am

Thanks!

I remember setting up a Linux system from scratch back in the 1990s and trying to get X working. I remember having to export my IP address to the X “server.” Crazy what I used to do for fun. (Now I have no patience whatsoever for that sort of tinkering, and want my computers to Just Work.)

Keith Palmer

May 20, 2022 at 10:17 pm

I’m certain I only noticed it years after the fact, but the November 1985 issue of IEEE Spectrum (my father had a subscription to it) contains a column from Robert W. Lucky where he discusses Usenet (without any explanation of how to connect yourself to it; I suppose IEEE members were thought smart enough to figure it out for themselves), frequently calling it just “the net.” This was back when all the newsgroups were named net.topic (Lucky mentions net.consumers, net.philosophy, net.movies, net.movies.sw (“to discuss the Star Wars saga”), and net.flame). He closed with “I am fascinated by the question of whether a new form of social interaction is in the making, or whether the phenomenon is just passing through an interesting, useful phase on its way to oblivion as thousands of new subscribers increase the noise and junk level until nothing but echoes of what-might-have-been remain.”

As for myself, I think the first time I really started to become aware of and intrigued by the Internet was around 1993, when a text file titled “Big Dummy’s Guide to the Internet” showed up on a “software of the month” disk we subscribed to. Usenet did seem “the killer app” then, but it wasn’t until late 1994 when, visiting the big city, I was able to dial into a “freenet” and dump newsgroup posts into text files for later perusal. I was given a book about Mosaic for Christmas in 1994, but there weren’t any Internet Service Providers in my small town then; I had to wait until the summer of 1995 for the first of them to start up (I managed to finagle my way into volunteering to see if Macintosh computers could be connected to their systems), and by that point I’d been supplied with a browser called “MacWeb” via Adam Engst’s “Internet Starter Kit for Macintosh” and Netscape was there to be noticed. I do still have the book about Mosaic, though.

Yeechang Lee

May 21, 2022 at 1:14 am

Simultaneously, Jerry Pournelle occasionally mentioned having net access (through MIT) in his BYTE columns, although I think he switched to BIX, sponsored by the magazine, once that got going. He mentions receiving a public key-encrypted message.

Pournelle on why he wanted a kill file for BIX:

plus ça change, plus c'est la même chose (the more things change, the more they stay the same)

stepped pyramids

May 21, 2022 at 8:11 pm

Pournelle lost his access via MIT at least in part because he kept on mentioning it in Byte, and the admins were worried that someone in power might start asking questions about why a science fiction author had free access to the ARPAnet. There was a little drama about whether it might have been politically motivated (and it clearly was also in part because the admins thought he was an ass). Fun bit of ancient internet drama, honestly pretty much exactly like modern internet drama:

https://www.bradford-delong.com/2013/07/how-jerry-pournelle-got-kicked-off-the-arpanet.html

Yeechang Lee

May 22, 2022 at 3:23 am

I didn’t want to get into that here. But since you brought it up …

I’ve read every issue of BYTE during its first 13 years, including Pournelle’s columns over this period. He in no way flaunts his net access; he occasionally mentions it as a matter-of-fact thing, and much less often than (say) discussing his constantly receiving tons of mail delivering new products that companies hope he mentions in writing.

It’s pretty obvious in retrospect that Pournelle really was kicked because of his political beliefs. As Dean says in the comments to DeLong’s post, ARPANET a) had unofficial users since pretty much the beginning, and b) by 1985 wasn’t really ARPANET anymore. I mean, good grief, one person cites how Pournelle dares to criticize some MIT Lisp project! Talk about overreaching in order to avoid the real issue he has with Pournelle (or anyone like him) having an account.

There’s no reasonable way for Chris Stacy (who has never hidden his own politics, which are very different from Pournelle’s) or anyone else to fear that because Pournelle mentions having access to some computer, that hordes of the unwashed would rush to MIT demanding their own account, any more than expecting hordes to demand of the Sri Lankan Air Force a personal helicopter to travel around the island (which is what Pournelle received when he visited Arthur C. Clarke, as mentioned in another column). Note that Stacy a) never denies calling Pournelle a fascist, and b) tries to avoid further public discussion of the subject.

And, yes, if anyone had the pull to get “ARPANET” to get his own account back, it would be Pournelle. He was, indeed, very tied into the SDI program at this time. That he didn’t is, I presume, some combination of a) not wanting to go to the trouble, b) not wanting to go where one does not feel wanted, c) ARPANET no longer being “ARPA”net, and d) BIX by this time up and running (and quite possibly with more traffic than the net).

Nate

May 21, 2022 at 6:06 am

Hey Keith, you didn’t happen to be in rural California did you? I started an ISP in summer 1995 that was the first one in the area that wasn’t long distance.

Keith Palmer

May 21, 2022 at 11:49 am

I actually lived on the Lake Huron shore of Ontario (near a power plant, which might have made for some concentration of “technical types.”) Maybe that’s one more reminder I shouldn’t feel “those who connected to the Internet in 1994 were somehow sharper than those who did it in 1995, and for that matter computer-magazine columns about it were starting to become obvious in 1993…” One thing I didn’t mention before was having noticed (through very recent computer-magazine skimming) a brief mention of the Internet in an Apple II magazine from 1990, although I guess I don’t skim BYTE well enough to have noticed its brief mentions.

Nate

May 21, 2022 at 6:56 pm

Neat, a parallel timeline. Yeah we started an ISP in summer 1995 when there were none in the area. The office was a converted house with fish tanks everywhere. We had to pay to get a T1 line dug out to the country. The workers kept wondering why.

Started with 10 modems and it became the hub of town business activity. User groups were teaching people how to make web pages. It was a wonderful time of change.

Peter Olausson

May 20, 2022 at 10:55 pm

“Peter Tattam made a small fortune” – I really hope he did, but here’s an article which disagrees:

https://www.vice.com/en/article/bmv3z3/the-story-of-shareware-the-original-in-app-purchase

My first experience of the web was with NCSA Mosaic (which must have been pretty new by then) on a Sun workstation with X11. But WWW wasn’t the first attempt to make the net more user friendly. One that might be worth mentioning was Gopher, essentially FTP with an interface. When the web came along, it declined pretty quickly.

And as for the WWW abbreviation, here’s a Douglas Adams quote: “The World Wide Web is the only thing I know of whose shortened form takes three times longer to say than what it’s short for.”

Jimmy Maher

May 21, 2022 at 7:58 am

I’m sorry to see that. I’d assumed that Tattam must have done very well indeed, given the quantity of deals he had with ISPs, bookstore Internet kits, etc.

I am aware of Gopher, as well as text-only Web solutions like Lynx. It’s always difficult to decide what to put in and what to take out. In this case, I’m afraid it would be a digression that interrupts the flow of the narrative a bit too much.

Nate

May 21, 2022 at 6:57 pm

You can send a donation here.

https://news.ycombinator.com/item?id=2282875

Andrew Pam

May 23, 2022 at 9:00 am

There was definitely a transitional period; when I first set up our site Glass Wings in 1993, I used the “gn” server which offered the same content as both Gopher and Web (HTML/HTTP).

Alan Estenson

May 23, 2022 at 6:54 pm

Wow, I hadn’t thought of Gopher in decades. In 1991-1992, I was an undergraduate at the University of Minnesota with a part-time job working in the campus microcomputer labs. (aside, where another “labbie” introduced me to Usenet…) I can recall going to the Shepherd Labs building on campus for an informal introduction to Gopher from one of the developers. They wanted us to know enough about it to assist lab users with questions. A year or so later, I typed “mosaic” for the first time at the command prompt on a Sun workstation.

Jim Nelson

May 26, 2022 at 11:57 pm

I recall just prior to the Web explosion (but during the multimedia boom) reading an article that asked whether hypertext would ever come into its own. The article pointed out that the largest distributor of a (non-networked) hypertext viewer was Microsoft in the form of WINHELP.EXE. Hypertext was one of those concepts swirling around the PC revolution for years, but no one could seem to figure out the killer app that would cement it into place, like mobile/pen computing, or, heck, UNIX during the 1980s.

I’m surprised Ted Nelson (no relation) was not mentioned. My experience has been that most people uninformed about computer history are taken aback when they learn the underpinnings of the Web date back to the early 1960s, even if it was not fully realized back then. (Ted Nelson would say the WWW still hasn’t realized his vision, but that’s a longer topic.)

declain

May 27, 2022 at 10:14 am

Ted Nelson was mentioned earlier in one of the previous articles.

https://www.filfre.net/2016/09/the-freedom-to-associate/

Leo Vellés

May 21, 2022 at 12:31 pm

“At any rate, it was left to independent tinkerers who who had been dreaming of owning a computer of their own for years..”.

A double “who” there Jimmy.

Jimmy Maher

May 21, 2022 at 1:06 pm

Thanks!

Leo Vellés

May 21, 2022 at 2:12 pm

And a double “the” here:

“some might say are rightfully his for putting the finishing touch on the greatest revolution in communications since the the printing press…”

Jimmy Maher

May 21, 2022 at 2:58 pm

Thanks!

Joe Latshaw

May 21, 2022 at 5:38 pm

“dull as ditch water”

I believe the phrase is “dull as dishwater,” as dishwater is typically milky-gray and what’s in it is known, while ditch water could be full of just about *anything* – much of it pretty gross, which, while unpleasant, isn’t boring!

I’m loving this series of articles as it’s intersecting with my teen years and my relatively early introduction to the internet. My father had Compuserve and AOL, but when he brought home Mosaic on his work laptop in about 1993-1994, I was fascinated. I very quickly went looking for sites like Ford.com and CocaCola.com (there were no search engines yet, really) and was shocked that such sites didn’t exist. I very quickly suggested we purchase those URLs as they surely would soon come into demand. My parents weren’t keen on spending what was, at the time, a pretty high price for web addresses based on some hare-brained idea from their 12 or 13 year old kid. We could have been the first cybersquatters as they eventually became known, and unlike others, I’d have been happy to sell them to their eventual owners for something like $50,000 a pop. It would have paid for a better college than I eventually went to at least.

Part of me is bummed that I was in rural Pennsylvania rather than Silicon Valley and I wouldn’t have even known who to show my nascent HTML site building skills, but then it was mostly my own fault for specifically NOT going into a computer related field in college, having gotten bored with it all by 1999 or so.

I never ended up an internet millionaire, but then I was far too immature at that age to deal with success like that. My much more modest career in healthcare IT and the life it’s given me really is enough and came when I was mature enough for it. Well, mostly enough, anyway. :) But I do really have this era of innovation to thank for the influence it had on me. Many thanks for these articles, Jimmy!

Jimmy Maher

May 21, 2022 at 7:17 pm

The original idiom was actually “ditch water.” But it is often written as “dishwater” today. Neither version can be called incorrect in contemporary usage, but I’m something of a classicist, and tend to be old school about such things. ;)

A life in healthcare is certainly nothing to look down upon. My wife is an oncologist, and I suspect that she does more real good in the world in a month than I do in a year.

Alex Smith

May 22, 2022 at 1:31 am

As you say, it was “ditch” before “dish,” however near as I can tell, it should be “ditchwater” and not “ditch water.”

Jimmy Maher

May 22, 2022 at 7:53 am

To compound a word or not is always a tricky question. ("Video game" or "videogame"?) I usually compound words that have become a perennial combination in everyday discourse. In the 21st century at least, "ditch" and "water" don't quite qualify. ;)

Alex Smith

May 23, 2022 at 3:26 am

Yes, but while various dictionaries disagree on “video game” versus “videogame” since the term is still so new (and bound to eventually go to one word if history is any guide), ditchwater seems to be the universal spelling everywhere from OED, to Merriam-Webster, Collins, Macmillan, Cambridge, and Britannica. The future of world language hardly hinges on your compounding preferences, but as a language pedant, I do feel compelled to point out you may be on the wrong side of history for this one. =p

Jimmy Maher

May 23, 2022 at 6:06 am

Okay, have it your way. ;)

mycophobia

May 22, 2022 at 4:37 pm

‘first in the form of fevered textual fantasies, than in the somehow even more quaint form of “ASCII art,’

“then” not “than”?

Jimmy Maher

May 22, 2022 at 8:00 pm

Yes. Thanks!

Charles

May 27, 2022 at 6:56 am

“… Soon anyone with a computer, a modem, and a valid credit card…”

When CompuServe was young, it was not actually necessary to have a credit card; they were perfectly happy to bill you like any other metered utility, and in my case I would receive an accounting of minutes in the usual fashion (via snail mail). Following that, I walked to the savings and loan, drew a money order from my passbook savings, and sent it to them to settle the account.

Jimmy Maher

May 30, 2022 at 4:44 am

I didn’t realize that. Thanks!

Doug Orleans

June 17, 2022 at 11:26 pm

I was a senior at UC Berkeley in 1992 and hung out at the eXperimental Computing Facility, a workstation lab for undergrads to work on independent projects. One day a quiet guy named Pei Wei showed me what he’d been working on: ViolaWWW. At the time I didn’t really understand what was cool about it, but it turns out to have been the first ever graphical web browser. I guess it was the Betamax to Mosaic’s VHS.

nicolas woollaston

July 9, 2023 at 11:39 pm

when i was a kid in a small town the library seemed a big place with a lot of books, but i soon found that a specific line of enquiry on a specialist subject would often turn up so little that it could be easily read in its entirety so i became accustomed to reading everything i could about whatever was my interest at the time. As a young adult, my first encounters with usenet slammed me hard with the realisation that reading everything was no longer a productive use of my time. And then when the web really took off, reading everything, even on a specific subject, was no longer physically possible