Writing about Ultima earlier, I described that game as the first to really feel like a CRPG as we would come to know the genre over the rest of the 1980s. Yet now I find myself wanting to say the same thing about Wizardry, which was released just a few months after Ultima. That’s because these two games stand as the archetypes for two broad approaches to the CRPG that would mark the genre over the next decade and, arguably, right up to the present. The Ultima approach emphasizes the fictional context: exploration, discovery, setting, and, eventually, story. Combat, although never far from center stage, is relatively deemphasized, at least in comparison with the Wizardry approach, which focuses above all else on the process of adventuring. Like their forefather, Wizardry-inspired games often take place in a single dungeon, seldom feature more than the stub of a story, and largely replace the charms of exploration, discovery, and setting with those of tactics and strategy. The Ultima strand is often mechanically a bit loose, or more than a bit if we take Ultima itself as an example, with its hit points as a purchasable commodity and its character level as a function of time served. The Wizardry strand is largely about its mechanics, so it had better get them right. (As I wrote in my last post about Wizardry, Richard Garriott refined and balanced Ultima by playing it a bit himself and soliciting the opinions of a few buddies; Andrew Greenberg and Robert Woodhead put Wizardry through rigorous balancing and playtesting that consumed almost a year.) This split parallels the two dueling conceptions of tabletop Dungeons and Dragons: as a system for interactive storytelling enjoyed by “artful thespians,” or as a single-unit tactical wargame.

Wizardry, then, isn’t much concerned with niceties of setting or story. The manual, unusually lengthy and professional as it is, says nothing about where we are or just why we choose to spend our time delving deeper and deeper into the game’s 10-level dungeon. If a dungeon exists in a fantasy world, it must be delved, right? That’s simply a matter of faith. Only on the 4th level of the dungeon, after we fight our way through a gauntlet of monsters into a special room, do we learn the real purpose of it all:

CONGRATULATIONS, MY LOYAL AND WORTHY SUBJECTS. TODAY YOU HAVE SERVED ME WELL AND TRULY PROVEN YOURSELF WORTHY OF THE QUEST YOU ARE NOW TO UNDERTAKE. SEVERAL YEARS AGO, AN AMULET WAS STOLEN FROM THE TREASURY BY AN EVIL WIZARD WHO IS PURPORTED TO BE IN THE DUNGEON IMMEDIATELY BELOW WHERE YOU NOW STAND. THIS AMULET HAS POWERS WHICH WE ARE NOW IN DIRE NEED OF. IT IS YOUR QUEST TO FIND THIS AMULET AND RETRIEVE IT FROM THIS WIZARD. IN RECOGNITION OF YOUR GREAT DEEDS TODAY, I WILL GIVE YOU A BLUE RIBBON, WHICH MAY BE USED TO ACCESS THE LEVEL TRANSPORTER [otherwise known as an “elevator”] ON THIS FLOOR. WITHOUT IT, THE PARTY WOULD BE UNABLE TO ENTER THE ROOM IN WHICH IT LIES. GO NOW, AND GOD SPEED IN YOUR QUEST!

And that’s the last we hear about that, until we make it to the 10th dungeon level and the climax.

What Wizardry lacks in fictional context, it makes up for in mechanical depth. Nothing that predates it on microcomputers offers a shadow of its complexity. Like Ultima, Wizardry features the standard, archetypical D&D attributes, races, and classes, renamed a bit here and there for protection from Mr. Gygax’s legal team. Wizardry, however, lets us build a proper adventuring party of up to six members in lieu of Ultima’s single adventurer, with all the added tactical possibilities that managing a team implies. Also on offer are four special classes in addition to the basic four, to which characters can switch once they become skilled enough at their basic professions. (In other words, Wizardry is already offering what the kids today call “prestige classes.”) Most impressive of all is the aspect that gave Wizardry its name: priests eventually have 29 separate spells to call upon and mages 21, each school divided into 7 spell levels to be learned slowly as the character advances. Ultima’s handful of purchasable scrolls, which had previously marked the state of the art in CRPG magic systems, pales in comparison. Most of Wizardry’s depth arises one way or another from its magic system. It’s not just a matter of learning which spells are most effective against which monsters, but also of husbanding one’s magic resources: deciding when one’s spell casters are depleted enough that it’s time to leave the dungeon, deciding whether the powerful spell is good enough against that demon or whether it’s time to use the really powerful one, etc. It’s been said that a good game is one that confronts players with interesting, non-obvious (read: difficult) decisions. By that metric, magic is largely what makes Wizardry a good game.

Of course, Wizardry’s mechanics, from its selection of classes and races to its attribute scores that max out at 18 to its armor-class score that starts at 10 and counts downward as it improves, for no apparent reason, are steeped in D&D. There’s even a suggestion in the manual that one could play Wizardry with one’s D&D group, each player controlling a single character (not that that sounds very compelling or practical). The game also tries, not very successfully, to shoehorn in D&D’s mechanic of alignment, a silly concept even on the tabletop. On the computer, good, evil, and neutral are just a set of arbitrary restrictions: good and evil characters cannot be in the same party, and thieves cannot be good.

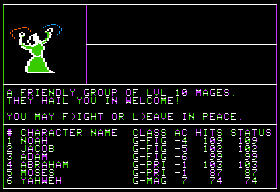

Sometimes you meet “friendly” monsters in the dungeon. If good characters kill them anyway, or evil characters let them go, there’s a chance that their alignments will change, which can in turn play obvious havoc with party composition. (In an amusing example of unintended emergent behavior, it’s also possible for the “evil” mage at the end of the game to be… friendly. Now doesn’t that present a dilemma for a “good” adventurer, particularly since not killing him means not getting the amulet that the party needs to get out of his lair?)

So, Greenberg and Woodhead were to some extent just porting to the computer an experience that had already proven compelling as hell to many players, albeit doing a much more complete job of it than anyone had managed before. But there’s also much that’s original here. Indeed, so much that would become standard in later CRPGs has its origin here that it’s hard to know where to begin. Wizardry is almost comparable to Adventure in defining a whole mode of play that would persist for many years and countless games. For those few of you who haven’t played an early Wizardry game, or one of its spiritual successors (read: slavish imitators) like The Bard’s Tale or Might and Magic, I’ll take you on a brief guided tour of a few highlights. Sorry about my blasphemous adventurer names; I’ve been reading the Old Testament lately, and it seems I got somewhat carried away.

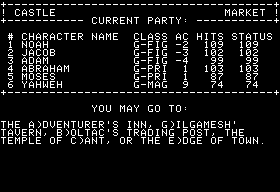

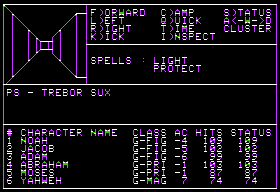

Wizardry is divided into two sections: the castle (shown below), where we do all of the housekeeping chores like making characters, leveling up, putting together our party, shopping for equipment, etc.; and the dungeon, where the meat of the game takes place.

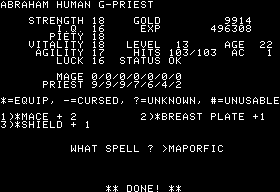

When we enter the dungeon, we start in “camp.” We are free to camp again at any time in the dungeon, as long as we aren’t in the middle of a fight. Camping gives us an opportunity to tinker with our characters and the party as a whole without needing to worry about monsters. We can also cast spells. Here I’ve just cast MAPORFIC, a very useful spell that lowers the armor class of the entire party by two (lower being better, remember) for the duration of our stay in the dungeon. All spells have similar made-up names; casting one requires looking it up in the manual and typing in its name.

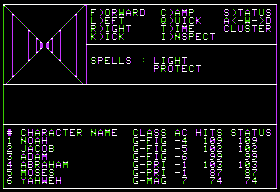

Once we leave camp, we’re greeted with the standard traveling view: a first-person wireframe 3D view of our surroundings occupies the top left, with the rest of the screen given over to textual status information and a command menu that’s really rather wasteful of screen space. (I suspect Greenberg and Woodhead use the menu because it fills space with something static that they don’t have to spend computing resources updating.)

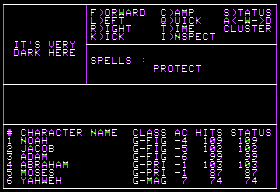

I was just saying that Wizardry manages to be its own thing, separate from D&D. That becomes clear when we consider the player’s biggest challenge: mapping. It’s absolutely essential that she keep a meticulous map of her explorations. Getting lost and not knowing how to return to the stairs or elevator is almost invariably fatal. While tabletop D&D players are often also expected to keep rough maps of their journeys, few dungeon masters are as unforgiving as Wizardry. In addition to all the challenges of keeping track of lots of samey-looking corridors and rooms, the game soon begins to throw other mapping challenges at the player: teleporters that suddenly throw the party somewhere else entirely; spinners that turn the party in place so quickly that it’s easy not to notice; passages that wrap around from one side of the dungeon to the other; dark areas that force one to map by trial and error, literally by bashing one’s head against the walls.

On the player’s side are an essential mage spell, DUMAPIC, that tells her exactly where she is in relation to the bottom-left corner of the dungeon level; and the knowledge that all dungeon levels are exactly 20 spaces by 20 spaces in size. Mapping is such a key part of Wizardry that Sir-tech even provided a special pad of graph paper for the purpose in the box, sized 20 X 20.

The need to map for yourself is easily the most immediately off-putting aspect of a game like Wizardry for a modern player. While games before Wizardry certainly had dungeons, it was the first to really require such methodical mapping. The dungeons in Akalabeth and Ultima, for instance, contain nothing but randomized monsters to fight and randomized treasure. The general approach in those games is to use “Ladder Down” spells to quickly descend to a level with monsters of about the right strength for one’s character, to wander around at random fighting monsters until satisfied and/or exhausted, then to use “Ladder Up” spells to make an escape. There’s nothing unique to be found down there. Wizardry changed all that; its dungeon levels may be 99% empty rooms, corridors, and randomized monster encounters, but there’s just enough unique content to make exploring and mapping every nook and cranny feel essential. If that’s not motivation enough, there’s also the lack of any magical equivalent to “Ladder Up” and “Ladder Down” until one’s mage has reached level 13 or higher. Map-making is essential to survival in Wizardry, and for many years to follow laborious map-making would be a standard part of the CRPG experience. It’s an odd thing: I have little patience for mazes in text adventures, yet I find something almost soothing about slowly building up a picture of a Wizardry dungeon on graph paper. Your mileage, inevitably, will vary.

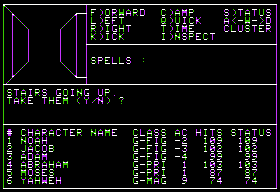

In general Wizardry is all too happy to kill you, but it does offer some kindnesses here and there in addition to DUMAPIC and dungeon levels guaranteed to be 20 X 20 spaces. These proving grounds are, for example, one of the few fantasy dungeons to be equipped with a system of elevators. They let us bypass most of the levels to quickly get to the one we want. Here we’re about to go from level 1 to level 4.

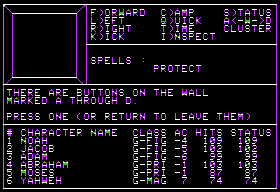

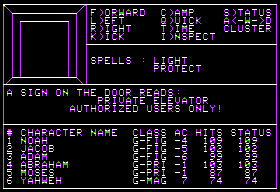

From level 4 we can take another elevator all the way down to level 9. But, as you can see below, entering that second elevator is allowed for “authorized users only.”

Wizardry doesn’t have the ability to save the state of its world at all. Only characters can be saved, and only from the castle. Each dungeon level is reset entirely the moment we enter it again (or, more accurately, reset when we leave it, when it gets dumped from memory to be replaced by whatever comes next). Amongst other things, this makes it possible to kill Werdna, the evil mage of level 10, and thus “win the game” over and over again. One way the game works around this state of affairs is through checks like the one illustrated above. We can only enter the second elevator if we have the blue ribbon, and we can only get that from the fellow who enlisted our services in another part of level 4 (see the quotation above). By tying progress through the plot (such as it is) to objects in this way, Greenberg and Woodhead manage to preserve at least a semblance of game state. The blue ribbon is of course an object that we carry around with us, and so it is preserved when we save our characters back at the castle. It therefore gives the game a way of “knowing” whether we’ve completed the first stage of our quest, and thus whether it should allow us into the lower levels. It’s quite clever in its way, and, again, would become standard operating procedure in many other CRPGs for years to come. The mimesis breaker is that, just as we can kill Werdna over and over, we can also acquire an infinite number of these blue ribbons by reentering that special room on level 4 again and again.
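To make the trick concrete, here is a minimal sketch in Python of quest progress living in a character’s inventory rather than in any saved world state. It is purely illustrative and makes no claim to reproduce Wizardry’s actual code; the names `Character`, `RIBBON`, `visit_level4_special_room`, and `may_enter_second_elevator` are my own hypothetical inventions.

```python
from dataclasses import dataclass, field

RIBBON = "BLUE RIBBON"  # hypothetical item name, for illustration only


@dataclass
class Character:
    name: str
    # The inventory is part of the character record saved at the castle,
    # so it is the one thing that survives between trips into the dungeon.
    items: list[str] = field(default_factory=list)


def visit_level4_special_room(party: list[Character]) -> None:
    """Reward the party for clearing the gauntlet on level 4.

    The level is rebuilt from scratch each time it is loaded, so the room
    has no memory of earlier visits: every visit hands out another ribbon.
    """
    party[0].items.append(RIBBON)


def may_enter_second_elevator(party: list[Character]) -> bool:
    """Infer quest progress purely from what the party is carrying."""
    return any(RIBBON in member.items for member in party)


if __name__ == "__main__":
    party = [Character("Fighter"), Character("Mage")]
    print(may_enter_second_elevator(party))  # False: no ribbon yet
    visit_level4_special_room(party)
    print(may_enter_second_elevator(party))  # True: the ribbon unlocks it
    visit_level4_special_room(party)
    print(party[0].items.count(RIBBON))      # 2: the "mimesis breaker"
```

The gate never asks whether the quest happened, only whether a ribbon is present, which is exactly why a second (or tenth) ribbon is there for the taking.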

There’s a surprising amount of unique content in the first 4 levels: not only our quest-giver and the restricted elevator, but also some special rooms with their own atmospheric descriptions and a few other lock-and-key-style puzzles similar to, although less critical than, the second-elevator puzzle. In levels 5 through 9, however, such content is entirely absent; these levels hold nothing but empty corridors and rooms. I believe the reason is disk capacity. Wizardry shipped on two disks, but the first serves only to host the opening animation and some utilities. The game proper lives entirely on the second disk, as do all of the characters that players create. This disk is stuffed right to the gills, and probably could not have held any more text or “special” areas. Presumably Greenberg and Woodhead discovered this the hard way, after the first four levels had already been built with quite a bit of unique detail.

We start to see more unique content again only on level 10, the lair of Werdna himself. There’s this, for instance:

From context we can conclude that Trebor must be the quest giver that we met back on level 4. “Werdna” and “Trebor” are also, of course, “Andrew” and “Robert” spelled backward. Wizardry might like to describe itself using some pretty high-minded rhetoric sometimes and might sport a very serious-looking dragon on its box cover, but Greenberg and Woodhead weren’t above indulging in some silly fun in the game proper. When mapped, level 8 spells out Woodhead’s initials; ditto level 9 for Greenberg’s.

In the midst of all this exploration and mapping we’re fighting a steady stream of monsters. Some of these fights are trivial, but others are not, particularly as our characters advance in level and learn more magic, and the monsters we face grow more diverse, much more dangerous, and better equipped with special abilities of their own.

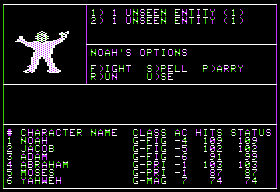

The screenshot above illustrates a pretty typical combat dilemma. In an extra little touch of cruelty that most of its successors would abandon, Wizardry often doesn’t tell us right away just what kind of monsters we’re facing. The “unseen entities” above could be Murphy’s ghosts, which are pretty much harmless, or nightstalkers, a downright sadistic addition that drains a level every time it successfully hits a character. (Exceeded in cruelty only by the vampire, which drains two levels.) So, we are left wondering whether we need to throw every piece of high-level magic we have at these things in the hope of killing them before they can attack, or whether we can take it easy and preserve our precious spells. As frustrating as it can be to waste one’s best spells, it usually pays to err on the side of caution in these situations; once characters reach level 9 or so, each experience level represents hours of grinding. Indeed, if there’s anything Wizardry teaches, it’s the value of caution.

I won’t belabor the details of play any more here, but will instead point you to the CRPG Addict’s posts on Wizardry for an entertaining description of the experience. Do note as you read, however, that he’s playing a somewhat later MS-DOS port of the Apple II original.

The Wizardry series today has the reputation of being the cruelest of all of the earlier CRPGs. That’s by no means unearned, but I’d still like to offer something of a defense of the Wizardry approach. In Dungeons and Desktops, Matt Barton states that “CRPGs teach players how to be good risk-takers and decision-makers, managers and leaders,” on the way to making the, shall we say, bold claim that CRPGs are “possibly the best learning tool ever designed.” I’m not going to touch the latter claim, but there is something to his earlier statements, at least in the context of an old-school game of Wizardry.

For all its legendary difficulty, Wizardry requires no deductive or inductive brilliance or leaps of logical (or illogical) reasoning. It rewards patience, a willingness to experiment and learn from mistakes, attention to detail, and a dedication to doing things the right way. It does you no favors, but simply lays out its world before you and lets you sink or swim as you will. Once you have a feel for the game and understand what it demands from you, it’s usually only in the moment that you get sloppy, the moment you start to take shortcuts, that you die. And dying here has consequences; it’s not possible to save inside the dungeon, and if your party is killed they are dead, immediately. Do-overs exist only in the sense that you may be able to build up another party and send it down to retrieve the bodies for resurrection. This approach probably owes at least as much to the technical restrictions Greenberg and Woodhead were dealing with (saving the state of a whole dungeon is complicated) as to deliberate design, but once enshrined it became one of Wizardry’s calling cards.

Now, this is very possibly not the sort of game you want to play. (Feel free to insert your “I play games to have fun, not to…” statements here.) Unlike some “hardcore” chest-thumpers you’ll meet elsewhere on the Internet, I don’t think that makes you any stupider, more immature, or less manly than me. Hell, often I don’t want to play this sort of game either. But, you know, sometimes I do.

My wife and I recently played through L.A. Noire, one of last year’s critical darlings. We were generally pretty disappointed with the experience. Leaving aside the sub-Law and Order plotting, the typically dodgy videogame writing, and the most uninteresting and unlikable hero I’ve seen in a long time, our prime source of frustration was that there was just no way to fuck this up. The player is reduced to stepping through an endless series of rote tasks on the way to the next cut scene. The story is hard-coded as a series of death-defying cliffhangers, with everything always happening at the last possible second in the most (melo-)dramatic way possible, and the game is quite happy to throw out everything you as the player have, you know, actually done to make sure it plays out that way. In the end, we were left feeling like bit players in someone else’s movie. Which might not have been too terrible, except it wasn’t even a very good movie.

In Wizardry, though, if you stagger out of the dungeon with two characters left alive with fewer than 10 hit points each, that experience is yours. It wasn’t scripted by a hack videogame writer; you own it. And if you slowly and methodically build up an ace party of characters, then take them down and stomp all over Werdna without any problems at all, there’s no need to bemoan the anticlimax. The satisfaction of a job well and thoroughly done is a reward of its own. After all, that’s pretty much how the good guys won World War II. To return to Barton’s thesis, it’s also the way you make a good life for yourself here in the real world; the people constantly scrambling out of metaphorical dungeons in the nick of time are usually not the happy and successful ones. If you’re in the right frame of mind, Wizardry, with its wireframe graphics and its 10 K or so of total text, can feel more immersive and compelling than L.A. Noire, with all its polygons and voice actors, because Wizardry steps back and lets you make your own way through its world. (It also, of course, lets you fuck it up. Oh, boy, does it let you fuck it up.)

That’s one way to look at it. But then sometimes you’re surprised by six arch-mages and three dragons who proceed to blast you with spells that destroy your whole 15th-level party before anyone has a chance to do a thing in response, and you wish someone had at least thought to make sure that sort of thing couldn’t happen. Ah, well, sometimes life is like that too. Wizardry, like reality, can be a cruel mistress.

I’m making the Apple II version and its manual available for you to download, in case you’d like to live (or relive) the experience for yourself. You’ll need to remove write permissions from the first disk image before you boot with it. As part of its copy protection, Wizardry checks to see if the disk is write protected, and refuses to start if not. (If you’re using an un-write-protected disk, it assumes you must be a nasty pirate.)

Next time I’ll finish up with Wizardry by looking at what Softline magazine called the “Wizardry phenomenon” that followed its release.