There was a demon in memory. They said whoever challenged him would lose. Their programs would lock up, their machines would crash, and all their data would disintegrate.

The demon lived at the hexadecimal memory address A0000, 655,360 in decimal, beyond which no more memory could be allocated. He lived behind a barrier beyond which they said no program could ever pass. They called it the 640 K barrier.

With my apologies to The Right Stuff… (Yes, that is quite possibly the nerdiest thing I’ve ever written.)

The idea that the original IBM PC, the machine that made personal computing safe for corporate America, was a hastily slapped-together stopgap has been vastly overstated by popular technology pundits over the decades since its debut back in August of 1981. Whatever the realities of budgets and scheduling with which its makers had to contend, there was a coherent philosophy behind most of the choices they made that went well beyond “throw this thing together as quickly as possible and get it out there before all these smaller companies corner the market for themselves.” As a design, the IBM PC favored robustness, longevity, and expandability, all qualities IBM had learned the value of through their many years of experience providing businesses and governments with big-iron solutions to their most important data-processing needs. To appreciate the wisdom of IBM’s approach, we need only consider that today, long after the likes of the Commodore Amiga and the original Apple Macintosh architecture, whose owners so loved to mock IBM’s unimaginative beige boxes, have passed into history, most of our laptop and desktop computers (including modern Macs) can trace the origins of their hardware back to what that little team of unlikely business-suited visionaries accomplished in an IBM branch office in Boca Raton, Florida.

But of course no visionary has 20-20 vision. For all the strengths of the IBM PC, there was one area where all the jeering by owners of sexier machines felt particularly well-earned. Here lay a crippling weakness, born not so much of the hardware found in that first IBM PC as the operating system the marketplace chose to run on it, that would continue to vex programmers and ordinary users for two decades, not finally fading away until Microsoft’s release of Windows XP in 2001 put to bed the last legacies of MS-DOS in mainstream computing. MS-DOS, dubbed the “quick and dirty” operating system during the early days of its development, is likely the piece of software in computing history with the most lopsided contrast between the total number of hours put into its development and the total number of hours it spent in use, on millions and millions of computers all over the world. The 640 K barrier, the demon all those users spent so much time and energy battling for so many years, was just one of the more prominent consequences of corporate America’s adoption of such a blunt instrument as MS-DOS as its standard. Today we’ll unpack the problem that was memory management under MS-DOS, and we’ll also examine the problem’s multifarious solutions, all of them to one degree or another ugly and imperfect.

The original IBM PC was built around an Intel 8088 microprocessor, a cost-reduced and somewhat crippled version of an earlier chip called the 8086. (IBM’s decision to use the 8088 instead of the 8086 would have huge importance for the expansion buses of this and future machines, but the differences between the two chips aren’t important for our purposes today.) Despite functioning as a 16-bit chip in most ways, the 8088 had a 20-bit address space, meaning it could address a maximum of 1 MB of memory. Let’s consider why this limitation should exist.

Memory, whether in your brain or in your computer, is of no use to you if you can’t keep track of where you’ve put things so that you can retrieve them again later. A computer’s memory is therefore indexed by bytes, with every single byte having its own unique address. These addresses, numbered from 0 to the upper limit of the processor’s address space, allow the computer to keep track of what is stored where. The biggest number that can be represented in 20 bits is 1,048,575; counting from address 0, that makes 1,048,576 distinct addresses, or exactly 1 MB. Thus this is the maximum amount of memory which the 8088, with its 20-bit address bus, can handle. Such a limitation hardly felt like a deal breaker to the engineers who created the IBM PC. Indeed, it’s difficult to overemphasize what a huge figure 1 MB really was when they released the machine in 1981, in which year the top-of-the-line Apple II had just 48 K of memory and plenty of other competing machines shipped with no more than 16 K.
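For those who like to check such arithmetic for themselves, here is a minimal Python sketch. The function name `address_space` is purely my own label for illustration; it simply counts how many addresses a given number of address bits can tell apart.

```python
def address_space(bits: int) -> tuple[int, int]:
    """Return (highest address, number of distinct addresses) for an address width."""
    count = 2 ** bits          # every bit pattern names one address
    return count - 1, count    # addresses run from 0 to count - 1

highest, count = address_space(20)   # the 8088's 20-bit address space
print(highest)                       # 1048575
print(count // 1024)                 # 1024 (K), i.e. 1 MB
```

The same function applied to other widths shows how quickly the ceiling rises with each extra address bit.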

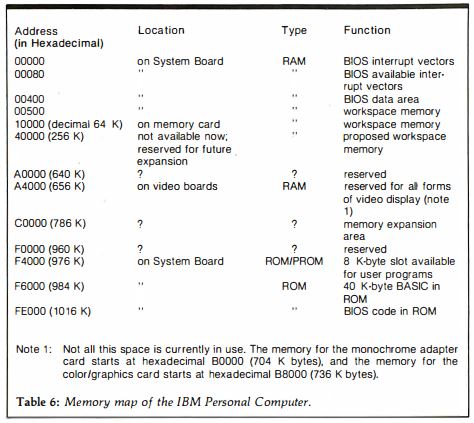

A processor needs to address other sorts of memory besides the pool of general-purpose RAM which is available for running applications. There’s also ROM memory — read-only memory, burned inviolably into chips — that contains essential low-level code needed for the computer to boot itself up, along with, in the case of the original IBM PC, an always-available implementation of the BASIC programming language. (The rarely used BASIC in ROM would be phased out of subsequent models.) And some areas of RAM as well are set aside from the general pool for special purposes, like the fully 128 K of addresses given to video cards to keep track of the onscreen display in the original IBM PC. All of these special types of memory must be accessed by the CPU, must be given their own unique addresses to facilitate that, and must thus be subtracted from the address space available to the general pool.

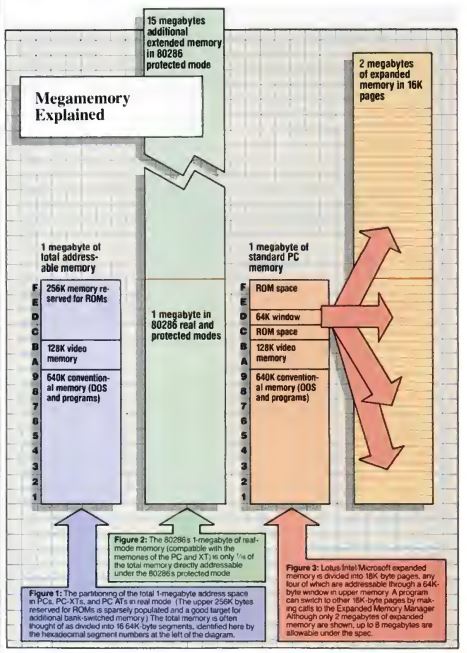

IBM’s engineers were quite generous in drawing the boundary between their general memory pool and the area of addresses allocated to special purposes. Focused on expandability and longevity as they were, they reserved big chunks of “special” memory for purposes that hadn’t even been imagined yet. In all, they reserved the upper three-eighths of the available addresses for specialized purposes actual or potential, leaving the lower five-eighths — 640 K — to the general pool. In time, this first 640 K of memory would become known as “conventional memory,” the remaining 384 K — some of which would be ROM rather than RAM — as “high memory.” The official memory map which IBM published upon the debut of the IBM PC looked like this:

It’s important to understand when looking at a memory map like this one that the existence of a logical address therein doesn’t necessarily mean that any physical memory is connected to that address in any given real machine. The first IBM PC, for instance, could be purchased with as little as 16 K of conventional memory installed, and even a top-of-the-line machine had just 256 K, leaving most of the conventional-memory space vacant. Similarly, early video cards used just 32 K or 64 K of the 128 K of address space offered to them in high memory. The 640 K barrier was thus only a theoretical limitation early on, one few early users or programmers ever even noticed.
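The five-eighths split itself is easy to verify. The following Python sketch uses constant names of my own invention and models only the boundary at A0000, not the detailed layout of the reserved region above it.

```python
K = 1024
TOTAL = 2 ** 20                 # the 8088's 1 MB address space
CONVENTIONAL_END = 0xA0000      # first address past conventional memory

conventional = CONVENTIONAL_END         # addresses 0x00000 to 0x9FFFF
reserved = TOTAL - CONVENTIONAL_END     # addresses 0xA0000 to 0xFFFFF

print(conventional // K, reserved // K)                    # 640 384
print(conventional * 8 // TOTAL, reserved * 8 // TOTAL)    # 5 3 (eighths)
```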

That blissful state of affairs, however, wouldn’t last very long. As IBM’s creations — joined, soon enough, by lots of clones — became the standard for American business, more and more advanced applications appeared, craving more and more memory alongside more and more processing power. Already by 1984 the 640 K barrier had gone from a theoretical to a very real limitation, and customers were beginning to demand that IBM do something about it. In response, IBM that year released the PC/AT, built around Intel’s new 80286 microprocessor, which boasted a 24-bit address space good for 16 MB of memory. To unlock all that potential extra memory, IBM made the commonsense decision to extend the memory map above the specialized high-memory area that ended at 1 MB, making all addresses beyond 1 MB a single pool of “extended memory” available for general use.

Problem solved, right? Well, no, not really — else this would be a much shorter article. Due more to software than hardware, all of this potential extended memory proved not to be of much use for the vast majority of people who bought PC/ATs. To understand why this should be, we need to examine the deadly embrace between the new processor and the old operating system people were still running on it.

The 80286 was designed to be much more than just a faster version of the old 8086/8088. Developing the chip before IBM PCs running MS-DOS had come to dominate business computing, Intel hadn’t allowed the need to stay compatible with that configuration to keep them from designing a next-generation chip that would help to take computing to where they saw it as wanting to go. Intel believed that microcomputers were at the stage at which the big institutional machines had been a couple of decades earlier, just about ready to break free of what computer scientist Brian L. Stuart calls the “Triangle of Ones”: one user running one program at a time on one machine. At the very least, Intel believed, the second leg of the Triangle must soon fall; everyone recognized that multitasking — running several programs at a time and switching freely between them — was a much more efficient way to do complex work than laboriously shutting down and starting up application after application. But unfortunately for MS-DOS, the addition of multitasking complicates the life of an operating system to an absolutely staggering degree.

Operating systems are of course complex subjects worthy of years or a lifetime of study. We might, however, collapse their complexities down to a few fundamental functions: to provide an interface for the user to work with the computer and manage her programs and files; to manage the various tasks running on the computer and allocate resources among them; and to act as a buffer or interface between applications and the underlying hardware of the computer. That, anyway, is what we expect at a minimum of our operating systems today. But for a computer ensconced within the Triangle of Ones, the second and third functions were largely moot: with only one program allowed to run at a time, resource-management concerns were nonexistent, and, without the need for a program to be concerned about clashing with other programs running at the same time, bare-metal programming — manipulating the hardware directly, without passing requests through any intervening layer of operating-system calls — was often considered not only acceptable but the expected approach. In this spirit, MS-DOS provided just 27 function calls to programmers, the vast majority of them dealing only with disk and file management. (Compare that, my fellow programmers, with the modern Windows or OS X APIs!) For everything else, banging on the bare metal was fine.

We can’t even begin here to address all of the complications that are introduced when we add multitasking into the equation, asking the operating system in the process to fully embrace all three of the core functions listed above. Memory management alone, the one aspect we will look deeper into today, becomes complicated enough. A program which is sharing a machine with other programs can no longer have free run of the memory map, placing whatever it wants to wherever it wants to; to do so risks overwriting the code or data of another program running on the system. Instead the operating system must demand that individual programs formally request the memory they’d like to use, and then must come up with a way to keep a program, whether due to bugs or malice, from running roughshod over areas of memory that it hasn’t been granted.

Or perhaps not. The Commodore Amiga, the platform which pioneered multitasking on personal computers in 1985, didn’t so much solve the latter part of this problem as punt it away. An application program is expected to request from the Amiga’s operating system any memory that it requires. The operating system then returns a pointer to a block of memory of the requested size, and trusts the application not to write to memory outside of these bounds. Yet nothing besides the programmer’s skill and good nature absolutely prevents such unauthorized memory access from happening. Every application on the Amiga, in other words, can write to any address in the machine’s memory, whether that address be properly allocated to it or not. Screen memory, free memory, another program’s data, another program’s code: all are fair game to the errant program. Such unauthorized memory access will almost always eventually result in a total system crash. A non-malicious programmer who wants her program to be a good citizen would of course never intentionally write to memory she hasn’t properly requested, but bugs of this nature are notoriously easy to create and notoriously hard to track down, and on the Amiga a single instance of one can bring down not only the offending program but the entire operating system. With all due respect to the Amiga’s importance as the first multitasking personal computer, this is obviously not the ideal way to implement it.
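To make the danger concrete, here is a toy model in Python. It is emphatically not Amiga code: the 32-byte pool, the `alloc` function, and the two "programs" are all invented for illustration. It shows only the general shape of the problem, an allocator that hands out blocks but has no means of enforcing their boundaries.

```python
memory = bytearray(32)          # one flat pool shared by every program
next_free = 0

def alloc(size: int) -> int:
    """Hand out the next free block and return its start address."""
    global next_free
    start = next_free
    if start + size > len(memory):
        raise MemoryError("pool exhausted")
    next_free += size
    return start

block_a = alloc(8)              # program A's block: addresses 0-7
block_b = alloc(8)              # program B's block: addresses 8-15
memory[block_b:block_b + 8] = b"B's data"

# Program A has a bug and writes 12 bytes into its 8-byte block.
for i in range(12):
    memory[block_a + i] = 0xFF  # nothing stops the writes past A's last byte

print(bytes(memory[block_b:block_b + 8]))   # b'\xff\xff\xff\xffdata'
```

Program B never did anything wrong, yet half of its data is gone, and it has no way of knowing until it next reads the block.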

A far more sustainable approach is to take the extra step of tracking and protecting the memory that has been allocated to each program. Memory protection is usually accomplished using what’s known as virtual memory: when a program requests memory, it’s returned not a true address within the system’s memory pool but rather a virtual address that’s translated back into the real address to which it corresponds every time the program accesses its data. Each program is thus effectively sandboxed from everything else, allowed to read from and write to only its own data. Only the lowest levels of the operating system have global access to the memory pool as a whole.
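The idea can be sketched in a few lines of Python. This is a deliberately simplified base-and-size scheme of my own devising, with hypothetical names (`ToyMMU`, `grant`, `translate`); real processors and operating systems use richer tables, but the principle of translating and checking every access is the same.

```python
class ProtectionFault(Exception):
    """Raised when a program touches an address outside its own allocation."""

class ToyMMU:
    def __init__(self) -> None:
        # program name -> (real base address, size of its block)
        self.table: dict[str, tuple[int, int]] = {}

    def grant(self, program: str, base: int, size: int) -> None:
        self.table[program] = (base, size)

    def translate(self, program: str, virtual: int) -> int:
        """Map a program's virtual address to a real one, or refuse."""
        base, size = self.table[program]
        if not 0 <= virtual < size:
            raise ProtectionFault(f"{program}: address {virtual:#x} out of bounds")
        return base + virtual

mmu = ToyMMU()
mmu.grant("editor", base=0x20000, size=0x1000)
mmu.grant("spreadsheet", base=0x30000, size=0x4000)

print(hex(mmu.translate("editor", 0x10)))        # 0x20010
print(hex(mmu.translate("spreadsheet", 0x10)))   # 0x30010
```

Both programs believe they are using address 0x10, yet they land in different places in real memory, and an attempt by the editor to reach address 0x1000 or beyond raises `ProtectionFault` instead of trampling on a neighbor.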

Implementing such memory protection in software alone, however, would have been an untenable drain on the resources available to systems engineers in the 1980s, a fact which does much to explain its absence from the Amiga. Intel therefore decided to give software a leg up via hardware. They built into the 80286 a memory-management unit that could automatically translate from virtual to real memory addresses and vice versa, making this constantly ongoing process fairly transparent even to the operating system.

Nevertheless, the operating system must know about this capability, must in fact be written very differently if it’s to run on a CPU with memory protection built into its circuitry. Intel recognized that it would take time for such operating systems to be created for the new chip, and recognized that compatibility with the earlier 8086/8088 chips would be a very good thing to have in the meantime. They therefore built two possible operating modes into the 80286. In “protected mode” — the mode they hoped would eventually come to be used almost universally — the chip’s full potential would be realized, including memory protection and the ability to address up to 16 MB of memory. In “real mode,” the 80286 would function essentially like a turbocharged 8086/8088, with no memory-protection capabilities and with the old limitation on addressable memory of 1 MB still in place. Assuming that in the early days at least the new chip would need to run on operating systems with no knowledge of its full capabilities, Intel made the 80286 default to real mode on startup. An operating system which did know about the 80286 and wanted to bring out its full potential could switch it to protected mode at boot-up and be off to the races.

It’s at the intersection between the 80286 and the operating system that Intel’s grand plans for the future of their new chip went awry. An overwhelming percentage of the early 80286s were used in IBM PC/ATs and clones, and an overwhelming percentage of those machines were running MS-DOS. Microsoft’s erstwhile “quick and dirty” operating system knew nothing of the 80286’s full capabilities. Worse, trying to give it knowledge of those capabilities would have to entail a complete rewrite which would break compatibility with all existing MS-DOS software. Yet the whole reason MS-DOS was popular in the first place — it certainly wasn’t because of a generous feature set, a friendly interface, or any aesthetic appeal — was that very same huge base of business software. Getting users to make the leap to some hypothetical new operating system in the absence of software to run on it would be as difficult as getting developers to write programs for an operating system with no users. It was a chicken-or-the-egg situation, and neither chicken nor egg was about to stick its neck out anytime soon.

IBM was soon shipping thousands upon thousands of PC/ATs every month, and the clone makers were soon shipping even more 80286-based machines of their own. Yet at least 95 percent of those machines were idling along at only a fraction of their potential, thanks to the already creakily archaic MS-DOS. For all these users, the old 640 K barrier remained as high as ever. They could stuff their machines full of extended memory if they liked, but they still couldn’t access it. And of course the multitasking that the 80286 was supposed to have enabled remained as foreign a concept to MS-DOS as a GPS unit to a Model T. The only solution IBM offered those who complained about the situation was to run another operating system. And indeed, there were a number of alternatives to MS-DOS available for the PC/AT and other 80286-based machines, including several variants of the old institutional-computing favorite Unix — one of them even from Microsoft — and new creations like Digital Research’s Concurrent DOS, which struggled with mixed results to wedge in some degree of MS-DOS compatibility. Still, the only surefire way to take full advantage of MS-DOS’s huge software base was to run the real — in more ways than one now! — MS-DOS, and this is what the vast majority of people with 80286-equipped machines wound up doing.

Meanwhile the very people making the software which kept MS-DOS the only viable choice for most users were feeling the pinch of being confined to 640 K more painfully almost by the month. Finally Lotus Corporation — makers of the Lotus 1-2-3 spreadsheet package that ruled corporate America, the greatest single business-software success story of their era — decided to use their clout to do something about it. They convinced Intel to join them in devising a scheme for breaking the 640 K barrier without abandoning MS-DOS. What they came up with was one mother of an ugly kludge — a description the scheme has in common with virtually all efforts to break through the 640 K barrier.

Looking through the sparsely populated high-memory area which the designers of the original IBM PC had so generously carved out, Lotus and Intel realized it should be possible on almost any extant machine to identify a contiguous 64 K chunk of those addresses which wasn’t being used for anything. This chunk, they decided, would be the gateway to potentially many more megabytes installed elsewhere in the machine. Using a combination of software and hardware, they implemented what’s known as a bank-switching scheme. The 64 K chunk of high-memory addresses was divided into four segments of 16 K, each of which could serve as a lens focused on a 16 K segment of additional memory above and beyond 1 MB. When the processor accessed the addresses in high memory, the data it would actually access would be the data at whatever sections of the additional memory their lenses were currently pointing to. The four lenses could be moved around at will, giving access, albeit in a roundabout way, to however much extra memory the user had installed. The additional memory unlocked by the scheme was dubbed “expanded memory.” The name’s unfortunate similarity to “extended memory” would cause much confusion over the years to come; from here on, we’ll call it by its common acronym of “EMS.”
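Bank switching is easier to grasp in motion than in prose, so here is a small Python simulation. It is a sketch under assumptions of my own: the class and method names are hypothetical, the page frame is placed at D0000 purely for the sake of example, and a simple byte array stands in for the memory on an expanded-memory board.

```python
PAGE = 16 * 1024                      # each lens covers 16 K
FRAME_BASE = 0xD0000                  # assumed location of the 64 K page frame

class ToyEMS:
    def __init__(self, total_pages: int) -> None:
        self.expanded = bytearray(total_pages * PAGE)   # memory on the board
        self.lens = [0, 1, 2, 3]      # which page each of the 4 windows shows

    def map_page(self, window: int, page: int) -> None:
        """Point one of the four windows at a different 16 K page."""
        if not 0 <= window < 4:
            raise ValueError("window must be 0-3")
        if not 0 <= page < len(self.expanded) // PAGE:
            raise ValueError("no such expanded page")
        self.lens[window] = page

    def _locate(self, address: int) -> int:
        """Turn a CPU address inside the page frame into an offset on the board."""
        offset = address - FRAME_BASE
        if not 0 <= offset < 4 * PAGE:
            raise ValueError("address is outside the page frame")
        window, within = divmod(offset, PAGE)
        return self.lens[window] * PAGE + within

    def read(self, address: int) -> int:
        return self.expanded[self._locate(address)]

    def write(self, address: int, value: int) -> None:
        self.expanded[self._locate(address)] = value

ems = ToyEMS(total_pages=128)         # 128 pages x 16 K = 2 MB of expanded memory
ems.map_page(0, 100)                  # aim window 0 at page 100
ems.write(FRAME_BASE + 5, 42)
ems.map_page(0, 7)                    # move the lens elsewhere...
print(ems.read(FRAME_BASE + 5))       # 0: a different page is now visible
ems.map_page(0, 100)                  # ...and back again
print(ems.read(FRAME_BASE + 5))       # 42
```

Note that the program always reads and writes the same CPU address; what changes is which slice of the board that address happens to be looking at.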

All those gobs of extra memory wouldn’t quite come for free: applications would have to be altered to check for the existence of EMS memory and make use of it, and there would remain a distinct difference between conventional memory and EMS memory with which programmers would always have to reckon. Likewise, the overhead of constantly moving those little lenses around made EMS memory considerably slower to access than conventional memory. On the brighter side, though, EMS worked under MS-DOS with only the addition of a single device driver during startup. And, since the hardware mechanism for moving the lenses around was completely external to the CPU, it would even work on machines that weren’t equipped with the new 80286.

This diagram shows the different types of memory available on PCs of the mid-1980s. In blue, we see the original 1 MB memory map of the IBM PC. In green, we see a machine equipped with additional extended memory. And in orange we see a machine equipped with additional expanded memory.

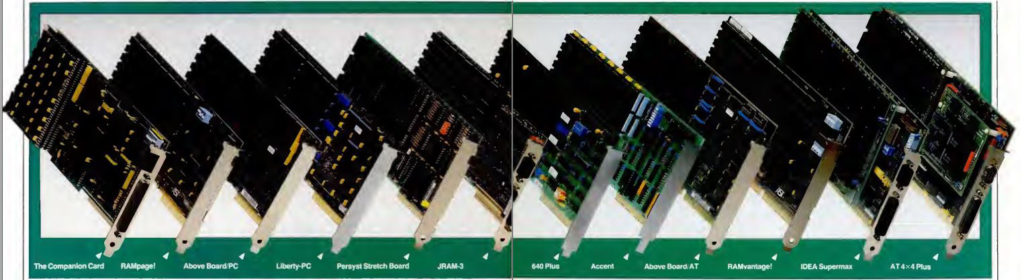

Shortly before the scheme made its official debut at a COMDEX trade show in May of 1985, Lotus and Intel convinced a crucial third partner to come aboard: Microsoft. “It’s garbage! It’s a kludge!” said Bill Gates. “But we’re going to do it.” With the combined weight of Lotus, Intel, and Microsoft behind it, EMS took hold as the most practical way of breaking the 640 K barrier. Imperfect and kludgy though it was, software developers hurried to add support for EMS memory to whatever programs of theirs could practically make use of it, while hardware manufacturers rushed EMS memory boards onto the market. EMS may have been ugly, but it was here today and it worked.

At the same time that EMS was taking off, however, extended memory wasn’t going away. Some hardware makers — most notably IBM themselves — didn’t want any part of EMS’s ugliness. Software makers therefore continued to probe at the limits of machines equipped with extended memory, still looking for a way to get at it from within the confines of MS-DOS. What if they momentarily switched the 80286 into protected mode, just for as long as they needed to manipulate data in extended memory, then went back into real mode? It seemed like a reasonable idea — except that Intel, never anticipating that anyone would want to switch modes on the fly like this, had neglected to provide a way to switch an 80286 in protected mode back into real mode. So, proponents of extended memory had to come up with a kludge even uglier than the one that allowed EMS memory to function. They could force the 80286 back into real mode, they realized, by resetting it entirely, just as if the user had rebooted her computer. The 80286 would go through its self-check again — a process that admittedly absorbed precious milliseconds — and then pick back up where it left off. It was, as Microsoft’s Gordon Letwin memorably put it, like “turning off the car to change gears.” It was staggeringly kludgy, it was horribly inefficient, but it worked in its fashion. Given the inefficiencies involved, the scheme was mostly used to implement virtual disks stored in the extended memory, which wouldn’t be subject to the constant access of an application’s data space.

In 1986, the 32-bit 80386, Intel’s latest and greatest chip, made its public bow at the heart of the Compaq Deskpro 386 rather than an IBM machine, a landmark moment signaling the slow but steady shift of business computing’s power center from IBM to Microsoft and the clone makers using their operating system. While working on the new chip, Intel had had time to see how the 80286 was actually being used in the wild, and had faced the reality that MS-DOS was likely destined to be cobbled onto for years to come rather than replaced in its entirety with something better. They therefore made a simple but vitally important change to the 80386 amidst its more obvious improvements. In addition to being able to address an inconceivable total of 4 GB of memory in protected mode thanks to its 32-bit address space, the 80386 could be switched between protected mode and real mode on the fly if one desired, without needing to be constantly reset.

In freeing programmers from that massive inefficiency, the 80386 cracked open the door that much further to making practical use of extended memory in MS-DOS. In 1988, the old EMS consortium of Lotus, Intel, and Microsoft came together once again, this time with the addition to their ranks of the clone manufacturer AST; the absence of IBM is, once again, telling. Together they codified a standard approach to extended memory on 80386 and later processors, which corresponded essentially to the scheme I’ve already described in the context of the 80286, but with a simple command to the 80386 to switch back to real mode replacing the resets. They called it the eXtended Memory Specification; memory accessed in this way soon became known universally as “XMS” memory. Under XMS as under EMS, a new device driver would be loaded into MS-DOS. Ordinary real-mode programs could then call this driver to access extended memory; the driver would do the needful switching to protected mode, copy blocks of data from extended memory into conventional memory or vice versa, then switch the processor back to real mode when it was time to return control to the program. It was still inelegant, still a little inefficient, and still didn’t use the capabilities of Intel’s latest processors in anything like the way Intel’s engineers had intended them to be used; true multitasking still remained a pipe dream somewhere off in a shadowy future. Owners of sexier machines like the Macintosh and Amiga, in other words, still had plenty of reason to mock and scoff. In most circumstances, working with XMS memory was actually slower than working with EMS memory. The primary advantage of XMS was that it let programs work with much bigger chunks of non-conventional memory at one time than the four 16 K chunks that EMS allowed. Whether any given program chose EMS or XMS came to depend on which set of advantages and disadvantages best suited its purpose.
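The division of labor between program and driver can be sketched as follows. This Python model is illustrative only: `ToyXMSDriver` and its methods are hypothetical names rather than the real XMS call interface, and the "mode switch" is just a variable being flipped. It does, however, follow the sequence described above: switch to protected mode, copy a block, switch back.

```python
class ToyXMSDriver:
    def __init__(self, conventional: bytearray, extended_size: int) -> None:
        self.conventional = conventional            # the program's 640 K world
        self.extended = bytearray(extended_size)    # memory beyond 1 MB
        self.mode = "real"
        self.mode_switches = 0

    def _switch(self, mode: str) -> None:
        self.mode = mode
        self.mode_switches += 1

    @staticmethod
    def _check(buf: bytearray, start: int, length: int) -> None:
        if start < 0 or length < 0 or start + length > len(buf):
            raise ValueError("block lies outside the memory it names")

    def store(self, src: int, dest: int, length: int) -> None:
        """Copy a block from conventional memory out to extended memory."""
        self._check(self.conventional, src, length)
        self._check(self.extended, dest, length)
        self._switch("protected")                   # extended memory is reachable only now
        self.extended[dest:dest + length] = self.conventional[src:src + length]
        self._switch("real")                        # hand control back in real mode

    def fetch(self, src: int, dest: int, length: int) -> None:
        """Copy a block from extended memory back into conventional memory."""
        self._check(self.extended, src, length)
        self._check(self.conventional, dest, length)
        self._switch("protected")
        self.conventional[dest:dest + length] = self.extended[src:src + length]
        self._switch("real")

conventional = bytearray(640 * 1024)
driver = ToyXMSDriver(conventional, extended_size=1024 * 1024)

conventional[0:5] = b"hello"
driver.store(src=0, dest=4096, length=5)     # park the data in extended memory
conventional[0:5] = bytes(5)                 # reuse the conventional buffer
driver.fetch(src=4096, dest=0, length=5)     # bring the data back when needed
print(bytes(conventional[0:5]), driver.mode, driver.mode_switches)
```

Every round trip costs two mode switches plus a copy, which gives some feel for why the approach was workable but never exactly fast.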

The arrival of XMS along with the ongoing use of EMS memory meant that MS-DOS now had two competing memory-management solutions. Buyers now had to figure out not only whether they had enough extra memory to run a program but whether they had the right kind of extra memory. Ever accommodating, hardware manufacturers began shipping memory boards that could be configured as either EMS or XMS memory — whatever the application you were running at the moment happened to require.

The next stage in the slow crawl toward parity with other computing platforms in the realm of memory management would be the development of so-called “DOS extenders,” software to allow applications themselves to run in protected mode, thus giving them direct access to extended memory without having to pass their requests through an inefficient device driver. An application built using a DOS extender would only need to switch the processor to real mode when it needed to communicate with the operating system. The development of DOS extenders was driven by Microsoft’s efforts to turn Windows, which like seemingly everything else in business computing ran on top of MS-DOS, into a viable alternative to the command line and a viable challenger to the Macintosh. That story is thus best reserved for a future article, when we look more closely at Windows itself. As it is, the story that I’ve told so far today moves us nicely into the era of computer-gaming history we’ve reached on the blog in general.

In said era, the MS-DOS machines that had heretofore been reserved for business applications were coming into homes, where they were often used to play a new generation of games taking advantage of the VGA graphics, sound cards, and mice sported by the latest systems. Less positively, all of the people wanting to play these new games had to deal with the ramifications of a 640 K barrier that could still be skirted only imperfectly. As we’ve seen, both EMS and XMS imposed to one degree or another a performance penalty when accessing non-conventional memory. What with games being the most performance-sensitive applications of all, that made that first 640 K of lightning-fast conventional memory most precious of all for them.

In the first couple of years of MS-DOS’s gaming dominance, developers dealt with all of the issues that came attached to using memory beyond 640 K by the simple expedient of not using any memory beyond 640 K. But that solution was compatible neither with developers’ growing ambitions for their games nor with the gaming public’s growing expectations of them.

The first harbinger of what was to come was Origin Systems’s September 1990 release Wing Commander, which in its day was renowned (and more than a little feared) for pushing the contemporary state of the art in hardware to its limits. Even Wing Commander didn’t go so far as to absolutely require memory beyond 640 K, but it did use it to make the player’s audiovisual experience snazzier if it was present. Setting a precedent future games would largely follow, it was quite inflexible in its approach, demanding EMS memory, as opposed to XMS. In the future, gamers would have to become all too familiar with the differences between the two standards, and how to configure their machines to use one or the other. Setting another precedent, Wing Commander’s “installation guide” included a section on “memory usage” that was required reading in order to get things working properly. In the future, such sections would only grow in length and complexity, and would need to be pored over by long-suffering gamers with far more concentrated attention than anything in the manual having anything to do with how to actually play the games they purchased.

In Accolade’s embarrassing Leisure Suit Larry knockoff Les Manley in: Lost in LA, the title character explains EMS and XMS memory to some nubile companions. The ironic thing was that anyone who wished to play the latest games on an MS-DOS machine really did need to know this stuff, or at least have a friend who did.

Thus began the period of almost a decade, remembered with chagrin but also often with an odd sort of nostalgia by old-timers today, in which gamers spent hours monkeying about with MS-DOS’s “config.sys” and “autoexec.bat” files and swapping in and out various third-party utilities in the hope of squeezing out those last few kilobytes of conventional memory that Game X needed to run. The techniques they came to employ were legion.

In the process of developing Windows, Microsoft had discovered that the kernel of MS-DOS itself, a fairly tiny program thanks to its sheer age, could be stashed into the first 64 K of memory beyond 1 MB and still accessed like conventional memory on an 80286 or later processor in real mode thanks to what was essentially an undocumented technical glitch in the design of those processors. Gamers thus learned to include the line “DOS=HIGH” in their configuration files, freeing up a precious block of conventional memory. Likewise, there was enough unused space scattered around in the 384 K of high memory on most machines to stash many or all of MS-DOS’s device drivers there instead of in conventional memory. Thus “DOS=HIGH” soon became “DOS=HIGH,UMB,” the second parameter telling the computer to make use of these so-called “upper-memory blocks” and thereby save that many kilobytes more.
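I haven’t spelled out the glitch in question, but it is commonly explained through the arithmetic of real-mode addressing, in which a physical address is formed from a 16-bit segment multiplied by 16 plus a 16-bit offset. The largest such sum slightly exceeds 1 MB. On a chip with only 20 address lines the result wraps around to the bottom of memory; on a chip with more address lines, assuming the 21st line is enabled, it does not. The Python sketch below, with a function name of my own choosing, illustrates only that arithmetic and not any actual MS-DOS code.

```python
def physical(segment: int, offset: int, address_lines: int) -> int:
    """Real-mode address: segment * 16 + offset, truncated to the wired address lines."""
    return ((segment << 4) + offset) & ((1 << address_lines) - 1)

top = physical(0xFFFF, 0xFFFF, address_lines=20)   # 8088: wraps around
print(hex(top))                                    # 0xffef

top = physical(0xFFFF, 0xFFFF, address_lines=24)   # 80286: no wrap
print(hex(top))                                    # 0x10ffef

reachable_above_1mb = top - 0x100000 + 1
print(reachable_above_1mb)                         # 65520, just under 64 K
```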

These were the most basic techniques, the starting points. Suffice to say that things got a lot more complicated from there, turning into a baffling tangle of tweaks, some saving mere bytes rather than kilobytes of conventional memory, but all of them important if one was to hope to run games that by 1993 would be demanding 604 K of 640 K for their own use. That owners of machines which by that point typically contained memories in the multi-megabytes should have to squabble with the operating system over mere handfuls of bytes was made no less vexing by being so comically absurd. And every new game seemed to up the ante, seemed to demand that much more conventional memory. Those with a sunnier disposition or a more technical bent of mind took the struggle to get each successive purchase running as the game before the game got started, as it were. Everyone else gnashed their teeth and wondered for the umpteenth time if they might not have been better off buying a console where games Just Worked. The only thing that made it all worthwhile was the mixture of relief, pride, and satisfaction that ensued when you finally got it all put together just right and the title screen came up and the intro music sprang to life — if, that is, you’d managed to configure your sound card properly in the midst of all your other travails. Such was the life of the MS-DOS gamer.

Before leaving the issue of the 640 K barrier behind in exactly the way that all those afflicted by it for so many years were so conspicuously unable to do, we have to address Bill Gates’s famous claim, allegedly made at a trade show in 1981, that “640 K ought to be enough for anybody.” The quote has been bandied about for years as computer-industry legend, seeming to confirm as it does the stereotype of Bill Gates as the unimaginative dirty trickster of his industry, as opposed to Steve Jobs the guileless visionary (the truth is, needless to say, far more complicated). Sadly for the stereotypers, however, the story of the quote is similar to all too many legends in the sense that it almost certainly never happened. Gates himself, for one, vehemently denies ever having said any such thing. Fred Shapiro, for another, editor of The Yale Book of Quotations, conducted an exhaustive search for a reputable source for the quote in 2008, going so far as to issue a public plea in The New York Times for anyone possessing knowledge of such a source to contact him. More than a hundred people did so, but none of them could offer up the smoking gun Shapiro sought, and he was left more certain than ever that the comment was “apocryphal.” So, there you have it. Blame Bill Gates all you want for the creaky operating system that was the real root cause of all of the difficulties I’ve spent this article detailing, but don’t ever imagine he was stupid enough to say that. “No one involved in computers would ever say that a certain amount of memory is enough for all time,” said Gates in 2008. Anyone doubting the wisdom of that assertion need only glance at the history of the IBM PC.

(Sources: the books Upgrading and Repairing PCs, 3rd edition by Scott Mueller and Principles of Operating Systems by Brian L. Stuart; Computer Gaming World of June 1993; Byte of January 1982, November 1984, and March 1992; Byte’s IBM PC special issues of Fall 1985 and Fall 1986; PC Magazine of May 14 1985, January 14 1986, May 30 1989, June 13 1989, and June 27 1989; the episode of the Computer Chronicles television show entitled “High Memory Management”; the online article “The ‘640K’ quote won’t go away — but did Gates really say it?” on Computerworld.)

Footnotes

[1] Yes, that is quite possibly the nerdiest thing I’ve ever written.

John Elliott

April 14, 2017 at 4:16 pm

“No one involved in computers would ever say that a certain amount of memory is enough for all time,” said Gates in 2008

The “640k” quote may well be apocryphal, but this quotation from the Windows 95 Resource Kit offers a similar 32-bit hostage to fortune:

silver

April 14, 2017 at 8:31 pm

Today, 20+ years later, 2GB is still enough for a browser or the largest of desktop applications. Very little requires more, unless it’s for data as opposed to program code.

Tiago Magalhães

April 16, 2017 at 11:01 am

Guess they have to start hiring lawyers to write their documentation since it looks like people aren’t able to think.

That line means “currently” and not “until the heat death of the universe”.

John Elliott

April 16, 2017 at 7:15 pm

And there was a time when 640k was “currently” enough for everyone. The parallel amused me, no more.

Alex Smith

April 14, 2017 at 4:42 pm

Ah the bootdisk era. I think it’s impossible to fully convey the frustration of being a PC gamer in the early-to-mid 1990s to someone who did not actually suffer through it. I have never dabbled in programming or more than the simplest of game modding, but I became an expert at navigating autoexec.bat and config.sys (and even occasionally command.com) out of sheer necessity to play Wing Commander and Doom and TIE Fighter and a host of other 1990s classics.

One interesting side effect of this era I feel is that us teenagers and young adults that experienced this era are far more equipped to handle technology problems than those who have grown up in the post Windows 95 era (and I say this as a librarian that personally observes the general public grappling with computers on a daily basis). Today, non-technical people are used to their technology working more or less correctly right out of the box, leaving them no incentive to learn how it functions. Therefore, when something goes wrong that can actually be easily corrected without a great deal of technical know-how, they are often completely lost. Because we had to explore every nook and cranny of our computers to make our software run, even those, like me, who had no interest in becoming programmers or engineers are not afraid to poke around a bit to solve simpler problems.

Ido Yehieli

April 14, 2017 at 5:02 pm

What would the early 8 bit generation say, that had their computers boot straight to BASIC and often had to program to get anything interesting out of it? :)

Alex Smith

April 14, 2017 at 5:10 pm

Oh absolutely. Computer use has only gotten easier as time goes on no question. I am only drawing a narrow comparison between nontechnical youth of the 1990s like me that were nonetheless committed to still using a computer regularly and nontechnical youth of today, who really don’t need to know anything about their computer at all for basic operation. When I became a public librarian, I expected the older set to not have a handle on this stuff, but was surprised that the younger set also seemed unable to perform basic, non-technical troubleshooting. This is not a “back-in-my-day/get-off-my-lawn” style rant about the good old days, just an observation of how grappling with DOS memory problems equipped a certain segment of the non-technical population with skills they would likely otherwise not possess.

Joe

April 17, 2017 at 12:52 am

Well said, and agreed.

I never considered myself a technical person, but I, too, wrestled with those arcane files and fiddly systems, and today I frequently find myself in the position of Computer Oracle. I am always surprised by it.

Ido Yehieli

April 14, 2017 at 5:03 pm

I remember Apogee’s late dos release, Realms of Chaos, being particularly gnarly to get working on a mid 90s PC, which might have drivers loaded for such modern extravaganzas as CD-ROM, Sound Blaster, a mouse & a dos extender!

Chuck again

April 14, 2017 at 5:30 pm

This is a fantastic summary of the technical challenges of that entire era. Bravo!

For me, this brings back many happy memories (and only a few frustrating ones, mostly centered around eking out a maximum of conventional memory). I seem to recall my AUTOEXEC.BAT had four system startup configurations, selectable by a CHOICE menu, which would rejigger the memory allocation as needed.

You couldn’t pay me to go back to those days though… unless you’re running a computer museum.

Jayle Enn

April 14, 2017 at 5:54 pm

About the time that consumer CD-ROMs became popular, I found a function that let me partition my boot files and make a menu to select which I wanted. Ended up with ones that would prevent peripherals from loading (CD drivers took RAM, after all…), or once or twice configurations for specific games. I was a hardcore fan of Origin games back then, and Wing Commander was only nudging the envelope compared to some of its later cousins.

Saved on boot disks at least.

Sam

April 14, 2017 at 6:06 pm

s/cracked opened/cracked open/

Jimmy Maher

April 15, 2017 at 6:10 am

Thanks!

Kai

April 14, 2017 at 6:19 pm

I played Wing Commander on the Amiga, and though this was more a slide show at times than a fast-paced space action game, at least it just worked.

Not that I was spared the fun entirely, as the first PC we had initially still ran DOS. While the hardware was great, the OS was such a huge step backwards. Like so many others, I ended up crafting a boot disk with a selection menu to prepare the system for each individual game.

Might be the reason why I never grew a fan of any of Microsoft’s offerings. Installed Linux in ’96 and went with a dual-boot system from then on, starting Windows only for playing games. For quite a while, I even kept using amiwm to recreate at least the look and feel of the Amiga workbench under Linux.

Gnoman

April 14, 2017 at 6:58 pm

That’s rather funny, because this early history of mucking around with DOS after upgrading from a C64 to an IBM 486 box is a big part of why I refuse to use Linux. The few times I’ve tried to do so, I’ve gotten so frustrated at my old DOS knowledge crashing into the new Linux commands I’m trying to learn (yes, I am aware that Linux has several rather nice GUIs, but if I’m running an OS with a viable command line THAT is what I am going to use) and turning into a mess. Sort of a muscle memory thing.

_RGTech

April 15, 2025 at 9:52 pm

I’m a MS person since 1994, starting with DOS 6.2/WfW 3.11… and yeah, cmd.exe is still one of my most used tools.

As my new emergency boot disc _had_ to run Linux (the old BartPE / WinXP PE environment wouldn’t connect to servers using newer SMB versions), I recently printed a “DOS to Linux Cheat Sheet” (https://legacy.redhat.com/pub/redhat/linux/7.0/en/doc/RH-DOCS/rhl-gsg-en-7.0/ch-doslinux.html) for that. Aside from a bash script that I’ve written (network connection, drive mapping, user/pass entry) and the file manager (Midnight Commander), I would be completely lost. I can’t remember one of those things, no matter how hard I try.

But instead I could still write a config.sys/autoexec.bat with [menu] and mscdex from scratch without manual. (I also still have the boot floppy with really small mouse and keyboard drivers here in my desk drawer.) Memory is a strange thing indeed.

Just one thing to complain: The article reads as if DOS was the reason for the 640k barrier. But DOS happily gave you more memory on other “DOS-compatible” computers back in the day, that did not reserve so much unused memory space (which also didn’t make them IBM-compatible!). So it just happened that Microsoft programmed the memory map like the customer wanted, and on the IBM PC that was a maximum of 640k user space.

Ross

April 16, 2025 at 3:36 pm

It is, as one would expect, complicated. The 8086 had 20 address lines, so it fundamentally had 1 MB of addressable space, but an operating system could facilitate using those 20 bits of address space as a 1 MB window into a larger amount of memory (which is more or less how mainframes worked). At the time DOS was first developed, the overhead of doing it this way wasn’t considered worth the benefit.

Steven Marsh

April 14, 2017 at 6:33 pm

This article gave me panicky flashbacks.

I think what was so amazing about this era is that it wasn’t like you could just come up with the perfect AUTOEXEC.BAT and CONFIG.SYS, dust your hands off, and say, “There! THAT problem is solved!” Every dang high-end program seemed to need its own massaging. Some software needed a mouse driver, which ate precious bytes. Some could use a variant mouse driver that was smaller . . . but most software didn’t like it. Some had their own internal mouse drivers. Some needed unique sound settings. Some needed a sound driver. Others had their own sound drivers, and loading an unneeded one would decrease that precious memory to the point of inoperability. Some needed certain versions of DOS to eke out those last few precious bytes. Sometimes the act of quitting the program wouldn’t free up all memory for some reason, and you needed to reboot cold to get a system that would give you all your memory. Sometimes the order that you had entries in your CONFIG.SYS and AUTOEXEC.BAT would matter.

I remember spending hours trying to get software to work just right, finally getting it to do so, and then realizing, “I’m not even sure if I want to play any more right now…”

Cliffy

April 26, 2017 at 7:09 pm

For some reason I was dismissive of boot disks. I could eventually get all my games to run with an appropriate .bat file, but I didn’t know nearly enough to remove all the memory artifacts they created, so if I wanted to play a different game, I usually had to reboot the thing anyway. Dumb.

Allen Brunson

April 14, 2017 at 6:39 pm

holy cow. i had almost forgotten what being a dos user in the 1990s was like. the typical computer of that era might have 4mb of ram, but most of it would be wasted, most of the time. all anybody cared about was the precious address space in the first megabyte.

i remember favoring compaq machines of that era, more than ibm machines and others, because ibms (for example) usually had a lot of precious below-1mb-space taken up by useless roms and god knows what else.

qemm to the rescue: this utility package, which worked on 386 pcs, would allow you to remap high ram into unused address slots in low ram. sometimes you could even map ram over the top of those useless roms that ibm liked to saddle us with. qemm came with a utility that would scan your computer and try to find all the address ranges that might be reclaimed for use.

a lot of times, you could re-order the drivers in your config.sys, or reorder the tsrs in your autoexec.bat, so that you would end up with more free memory afterwards. a black art on top of a black art.

these days, it’s hard for me to believe that i didn’t just switch to some other operating system that wasn’t so ridiculous.

whomever

April 14, 2017 at 7:10 pm

Ah yes, I’d kind of expunged those memories. But, which other OS? The Amiga was dying off, the Apple ][ and Atari ST were already basically dead, the Archimedes a British eccentricity, the Mac expensive and starting to show creaking problems of its own (and not much of a gaming platform), Linux was only just coming into existence, high end Unix workstations cost a fortune. There was a promise…OS/2. Ironically I never used OS/2, but the proponents matched the Amiga-heads in evangelicalism, and I guess if it let them get away from this in hindsight I understand. However, we know how that turned out.

Yura

April 14, 2017 at 7:07 pm

I think it was XMS for eXtended memory on 80286 with HIMEM.SYS driver, and EMS for Expanded Memory on 80386 with EMM386.EXE driver.

https://en.wikipedia.org/wiki/Extended_memory

https://en.wikipedia.org/wiki/Expanded_memory

_RGTech

April 15, 2025 at 10:08 pm

Not quite. That would be too easy.

Extended Memory was all that RAM over the 1 MB barrier that DOS could not even see (save for that small 64k window right at the border).

XMS was a specification that was created later, and allowed to use that memory. But you still needed something other than DOS, if you wanted himem.sys to let you use more than the High Memory Area (those 64k right over the 1MB border). Windows, 2.1 or up, was a possibility.

But even then, the 80286 was some kind of a braindead CPU, and the 80386 did everything a bit better (and was designed with the IBM PC and similar in mind, which wasn’t the case with the 80286 that came out only a year after the IBM PC!).

And EMS… no, that was something that worked completely different. In the first years you even needed special EMS compatible memory boards! Not just any kind of extended memory would do. THAT came years later, and EMM386 was – along other things – then able to emulate EMS, if you wished. But you also could emulate XMS with it. Or nothing at all, and just stick with the UMB handling in addition to the HMA done by himem.sys (DOS=HIGH,UMB).

But the “old” EMS was also usable on 8086/8088 PCs, which was quite something. I think you had to use EMS to get more than 2048 lines in Lotus 1-2-3 (I could be wrong on the number, but it wasn’t much more)… just imagine that ;)

(Confusing? For sure. And there’s surely still some error in that, as I don’t recall all of the little nitpicking details. Which there were plenty.)

Jason

April 14, 2017 at 7:09 pm

The worst game for me was the CD version of “Master of Magic”

It needed on the order of 612K, with the CD-ROM driver loaded. I ended up using the Novell DOS 7 nwcdex on MS-DOS 5. It was later discovered that much of the memory was for the opening movie, so you could run a different executable that was for resuming from your last autosave, (if you didn’t want to resume you could just quit to the menu). This saved around 10K of ram IIRC.

Adele

April 14, 2017 at 7:31 pm

Thank you for another excellent article. I remember fiddling around with “autoexec.bat” and “config.sys” as a kid to play games, and I never understood why; I just did what I needed to do in order to play my game. You have somehow managed to fill in the missing pieces and make managing memory in DOS interesting. And thank you for the nostalgic satisfaction of booting up a game in DOS after all that hard work!

Vince

September 22, 2023 at 5:12 am

Heh, same.

I think I learned the meaning of the various XMS, EMS, UMB and the reasons behind them in this article for the first time, despite spending countless hours in my early teens trying to tweak the infamous autoexec.bat and config.sys

I remember it more like a dark art, a trial and error process made up of mixing arcane words that in a specific combination would cause the game to function… Syndicate in particular is stuck in my mind as a royal pain, but it was probably because it was one of my first DOS games and I had no manual for it.

And it’s not like what followed improved the situation. The Windows 95-circa Windows 8-7 era was one of equally arcane driver incompatibilities, DLL hell, mysterious crashes and errors.

It is only with Windows 10 and digital distribution that PC games achieved a degree of console-like plug and play.

Keith Palmer

April 14, 2017 at 7:35 pm

I managed to miss out on the challenges you described (if also on most of the rewards) of the “early-1990s MS-DOS era,” although in missing out I’m now struck by your description of EMS resembling the bank-switching schemes that let computers with 8-bit processors be advertised as “128K” or even “512K” models. I also have to note your bringing up Steve Jobs in connection with the “640K” quote attributed to Bill Gates reminds me of how quickly the Macintosh literature seemed to move to “you will be able to upgrade to 512K,” and how that paled pretty fast too to wind up being linked to “it took Jobs’s ritual sacrifice to start moving the Mac forward again…”

Sniffnoy

April 14, 2017 at 7:51 pm

Typo correction: “three-eights” and “five-eights” should be “eighths” rather than “eights”.

Jimmy Maher

April 15, 2017 at 6:14 am

Thanks!

Andrew Hoffman

April 14, 2017 at 8:19 pm

Gates may not have actually said anything about 640k, but if you read his interview from the first issue of PC Magazine he’s definitely excited about all the possibilities of being able to address ten times more memory than an Apple II and claims it will eliminate the need to optimize software.

https://books.google.com/books?id=w_OhaFDePS4C&printsec=frontcover#v=onepage&q&f=false

googoobaby

April 14, 2017 at 9:36 pm

The full 8086 has the same memory addressing capabilities as the 8088. The text seems to imply that 20-bit addressing is an 8088 limitation instead of being implicit to that whole family (8086 even 80186).

Jimmy Maher

April 15, 2017 at 6:15 am

???

“IBM’s decision to use the 8088 instead of the 8086 would have huge importance for the expansion buses of this and future machines, but the differences between the two chips aren’t important for our purposes today.”

Martin

April 15, 2017 at 6:19 pm

The real problem was not the 20 bit addressing but the fact that the “user memory” was put in the middle of the memory map instead of the end. Is there any technical reason why they couldn’t have put the user memory in the higher 5/8ths in the original design so as to allow user memory continuation into higher addresses when later chips allowed it? I know that requires some sort of foresight but surely not that much unless you do believe the 640K is more than anyone could ever use.

Jimmy Maher

April 15, 2017 at 8:39 pm

Making conventional memory the upper 640 K of the 1 MB address space rather than the lower would have made things a little cleaner, but wouldn’t have solved any of the memory problems described in this article. I’m afraid the “real” problem really was the 20-bit addressing.

The reason IBM’s engineers chose the arrangement they did is likely because the 8086/8088 required its reset vector (https://en.wikipedia.org/wiki/Reset_vector) to be at the very upper end of its address space. So, the alternative would still have required a little area at the end of conventional memory reserved for a special purpose. This would have turned into yet one *more* piece of ugliness for future engineers to work around. On the whole, the arrangement chosen was the best that was practical at the time.

Martin

April 17, 2017 at 12:03 pm

You’ll notice from that link that the reset vectors of newer processors (excluding the x86 line) are down in the lowest memory page so I assume at least some manufacturers learned their lesson on this. I wonder where the ARM reset vectors are?

whomever

April 17, 2017 at 1:23 pm

(Replying to Martin but we reached max indentation). ARM is indeed at 0, however the other thing more modern CPUs have going for them is virtual memory and support for page tables, which makes this almost a non issue. ARM went 64-bit without anyone much noticing.

arthurdawg

April 14, 2017 at 10:02 pm

Ultima VII was one that took some tweaking… Voodoo you might say…

I still remember playing with an early task switching program on my old Tandy 1000 that would let you load up multiple programs and jump back and forth (albeit with no background processing). The memory limitations of the machine prevented any great utility and I didn’t use it for long.

Ahhh… the memories of the old days. Now my MBP just does what I tell it to do for the most part.

MagerValp

April 15, 2017 at 1:03 pm

The fun part about U7 was that it shipped with its own unique memory manager, which was incompatible with the usual tools that helped you free up RAM. It also didn’t fail immediately, but after watching part of the intro and it cut off when the Guardian started talking, making it especially painful to try to come up with a working config. Good times.

whomever

April 15, 2017 at 2:00 pm

And for those who don’t know, arthurdawg was making a joke when he referred to tweaking it as Voodoo (that was in fact the name of the memory manager).

Brian

April 16, 2017 at 1:06 am

Yep… the good ole Voodoo memory manager… wreaked havoc on the scale of Gojira eating Tokyo.

Those were the days… coming from an 8 bit background I think we were all better prepared than we realized. My dad and I tweaked that old Tandy to the max before moving on to a 386SX… also a Tandy because my dad loved Radio Shack back in the day.

As always, a great column!

If allowed – a sidebar on Voodoo from the Ultima Codex… if not I’ll gladly erase.

http://wiki.ultimacodex.com/wiki/Voodoo_Memory_Manager

Christian Moura

April 18, 2017 at 5:16 am

Ultima VII – oh, yes, damn yes. I remember at one point having a choice – either loading sound drivers or mouse drivers. I could play the game with music, or I could play it and use a mouse…in silence.

bryce777

April 14, 2017 at 10:25 pm

DOS was very easy to program for actually, and even more so in assembly.

Since you could only run one program at a time anyway, games did not really need to use DOS at all and not all of them did. I am surprised origin systems never embraced the idea, it certainly would have to have been easier than dealing with their own memory manager trying to work through DOS.

When windows came suddenly even the old functionality was many times more complex.

whomever

April 14, 2017 at 11:06 pm

Sure, Dos wasn’t that hard to program for (bare metal and all that). And a lot of apps ignored a whole lot of the API (which is why we all owned The Peter Norton Programmer’s Guide to the IBM PC). But: Define “Use Dos”? Certainly, a number of very early PC games booted directly off floppy and ignored it completely (including, amusingly enough, both MS Flight Simulator and Adventure). However, in practice by this era (early 90s) you had to support hard drives and a large selection of third party TSRs (which gave sort of “fake” multitasking) and a bunch of other stuff. Which meant that you had to at least use it for file system support. Ignoring dos after booting it up meant in practice you were stuck with a bunch of limits even if you pretended you were your own OS and switched straight to protected mode.

bryce777

April 15, 2017 at 1:08 am

When you make your own bootloader there is no DOS to worry about, and like I said the hardware at the time was a snap to work with, not like today whatsoever. I guess that the fact there were lots of multiple platform games back then was probably a big factor though.

Bernie

April 14, 2017 at 11:51 pm

Ah, Jimmy …. this kind of article of yours is very special : the ones that stir up all those collective memories. And your writing style guarantees that the “stirring” occurs ! , which is wonderful.

As a Commodore 8-bit (C64 breadbin) user who switched to 16-bit in 1990, I have fond memories of the “memory blues” :

1) I clearly remember whole nights spent in front of friends’ IBM-compatibles trying to get DOS games to work : autoexec.bat, config.sys, himem, soundblaster drivers, it was all very frustrating.

2) Besides being a Commodore guy, this was one of the main things keeping me away from MS-DOS machines. Amiga software and hardware turned “Memory Configuration” into an afterthought : you just added SIMMS to your HD-SCSI-RAM combo card and that was it. Drivers were installed only once from nifty install disks and everything was handled automatically from then on. If you had an older A500 and needed to get past the 512k “Chip ram barrier”, you just popped in a new Agnus chip. As for boot disks, they were only needed to run older games or european imports that required 50hz screen refresh.

3) This is why “true hardware emulation” has never been a popular option for DOS games, as opposed to DOSbox, which handles all memory issues invisibly and reliably : you just tell how much ram you need and toggle UMB, EMS and XMS on or off as needed. I would even go as far as stating that the absence of “memory and driver tweaking” is the reason that we enjoy the current DOSbox-fueled retro-pc-gaming surge. Gee, just imagine for a moment GOG selling “vanilla” installs for you to run on “real” MS-DOS installed in a virtual machine or PC-em. I have tried it out of curiosity, and it sucks, big time.

4) It’s no coincidence that both WinUAE and Mini-vMac are used and configured pretty much like a real Amiga or Mac and are very popular emulators for that same reason, whereas DOSbox is purposefully as detached as possible from the nuances of the hardware it emulates, and has become the de-facto DOS emulation standard.

Bernie

April 15, 2017 at 12:11 am

Sorry, Quick Addendum : for those purists who despise DOSbox’s simplifications but don’t want to go through the hassle of setting up a full-blown VM, there’s Tand-EM , a Tandy 1000 emulator ; PCE, an accurate IBM PC emulator ; and http://www.pcjs.org , hosting JavaScript emulators with many ready-made installs of DOS, OS/2 , Win 3.1 and 95 in realistically simulated hardware , like the Compaq Deskpro 386 , for example.

Jimmy Maher

April 15, 2017 at 6:22 am

Never really thought of DOSBox in those terms before, but you’re right. It’s quite a feat of software engineering. I hope GOG.com is paying its creators well. :)

As you noted, the Amiga did have the chip/fast RAM divide, which could be almost as confusing and frustrating at times as the 640 K barrier. So it wasn’t a complete memory paradise either…

Peter Ferrie

April 15, 2017 at 9:08 pm

GOG doesn’t pay us anything. DOSBox is free software. :-)

Jimmy Maher

April 15, 2017 at 9:56 pm

I understand they’re under no obligation, but I’d kind of assumed they were throwing some money your way, given that they’ve built so much of their business around DOSBox. A pity.

Vince

September 22, 2023 at 5:38 am

DOSbox is such a fantastic emulator, it just works, painlessly and efficiently.

Thank you so much for your work!

DZ-Jay

June 6, 2017 at 4:10 pm

I don’t think this is very accurate. My understanding is that ALL that tweaking is in DOSBox as well. I certainly had to tweak and play trial and error to get my old DOS games to work correctly.

The good thing is that there is a vivid community sharing pre-made configuration files, specific to each game, and that with DOSBox you can create bespoke configuration files per game easily.

GoG is another matter entirely: they sell games packaged with DOSBox and a perfectly tuned configuration; but someone created that configuration and tuned it.

Don’t get me wrong, I love DOSBox, and indeed it is a great product, but its “simplification” to the DOS world is in the 20-plus years of experience of a gaming community providing DOS configurations specific to classic and well-known games, and its focus on configuration interfaces specific to games’ needs.

Without that, it’s just another DOS environment.

dZ.

Skiphipdata

June 29, 2024 at 6:29 am

DOSBox actually does hugely simplify the task of configuring a game to run, compared to real legacy PC hardware, or a legacy hardware emulator such as 86Box. There is still custom configuration to be done for many games, but it’s nowhere near as much of a crapshoot with DOSBox. Consider the fact that when you start up DOSBox, all the essential drivers such as sound card, mouse, CD-ROM, EMS/XMS memory, etc. are already fully resident in upper memory, leaving well over 600K of conventional memory free without having to do any extra configuration. On a real DOS machine, that all has to be set up by hand, and even when loading drivers in upper memory, they still eat up some conventional memory. Different drivers and memory managers can use memory differently, and present unique compatibility issues with each other and with different games—creating a practically infinite number of possible permutations. DOSBox also provides a consistent set of emulated hardware, firmware, and resident drivers, rather than the patchwork of countless third-party vendor products that could’ve been installed in a real PC clone. DOSBox, though it has some complications of its own, is usually mercifully easy to configure and generous with memory by comparison.

Ish Ot Jr.

April 15, 2017 at 3:19 am

Thank you for this fantastic article! I can’t believe the nostalgia it made me feel for HIMEM.SYS and EMM386! It was really fun anticipating the evolution while reading, e.g. flashbacks to Wing Commander and XMS’ rise etc. followed shortly by your detailed recounting. Can’t wait to read your coverage of the joys of IRQ settings!!

Rusty

April 15, 2017 at 3:25 am

My apologies, but this is eating me up inside, I want to read the text on that list of cards so bad.

Do you have it in a higher resolution?

On the other hand it led to a very interesting google image search on “isa memory card”. Some of them are quite horrifying.

Jimmy Maher

April 15, 2017 at 6:28 am

https://archive.org/details/PC-Mag-1986-01-14

Page 120. ;)

Jubal

April 15, 2017 at 5:07 am

Pardon my ignorance here, as I pretty much entirely missed out on this era of computing – but why, given all these problems and endless kludgy workarounds, did Microsoft stick with the basic DOS concept for nearly two decades rather than coming up with something new that was back-compatible with older DOS software?

Jimmy Maher

April 15, 2017 at 6:47 am

Many people tried, including Microsoft with OS/2, but always with mixed results. A big piece of the problem was the sheer primitiveness of MS-DOS. It really was little more than a handful of functions for dealing with disk and file management; the name “*Disk* Operating System” is very appropriate. For everything else, you either banged on the hardware or used add-on device drivers.

A more modern operating system with multitasking wouldn’t be able to allow this sort of unfettered access for the reasons described in the article. This raises the question of what you *do* with all those programs trying to peek and poke at the lowest levels of the computer. About the only practical solution might be to emulate all that hardware in software — this is what Apple did when transitioning the Macintosh from a 68000-based architecture to the PowerPC — but this is *very* expensive in terms of processing power. On late 1980s/early 1990s hardware, you’d wind up with DOS programs running much, much slower under the new operating system than they had under vanilla DOS, and requiring vastly more memory to boot. Not, in other words, a good look for Wing Commander. Both applications software and games were exploding so fast in the demands they placed on the hardware during this period that there just wasn’t processing power to spare for this sort of exercise. Nowadays, with software not having increased its demands dramatically in at least a decade, it can be a little hard for us to relate to those crazier times.

That said, there were alternatives out there that managed in one way or another to achieve perhaps as much as 95 percent MS-DOS compatibility. But that last 5 percent was still a dealbreaker for many or most. Imagine if some obscure incompatibility kicked in only when you used some obscure function in Lotus 1-2-3 — or only on the last mission of Wing Commander.

Microsoft’s habit of playing dirty with anyone who got too close, thus threatening their burgeoning monopoly, didn’t help either. Look up the story of Dr. DOS sometime, the MS-DOS alternative that actually invented many of MS-DOS’s most important latter-day improvements, forcing Microsoft to play catch-up. Digital Research didn’t get rewarded terribly well for their innovations…

Alex Freeman

April 15, 2017 at 6:43 pm

Well, it’s actually DR-DOS (or DR DOS, without hyphen up to and including version 6.0), not Dr. DOS (which would be pronounced “Doctor DOS”), as DR stood for Digital Research. Speaking of which, you might find this Tech Tale interesting:

https://www.youtube.com/watch?v=hJNaAG2BXow&list=PLbBZM9aUMsjEVZPCDMl-lXOx50rSBNFQC&index=2

Felix

April 15, 2017 at 7:41 am

They did come up with something new. It was called Windows, and among other things it allowed for running DOS apps in a sort of virtual machine (albeit with a huge performance penalty, back in the day). A capability it retained all the way to Vista 32-bit, if I’m not mistaken.

But for the most part, Microsoft’s main interest was to retain their monopoly on the OS market. Which is why they sabotaged IBM’s efforts to get OS/2 off the ground — that, by the way, could also run DOS apps in a compatibility mode, while also providing multitasking and whatnot to apps written to take advantage of it.

MSDEV

April 15, 2017 at 12:38 pm

It is incorrect to say that MS sabotaged OS/2 or IBM. I have firsthand knowledge of this. MS was deeply dedicated to OS/2. Windows only succeeded where OS/2 didn’t because the team was vastly smaller than the OS/2 team, so didn’t have the organizational overhead. Certain developers went against management orders to add features like memory protection.

The quicker time to market that met user demand made Windows a success while OS/2 was mired in bugs and backwards compatibility problems.

Alex Freeman

April 15, 2017 at 6:44 pm

That’s funny. I remember OS/2’s main selling point being that it was less buggy and had better performance.

Eric

April 16, 2017 at 4:19 pm

Remember that VHS won over Betamax, despite Beta having the superior picture quality at the time. (And, indeed, lasted longer than VHS in a commercial setting) VHS won because they hit on what the public wanted much faster (tapes long enough to record a whole 2-hour movie, cheaper, etc) than Sony did with Beta. It’s not always the “better” solution that wins, as much as the one that hits the right checkpoints when it needs to.

And then once the number of users hits critical mass, it’s even harder to dislodge.

Alex Freeman

April 18, 2017 at 5:45 am

“Remember that VHS won over Betamax, despite…”

True, but I was replying to MSDEV’s post, which stated that OS/2 was mired in bugs. That’s contrary to what I remember reading about it.

_RGTech

April 15, 2025 at 10:35 pm

Well, both were quite buggy. The DOS-based Windows reached a reasonably stable environment only slowly, peaking with Windows 98 SE. OS/2 always hung behind in terms of the latest technology, and there were quite some FixPaks for bugs in every version. And do a search about the “Synchronous Input Queue”.

But the NT line (the one that’s dominating the PC world today) was everything that Microsoft imagined OS/2 to be, just freed from the restrictions by IBM (like targeting the 80286, or at first making it incompatible with existing Windows apps). And it was better (OS/2 did not have the rigid user account and rights management). That was only possible after the two companies broke up in 1990, because of the unexpected success of Windows 3.0.

@Felix: Well, real DOS compatibility ended with Windows Me (or 98 SE, as Me was a bit buggy on that side). NT (XP) never allowed direct hardware access, so it refused to run quite some older programs. But it always ran 16-bit programs (DOS or Windows) in its emulation layer, and that is true for all 32-bit versions (available until Windows 10!). The 64-bit versions only provide 32-bit emulation (since XP-64).

Francesco Rossi

April 15, 2017 at 6:36 am

I’m grateful to this site and the author of this article! Back in Milano, I was barely a teenager when Wing Commander 3 came out, and I was still running on a 486 DX 33. Minimum requirements for it were a DX2 66. Still, one could tweak the system enough to have the game loading. It of course took ages, but the real obstacle was still that 640k value. I solved it by loading a bootdisk with QEMM optimization on it. It gave out a whopping 634k free memory! It’s been nice to live again the feeling of those days. If it wasn’t for that I wouldn’t love tech as much as I do now.

Yeechang Lee

April 15, 2017 at 8:43 am

A 100% verifiable quote about memory from 1981:

“Old-Timers Claim IBM Entry Doesn’t Scare Them”, InfoWorld, 5 October 1981

Nate

April 21, 2017 at 5:15 pm

Markkula was a marketer, hence this is all spin to justify their then current product line. I doubt anyone at Apple believed that.

whomever

April 15, 2017 at 2:17 pm

By the way, are you going to write more about Wing Commander? I remember it being a Big Deal when it came out, something to show our Amiga-owning friends and say, “Look! We can game on the IBM too!” And the music and etc. Ignoring the, ah, Star Wars physics, I actually think it’s aged very well. I pulled it out a couple of years ago, dug up the Roland MT32 (which DosBos supports!) and had a bunch of fun.

Jimmy Maher

April 15, 2017 at 4:15 pm

Yes, I have two big articles coming on Wing Commander. It was a hugely, hugely important game.

whomever

April 15, 2017 at 4:38 pm

Oooh! Can’t wait! Your blog is one of the very few I get really excited about when I see it pop up on my RSS feed. Also obviously I mistyped dosbox there (Grr, can’t edit comments etc).

Jim Leonard

April 15, 2017 at 4:05 pm

Your description of XMS is somewhat incomplete. XMS works by copying blocks of memory between the 0-1MB space and the extended memory space. You wrote “perform whatever data access was requested”, but that’s ambiguous and not how applications used XMS. With XMS, you only perform a call to copy a block of memory to/from the extended memory space to/from the 0-640k space. XMS does not allow real-mode applications to access extended memory directly.

This means your passage “It was a little easier and faster to work with than what had come before” is also not entirely accurate — XMS was slower in practice than EMS in all implementations. EMS may only allow you to access 64K at a time through the page frame, but switching what the frame referred to was much faster than XMS copying memory around. This is why Wing Commander could only work with EMS, because it needed that speed during the game’s spaceflight scenes.
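To make the contrast concrete, here is a minimal Python sketch of the two access patterns. It is only a toy model, not real driver code: the class and method names are invented for illustration, and the 16 KB page size (four pages to the 64K frame) is assumed from the EMS layout. The point it tries to show is that remapping an EMS page moves no data, while every XMS access pays for a block copy.

```python
PAGE = 16 * 1024      # assumed EMS logical page size: 16 KB
FRAME_SLOTS = 4       # 4 slots x 16 KB = the 64K page frame


class EmsSketch:
    """Toy model of EMS bank switching (hypothetical names)."""

    def __init__(self, pages):
        self.store = [bytearray(PAGE) for _ in range(pages)]
        self.frame = [None] * FRAME_SLOTS   # logical page visible in each slot
        self.bytes_copied = 0               # never incremented: mapping copies nothing

    def map_page(self, slot, page):
        # Only the bookkeeping changes; no bytes move.
        self.frame[slot] = page

    def window(self, slot):
        # The program reads and writes the mapped page in place.
        return self.store[self.frame[slot]]


class XmsSketch:
    """Toy model of XMS: every access is an explicit block copy."""

    def __init__(self, size):
        self.extended = bytearray(size)
        self.bytes_copied = 0

    def copy_in(self, offset, length):
        # Extended memory -> a buffer in conventional memory.
        self.bytes_copied += length
        return bytearray(self.extended[offset:offset + length])

    def copy_out(self, offset, buf):
        # Conventional-memory buffer -> extended memory.
        self.bytes_copied += len(buf)
        self.extended[offset:offset + len(buf)] = buf


# Touch one byte in "page 5" under each scheme.
ems = EmsSketch(pages=8)
ems.map_page(0, 5)
ems.window(0)[0] = 0xAB

xms = XmsSketch(8 * PAGE)
buf = xms.copy_in(5 * PAGE, PAGE)
buf[0] = 0xAB
xms.copy_out(5 * PAGE, buf)
```

After that single-byte update, the EMS model has copied nothing, while the XMS model has moved a full page in each direction. That is the overhead a game would feel in a tight loop.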

I have programmed both of these specifications, and can cover the above in more detail over a side channel if you need further clarification. Other than the above, this is a well-written article, as always. Keep up the good work.

Jimmy Maher

April 15, 2017 at 4:28 pm

Thanks. I don’t want to get *too* far down in the weeds on some of this stuff, but made a few edits to that paragraph to clarify.

Peter Ferrie

April 15, 2017 at 9:10 pm

The XMS driver can copy between extended memory regions, too. It worked by entering “unreal” mode to access all 4 GB directly – another undocumented feature of Intel’s hardware.

Nate

April 21, 2017 at 5:17 pm

I believe that’s also called “real big” mode, from my BIOS/ACPI days.

P.S. Hi Peter, long time no chat

Brad Spencer

April 15, 2017 at 5:21 pm

It’s several decades too late for this advice to be useful :), but my friends and I simply made many copies of autoexec.bat and config.sys named things like “autoexec.dom” for Doom and “config.wc” for Wing Commander, etc. Then we had batch files that would present menus of which game you wanted. Running 1.bat to select the first game on the menu would just rename the appropriate pair of autoexec- and config-prefixed files and reboot. Then you could optimize per game. No CD needed for Doom? Don’t load the MSCDEX (or whatever it was called) driver, and so on. Another advantage was that you could, as the last step of autoexec.bat (whichever one had been renamed into place before the reboot), rename your “normal” autoexec.bat and config.sys back into place and then launch the game.
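For anyone who wants to see the mechanics, the swap can be modeled roughly like this. It is a Python sketch rather than the original batch files, which are long gone, so every name here is hypothetical: the `.std` extension for the everyday pair, the file contents, and the `select_profile` helper. It also copies rather than renames, so that the per-game files survive the swap.

```python
import shutil
import tempfile
from pathlib import Path


def select_profile(root: Path, ext: str) -> None:
    """Put autoexec.<ext> and config.<ext> in place as the live boot files."""
    shutil.copyfile(root / f"autoexec.{ext}", root / "autoexec.bat")
    shutil.copyfile(root / f"config.{ext}", root / "config.sys")
    # On a real DOS machine, the menu batch file would reboot at this point.


def demo() -> tuple[str, str]:
    """Swap in a Doom profile, then restore the everyday one, in a temp dir."""
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        # Hypothetical profile contents, for illustration only.
        (root / "autoexec.dom").write_text("REM Doom: skip the CD-ROM driver\n")
        (root / "config.dom").write_text("DOS=HIGH,UMB\n")
        (root / "autoexec.std").write_text("REM everyday setup\n")
        (root / "config.std").write_text("FILES=30\n")

        select_profile(root, "dom")      # what 1.bat would do before rebooting
        game_boot = (root / "autoexec.bat").read_text()

        select_profile(root, "std")      # the "put normal files back" step
        normal_boot = (root / "autoexec.bat").read_text()
        return game_boot, normal_boot
```

Calling `demo()` returns the contents of `autoexec.bat` as seen at the game boot and again after the normal pair has been put back.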

So, for us, the pain was one time, and encapsulated. But it does highlight how much of a tinkerer’s mindset you needed to play games back in that era.

FWIW, my friends and I all “knew” that protected mode games were better and then XMS and only as a last resort would we use EMS (which in the early 1990s we considered to be the ancient way to extend memory). Or at least that’s how we felt about it ;)

Alex Freeman

April 15, 2017 at 7:43 pm

Hoo boy! This brings back memories for me. I even remember DOS=HIGH,UMB. My family got its first computer in 1989 for my dad’s work, and it was a PC/AT 80286 with 512k of RAM and 10 MB of hard disk space. It had DOS and a Hercules monitor too. I remember thinking the games were primitive compared to the games in the arcades, but I never thought DOS was primitive as it was really all I knew. I had no idea how behind the times our PC was.

When a friend of mine from junior high recommended Ultima Underworld to me in 1996, that was MY introduction to all this kludginess. By then, we had our second computer, a 486 with Windows 3.1. Getting UU to run on that required using a bootable floppy and tweaking autoexec.bat and config.sys on it. The manual had sample configurations for both, but they didn’t work. Figuring it all out was like a puzzle one might find in a computer game. I felt great satisfaction when I FINALLY figured out how to make it run.

Unfortunately, the sound wouldn’t work unless you first went into Windows and THEN exited to DOS. Since I couldn’t get into Windows from the bootable floppy, I had to play with no sound. However, I did learn a great deal about how batch files work. It probably enhanced my overall understanding of DOS too.

I remember that sort of thing only ever being a problem for Origin’s games, though. Other DOS games ran (mostly) fine with no tweaking. I later read about Origin’s unorthodox approach to programming DOS games and wondered why they’d do it that way when it clearly caused so many problems for its customers. Now I can see it was trying to push the limits of the PC.

Brian

April 16, 2017 at 1:12 am

Yep… as I noted with the Voodoo memory post above, Origin did its darndest to drive us poor DOS users cray cray!

Ben P. Stein

April 15, 2017 at 10:32 pm

Thank you so much for explaining the 640 K memory issue in patient, step-by-step, understandable terms. This kind of fundamental information is really important to me as I learn about the history of computer games. Instead of just hearing about the 640 K barrier, I now have some level of understanding about what it really meant for older computer games on IBM and compatible PCs.

Andrew Dalke

April 15, 2017 at 10:56 pm

I’ve found that one way to understand ‘what a huge figure 1 MB really was when they released the machine in 1981’ is to look at memory prices. http://www.jcmit.com/memoryprice.htm (archived at https://web-beta.archive.org/web/20170108175446/http://www.jcmit.com/memoryprice.htm ) lists a price of $4,479/MB for that time. That’s about $12,000 once adjusted for inflation, and about 3x the base price of the PC.
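The arithmetic behind those figures can be checked in a few lines of Python. This is a rough sketch: the $4,479/MB and $12,000 numbers are the ones quoted above, while the $1,565 base price is my own assumption (the launch price of the cheapest IBM PC configuration) and is not taken from the linked table.

```python
PRICE_PER_MB_1981 = 4479.0     # USD per MB, as quoted from the price list
ADJUSTED_PER_MB = 12000.0      # USD, the rough inflation-adjusted figure quoted above
BASE_PC_PRICE_1981 = 1565.0    # USD, ASSUMPTION: cheapest IBM PC configuration at launch

# Inflation multiplier implied by the two quoted figures (roughly 2.7x).
inflation_factor = ADJUSTED_PER_MB / PRICE_PER_MB_1981

# How many base PCs one megabyte of RAM would have bought (just under 3).
mb_to_pc_ratio = PRICE_PER_MB_1981 / BASE_PC_PRICE_1981

# Cost in 1981 dollars of filling the whole 640 K of conventional memory.
cost_640k_1981 = PRICE_PER_MB_1981 * 640 / 1024

print(f"implied inflation factor: {inflation_factor:.2f}")
print(f"1 MB vs. base PC price:   {mb_to_pc_ratio:.2f}x")
print(f"640 K in 1981 dollars:    ${cost_640k_1981:,.2f}")
```

Under those assumptions, even the 640 K that later felt so cramped would have cost well over the price of the base machine itself.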

Patrick Fleck

April 16, 2017 at 2:38 pm

Hey David, Very interesting article, but how come all your programmers are female? To me that’s just as sexist as making them all male. Don’t be so pc…

GeoX

April 22, 2017 at 12:28 pm

Somehow, it’s perfect that a lunkheaded comment like this wouldn’t even get the author’s name right.

Nate

April 22, 2017 at 10:44 pm

I agree. Let’s right this wrong, just as soon as 50.0001% of programmers are female.

Appendix H

April 16, 2017 at 7:57 pm