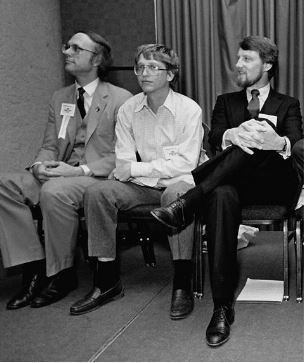

From left, Dan Fylstra of VisiCorp, Bill Gates of Microsoft, and Gary Kildall of Digital Research in 1984. As usual, Gates looks rumpled, high-strung, and vaguely tortured, while Kildall looks polished, relaxed, and self-assured. (Which of these men would you rather chat with at a party?) Pictures like these perhaps reveal one of the key reasons that Gates consistently won against more naturally charismatic characters like Kildall: he personally needed to win in ways that they did not.

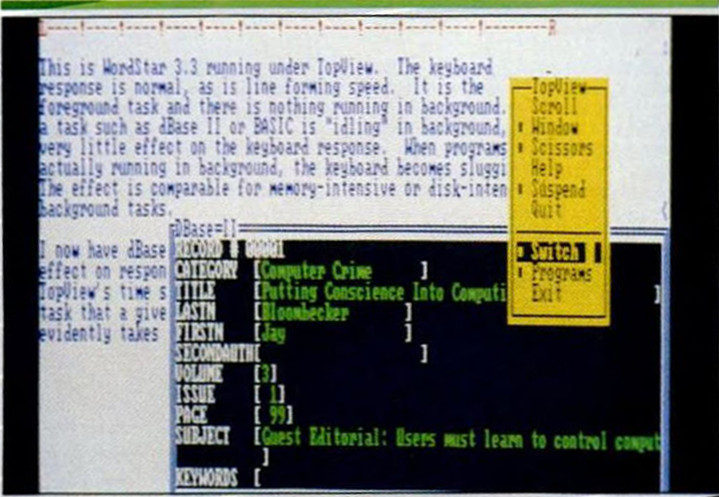

In the interest of clarity and concision, I’ve restricted this series of articles about non-Apple GUI environments to the efforts of Microsoft and IBM, making an exception to that rule only for VisiCorp’s Visi On, the very first product of its type. But, as I have managed to acknowledge in passing, those GUIs hardly constituted the sum total of the computer industry’s efforts in this direction. Among the more impressive and prominent of what we might label the alternative MS-DOS GUIs was a product from none other than Gary Kildall and Digital Research — yes, the very folks whom Bill Gates once so slyly fleeced out of a contract to provide the operating system for the first IBM PC.

To his immense credit, Kildall didn’t let the loss of that once-in-a-lifetime opportunity get him down for very long. Digital Research accepted the new MS-DOS-dominated order with remarkable alacrity, and set about making the best of things by publishing system software, such as the multitasking Concurrent DOS, which tried to do the delicate dance of improving on MS-DOS while maintaining compatibility. In the same spirit, they made a GUI of their own, called the “Graphics Environment Manager” — GEM.

After futzing around with various approaches, the GEM team found their muse on the day in early 1984 when team-member Darrell Miller took Apple’s new Macintosh home to show his wife: “Her eyes got big and round, and she hates computers. If the Macintosh gets that kind of reaction out of her, this is powerful.” Miller is blunt about what happened next: “We copied it exactly.” When they brought their MacOS clone to the Fall 1984 Comdex, Steve Jobs expressed nothing but approbation. “You did a great job!” he said. No one from Apple seemed the slightest bit concerned at this stage about the resemblance to the Macintosh, and GEM hit store shelves the following spring as by far the most elegant and usable MS-DOS GUI yet.

A few months later, though, Apple started singing a very different tune. In the summer of 1985, they sent a legal threat to Digital Research which included a detailed list of all the ways that they believed GEM infringed on their MacOS copyrights. Having neither the stomach nor the cash for an extended court battle and fearing a preliminary injunction which might force them to withdraw GEM from the market entirely, Digital Research caved without a fight. They signed an agreement to replace the current version of GEM with a new one by November 15, doing away with such distinctive and allegedly copyright-protected Macintosh attributes as “the trash-can icon, the disk icons, and the close-window button in the upper-left-hand corner of a window.” They also agreed to an “undisclosed monetary settlement,” and to “provide programming services to Apple at a reduced rate.”

Any chance GEM might have had to break through the crowded field of MS-DOS GUIs was undone by these events. Most of the third-party developers Digital Research so desperately needed were unnerved by the episode, abandoning any plans they might have hatched to make native GEM applications. And so GEM, despite being vastly more usable than the contemporaneous Microsoft Windows even in its somewhat bowdlerized post-agreement form, would go on to become just another also-ran in the GUI race.[1]

[1] Reworked to run on the 68000 architecture, GEM would enjoy some degree of sustained success in another realm: not as an MS-DOS-hosted GUI but as the GUI hosted in the Atari ST’s ROM. In this form, it would survive well into the 1990s.

For the industry at large, the GEM smackdown was most significant as a sign of changing power structures inside Apple — changes which carried with them a new determination that others shouldn’t be allowed to rip off all of the Mac’s innovations. The former Pepsi marketing manager John Sculley was in the ascendant at Apple by the summer of 1985, Steve Jobs already being eased out the door. Sculley had been taught by the Cola Wars that a product’s secret formula was everything, and had to be protected at all costs. And the Macintosh’s secret formula was its beautiful interface; without it, the Mac was just an overpriced chunk of workmanlike hardware — a bad joke when set next to a better, cheaper Motorola 68000-based computer like the new Commodore Amiga. The complaint against Digital Research was a warning shot to an industry that Sculley believed had gotten far too casual about throwing around phrases like “Mac-like.” “Apple is going after everybody,” warned one fearful software executive to the press. The relationship between Microsoft and Apple in particular was about to get a whole lot more complicated.

Said relationship had been a generally good one during the years when Steve Jobs was calling many of Apple’s shots. Jobs and Bill Gates, dramatically divergent in countless ways but equally ambitious, shared a certain esprit de corps born of having been a part of the microcomputer industry since before there was a microcomputer industry. Jobs genuinely appreciated his counterpart’s refusal to frame business computing as a zero-sum game between the Macintosh and the MS-DOS standard, even when provoked by agitprop like Apple’s famous “1984” Super Bowl advertisement. Instead Gates, contrary to his established popular reputation as the ultimate zero-sum business warrior, supported Apple’s efforts as well as IBM’s with real enthusiasm: signing up to produce Macintosh software two full years before the finished Mac was released, standing at Jobs’s side when Apple made major announcements, coming to trade shows conspicuously sporting a Macintosh tee-shirt. All indications are that the two truly liked and respected one another. For all that Apple and Microsoft through much of these two men’s long careers would be cast as the yin and yang of personal computing — two religions engaged in the most righteous of holy wars — they would have surprisingly few negative words to say about one another personally down through the years.

But when Steve Jobs decided or was forced to submit his resignation letter to Apple on September 17, 1985, trouble for Microsoft was bound to follow. John Sculley, the man now charged with cleaning up the mess Jobs had supposedly made of the Macintosh, enjoyed nothing like the same camaraderie with Bill Gates. He and his management team were openly suspicious of Microsoft, whose Windows was already circulating widely in beta form. Gates and others at Microsoft had gone on the record repeatedly saying they intended for Windows and the Macintosh to be sufficiently similar that they and other software developers would be able to port applications in short order between the two. Few prospects could have sounded less appealing to Sculley. Apple, whose products then as now enjoyed the highest profit margins in the industry thanks to their allure as computing’s hippest luxury brand, could see their whole business model undone by the appearance of cheap commodity clones that had been transformed by the addition of Windows into Mac-alikes. Of course, one look at Windows as it actually existed in 1985 could have disabused Sculley of the notion that it was likely to win any converts among people who had so much as glanced at MacOS. Still, he wasn’t happy about the idea of the Macintosh losing its status, now or in the future, as the only GUI environment that could serve as a true, comprehensive solution to all of one’s computing needs. So, within weeks of Jobs’s departure, feeling his oats after having so thoroughly cowed Digital Research, he threatened to sue Microsoft as well for copying the “look and feel” of the Macintosh in Windows.

He really ought to have thought things through a bit more before doing so. Threatening Bill Gates was always a dangerous game to play, and it was sheer folly when Gates had the upper hand, as he largely did now. Apple was at their lowest ebb of the 1980s when they tried to tell Microsoft that Windows would have to be cancelled or radically redesigned to excise any and all similarities to the Macintosh. Sales of the Mac had fallen to some 20,000 units per month, about one-fifth of Apple’s pre-launch projections for this point. The stream of early adopters with sufficient disposable income to afford the pricey gadget had ebbed away, and other potential buyers had started asking what you could really do with a Macintosh that justified paying two or three times as much for it as for an equivalent MS-DOS-based computer. Aldus PageMaker, the first desktop-publishing package for the Mac, had been released that summer, and would eventually go down in history as the product that, when combined with the Apple LaserWriter printer, saved the platform by providing a usage scenario that ugly old MS-DOS clearly, obviously couldn’t duplicate. But the desktop-publishing revolution would take time to show its full import. In the meantime, Apple was hard-pressed, and needed Microsoft — one of the few major publishers of business software actively supporting the Mac — far too badly to go around issuing threats to them.

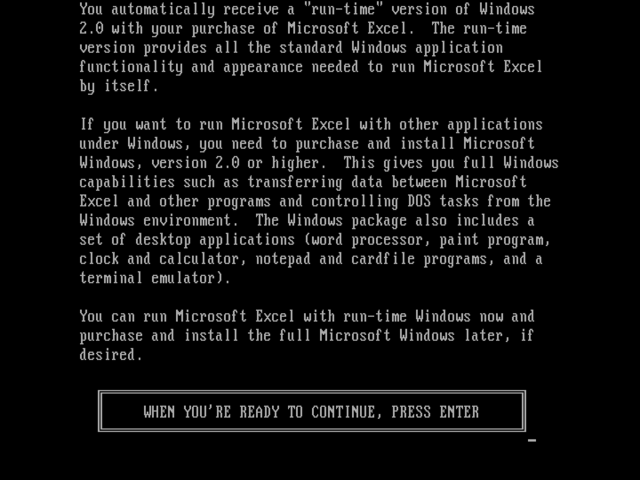

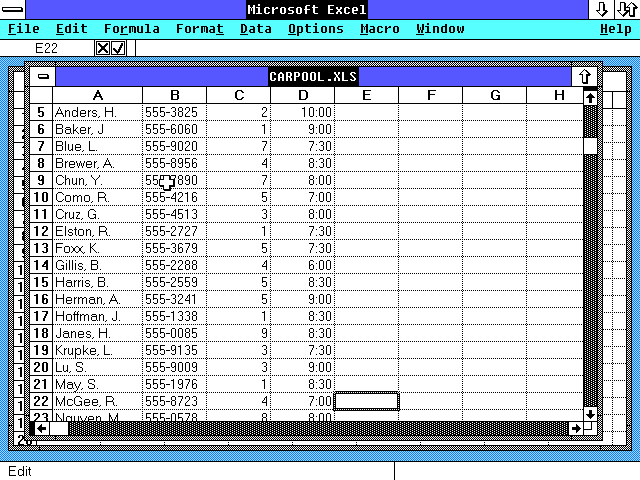

Gates responded to Sculley’s threat with several of his own. If Sculley followed through with a lawsuit, Gates said, he’d stop all work at Microsoft on applications for the Macintosh and withdraw those that were already on store shelves, treating business computing henceforward as exactly the zero-sum game which he had never believed it to be in the past. This was a particularly potent threat given Microsoft’s new Excel spreadsheet, which had just been released to rave reviews and already looked likely to join PageMaker as the leading light among the second generation of Mac applications. In light of the machine’s marketplace travails, Apple was in no position to toss aside a sales driver like that one, the first piece of everyday Mac business software that was not just as good as but in many ways quite clearly better than equivalent offerings for MS-DOS. Yet Gates wouldn’t stop there. He would also, he said, refuse to renew Apple’s license to use Microsoft’s BASIC on their Apple II line of computers. This was a serious threat indeed, given that the aged Apple II line was the only thing keeping Apple as a whole afloat as the newer, sexier Macintosh foundered. Duly chastised, Apple backed down quickly — whereupon Gates, smelling blood in the water, pressed his advantage relentlessly, determined to see what else he could get out of finishing the fight Sculley had so foolishly begun.

One ongoing source of frustration between the two companies, dating back well into the days of Steve Jobs’s power and glory, was the version of BASIC for the Mac which Microsoft had made available for purchase on the day the machine first shipped. In the eyes of Apple and most of their customers, the mere fact of its existence on a platform that wasn’t replete with accessible programming environments was its only virtue. In practice, it didn’t work all that differently from Microsoft’s Apple II BASIC, offering almost no access to the very things which made the Macintosh the Macintosh, like menus, windows, and dialogs. A second release a year later had improved matters somewhat, but nowhere near enough in most people’s view. So, Apple had started work on a BASIC of their own, to be called simply MacBASIC, to supersede Microsoft’s. Microsoft BASIC for the Macintosh was hardly a major pillar of Microsoft’s finances, but Bill Gates was nevertheless bothered inordinately by the prospect of it being cast aside. “Essentially, since Microsoft started their company with BASIC, they felt proprietary towards it,” speculates Andy Hertzfeld, one of the most important of the Macintosh software engineers. “They felt threatened by Apple’s BASIC, which was a considerably better implementation than theirs.” Gates said that Apple would have to kill their own version of BASIC and — just to add salt to the wound — sign over the name “MacBASIC” to Microsoft if they wished to retain the latter’s services as a Mac application developer and keep Microsoft BASIC on the Apple II.

And that wasn’t even the worst form taken by Gates’s escalation. Apple would also have to sign what amounted to a surrender document, granting Microsoft the right to create “derivative works of the visual displays generated by Apple’s Lisa and Macintosh graphic-user-interface programs.” The specific “derivative works” covered by the agreement were the user interfaces already found in Microsoft Windows for MS-DOS and five Microsoft applications for the Macintosh, including Word and Excel. The agreement provided Microsoft with nothing less than a “non-exclusive, worldwide, royalty-free, perpetual, non-transferable license to use those derivative works in present and future software programs, and to license them to and through third parties for use in their software programs.” In return, Microsoft would promise only to support Word and Excel on the Mac until October 1, 1986 — something they would certainly have done anyway. Gates was making another of those deviously brilliant tactical moves that were already establishing his reputation as the computer industry’s most infamous villain. Rather than denying that a “visual display” could fall under the domain of copyright, as many might have been tempted to do, he would rather affirm the possibility while getting Apple to grant Microsoft an explicit exception to being bound by it. Thus Apple — or, for that matter, Microsoft — could continue to sue MacOS’s — and potentially Windows’s — competitors out of existence while Windows trundled on unmolested.

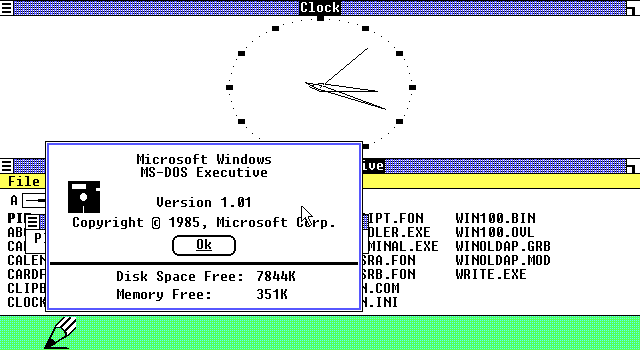

Sculley called together his management team to discuss what to do about this Apple threat against Microsoft that had suddenly boomeranged into a Microsoft threat against Apple. Most at the meeting insisted that Gates had to be bluffing, that he would never cut off several extant revenue streams just to spite Apple and support this long-overdue Windows product of his which had been an industry laughingstock for so long. But Sculley wasn’t sure; he kept coming back to the fact that Microsoft could undoubtedly survive without Apple, but Apple might not be able to survive without Microsoft — at least not right now, given the Mac’s current travails. “I’m not ready to bloody the company,” he said, and signed the surrender document two days after Windows 1.01 first appeared in its boxed form at the Fall 1985 Comdex show’s Microsoft Roast. His tone toward Gates now verged on pleading: “What I’m really asking for, Bill, is a good relationship. I’m glad to give you the rights to this stuff.”

After the full scale of what John Sculley had given away to Bill Gates became clear, Apple fans started drawing pointed comparisons between Sculley and Neville Chamberlain. As it happened, Sculley’s version of “peace for our time” would last scarcely longer than Chamberlain’s. And as for Gates… well, plenty of Apple fans would indeed soon be calling him the Adolf Hitler of the computer industry, amid much other overheated rhetoric.

Bill Gates wrote a jubilant email to eleven colleagues at Microsoft’s partner companies, saying that he had “received a release from Apple for any possible copyright, trade-secret, or patent issue relating to our products, including Windows.” The people at Apple were less jubilant. “Everyone was somewhat disgusted over [the agreement],” remembers Donn Denman, the chief programmer of Apple’s much superior but shitcanned MacBASIC. Sculley could only say to him and his colleagues that “it was the right decision for the company. It was a business decision.” They didn’t find him very convincing. The bad feelings engendered by the agreement would never entirely go away, and the relationship between Apple and Microsoft would never be quite the same again — not even when Excel became one of the prime drivers of something of a Macintosh Renaissance in the following year.

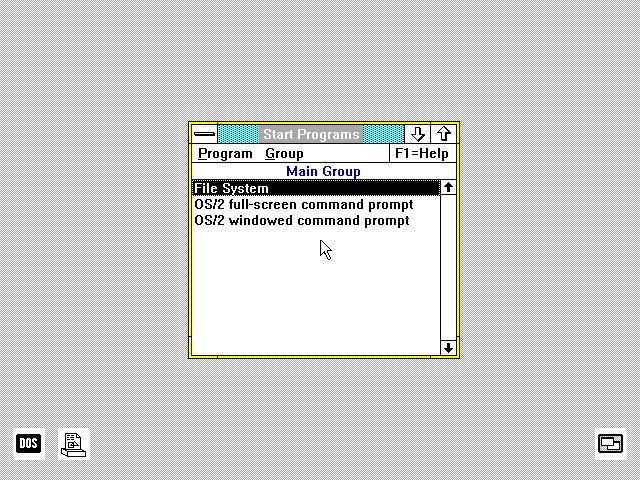

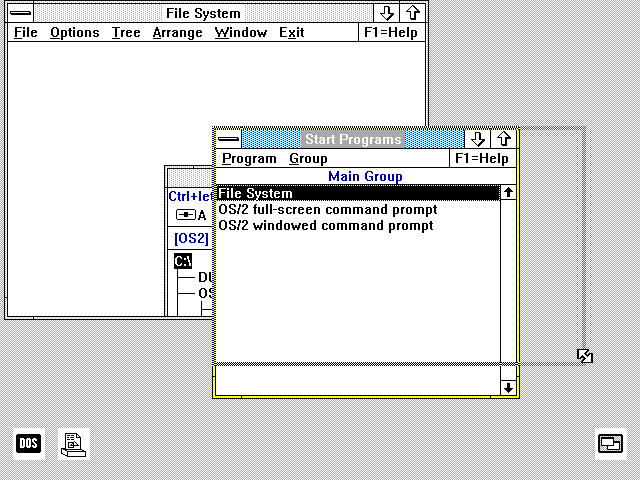

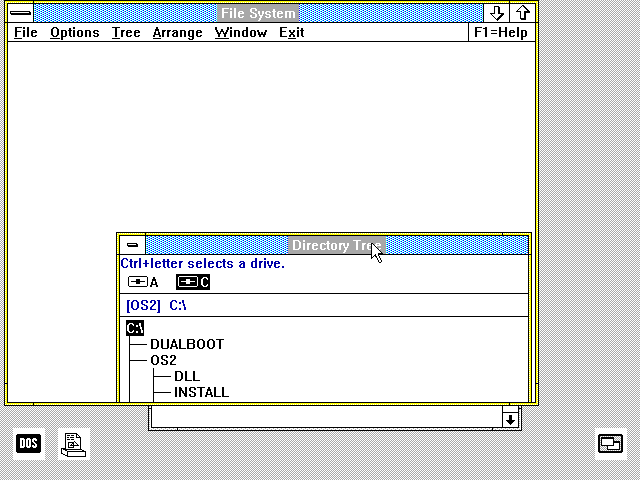

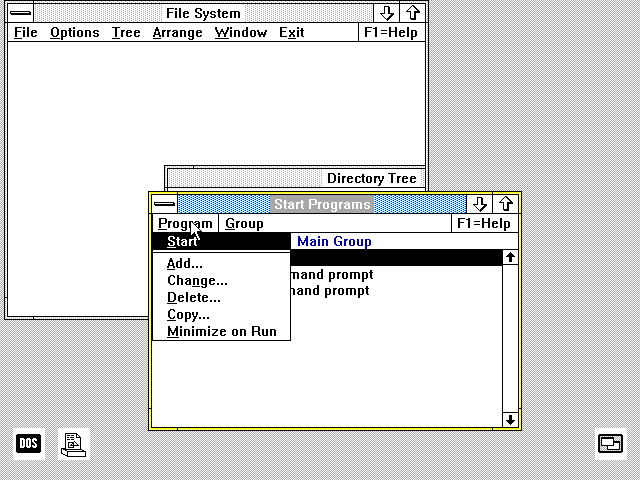

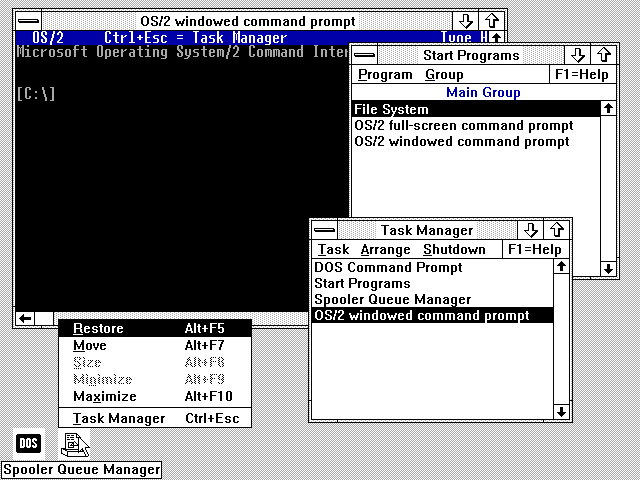

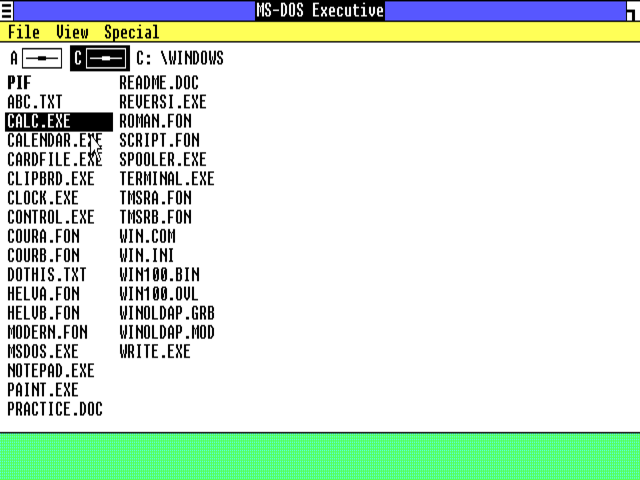

We jump forward now to March 17, 1988, by which time the industry had changed considerably. Microsoft was still entangled with IBM in the development of OS/2 and its Presentation Manager, but was also continuing to push Windows, which had come out in a substantially revised version 2 a few months earlier. The Macintosh, meanwhile, had carved out a reasonable niche for itself as a tool for publishers and creative professionals of various stripes, even as the larger world of business-focused personal computing continued to run on MS-DOS.

Sitting in his office that day, Bill Gates agreed to take a call from a prominent technology journalist, who asked him if he had a comment to make about the new lawsuit from Apple against Microsoft. “Lawsuit? What lawsuit?” Gates asked. He had just met with Sculley the day before to discuss Microsoft’s latest Mac applications. “He never mentioned it to me. Not one word,” said Gates to the reporter on the other end of the line.

Sculley, it seemed, had decided not to risk losing his nerve again. Apple had gone straight to filing their lawsuit in court, without giving Microsoft so much as a warning, much less a chance to negotiate a remedy.[2] It appeared that the latest version of Microsoft’s GUI environment for MS-DOS, which with its free-dragging and overlapping windows hewed much closer to the Macintosh than its predecessor, had both scared and enraged Sculley to such an extent that he had judged this declaration of war to be his only option. “Windows 2 is an unconscionable ripoff of MacOS,” claimed Apple. They demanded $50,000 per infringement per unit of Windows sold — adding up to a downright laughable total of $4.5 billion by their current best estimate — and the “impoundment and destruction” of all extant or future copies of Windows. Microsoft replied that Apple had signed over the rights to the Mac’s “visual displays” for use in Windows in 1985, and, even if they hadn’t, such things weren’t really copyrightable anyway.

[2] Apple sued Hewlett-Packard at the same time, for an application called NewWave which ran on top of Windows and provided many of the Mac-like features, such as icons representing programs and disks and a desktop workspace, which Windows 2 alone still lacked. But that lawsuit would always remain a sideshow in comparison to the main event to whose fate its own must inevitably be tied. So, in the interest of that aforementioned clarity and concision, we won’t concern ourselves with it here.

So, who had the right of this thing? As one might expect, the answer to that question is far more nuanced than the arguments which either side would present in court. Writing years after the lawsuit had passed into history but repeating the arguments he had once made in court, Tandy Trower, the Windows project leader at Microsoft from 1985 to 1988, stated that “the allegation clearly had no merit as I had never intended to copy the Macintosh interface, was never given any directive to do that, and never directed my team to do that. The similarities between the two products were largely due to the fact that both Windows and Macintosh had common ancestors, that being many of the earlier windowing systems, such as those like Alto and Star that were created at Xerox PARC.” This is, to put it bluntly, nonsense. To deny the massive influence of the Macintosh on Windows is well-nigh absurd — although, I should be careful to say, I have no reason to believe that Trower makes his absurd argument out of anything but ignorance here. By the time he arrived on the Windows team, Apple’s implementation of the GUI had already been so thoroughly internalized by the industry in general that the huge strides it had made over the Xerox PARC model were being forgotten, and the profoundly incorrect and unfair meme that Apple had simply copied Xerox’s work and called it a day was already taking hold.

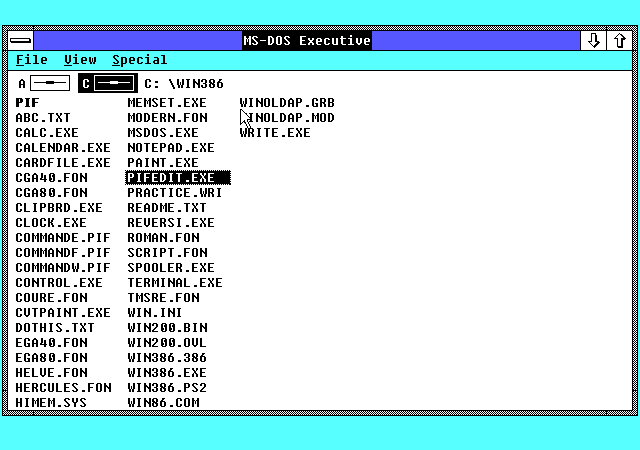

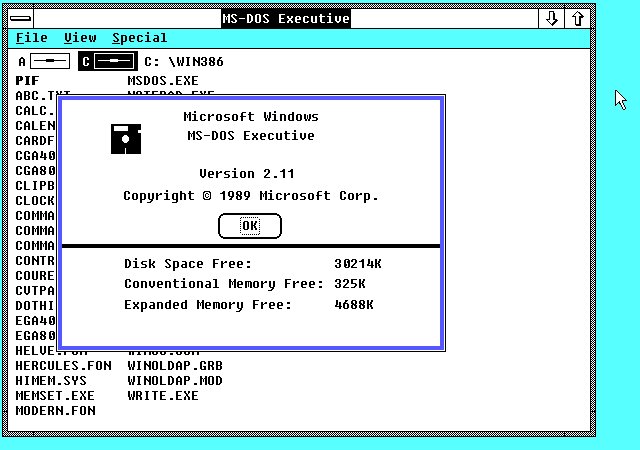

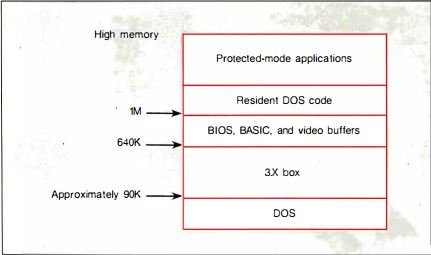

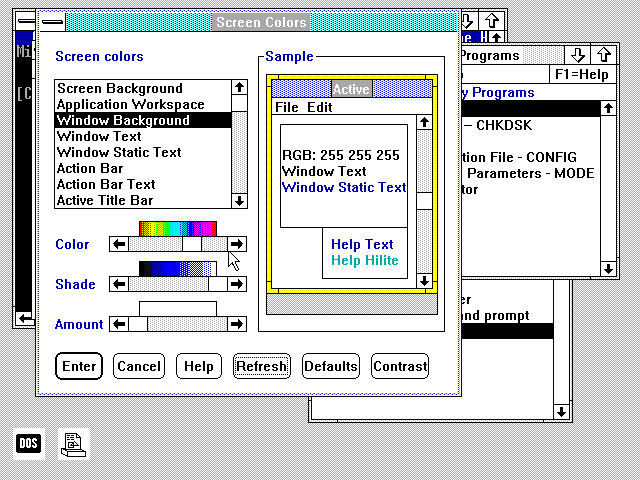

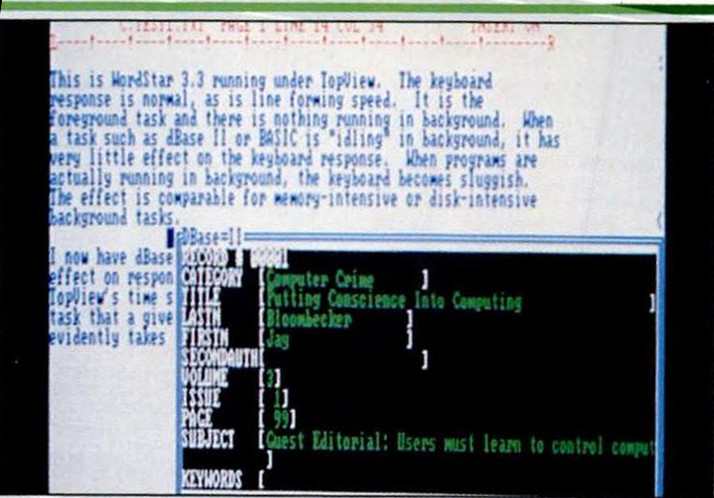

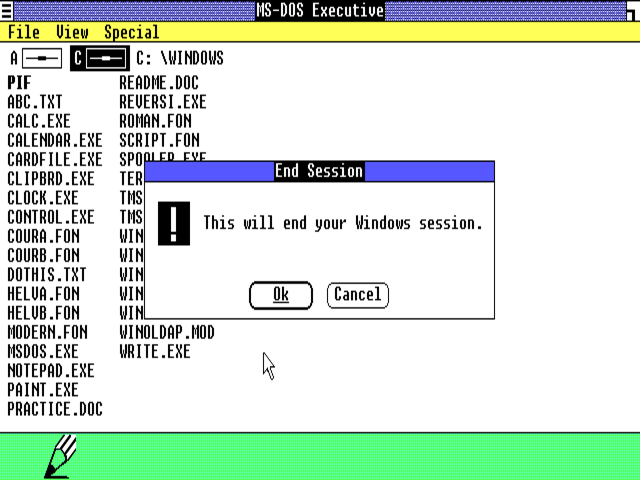

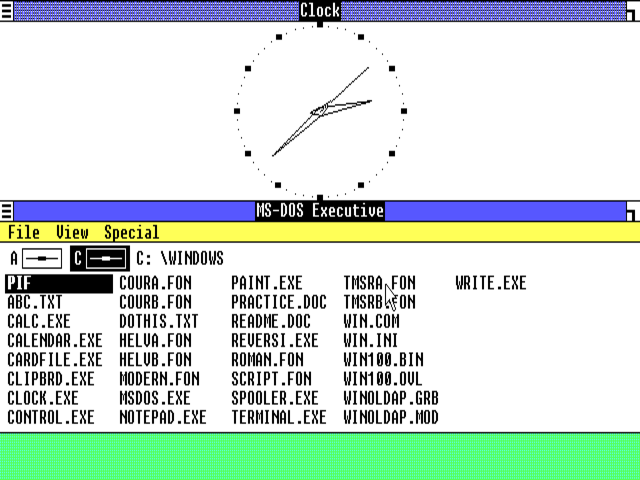

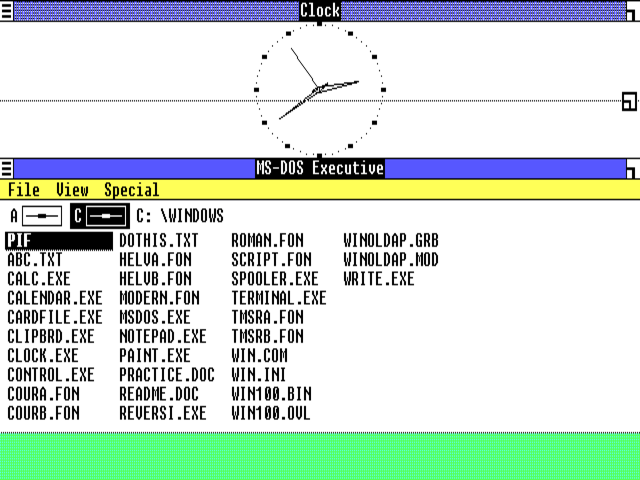

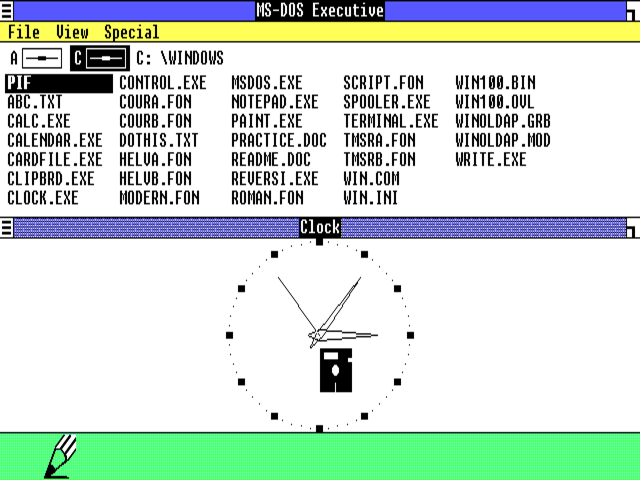

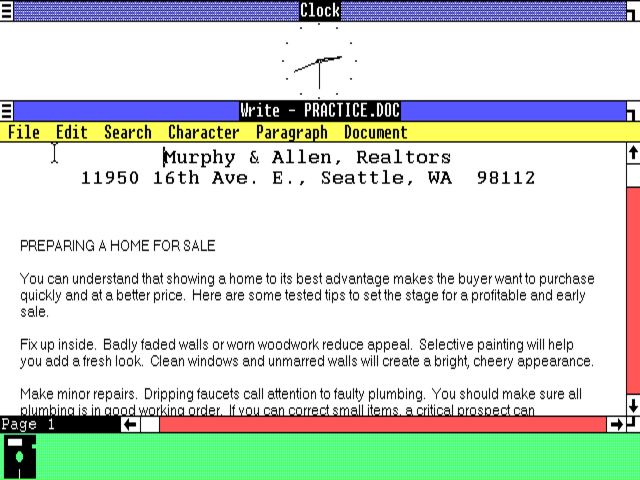

The people at Xerox PARC had indeed originated the idea of the GUI, but, as playing with a Xerox Alto emulator will quickly reveal, hadn’t been able to take it anywhere close to the Macintosh’s place of elegant, intuitive usability. By the time the Xerox GUI made its one appearance as a commercial product, in the form of the Xerox Star office system, it had actually regressed in at least one way even as it progressed in many others: overlapping windows, which had been possible in Xerox PARC’s Smalltalk environment, were not allowed on the Star. Tellingly, the aspect of Windows 1 which attracted the most derision back in the day, and which still makes it appear so hapless today, is a similar rigid system of tiled windows. (The presence of this retrograde-seeming element was largely thanks to Scott MacGregor, who arrived at Microsoft to guide the Windows project after having been one of the major architects of the Star.) Meanwhile, as I also noted in my little tour of Windows 1 in a previous article, many of those aspects of it which do manage to feel natural and intuitive today — such as the drop-down menus — are those that work more like the Macintosh than anything developed at Xerox PARC. In light of this reality, Microsoft’s GUI would only hew closer to the Mac model as time went on, for the incontrovertible reason that the Mac model was just better for getting real stuff done in the real world.

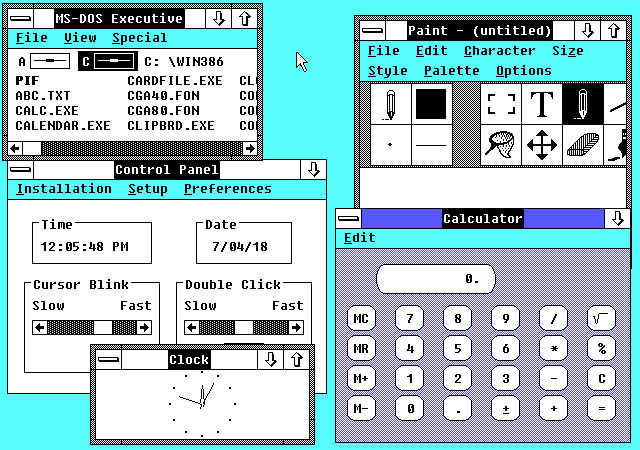

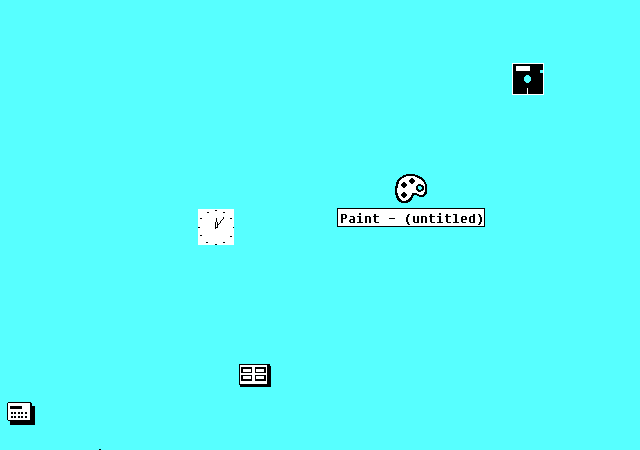

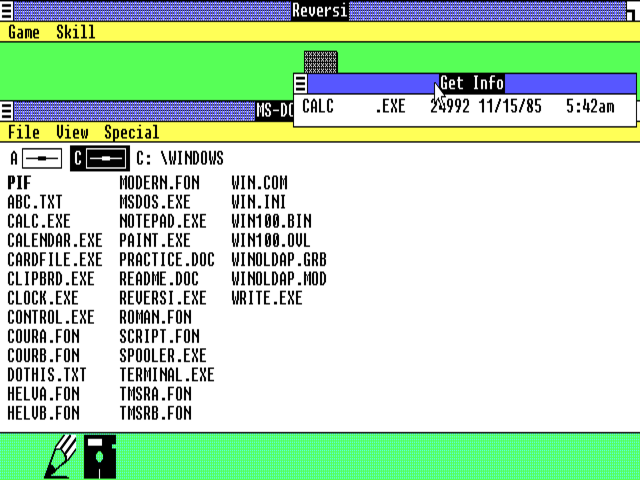

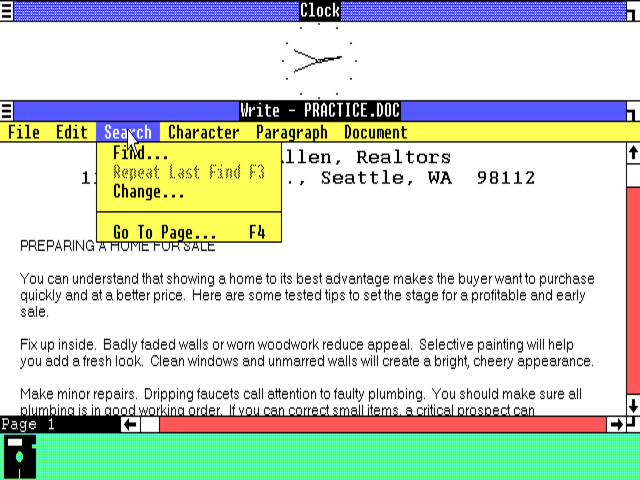

And there are plenty of other disconcerting points of similarity between early versions of MacOS and early versions of Windows. Right from the beginning, Windows 1 shipped with a suite of applets — a calculator, a “control panel” for system settings, a text editor that went by the name of “notepad,” etc. — that were strikingly similar to those included in MacOS. Further, if other members of the Windows team itself are to be believed, Microsoft’s Neil Konzen, a programmer intimately familiar with the Macintosh, duplicated some of MacOS’s internal structures so closely as to introduce some of the same bugs. In short, to believe that the Macintosh wasn’t the most important influence on the Windows user interface by far, given not only the similarities in the finished product but the knowledge that Microsoft had been working daily with the evolving Macintosh since January of 1982, is to actively deny reality out of either ignorance or some ulterior motive.

Which isn’t to say that Microsoft’s designers had no ideas of their own. In fact, some of those ideas are still in place in current versions of Windows. To take perhaps the most immediately obvious example, Windows then and now places its drop-down menus at the top of the windows themselves, while the Macintosh has a menu bar at the top of the screen which changes to reflect the currently selected window.[3] And Microsoft’s embrace of the two-button mouse, contrasted with Apple’s stubborn loyalty to the one-button version of same, has sparked constant debate for decades. Still, differing details like these should be seen as exactly that in light of all the larger-scale similarities.

[3] Both Microsoft and Apple have collected reams of data which they claim prove that their approach is the best one. I do suspect, however, that the original impetus can be found in the fact that MacOS was originally a single-tasking operating system, meaning that only one menu bar would need to be available at any one time. Windows, on the other hand, was designed as a multitasking environment from the start.

And yet just acknowledging that Windows was, shall we say, strongly influenced by MacOS hardly got to the bottom of the 1988 case. There was still the matter of that November 1985 agreement, which Microsoft was now waving in the face of anyone in the legal or journalistic professions who would look at it. The bone of contention between the two companies here was whether the “visual displays” of Windows 2 as well as Windows 1 were covered by the agreement. Microsoft naturally contended that they were; Apple contended that the Windows 2 interface had changed so much in comparison to its predecessor — coming to resemble the Macintosh even more in the process — that it could no longer be considered one of the specific “derivative works” to which Apple had granted Microsoft a license.

We’ll return to the court’s view of this question shortly. For now, though, let’s give Apple the benefit of the doubt as we continue to explore the full ramifications of their charges against Microsoft. The fact was that if one accepted Apple’s contention that Windows 2 wasn’t covered by the agreement, the questions surrounding the case grew more rather than less momentous. Could and should one be able to copyright the “look and feel” of a user interface, as opposed to the actual code used to create it? In pressing their claim, Apple was relying on an amorphous, under-explicated area of copyright law known as “visual copyright.”

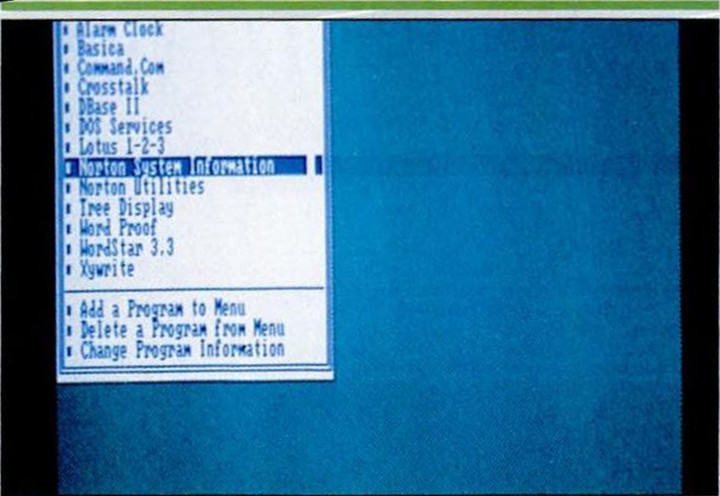

In terms of computer software, the question of the bounds of visual copyright had been most thoroughly explored in the context of videogames. Back in 1980, Midway, a major producer of standup-arcade games, had sued a much smaller company called Dirkschneider for producing a clone of their popular game Galaxian. The judge in that case ruled in favor of Midway, formulating a new legal standard called the “Ten-Foot Rule”: “If a reasonable person could not, at ten feet, tell the difference between two competitive products, then there was cause to believe an infringement was occurring.” Atari, the biggest videogame producer of all, then proceeded to use this precedent to pressure dozens of companies into withdrawing their clones of Atari games — in arcades, on game consoles, and on computers — from the market.

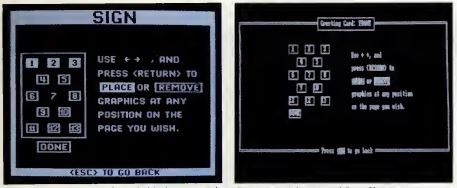

Somewhat later, in 1985, Brøderbund Software sued Kyocera for bundling with their printers an application called Printmaster, a thinly veiled clone of Brøderbund’s own hugely popular Print Shop package for making signs, greeting cards, and banners. They won their case the following year, with Judge William H. Orrick stating that Brøderbund’s copyright did indeed cover “the overall appearance, structure, and sequence” of screens in their software, and that Kyocera had thus infringed on same. Brøderbund’s Gary Carlston called the ruling “historic”: “If we don’t have copyright protection for our products, then it is going to be significantly more difficult to maintain a competitive advantage.” Encouraged by this ruling, in 1987 a maker of telecommunications software called Digital Communications Associates sued a company called Softklone Corporation — their name certainly didn’t help their cause — for copying the status display of their terminal software, and won their case as well. The Ten-Foot Rule, it seemed, could be successfully applied to software other than games. Both of these cases were cited by Apple’s lawyers in their own suit against Microsoft.

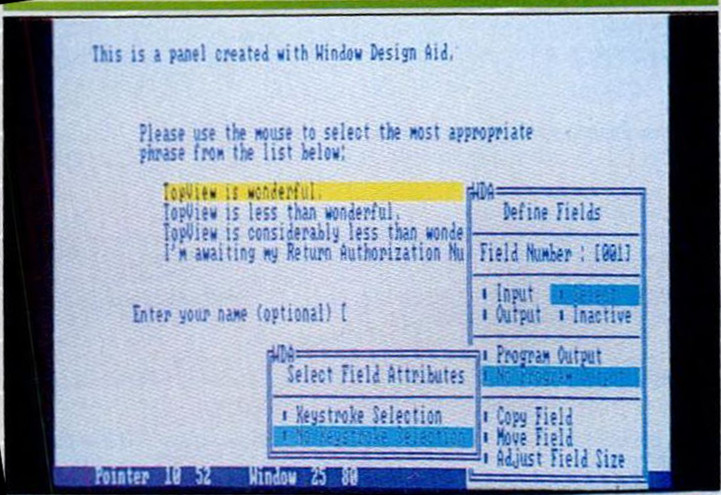

Yet the Ten-Foot Rule, at least when applied to general-purpose software rather than games, struck many as deeply problematic. One of the most important advantages of a GUI was the way it made diverse types of software from diverse developers work and look the same, thereby keeping the user from having to relearn how to do the same basic tasks over and over again. What sort of chaos would follow if people started suing each other willy-nilly over this much-needed uniformity? And what did the Ten-Foot Rule mean for the many GUI environments, on MS-DOS and other platforms, that looked so similar to one another and to MacOS? That, of course, was the real crux of the matter for Microsoft and Apple as they faced one another in court.

The debate over the Ten-Foot Rule and its potential ramifications wasn’t actually a new one, having already been taken up in public by the software industry before Apple filed their lawsuit. Fully thirteen months before that momentous day, Larry Tesler, an Apple executive, clashed heatedly with Bill Gates over this very issue at a technology conference. Tesler insisted that there was no problem inherent in applying the Ten-Foot Rule to operating systems and operating environments: “When someone comes up with a very good and popular look and feel, as we’ve done with the Macintosh, then they can make that available by licensing [it] to other people.”

But Gates was having none of this:

> There’s no control of look and feel. I don’t know anybody who has asserted that things like drop-down menus and dialog boxes and just those general form-type aspects are subject to this look-and-feel stuff. Certainly it’s our view that the consistency of the user interface has become a very spreading thing, and that it’s open, generic technology. All of these approaches — how you click on [menu] bars, and certainly all those user-interface techniques and windows — there’s absolutely no restriction in any way on how people use those.

He thus ironically argued against the very premise of the 1985 agreement between Apple and Microsoft: that Apple had created a “visual display” subject to copyright protection, to which they were now granting Microsoft a license for certain products. But then, Gates seldom let deeply held philosophical beliefs interfere with his pursuit of short-term advantage. His arguments in this latest debate were undoubtedly self-serving as well, but they were no less valid for being so. The danger he could point to, should this sort of thing spread, was that of every innovative new application seeking copyright protection not just for its code but for the very ideas that made it up. After all, form (i.e., look and feel) ideally followed function in software engineering, so protecting the one meant effectively protecting the other. What would have happened if VisiCorp had been able to copyright the look and feel of the first spreadsheet? (VisiCalc and Lotus 1-2-3 looked pretty much identical from ten feet.) If WordStar had been able to copyright the look and feel of the word processor? If, to choose a truly absurd example, the first individual to devise a command-line interface back in the mists of time had been able to copyright that? It wasn’t at all clear where the lines could be drawn once the law started down this slippery slope. If Apple owned the set of ideas and approaches that everyone now thought of as the GUI in general, where did that leave the rest of the industry?

For this reason, Apple’s lawsuit, when it came, was greeted with deep concern even by many of those who weren’t particularly friendly with Microsoft. “Although Apple has a right to protect the results of its development and marketing efforts,” said the respected Silicon Valley pundit Larry Magid, “it should not try to thwart the obvious direction of the industry.” “If Apple is trying to push this as far as they appear to be trying to push it,” said Dan Bricklin of VisiCalc fame, “this is a sad day for the software industry in America.” More surprisingly, MacOS architect Andy Hertzfeld said that “in general, it’s a horrible thing. Apple could really end up hurting itself.” Most surprisingly of all, even Steve Jobs, now running a new company called NeXT, found Apple’s arguments as dangerous as they were unconvincing: “When we were developing the Macintosh, we kept in mind a famous quote of Picasso: ‘Good artists copy, great artists steal.’ What do I think of the suit? I personally don’t understand it. Can I copyright gravity? No.”

Interestingly, the lawyers pressing the lawsuit on Apple’s behalf didn’t ask for a preliminary injunction that would have forced Microsoft to withdraw Windows from the market. Some legal watchers interpreted this fact as a sign that they themselves weren’t certain about the real merits of their case, and hoped to win it as much through bluster as finely-honed legal arguments. Ditto Apple’s request that the eventual trial be decided by a jury of ordinary people who might be prone to weigh the case based on everyday standards of “fairness,” rather than by a judge who would be well-versed in the niceties of the law and the full ramifications of a verdict against Microsoft.

Especially given those ramifications, one feels compelled to ask why Apple chose at this juncture to embark on such a lengthy, expensive, and fraught enterprise as a lawsuit against the company that remained the most important single provider of serious business software for the Macintosh, a platform whose cup still wasn’t exactly running over with such things. By way of an answer, we should consider that John Sculley was as proud a man as most people who rise to his elevated status in business tend to be. The belief, widespread both inside and outside of Apple, that he had let Bill Gates bully, outsmart, and finally rob him blind back in 1985 had to rankle him badly. In addition, Apple in general had long nursed a grievance, unproductive but understandable, against all the outsiders who had copied the interface they had worked so long and hard to perfect; thus those threatened lawsuits against Digital Research and Microsoft all the way back in 1985. A wiser leader might have told his employees to take their competitors’ imperfect copying as proof of Apple’s superiority, might have exhorted them to look toward their next big innovation rather than litigate their innovations of the past. But, at least on March 17, 1988, John Sculley wasn’t that wiser leader. Thus this lawsuit, dangerous not just to Apple and Microsoft but to their entire industry.

Bill Gates, for his part, remained more accustomed to bullying than being bullied. It had been spelled out for him right there in the court filing that a loss to Apple would almost certainly mean the end of Windows, the operating environment which was quite possibly the key to Microsoft’s future. Even widespread fear of such an event, he realized, could be devastating to the prospects of Windows, and thus of Microsoft. So he struck back fiercely, leaving no doubt where he stood. Microsoft filed a counter-suit in April of 1988, accusing Apple of breaking the 1985 agreement and of filing their own lawsuit in bad faith, in the hope of creating fear, uncertainty, and doubt around Windows and thus “wrongfully inhibiting” its commercial future. Adding weight to their argument that the original lawsuit was a form of business competition by other means was the fact that Apple was being oddly selective in choosing whom to sue over the alleged copyright violations. Asked why they weren’t going after other products just as similar to MacOS as Windows, such as IBM’s forthcoming OS/2 Presentation Manager, Apple refused to comment.

The first skirmishes took place in the press rather than a courtroom: Sculley accusing Gates of having tricked him into signing the 1985 agreement, Gates saying a contract was a contract, and what sort of a chief executive let himself be tricked anyway? The exchanges just kept getting uglier from there. The technology journalists, naturally, loved every minute of it, while the software industry was thrown into a tizzy, wondering what this would mean for Windows just as it finally seemed to be gaining some traction. Philippe Kahn, CEO of Borland, described the situation in colorful if politically incorrect language: it was like “waking up and finding your partner might have AIDS.”

The court case marched forward much more slowly than the tabloid war of words. Gates stated in a sworn deposition that “from a user’s perspective, the visual displays which appear in Windows 2 are virtually identical to those which appear in Windows 1.” “This assertion,” Apple replied, “is contradicted by even the most casual observation of the two products.” On March 18, 1989, Judge William Schwarzer of the Federal District Court in San Francisco marked the one-year anniversary of the case by ruling against Microsoft on this issue, stating that only those attributes of Windows 2 which had also existed in Windows 1 were covered by the 1985 agreement. This meant most notably that the newer GUI’s system of overlapping windows stood outside the boundaries of that document, and thus that, as the judge put it, the 1985 agreement alone “was not a complete defense” for Microsoft. It did not, he ruled, give Microsoft the right “to develop future versions of Windows as it pleases. What Microsoft received was a license to use the visual displays in the named software products as they appeared to the user in November 1985. The displays [of Windows 1 and Windows 2] are fundamentally different.” Microsoft’s stock price promptly plummeted by 27 percent. It was an undeniable setback. “Microsoft’s major defense has been shot down,” crowed Apple’s attorneys.

“Major” was perhaps not the right choice of adjective, but Microsoft’s simplest possible defense had certainly proved insufficient to bail them out. It seemed that total victory could be achieved now only by invalidating the whole notion of visual copyright which underlay both the 1985 agreement and Apple’s lawsuit based on its violation. That meant a long, tough slog at best. And with Windows 3 (the version Microsoft was convinced would finally be the breakthrough) getting closer and closer to release and looking more and more like the Macintosh all the while, the stakes were higher than ever.

The question of look and feel and visual copyright as applied to software had implications transcending even the fate of Windows or either company. If Apple’s suit succeeded, it would transform the software business overnight, making it extremely difficult to borrow or build on the ideas of others in the way that software had always done in the past. Bill Gates was an avid student of business history. As was his wont, he now looked back to compare his current plight with that of an earlier titan of industry. Back in 1903, just as the Ford Motor Company was getting off the ground, Henry Ford had been hit with a lawsuit from a group of inventors claiming to own a patent on the very concept of the automobile. He had battled them for years, vowing to fight on even after losing in open court in 1909: “There will be no let-up in the legal fight,” he declared on that dark day. At last, in 1911, he won on appeal, not only for the Ford Motor Company but for the future of the automobile industry as a field of open competition. Gates believed his own legal war had similar stakes, and he and Microsoft intended to prosecute it in equally stalwart fashion, to win it not just for themselves but for the future of the software industry. This was necessary, he wrote in a memo, “to help set the boundaries of where copyrights should and should not be applied. We will prevail.”

(Sources: the books The Making of Microsoft: How Bill Gates and His Team Created the World’s Most Successful Software Company by Daniel Ichbiah and Susan L. Knepper, Hard Drive: Bill Gates and the Making of the Microsoft Empire by James Wallace and Jim Erickson, Gates: How Microsoft’s Mogul Reinvented an Industry and Made Himself the Richest Man in America by Stephen Manes and Paul Andrews, and Apple Confidential 2.0: The Definitive History of the World’s Most Colorful Company by Owen W. Linzmayer; Wall Street Journal of September 25 1987; Creative Computing of May 1985; InfoWorld of October 7 1985 and October 20 1986; Macworld of October 1993; New York Times of March 18 1988 and March 18 1989.)

Footnotes

| ↑1 | Reworked to run under a 68000 architecture, GEM would enjoy some degree of sustained success in another realm: not as an MS-DOS-hosted GUI but as the GUI hosted in the Atari ST’s ROM. In this form, it would survive well into the 1990s. |
|---|---|
| ↑2 | Apple sued Hewlett-Packard at the same time, for an application called NewWave which ran on top of Windows and provided many of the Mac-like features, such as icons representing programs and disks and a desktop workspace, which Windows 2 alone still lacked. But that lawsuit would always remain a sideshow in comparison to the main event to whose fate its own must inevitably be tied. So, in the interest of that aforementioned clarity and concision, we won’t concern ourselves with it here. |
| ↑3 | Both Microsoft and Apple have collected reams of data which they claim prove that their approach is the best one. I do suspect, however, that the original impetus can be found in the fact that MacOS was originally a single-tasking operating system, meaning that only one menu bar would need to be available at any one time. Windows, on the other hand, was designed as a multitasking environment from the start. |