If you wanted to breed a game designer, you could do worse than starting with an engineer father and an artist mother. At any rate, that’s the combination that led to Richard Garriott.

Father Owen had quite a remarkable career in his own right. In 1964, at age 33, he was a professor of electrical engineering at Stanford University when NASA, in the thick of the moon race, put out the call for its fourth group of astronauts. This group of six would be different from all that came before, for, in spite of much grumbling from within and without the organization (not least from the current astronauts themselves), they would be selected from the ranks of civilian scientists and engineers rather than military pilots. Owen applied in the face of long odds: no fewer than 1350 others had the same idea in moon-mad America. He survived round after round of medical and psychological tests and interviews, however, until in May of 1965 none other than the first American to fly into space, Alan Shepard, called him in the middle of a lecture to tell him he was now an astronaut. Owen and family (including a young Richard, born in 1961) moved to the Houston area, to a suburb called Clear Lake made up almost entirely of people associated with the nearby Manned Spacecraft Center. While Owen trained (first task: learning how to fly a jet), the rest of the family lived the exciting if rather culturally antiseptic lives typical of NASA families, surrounded by science and gadgetry and all the fruits of the military-industrial complex. Whether because NASA did not quite trust these scientist-astronauts or because of the simple luck of the draw, only one from Owen’s group of six actually got the chance to go to the moon, and it wasn’t Owen. As a consolation prize, however, Owen flew into space on July 28, 1973, as part of the second crew to visit Skylab, America’s first semi-permanent space station, where he spent nearly two months. After that flight Owen stayed on with NASA, and would eventually fly into space again aboard the space shuttle in November of 1983.
And those are just the adventurous highlights of a scientific and engineering career filled with awards, publications, and achievements.

Such a father certainly provided quite an example of achievement for a son, one that Richard took to heart: beginning with his kindergarten year, he entered a project into his school’s science fair every single year until he graduated high school, each one more ambitious than the last. But such an example could also, of course, be as intimidating as it was inspiring. It didn’t help that Owen was by nature an extremely reserved man, sparing of warmth or praise or obvious emotion of any stripe. Richard has spoken of his disappointment at his father’s inability to articulate even the most magical of his experiences: “My dad never told me anything about being in space. He once said it was kind of like scuba diving, but he never said anything with any kind of emotion.” Nor did Owen’s career leave him much time for Richard or his siblings, two older brothers and a younger sister.

The job of parenting therefore fell mostly to Helen Garriott. Helen was an earthier, quirkier personality than her husband, and her passion, which she pursued with as much zeal as Owen brought to his scientific career if with rather less recognition, was art: pottery, silversmithing, painting, even dabblings in conceptual art. While Owen provided occasional words of encouragement, Helen actively helped Richard with his science-fair projects as well as the many other crazy ideas he and his siblings came up with, such as the time that he and brother Robert built a functioning centrifuge (the “Nauseator”) in the family’s garage. With the example of Owen and the more tangible love and support of Helen, all of the children were downright manic overachievers virtually from the moment they could walk, throwing themselves with abandon into projects both obviously worthwhile (the science fairs) and apparently frivolous (the Nauseator, in which the neighborhood children challenged each other to ride until they vomited).

For Richard’s freshman year of high school, 1975-76, Owen temporarily returned the family to Palo Alto, California, home of Stanford, where he had accepted a one-year fellowship. Situated in the heart of Silicon Valley as it was, Richard’s high school there was very tech-savvy. It was here that he was first exposed to computers, via the terminals that the school had placed in every single classroom. He was not particularly excited by them, however; indeed, it was his parents who first got the computer religion. Upon returning to Houston for his sophomore year, Richard dutifully enrolled at their behest in his high school’s single one-semester computer course, in which an entire classroom got to program in BASIC via the school’s single clunky teletype terminal, connected remotely to a CDC Cyber mainframe at some district office or other. Richard aced the class, but was, again, unimpressed. So his parents tried yet again, pushing him to attend a seven-week computer camp held that summer at the University of Oklahoma. And this time it took.

Those seven weeks were an idyllic time for Richard, during which it all seemed to come together for him in a sort of nerd version of a summer romance. On the very first day at camp, his fellow students dubbed him “Lord British” after he greeted them with a formal “Hello” rather than a simple “Hi.” (The nickname was doubly appropriate in that he was actually born in Britain, during a brief stint of Owen’s at Cambridge University.) The same students also introduced him to Dungeons and Dragons. With the pen-and-paper RPG experience fresh in his mind as well as The Lord of the Rings, which he had just read during the previous school year, Richard finally saw a reason to be inspired by the computers that were the ostensible purpose of the camp; he began to wonder if it might not be possible to build a virtual fantasy world of his own inside their memories. And he also found a summer girlfriend at camp, which never hurts. He came back from Oklahoma a changed kid.

In addition to his experiences at the computer camp, the direction his life would now take was perhaps also prompted by a conversation he had had a few years before, during a routine medical examination conducted (naturally) by a NASA doctor, who informed him that his eyesight was getting worse and that he would need to get glasses for the first time. That’s not the end of the world, of course, but then the doctor dropped this bomb: “Hey, Richard, I hate to be the one to break it to you, but you’re no longer eligible to become a NASA astronaut.” Richard claims that he had not been harboring the conscious dream of following in his father’s footsteps, but the news that he could not join his father’s private club nevertheless hit him like a personal rejection. Even in late 1983, as he was amassing fame and money as a game developer beyond anything his father ever earned, he stated to an interviewer that he would “drop everything for the chance to go into space.” Much later he would famously fulfill that dream, but for now his path had to lie in a different direction. The computer camp gave him that direction: to become a creator of virtual worlds.

Back in suburban Houston, Richard began a D&D recruiting drive, starting with the neighbor kids with whom he’d grown up and working outward from there. By a couple of months into his junior year, Richard, with the aid of his ever-supportive mother, was hosting weekend-long sessions in the family home. By early 1978, multiple games were going on in different parts of the house, and even some adults had started to turn up, to game or just to smoke and drink and socialize on the front porch.

To understand how this could happen, you have to understand something about Richard. Although his interests (science, D&D, computers, Lord of the Rings) were almost prototypically nerdy, in personality and appearance he was not really your typical introverted high-school geek. He was a trim, good-looking kid with a natural grace that kept the schoolyard bullies at bay. Indeed, he co-opted them; those weekend sessions were remarkable for bringing together all of the usually socially segregated high-school cliques. Most of all, Richard was very glib and articulate for his age, able when he so chose to cajole and charm almost anyone into anything in a way that recalls none other than that legendary schmoozer Steve Jobs himself. His later friend and colleague Warren Spector once said that Richard “could change reality through the force of will [and] personal charisma,” echoing the legends of Jobs’s own “reality distortion field.” He turned those qualities to good use in finding a way to achieve the ultimate dream of all nerds at this time: regular, everyday access to a computer.

With only one computer class on the curriculum, the school’s single terminal sat unused the vast majority of the time. On the very first day of his junior year, Richard marched into the principal’s office with a proposal. From Dungeons and Dreamers:

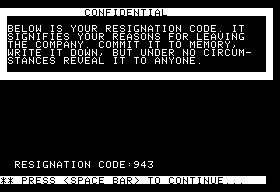

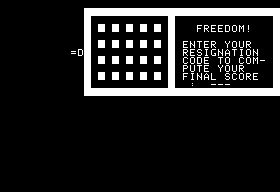

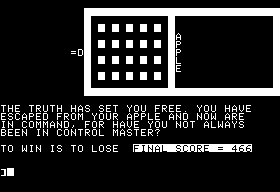

> He’d conceive, develop, and program fantasy computer games using the school’s computer [terminal], presenting the principal and the math teacher with a game at the end of each semester. There wasn’t even a computer teacher there to grade him on his skills. To pass the class, he simply had to turn in a game that worked. If he did, he’d get an A. If it didn’t, he’d fail.

Incredibly — and here’s where the reality distortion field really comes into play — the principal agreed. Richard claims that the school decided to count BASIC as his foreign-language credit. (A decision which maybe says a lot about the state of American language training — but I digress…)

Accordingly, when not busy with schoolwork, the science fair (for which his junior and senior projects also used the computer extensively), tabletop D&D, or the Boy Scouts Explorers computer post he joined and (typically enough) soon became president of, Richard spent his time and energy over the next two years on a series of computer adaptations of D&D. The development environment his school hosted on its aging computer setup was not an easy one; his terminal did not even have a screen, just a teletype. He programmed by first writing out the BASIC code laboriously by hand, reading it through again and again to check for errors. He then typed the code on a tape punch, a mechanical device that resembled a typewriter but that transcribed entered characters onto punched tape (a ribbon of tape onto which holes were punched in patterns to represent each possible character). Finally he could feed this tape into the computer proper via a punched-tape reader, and hope for the best. A coding error or typo meant that he got to type the whole thing out again. Likewise, he could only add features and improvements by rewriting and then retyping the previous program from scratch. He took to filling numbered notebooks with code and design notes, one for each iteration of the game, which he called simply D&D. By the end of his senior year he had made it all the way to D&D 28, although some iterations were abandoned as impractical for one reason or another before reaching fruition as an entered, playable game.

In building his games, Richard was largely operating in a vacuum, trying things out for himself to see what worked. He had been exposed to the original Adventure when his Boy Scouts Explorers visited the computer facilities at Lockheed, but, uniquely amongst figures I’ve discussed in this blog, was unimpressed by it: “It was very different from the kind of thing I wanted to write, which was something very freegoing, with lots of options available to you, as opposed to a ‘node’ game like Adventure. At that time, I didn’t know of any other games that would let you go anywhere and do anything.” From the very beginning, then, Richard came down firmly on the side of simulation and emergent narrative, and, indeed, would never take any interest in the budding text-adventure phenomenon. It’s possible that the early proto-CRPGs hosted on the PLATO network would have been more to his taste, but it doesn’t appear that Richard was ever exposed to them. And so his D&D games expressed virtually exclusively his own vision, which he built up from scratch, iteration by iteration.

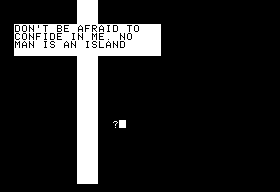

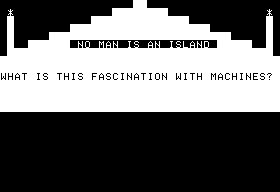

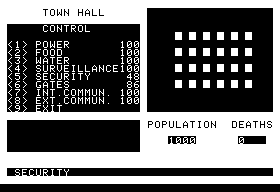

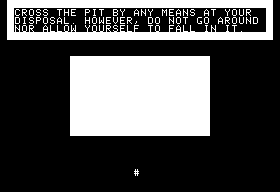

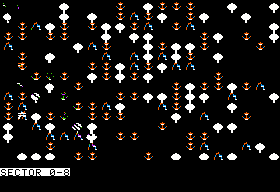

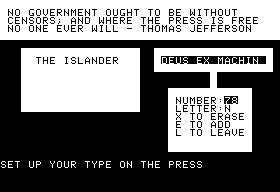

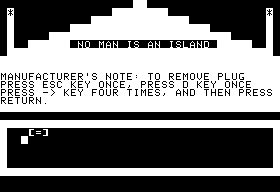

But how did they play? Because they were stored only on spools of tape, we don’t have them to run via emulation. (On the other hand, Richard has donated a paper tape containing one of the games to the University of Texas as part of the Richard Garriott Papers collection. If someone there could either get an old tape reader working to read it in or — if truly dedicated — translate the punches by hand, the results would be fascinating to see.) We do have, however, a pretty good idea of how they operated: more primitive than, but remarkably similar to, the commercial games that would soon make Richard famous. In fact, Richard has often joked that he spent his first fifteen or so years as a designer essentially making the same game over and over. The D&D games, like the Ultimas, show a top-down view of the player’s avatar and surroundings. They run not in real-time but in turns. The player interacts with the game via a set of commands which are each triggered by a single keypress: “N” to go north, “S” to view her character’s vital statistics, “A” to attack, space to do nothing that turn, etc. Because the games were running on a teletype, scenery and monsters could be represented only by ASCII characters; a “G” might represent a goblin, etc. And unlike the later games, the top-down view was maintained even in dungeons. This description reminds one strongly of the roguelikes of today, and of course of their ancestors on the PLATO system. It’s interesting that Richard came up with something so similar working independently. (Although on the other hand, how else was he likely to do it?) Playing the games would have required almost as much patience as writing them, as well as a willingness to burn through reams of paper, for the only option Richard had was to completely redraw the “screen” anew on paper each time the player made a move.
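For readers who think better in code, here is a minimal sketch of the kind of loop described above: a top-down ASCII map, one single-key command per turn, and the whole view rendered afresh every time. It is emphatically not Richard's BASIC, which we cannot run. The map, the `@` marker for the player, the `X`, `W`, and `E` movement keys, and every function name are invented for illustration; only “N”, “S”, “A”, space, and the “G” goblin come from the description.

```python
from dataclasses import dataclass

# Illustrative map: '#' is wall, '.' is floor, 'G' is a goblin (per the article).
MAP_ROWS = [
    "#########",
    "#...G...#",
    "#.......#",
    "#########",
]

# "N" for north comes from the article; "X", "W", and "E" are assumed keys,
# since "S" is already taken by the statistics command.
STEPS = {"N": (0, -1), "X": (0, 1), "W": (-1, 0), "E": (1, 0)}


@dataclass
class Game:
    x: int = 4
    y: int = 2
    hit_points: int = 10
    turn: int = 0


def render(game):
    """Return the full view as text; a teletype would print it anew each turn."""
    lines = []
    for row_index, row in enumerate(MAP_ROWS):
        cells = list(row)
        if row_index == game.y:
            cells[game.x] = "@"  # assumed symbol for the player's avatar
        lines.append("".join(cells))
    return "\n".join(lines)


def step(game, key):
    """Apply one single-keypress command and return a status message."""
    game.turn += 1  # every keypress, valid or not, uses up a turn in this sketch
    if key in STEPS:
        dx, dy = STEPS[key]
        nx, ny = game.x + dx, game.y + dy
        if MAP_ROWS[ny][nx] == ".":
            game.x, game.y = nx, ny
            return "You move."
        return "Blocked."
    if key == "S":
        return f"HP: {game.hit_points}  Turn: {game.turn}"
    if key == "A":
        # Look at the four orthogonal neighbours for a goblin.
        neighbours = [MAP_ROWS[game.y + dy][game.x + dx] for dx, dy in STEPS.values()]
        return "You attack the goblin." if "G" in neighbours else "Nothing to attack."
    if key == " ":
        return "You wait."
    return "Unknown command."
```

Calling `print(render(game))` after every `step` reproduces the paper-hungry experience: on a teletype there is no cursor to move back, so each turn costs another few lines of printout.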

As his time in high school drew toward a close in the spring of 1979, Richard found himself facing a crisis of sorts: not only would he not be able to work on D&D anymore, but he faced losing his privileged access to a computer in general. He was naturally all too aware of the first generation of PCs that had now been on the market for almost two years, but so far his father had been resistant to the idea of buying one for the family, not seeing much future in the little toys as opposed to the hulking systems he was familiar with at NASA. In desperation, Richard turned on the reality distortion field and marched into Owen’s den with a proposal: if he could get the latest, most complicated iteration of D&D working and playable, without any bugs, Owen should buy him the Apple II system he’d been lusting over. Owen was perhaps more resistant to the field than most, being Richard’s father and all, but he did agree to go halfsies if Richard succeeded. Richard of course did just that (as Owen fully expected), and by the end of the summer his summer-job earnings, along with Owen’s contribution, had at last bought him Apple’s just-released II Plus model.

Compared to what he had been working with earlier, the Apple II, with its color display and graphics capabilities, its real-time responsiveness, and its ability to actually edit and tinker with a program in memory, must have seemed like a dream. Even the cassette drive he was initially stuck using for storage was an improvement over manually punching holes in paper tape. Richard had just begun exploring the capabilities of his new machine when it was time to head off to Austin, where he had enrolled in the Electrical Engineering program (the closest thing the university yet offered to Computer Science) at the University of Texas.

Richard’s early months at UT were, typically enough for a university freshman, somewhat difficult and unsettling. He had left safe suburban Clear Lake, where he had known everyone and been regarded as a quirky neighborhood star (a sort of Ferris Bueller without the angst), for the big, culturally diverse city of Austin and UT, where he was just one of tens of thousands of students filling huge lecture halls. When not returning home to Houston, something he did frequently, he uncharacteristically spent most of his time holed up alone in his dorm, tinkering on the Apple. It was not until his second semester that he stumbled upon a flyer for something called the “Society for Creative Anachronism,” a group we’ve encountered before in this blog, which had a particularly large and active presence in eclectic Austin. He threw himself into the SCA with characteristic passion. Soon Richard, who had dabbled in fencing before, was participating in medieval duels, camping outdoors, making and wearing his own armor, arguing chivalry and philosophy in taverns, and learning to shoot a crossbow. Deeming the “Lord British” moniker a bit audacious for a newcomer, he took the name “Shamino” (inspired by the Shimano-brand gears on his bicycle) inside the SCA, playing a rustic woodman-type to which the closest D&D analogue was probably the ranger class. The social world of the Austin SCA would play a huge role in Richard’s future games, with most of his closest friends there receiving a doppelganger inside the computer.

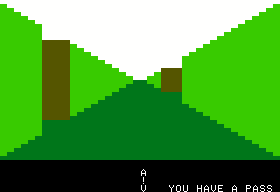

Meanwhile he continued to explore the Apple II. A simplistic but popular genre of games at this time was the maze game, in which the computer generates a maze and expects the player to find her way out of it: think Hunt the Wumpus, only graphical and without all the hazards to avoid. Most examples used the standard top-down view typical of the era, but Richard stumbled over one written by Silas Warner of Muse Software and called simply Escape! which dropped its player into a three-dimensional rendering of a maze, putting her right inside it. “As the maze dropped down into that low perspective, I immediately realized that with one equation, you could create a single-exit maze randomly. My world changed at that moment.”

If you’d like to have a look at this game which so inspired Richard, you can download a copy on an Apple II disk image. After booting the disk on your emulator or real Apple II, type “RUN ESCAPE” at the prompt to begin.

Escape! inspired Richard to try to build the same effect into the dungeon areas of his D&D game, which he was now at work porting to the Apple II. Uncertain how to go about implementing it, he turned to his parents, who helped in ways typical of each. First, his mother explained to him how an artist uses perspective to create the illusion of depth; then, his father helped him devise a set of usable geometry and trigonometry equations he could use to translate his mother’s artistic intuition into computer code. Richard took to calling the Apple II version of his game D&D 28B, since it was essentially a port of the final teletype version to the Apple II, albeit with the addition of the 3D dungeons.
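The equations Owen and Richard actually worked out are not recorded here, so what follows is only a sketch of the general idea behind first-person corridor views of this kind: by similar triangles, an opening seen from depth d appears 1/d as large as it does at depth 1, so a corridor can be drawn as a series of nested, shrinking rectangles. The function name, the screen dimensions, and the choice of one rectangle per dungeon square are all assumptions made for illustration.

```python
def corridor_frames(screen_width=280, screen_height=160, max_depth=4):
    """Return one centred rectangle (left, top, right, bottom) per depth step.

    Illustrative perspective rule: apparent size is proportional to 1 / depth,
    so the frame at depth 1 fills the screen and deeper frames shrink toward
    the vanishing point at the screen's centre.
    """
    centre_x = screen_width / 2
    centre_y = screen_height / 2
    frames = []
    for depth in range(1, max_depth + 1):
        half_width = centre_x / depth
        half_height = centre_y / depth
        frames.append((
            round(centre_x - half_width),
            round(centre_y - half_height),
            round(centre_x + half_width),
            round(centre_y + half_height),
        ))
    return frames
```

Joining the matching corners of consecutive rectangles with straight lines gives the walls, floor, and ceiling of a corridor receding into the distance, which is the artist's one-point perspective expressed as arithmetic.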

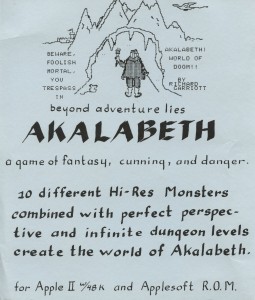

Richard spent the summer of 1980 back in Houston with his family, working at the local ComputerLand store to earn some money. His boss there, John Mayer, noticed the game he was tinkering with, which by this time was getting popular amongst Richard’s friends and colleagues at the store. Mayer did Richard the favor of a lifetime when he suggested that he might want to consider packaging the game up and selling it right there in the shop. Richard therefore put together some packaging typical of the era, sticking a mimeographed printout of the in-game help text and some artwork sketched by his mother into a Ziploc bag along with the game disk itself. (He had by this time been able to purchase a disk drive for his Apple II.) He retitled the game Akalabeth, after one of his tabletop D&D worlds. Deeply skeptical about the whole enterprise, he made somewhere between 15 and 200 copies (sources differ wildly on the exact number), and spent the rest of the summer watching them slowly disappear from the ComputerLand software wall. In this halting fashion a storied career was born.

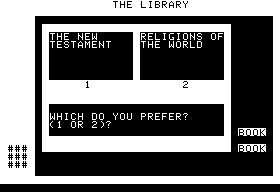

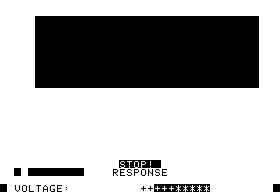

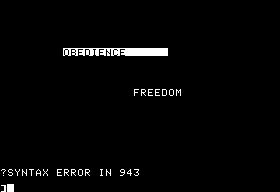

We’ll look at Akalabeth in some detail next time.