Monarchy is like a splendid ship. With all sails set it moves majestically on, but then it hits a rock and sinks forever.

— Fisher Ames

In The Republic, that most famous treatise ever written on the slippery notions of good and bad government, Plato describes what first motivated people to willingly cede some of their personal freedoms to others. He writes that “a state arises, as I conceive, out of the needs of mankind; no one is self-sufficing, but all of us have many wants. Can any other origin of a state be imagined?”

Even in relatively primitive civilizations, the things that need doing outstrip the ability of any single individual to learn how to do them. From this comes specialization, that key marker of civilization. But for specialization to work, a civilization needs a marketplace — a central commons where goods and services can be bought, sold, and traded for. Maintaining such a space, and resolving any disputes that arise in it, requires a central authority. And then, as the fruits of specialization cause a civilization to rise in the world, outsiders inevitably begin to think about taking what it has. Thus a standing army needs to be created. So, already we have the equivalents of a Department of Justice, a Department of Commerce, and a Department of Defense. But now we have another problem: the people staffing all of these bureaucracies, not to mention the soldiers in our army, don’t themselves produce goods and services which they can use to sustain themselves. Thus we now need an Internal Revenue Service of some sort, to collect taxes from the rest of the people — by force, if necessary — so that the bureaucrats and the soldiers have something to live on. And so it continues.

I want to point out a couple of important features of this snippet of the narrative of progress I’ve just outlined. The first is that, of all forms of progress, the growth of government is greeted with the least enthusiasm; for most people, government is the very definition of a necessary evil. At bottom, it becomes necessary because of one incontrovertible fact: that what is best for the individual in a vacuum is almost never what is best for the collective. Government addresses this fundamental imbalance, but in doing so it’s bound to create resentment in the individuals over whom it asserts control. Even if we understand that it’s necessary, even if we agree in principle with the importance of regulating commerce, protecting our country’s borders, even collecting funds to help the young, the old, the sick, and the disadvantaged, how many of us don’t cringe a little when we have to pay the taxman out of our own hard-earned wages? Not for nothing do the people of almost all times and all countries feel a profound ambivalence toward their entire political class, those odd personality types willing to baldly seek power over their peers. Will Durant:

If the average man had had his way there would probably never have been any state. Even today he resents it, classes death with taxes, and yearns for that government which governs least. If he asks for many laws it is only because he is sure that his neighbor needs them; privately he is an unphilosophical anarchist, and thinks laws in his own case superfluous.

The other thing that bears pointing out is that, even though they make up two separate departments in a university, political science and economics are very difficult to pull apart in the real world. Certainly one doesn’t have to be a Marxist to acknowledge that it was commerce that gave rise to government in the first place. In Plough, Sword, and Book, his grand sociological theory of history, Ernest Gellner writes that “property and power are correlative notions. The agricultural revolution gave birth to the exchange and storage of both necessities and wealth, thereby turning power into an unavoidable aspect of social life.” The interconnections between government and economics can be tangled indeed, as in the case of a descriptor like “communism,” technically an economic system but one which, in modern usage at least, presumes much about government as well; a phrase like “communist democracy” rings as an oxymoron to ears brought up in the Western tradition of liberal democracy.

In this light, we can probably forgive the game of Civilization for lumping communism into its list of possible systems of “government” for your civilization, as I hope you’ll be able to forgive me for discussing it in this pair of articles on those systems. (The article that follows this pair will address other aspects of economics in Civilization.) Each of Civilization’s broadly drawn governments provides one set of answers to the eternally fraught questions of who should have power in a society, what the limits of that power should be, and whence the power should be derived. As such, they lend themselves better than just about any other aspect of the game to systematic step-by-step analysis. So, that’s what I want to do in this article and the next, looking at each of the six in turn, asking, as has become our standard practice in this series, what we can learn about the real history of government from the game and what we can learn about the game from the real history of government.

That said, there are — also as usual — complications. This is one of the few places where Civilization rather breaks down as a linear narrative of progress. You don’t need to progress through each of the governments one by one in order to be successful at the game. In fact, just the opposite; many players never feel the need to adopt more than a couple of the six governments over the course of their civilization’s millennia of steady progress on other fronts. Likewise, depending on which advances they choose to research when, players of Civilization may see the various governments become available for adoption in any number of different orders and combinations.

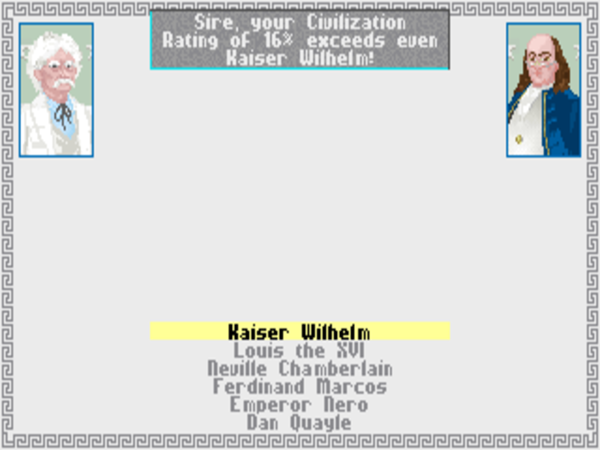

And then too, unlike just about every other element of the game, the effectiveness of the governments in Civilization can’t be ranked in ascending order of desirability by the date of their first appearance in actual human history. If that were the case, communism — or possibly, as we shall see, anarchism — would have to be the best government of all in the game, something that most definitely isn’t true. Government just doesn’t work like that, in history or in the game. Democracy, for example, a form of government inextricably associated with modern developed nations and the likes of Francis Fukuyama’s end of history, is actually a very ancient idea. Given this, I’ve hit upon what feels like a logical method of my own for ordering Civilization’s systems of government here, in a descending ranking based on the power and status each system in its most theoretical or idealized form vests in its leader or leaders. If that sounds confusing right now, please trust that my logic will become clearer as we move through them.

I do need to emphasize that this overview isn’t written even from 30,000 feet, but rather from something more akin to a low orbit over a massively complicated topic. Do remember as you read on that these strokes I’m laying down are — to mix some metaphors — very broad. I’m well aware that our world has contained and does contain countless debatable edge cases and mangy hybrids. That acknowledged, I do believe that setting aside the details of day-to-day politics and returning to first principles of government, as it were, might just be worthwhile from time to time.

Despotism is the most blunt of all political philosophies, one otherwise known as the law-of-the-jungle or the Lord of the Flies approach to governance. It states simply that he who is strong and crafty enough to gain power over all his rivals shall rule exactly as long as he remains strong and crafty enough to maintain it. For whatever that period may be, the despot and the state he rules are effectively one and the same. Regardless of what the despot might say to justify his rule, in the end despotism is might-makes-right distilled to its purest essence.

“Every state begins in compulsion,” writes Will Durant. In the formative stages of any civilization, despotism truly is an inevitability. In a society with no writing, no philosophy, no concept of human rights or social justice, no other form of government could possibly take hold. “Without autocratic rule,” writes the philosopher and sociologist Herbert Spencer, “the evolution of society could not have commenced.”

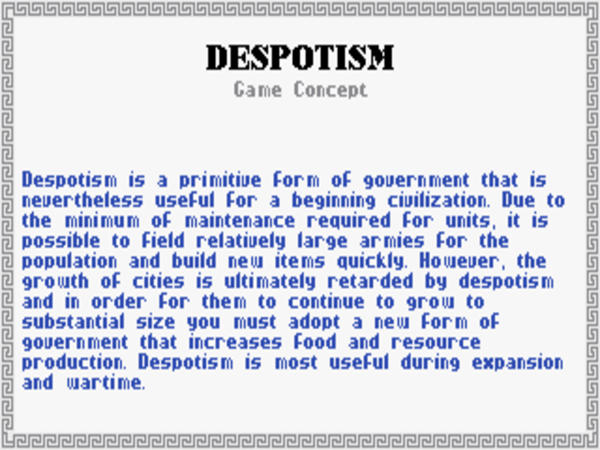

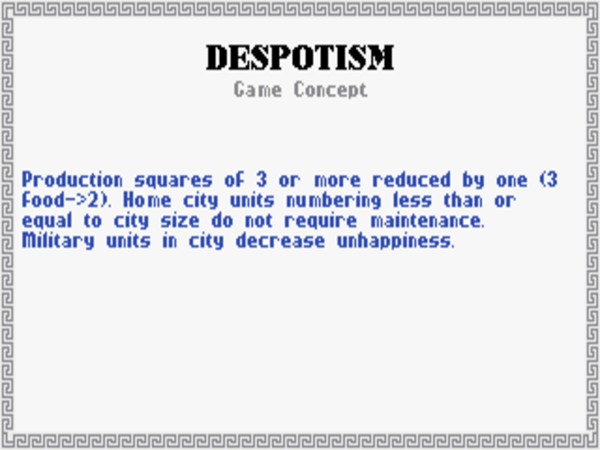

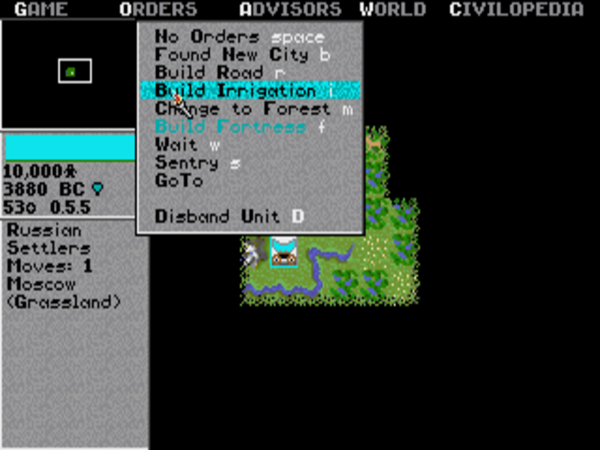

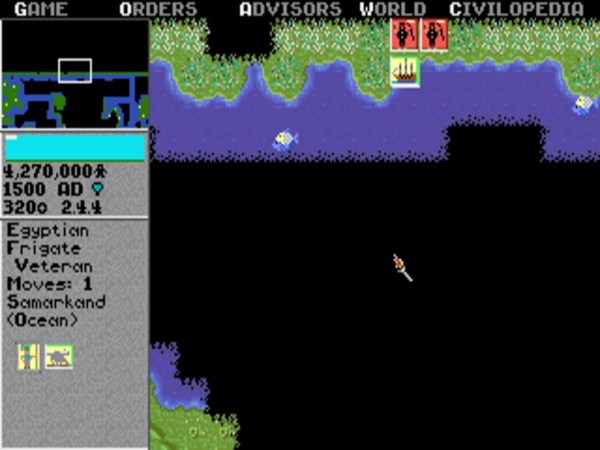

So, it’s perfectly natural that the game of Civilization likewise always begins in despotism. In the early stages of the game especially, absolute power has undeniable advantages. Aristotle considered all of the citizens under a despotic government to be nothing more nor less than slaves, and that understanding is reflected in the way the game lets you form them into military units and march them off to war without having to pay them for the privilege, nor having to worry about the morale of the folks left behind at the home front. The military advantages despotism offers are thus quite considerable indeed. If your goal in the game is to conquer the world rather than climb the narrative of progress all the way to Alpha Centauri, you can easily win while remaining a despot throughout.

Yet the game does also levy a toll on despotism, one which, depending on your strategy, can become more and more difficult to bear as it continues. Cities under despotism grow slowly if at all, and are terrible at exploiting the resources available to them. If you do want to make it to Alpha Centauri, you’re thus best advised to leave despotism behind just as quickly as you can.

All of which rings true to history. The economy of a society that lives in fear — a society where ideas are things hated and feared by the ruling junta — will almost always badly lag that of a nation with a more sophisticated form of government. In particular, despotism is a terrible system for managing an industrial or post-industrial economy geared toward anything but perpetual war. Political scientist Bernard Crick:

Most autocracies (and military governments) are in agrarian societies. Attempts to industrialize either lead to democratization as power is spread and criticism is needed, or to concentrations of power as if towards totalitarianism but usually resulting in chronic economic and political instability. The true totalitarian regimes were war economies, whether at war or not, rejecting “mere” economic criteria.

Although the economic drawbacks of despotism are modeled, the structure of the game of Civilization doesn’t give it a good way of reflecting perhaps the most crippling of all the disadvantages of despotism in the real world: its inherent instability. A government destined to last only as long as the cult of personality at its center continues to breathe is hardly ideal for a real civilization’s long-term health. But in the game, the notion of a ruler outside yourself exists not at all; you play a sort of vaguely defined immortal who controls your civilization through the course of thousands of years. Unlike a real-world despot, you don’t have to constantly watch your back for rivals, don’t have to abide by the rule of history that he who lives by the sword shall often die by the sword. And you don’t have to worry about the chaos that ensues when a real despot dies — by natural causes or otherwise — and his former underlings throw themselves into a bloody struggle to find out who will replace him.

For the ruthless power-grabbing despot in the real world, who’s in this only to gratify his own megalomaniacal urges, this supposed disadvantage is of limited importance at best. For those who live on after him, though, it’s far more problematic. Indeed, a good test for deciding whether a given country’s government is in fact a despotic regime is to ask yourself whether it’s clear what will happen when the current leader dies of natural causes, is killed, steps down, or is toppled from power. Ask yourself whether, to put it another way, you can imagine the country continuing to be qualitatively the same place after one of those things happens. If the answer to either of those questions is no, the answer to the question of whether the current leader is a despot is very likely yes.

It would be nice if, given the instability of despotism as well as all of the other ethical objections that accompany it, I could write of it as merely a necessary formative stage of government, a stepping stone to better things. But unfortunately, despotism, the oldest form of human government, has stubbornly remained with us down through the millennia. In the twentieth century, it flared up again even in the heart of developed Europe under the flashy new banner of fascism. Thankfully, its inherent weaknesses meant that neither Mussolini’s Italy, Hitler’s Germany, nor Franco’s Spain could survive beyond the deaths of the despots whose names will always be synonymous with them.

And yet despotism still lives on today, albeit often cloaked under a rhetoric of pseudo-legitimacy. Vladimir Putin of Russia, that foremost bogeyman of the modern liberal-democratic West, one of the most stereotypically despotic Bond villains on the current world stage, nevertheless feels the need to hold a sham election every six years. Peek beneath the cloak of democracy in Russia, however, and all the traits of despotism are laid bare. The economic performance of this, the biggest country in the world, is absolutely putrid, to the tune of about 7 percent of the gross national product of the United States, despite being blessed with comparable natural resources. And then there’s the question of what will happen in Russia once Putin’s gone. Tellingly, commentators have been asking that very question with increasing urgency in recent years, as “Putin’s Russia” threatens to become a descriptor of an historical nation unto itself not unlike Hitler’s Germany.

The inventors of monarchy — absolute rule by a single familial lineage rather than absolute rule by a single individual — appear to have been the ancient Egyptians. Its first appearance there, perhaps as early as 3500 BC, marks an early attempt to remedy the most obvious weakness of despotism, the lack of any provision for what happens after any given despot is no more. It’s not hard to grasp where the impulse that led to it came from. After all, it’s natural for any father to want to leave a legacy to his son. Why not the country he happens to rule?

At the same time, though, with monarchy we see the first stirrings of a concern that will become more and more prevalent as we continue with this tour of governments: a concern for the legitimacy of a government, with providing a justification for its existence beyond the age-old dictum of might-makes-right. Electing to go right to the source of all things in the view of most pre-modern societies, monarchs from the time of the Egyptian pharaohs claimed to enjoy the blessing of the gods or God, or in some cases to be gods themselves. In the centuries since, there has been no shortage of other justifications, such as claims to tradition, to a constitution, or just to good old superior genes. But more important than the justifications themselves for our purposes is the fact that they existed, a sign of emerging sophistication in political thought. Depending on how compelling those being ruled over found them to be, they insulated the rulers to a lesser or greater degree from the unmitigated law of the jungle.

In time, the continuity engendered by monarchy in many places allowed not just a ruling family but an extended ruling class to arise, who became known as the aristocracy. Ironically for a word so overwhelmingly associated today with inherited wealth and privilege, “aristocracy” when first coined in ancient Greece had nothing to do with family or inheritance. The aristocracy of a society was simply the best people in that society; the notion of aristocratic rule was thus akin to the modern concept of meritocracy. We can still see some of this etymology in the synonym for “aristocrat” of “noble,” which has long been taken to mean, depending on context, either a high-born member of the ruling class or a brave, upright, trustworthy sort of person in general; think of Rousseau’s “noble savages.” (The word “aristocrat” hasn’t been quite so lucky in recent years, bearing with it today a certain connotation of snobbery and out-of-touchness.)

Aristocrats of the old school were one of the bedrocks of the idealized theory of government outlined by Aristotle in Politics; an “aristocracy” ruled by true aristocrats was according to him one of the best forms of government, although it could easily decay into what he called “oligarchy.” Yet the fact was that ancient Greece and Rome were every bit as obsessed with bloodlines as would be the wider Europe in centuries to come, and it didn’t take long for the ruling classes to assert that the best way to ensure the best people had control of the government was to simply pass power from high-born father to high-born son. Whether under the ancients’ original definition of the word or its more modern usage, writes the historian of aristocracy William Doyle, “the essence of aristocracy is inequality. It rests on the presumption that some people are naturally better than others.” For thousands of years, this presumption was at the core of political and social orders throughout Europe and most of the world.

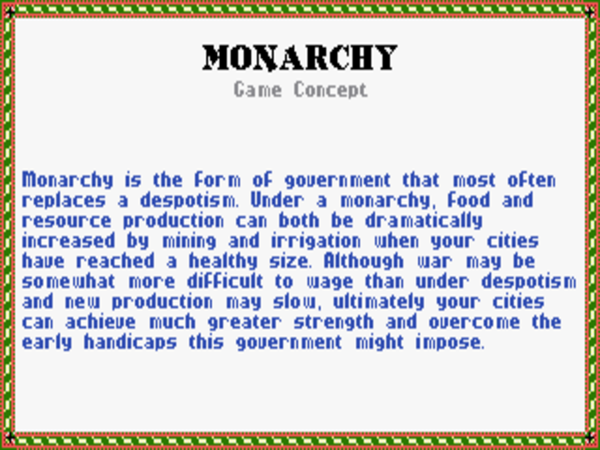

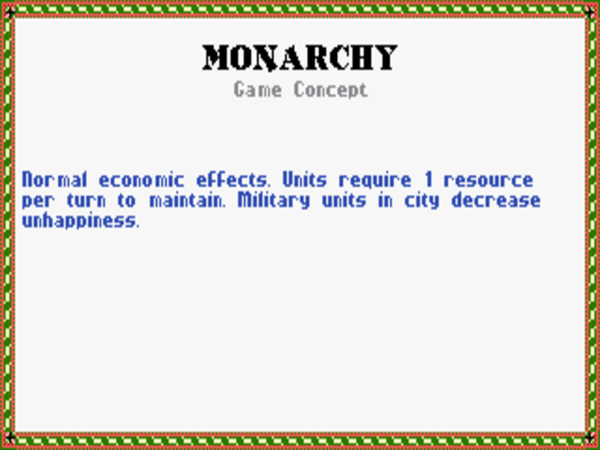

“Monarchy seems the best balanced government in the game,” note Johnny L. Wilson and Alan Emrich in Civilization, or Rome on 640K a Day. And, indeed, the game of Civilization takes monarchy as its sort of baseline standard government. Your economy is subject to no special advantages or disadvantages, reflecting the fact that historical monarchies have tended to be, generally speaking, somewhat less tyrannical than despotic governments, thanks not least to a diffusion of power among what can become quite a substantial number of aristocratic elites. This is a good thing in economic as well as ethical terms; a population that spends less time cowering in fear has more time to be productive. But it does mean that the player of the game needs to pay more to maintain a military under a monarchical government, a reflection of that same diffusion of power.

Once again, though, Civilization’s structure makes it unable to portray the most important drawbacks of monarchy from the standpoint of societal development. A country that employs as a philosophy of governance such a system of nepotism taken to the ultimate extreme is virtually guaranteed to wind up in the hands of a terrible leader within a few generations — for, despite the beliefs that informed aristocratic privilege down through all those centuries, there’s little actual reason to believe that such essential traits of leadership as wisdom, judgment, forbearance, and decisiveness are heritable. Indeed, the royal family of many a country may have wound up ironically less qualified for the job of running it than the average citizen, thanks to a habit of inbreeding in the name of maintaining those precious bloodlines, which could sometimes lead to unusual incidences of birth defects and mental disorders. The ancient Egyptians made even brother-sister marriages a commonplace affair among the pharaohs, and were rewarded with an unusual number of truly batshit crazy monarchs.

Thus even despotism has an advantage over monarchy in the quest to avoid really, really bad leaders. At least the despot who rises to the top of the heap through strength and wiles has said strength and wiles to rely on as ruler. The histories of monarchies tend to be a series of wild oscillations between boom and bust, all depending on who’s in charge at the time; if Junior is determined to smash up the car, mortgage the house, and invest the family fortune in racehorses after Daddy dies, there’s not much anyone can do about it. Consider the classic example of England during the Renaissance period. The reigns of Elizabeth I and James I yielded great feats of exploration and scientific discovery, major military victories, and the glories of Shakespearean theater. Then along came poor old Charles I, who within 25 years had managed to bankrupt the treasury, spark a civil war, get himself beheaded, and prompt the (brief-lived) abolition of the very concept of a King of England. With leadership like that, a country doesn’t need external enemies to eat itself alive.

The need for competent leadership in an ever more complicated world has caused monarchy, even more so than despotism, to fall badly out of fashion in the last century; I tend to think the final straw was the abject failure of the European kings and queens, almost all of whom were related to one another in one way or another, to do anything to stop the slow, tragically pointless march up to World War I. Monarchies where the monarch still wields real power today are confined to special situations, such as the micro-states of Monaco and Liechtenstein, and special regions of the world, such as the Middle East.

In Europe, for all those centuries the heart of the monarchical tradition, a surprising number of countries have elected to retain their royal families as living, breathing national-heritage sites, but they’re kept so far removed from the levers of real political power that the merest hint of a political opinion from one of them can set off a national scandal. I confess that I personally don’t understand this desire to keep a phantom limb of the monarchical past alive, and think the royals can darn well turn in the keys to their taxpayer-funded palaces and go get real jobs like the rest of us. I find the fondness for kings and queens particularly baffling in the case of Scandinavia, a place where equality has otherwise become such a fundamental cultural value. But then, I grew up in a country with no monarchical tradition, and I am told that maintaining the tradition of royalty brings in more tourist dollars than it costs tax dollars in Britain at least. I suppose it’s harmless enough.

The notion of a certain group of people who are inherently better-suited to rule than any others is sadly less rare than non-national-heritage monarchies in the modern world. Still, because almost all remaining believers in such a notion believe the group in question is the one to which they themselves belong, such points of view have an inherent problem gaining traction in the court of public opinion writ large.

Of all the forms of government in Civilization, the republic is the most difficult to get a handle on. If we look the word up in the dictionary, we learn little more for sure about any given republic than that it’s a nation that’s not a monarchy. Beyond that, it can often seem that the republic is in the eye of the beholder. In truth, it’s doubtful whether the republic should be considered a concrete system of government at all in the sense of its companions in Civilization. It’s become one of those words everyone likes to use whose real definition no one seems to know. Astonishingly, more than three-quarters of the sovereign nations in the world today have the word “republic” somewhere in their full official name, a range encompassing everything from the most regressive religious dictatorships to the most progressive liberal democracies. Growing up in American schools, I remember it being drilled into me that “the United States is a republic, not a democracy!” because, rather than deciding on public policy via direct vote, citizens elect representatives to lead the nation. Yet such a distinction is not only historically idiosyncratic, it’s also practically pointless. By this standard, no more than one or two real democracies have ever existed in the history of the world, and none after ancient times. Such a thing would, needless to say, be utterly impossible to administer in this complicated modern world of ours. Anyway, if you really insist on getting pedantic about definitions, you can always use the term “representative democracy.”

I suspect that the game of Civilization’s inclusion of the republic is most heavily informed by the ancient Roman Republic, which can be crudely described as a form of sharply limited democracy, where ordinary citizens of a certain stature were given a limited ability to check the powers of the aristocracy. Every legionnaire of the Republic had engraved upon his shield the motto “Senatus Populusque Romanus”: “The Senate and the People of Rome.” Aristocrats made up the vast majority of the former institution, with the plebeians, or common people, largely relegated to a subsidiary role in the so-called plebeian tribunes. The vestiges of such a philosophy of government survive to this day in places like the British Parliament, with its split between a House of Lords — which has become all but powerless in this modern age of democracy, little more than yet another living national-heritage site — and a House of Commons.

In that same historical spirit, the republic in the game functions as a sort of halfway point between monarchy and democracy, subject to a milder form of the same advantages and disadvantages as the latter — advantages and disadvantages which I think are best discussed when we get to democracy itself.

I don’t want to move on from the republic quite yet, however, because when we trace these roots back to ancient times we do find something else there of huge conceptual importance. Namely, we find the idea of the state as an ongoing entity unto itself, divorced from the person or family that happens to rule it. This may seem a subtle distinction, but it really is an important one.

The despot or the monarch for all intents and purposes is the state. Thus the royal we you may remember from Shakespeare; “Our sometime sister, now our queen,” says Claudius in Hamlet in reference to his wife, who is also the people’s queen. The ruler and the state he rules truly are one. But with the arrival of the republic on the scene, the individual ruler is separate from and — dare I say it? — of less overall significance than the institution of the state itself. He becomes a mere caretaker of a much greater national legacy. This is important not least because it opens the door to a system of government that prioritizes the good of the state over that of its leaders, that emphasizes the ultimate sovereignty of the state as a whole, in the form of all of the citizens that make it up. It opens the door to, as John Adams famously wrote in the Massachusetts Constitution of 1780, “a government of laws and not of men.” In other words, it makes the philosophy of government safe for democracy.

(Sources: the books Civilization, or Rome on 640K A Day by Johnny L. Wilson and Alan Emrich, The Story of Civilization Volume I: Our Oriental Heritage by Will Durant, The End of History and the Last Man by Francis Fukuyama, The Rise and Fall of Ancient Egypt by Toby Wilkinson, The Republic by Plato, Politics by Aristotle, Plough, Sword, and Book: The Structure of Human History by Ernest Gellner, Aristocracy: A Very Short Introduction by William Doyle, Democracy: A Very Short Introduction by Bernard Crick, Plato: A Very Short Introduction by Julia Annas, Political Philosophy: A Very Short Introduction by David Miller, and Hamlet by William Shakespeare.)