I’ve already spent a lot of time on the history of one of the two great corporate survivors from the early years of videogames, Electronic Arts. But I’ve neglected the older of the pair, Activision, because that company originally made games only for consoles, a field of gaming history I’ve largely left to others who are far better qualified than I to write about it. Still, as we get into the middle years of the 1980s Activision suddenly becomes very relevant indeed to computer-gaming history. Given that, it’s worth taking the time now to look back on Activision’s founding and earliest days, to trace the threads that led to some important titles that are very much in my wheelhouse. In my view the single most important figure in Activision’s early history, and one who too often goes undercredited in favor of an admittedly brilliant team of programmers and designers, is Jim Levy, Activision’s president through the first seven-and-a-half years of its existence. So, as the title of this article would imply, one of my agendas today will be to do a little something to correct that.

The penultimate year of the 1970s was a trying time at Atari. The Atari VCS console, eventually to become an indelible totem of an era not just for gamers but for everyone, had a difficult first year after its October 1977 introduction, with sales below expectations and boxes piling up in warehouses. Internally the company was in chaos, as a full-on war took place between Nolan Bushnell, Atari’s engineering-minded founder, and its current CEO, Ray Kassar, a slick East Coast plutocrat whom new owners Warner Communications had installed with orders to clean up Bushnell’s hippie playground and turn it into an operation properly focused on marketing and the bottom line. Kassar won the war in December of 1978: Bushnell was fired. From that point on Atari became a very different sort of place. Bushnell had loved his engineers and programmers, but Kassar had no intrinsic interest in Atari’s products and little regard for the engineers and programmers who made them. The reign of Kassar as sole authority at Atari began auspiciously. Even before Bushnell’s departure, Kassar’s promotional efforts, with a strong assist from a fortuitous craze for a Japanese stand-up-arcade import called Space Invaders, had begun to revive the VCS; the Christmas of 1978, although nothing compared to Christmases to come, was strong enough to clear out some of those warehouses.

David Crane, Bob Whitehead, Larry Kaplan, and Alan Miller were known as the “Fantastic Four” at Atari during those days because the games they had (individually) programmed accounted for as much as 60 percent of the company’s total cartridge sales. Like most of Atari’s technical staff, they were none too happy with this new, Kassar-led version of the company. Trouble started in earnest when Kassar distributed a memo to his programmers listing Atari’s top-selling titles along with their sales, with an implied message of “Give us more like these, please!” The message the Fantastic Four took away, however, was that they were each generating millions for Atari whilst toiling in complete anonymity for salaries of around $30,000 per year. Miller, whose history with Atari went back to playing the original Pong machine at Andy Capp’s Tavern and who was always the most vocal and business-oriented of the group, drafted a contract modeled after those used in the book and music industries that would award him royalties and credit, in the form of a blurb on the game boxes and manuals, for his work; he then sent it to Kassar. Kassar’s reply was vehemently in the negative, allegedly comparing programmers to “towel designers” and saying their contribution to the games’ success was about as great as that of the person on the assembly line who put the packages together. Miller talked to Crane, Whitehead, and Kaplan, convincing them to join him in seeking to start their own company to write their own games to compete with those of Atari. Such a venture would be the first of its kind.

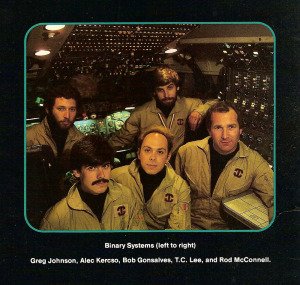

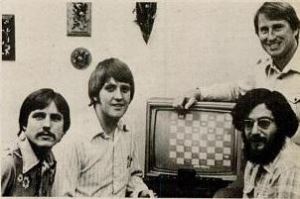

The Fantastic Four, 1980: from left, Bob Whitehead, David Crane, Larry Kaplan, and Alan Miller (standing)

Through the lawyer they consulted, they met Jim Levy, a 35-year-old businessman who had just been laid off after six years at a sinking ship of a company called GRT Corporation, located right there in Sunnyvale, California, also home to Atari. GRT had mainly manufactured prerecorded tapes for music labels, but had also run a few independent labels of their own on the side. Eager to break into that other, creative part of the industry, Levy had come up with a scheme to take the labels off their erstwhile parent’s hands, and had secured $350,000 in venture capital for the purpose. But when his lawyer introduced him to the Fantastic Four that plan changed immediately. Here was a chance to get in on the ground floor of a whole new creative industry — and that really was, as we shall see, how Levy regarded game making, as a primarily creative endeavor. He convinced his venture capitalists to double their initial investment, and on October 1, 1979, after the Fantastic Four had one by one tendered their resignations to Atari as an almost unremarked part of a general brain drain that was going on under Kassar’s new regime, VSYNC, Inc., was born. But that name, an homage to the “vertical sync” signal that Atari VCS programmers lived and died by (see Nick Montfort and Ian Bogost’s Racing the Beam), obviously wouldn’t do. And so, after finding that “Computervision” was already taken, Levy came up with “Activision,” a combination of “action” and “vision” — or, if you like, “action” and “television.” It didn’t hurt that the name would come before that of the company they knew was doomed to become their arch-nemesis, Atari, in sales brochures, phone books, and corporate listings. By January of 1980, when they quietly announced their existence to select industry insiders at the Consumer Electronics Show — among them a very unhappy and immediately threatening Kassar — Activision included 8 people. By that year’s end, it would include 15. And by early 1983, when Activision 1.0 peaked, it would include more than 400.

Aware from the beginning of the potential for legal action on Atari’s part, Activision’s lawyer had made sure that the Fantastic Four exited Atari with nothing but the clothes on their backs. The first task of the new company thus became to engineer their own VCS development system. Much of this work was accomplished in the spare bedroom of Crane’s apartment, even before Levy got the financing locked down and found them an office. Activision was thus able to release their first four games in relatively short order, in July of 1980. Following Atari’s own approach to naming games in the early days, Dragster, Boxing, Checkers, and Fishing Derby had names that were rather distressingly literal. But the boxes were brighter and more exciting than Atari’s, the manuals all included head shots of their designers along with their signatures and personal thoughts on their creations, and, most importantly, most gamers agreed that the quality was much higher than what they’d come to expect from Atari’s own recent releases. Activision would refine their approach — not to mention their game naming — over the next few years, but these things would remain constants.

In spite of the example of a thriving software industry on early PCs like the TRS-80 and Apple II, it seems to have literally never occurred to Atari that anyone could or would do what Activision had done and develop software for “their” VCS. That lack of expectation had undoubtedly been buttressed by the fact that the VCS, a notoriously difficult machine to program for even those in the know, was a closed box, its secrets and development tools secured behind the new hi-tech electric door locks Kassar had had installed at Atari almost as soon as he arrived. The Fantastic Four, however, carried all that precious knowledge around with them in their heads. Atari sued Activision right away for alleged theft of “trade secrets,” but had a hard time coming up with anything they had actually done wrong. There simply was no law against figuring out — or remembering — how the VCS worked and writing games for it. And so Atari employed the time-honored technique of trying to bury their smaller competitor under lawsuits that would be very expensive to defend regardless of their merits. That might have worked — except that Activision made an astonishingly successful debut. This was the year that the VCS really took off, and Activision was there to reap the rewards right along with Atari, selling more than $60 million worth of games during their first year. The levelheaded Levy, who had anticipated a legal storm from the beginning, simply treated it as another tax or other business expense, budgeting a certain amount every quarter to keeping Atari at bay.

Under Levy’s guidance, Activision now proceeded to write a playbook that many of the publishers we’ve already met would later draw from liberally. Activision’s designer/programmers were always shown as cool people doing cool things. Steve Cartwright, designer of Barnstorming, was photographed, with scarf blowing rakishly in the wind, about to take to the skies in a real biplane; Carol Shaw, designer of River Raid and one of the vanishingly small number of female programmers writing videogames, appeared on her racing bike; Larry (no relation to Alan) Miller, designer of Enduro, could be seen perched on the hood of a classic car. Certainly Trip Hawkins would take note of Activision’s publicity techniques when he came up with his own ideas for promoting his “electronic artists” like rock stars. Almost from the beginning Activision fostered a sense of community with their fans through a glossy newsletter, Activisions, full of puzzles and contests and pictures and news of the latest goings-on around the offices in addition to plugs for the newest games — a practice Infocom and EA among others would also take to heart. Activision, however, did it all on a whole different scale. By 1983 they were sending out 400,000 copies of every newsletter issue, and receiving more than 10,000 pieces of fan mail every week.

In those early years Activision practically defined themselves as the anti-Atari. If Atari was closed and faceless, they would be open and welcoming, throwing grand shindigs for press and fans to promote their games, like the “Barnstorming Parade,” featuring, once again, Cartwright in a real airplane; the “Decathlon Party,” featuring 1976 Olympic Decathlon Gold Medal winner Bruce Jenner, to promote The Activision Decathlon; or the “Rumble in the Jungle” to promote their biggest hit of all, Crane’s Pitfall!. While, as Jenner’s presence will attest, they weren’t above a spot of celebrity endorsing now and again, they also maintained a certain artistic integrity in sticking to original game concepts and refusing any sort of licensing deals, whether of current arcade hits or media properties. This again placed them in marked contrast to Atari, who, in the wake of their licensed version of Taito’s arcade hit Space Invaders that had almost singlehandedly transformed the VCS from a modest success to a full-fledged cultural phenomenon in the pivotal year of 1980, never saw a license they didn’t want. Our games, Levy never tired of saying, are original works that can stand on their own merits. As for the licensed stuff: “People will take one look because they know the movie title. But if an exciting game isn’t there, forget it. Our audiences are too sophisticated. You can’t fool them.” Such respect for his audience, whether real or feigned or a bit of both, was another thing that endeared Activision to them.

Released in April of 1982 just as the videogame craze hit its peak — revenues that year reached fully half those of the music industry — Pitfall! was Activision 1.0’s commercial high-water mark, selling more than 4 million copies, more than any of Atari’s own games except Pac-Man. Pitfall! would go on to become the urtext of an entire genre of side-scrolling platform games. In more immediate terms, it made Crane something of a minor celebrity as well as a very wealthy young man indeed; one magazine even dared to compare his earnings from Pitfall! with those of Michael Jackson from Thriller, although that was probably laying it on a bit thick. Meanwhile four other Activision games — Laser Blast, Kaboom!, Freeway, and River Raid — had passed the 1 million mark in sales, and dozens of other new publishers had followed Activision’s example by rolling up their sleeves, figuring out how the VCS worked and how they could develop for it, and jumping into the market. The resulting flood of cartridges, many of them sold at a fraction of Activision’s price point and still more of them substandard even by Atari’s less than exacting standards, would be blamed by Levy for much of what happened next.

In April of 1983, Levy confidently predicted in his keynote speech for The First — and, as it would turn out, last — Video Games Conference that the industry would triple in size within five years. In June, Activision went public, creating a number of new millionaires. It was, depending on how you look at it, the best or the worst possible timing. Just weeks later began in earnest the Great Videogame Crash of 1983, and everything went to hell for Activision, just as for the rest of the industry. Activision had always been a happy place, the sort of company whose president could suddenly announce that he was taking everyone along with their significant others to Hawaii for four days to celebrate Pitfall!’s success; where the employees could move their managers’ offices en masse into the bathrooms for April Fool’s without fear of reprisal; whose break rooms were always bursting with doughnuts and candy. Thus November 10, 1983, was particularly hard to take. That was the day Levy laid off a quarter of Activision’s workforce. It was his birthday. It was also only the first of many painful downsizings.

Levy had bought into the contemporary conventional wisdom that home computers were destined to replace game consoles in the hearts and minds of consumers, that the home-computer market was going to blow up so big as to dwarf the VCS craze at its height. His plan was thus to turn Activision into a publisher of home-computer software rather than game cartridges. His biggest problem looked to be bridging the chasm that lay between the recently expired fad of the consoles and the projected sustained domination of the home computer. The painful fact was that, even on the heels of a hugely successful 1983, all of the home-computer models combined still had nowhere near the market penetration of the Atari VCS alone at its peak. There simply weren’t enough buyers out there to sustain a company of the size to which Activision had so quickly grown. The only way to bridge the chasm was to glide over on the millions they had socked away in the bank during the boom years whilst brutally downsizing to stretch those millions farther. Activision 2.0 would have to be, at least for the time being, a bare shadow of Activision 1.0’s size. Yet “the time being” soon began to look like perpetuity, especially as the cash reserves began to dry up. In 1983, Activision 1.0 had revenues of $158 million; in 1986, three years into Levy’s remaking/remodeling, Activision 2.0 had revenues of $17 million. The fundamental problem, which grew all too clear as Activision 2.0’s life went on, was that the home-computer boom had fizzled about a decade early and about 90 percent short of its expected size.

With Activision projected, despite the frantic downsizing, to lose $18 million in 1984, Levy quietly forgot all his old prejudices against licensed products when Columbia Pictures made it known that they would be interested in letting David Crane do a game based on their new movie Ghostbusters. Ghostbusters the game was literally an afterthought; the movie had already been in theaters a week or two when Activision and Columbia started discussing the idea. The deal was closed within days, and Crane was told he had exactly six weeks to come up with the game before Ghostbusters mania died down — which was just as well, as he was planning to get married in six weeks. Exactly these sorts of external pressures had undone Atari licensed games like Pac-Man and E.T., and were a big part of the reason that Levy had heretofore avoided licenses. Luckily, Crane already had been working on a game for the Commodore 64 he called Car Wars (no apparent relation to the Steve Jackson Games board game, the license for which was held by Origin Systems), which had the player undertaking a series of missions whilst racing around a city map battling other vehicles. Each successful mission earned money she could use to upgrade her car for the next, more difficult level. Crane realized it should be possible to retrofit ghosts and lots of other paraphernalia from the movie onto the idea. Realizing that he couldn’t possibly do it all on his own, he recruited a team of four others to help him. Ghostbusters thus became the first Activision game to abandon the single-auteur model of development that had been the standard until then. In its wake almost every other project also became a team project, a concession to the technical realities of developing for the more advanced Commodore 64 and other home computers versus the old VCS. With the help of his assistants, Crane was able to add many charming little touches to Ghostbusters, like sampled taglines from the movie (“He slimed me!”) and a chiptunes version of Ray Parker, Jr.’s monster hit of a theme song, complete with onscreen words and a bouncing ball to help you sing along.

David Crane was Activision’s King Midas. Despite its rushed development, Ghostbusters turned out to be a very playable game, even a surprisingly sophisticated one, what with its CRPG-like in-game economy and the thread of story that linked all of the ghost-busting missions together. It even has a real ending, with the slamming of the dimension door that’s been setting all of these ghosts loose in our universe. Released in plenty of time for Christmas 1984 and doubtless buoyed by the fact that Ghostbusters the movie just kept going and going — it would eventually become the most successful comedy of the 1980s — Ghostbusters became Activision 2.0’s biggest hit by a light year and one of the bestselling Commodore 64 games of all time, selling well into the hundreds of thousands. Like relatively few Commodore 64 games but almost all of the real blockbusters, it became hugely popular in both North America and Europe, where Activision, unlike most of their peers who published there if at all through the likes of U.S. Gold, had set up a real semi-autonomous operation — Activision U.K. — during the boom years. And it was of course widely ported to other platforms popular on both sides of the pond.

It’s at this point that Levy’s story and Activision’s get really interesting. Having proved through Ghostbusters that his company could make the magic happen on the Commodore 64 as well as the Atari VCS, however much more modest the commercial rewards for even a huge hit on the former platform were destined to be, Levy now began to push through a series of aggressively innovative, high-concept titles, often over the considerable misgivings of the board of directors with which Activision’s IPO had saddled him. I don’t want to overstate the case; it’s not as if Levy transformed Activision overnight into an art-house publisher. The next few years would bring plenty of solid action games alongside the occasional adventure as well as, what with Activision having popped the lid off this particular can of worms to so much success, more licensed titles: Aliens, Labyrinth, Transformers, and, just to show that not all licenses are winners, a computerized adaptation of Lucasfilm’s infamous flop Howard the Duck that managed to be almost as bad as its inspiration. Yet betwixt and between all this expected product Levy found room for the weird and the wacky and occasionally the visionary. He made his agenda clear in press interviews. Rhetorically drawing on his music-industry experience despite the fact that he had never actually worked on the creative side of that industry, he cast Activision’s already storied history as that of a plucky artist-driven indie label that went “head to head with the majors” and thereby proved that “the artist can be trusted,” whilst chastising competitors for “a certain stagnation in creative style, concept, and content.” The game industry was — or should be — driven by its greatest assets, its creators. His job, and that of the other business-oriented people, was just to facilitate their art and to get it before the public.

> Writing a game is close to the whole concept of songwriting and composing. Then you get involved later on with the ink-and-paper people for packaging. There are a lot of similarities between the record business and what we do.

There was a certain amount of calculation in such statements, just as there was in Trip Hawkins’s campaigns on behalf of his own electronic artists. Yet, also as in Hawkins’s case, I believe the core sentiment was very sincere. Levy genuinely did believe he was witnessing the birth of a new form (or forms) of art, and genuinely did feel a responsibility to nurture it. Garry Kitchen, a veteran programmer who had joined Activision during the boom years, tells of how Levy during the period of Activision 2.0 kept rejecting his ideas for yet more simple action games: “Do something different, innovate!” How many other game-industry CEOs can you imagine saying such a thing then or now?

At this point, then, I’d like to very briefly tell you about a handful of Activision’s titles from 1985 and 1986. Though some are more interesting as ideas than as playable works, none of what follows could be accused of failing to heed Levy’s command to “innovate!” Some aren’t actually games at all, fulfilling another admonition of Levy to his programmers: to stop always thinking in terms of rules and scores and winners and losers.

The first fruit of the creative pressure Levy put on Kitchen was The Designer’s Pencil. Yet another impressive implementation of a Macintosh-inspired interface on an 8-bit computer, The Designer’s Pencil is, depending on how you look at it, either a thoroughly unique programming environment or an equally unique paint program. Rather than painting directly to the screen, you construct a script to control the actions of the eponymous pencil. You can also play sounds and music while the pencil is about its business. A drawing and animation program for people who can’t draw and a visual introduction to programming, The Designer’s Pencil is most of all just a neat little toy.

Responding to users of The Designer’s Pencil who begged for ways to make their creations interactive, Kitchen later provided Garry Kitchen’s GameMaker: The Computer Game Design Kit. Four separate modules let you make the component pieces of your game: Scenes, Sprites, Music, and Sound. Then you can wrap it all together in a blanket of game logic using the Editor. Inevitably more complicated to work with than The Designer’s Pencil, GameMaker is still entirely joystick-driven if you want it to be and remarkably elegant given the complexity of its task. It was by far the most powerful software of its kind for the Commodore 64, the action-game equivalent of EA’s Adventure Construction Set. In a sign of just how far the industry had come in a few years, GameMaker included a reimplementation of Pitfall!, Activision’s erstwhile state-of-the-art blockbuster, as a freebie, just one of several examples of what can be done and how.

Described by its creator Russell Lieblich as a “Zen” game, Web Dimension has an infinite number of lives, no score, and no time limit. The ostensible theme is evolution: levels allegedly progress through atoms, planets, amoebae, jellyfish, germs, eggs, embryos, and finally astronauts. But good luck actually making those associations. The game is best described, as a contemporary reviewer put it, as “a musical fantasy of color, sight, and sound,” on the same wavelength as, if less ambitious than, Automata’s Deus Ex Machina. As with that game, the soundtrack is the most important part of Web Dimension. Lieblich considered himself a musician first, programmer second, and not actually much of a gamer: “I’m not really into games, but I love music, so I designed a musical game that doesn’t keep score.” Like Deus Ex Machina, it is not much of a game in conventional terms, but Web Dimension is interesting as a piece of interactive art.

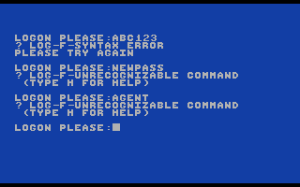

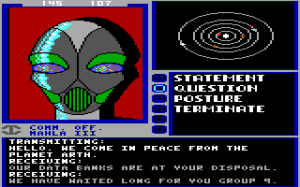

Hacker begins with a screen that’s blank but for a single blinking login prompt. You’re trying to break into a remote computer system with absolutely nothing to go on. Literally: in contrast to the likes of the Ultima or Infocom games, Hacker’s box contained only a card telling how to boot the game and an envelope of hints for the weak and/or frustrated. Discovering the rules that govern the game is the game. Designed and programmed by Steve Cartwright, creator of Barnstorming amongst others, it was another achievement of an Activision old guard who continued to prove they had plenty of new tricks up their sleeves. This weird experiment of a game actually turned into a surprising commercial success, Activision 2.0’s second biggest seller after Ghostbusters. Playing it is a disorienting, sinister, oddly evocative experience until you figure out what’s going on and what you’re doing, whereupon it suddenly all becomes anticlimactic. “Anticlimactic” also best describes the sequel, Hacker II: The Doomsday Papers; Hacker is the kind of thing that can only really work once.

Little Computer People is today the most remembered creation of Activision’s experimental years, having been an important influence on a little something called The Sims. It’s yet another work by the indefatigable David Crane, his final major achievement at Activision. The fiction, which Crane hewed to relentlessly even in interviews, has you helping out as a member of the Activision Little Computer People Research Group, looking into the activities of the LCPs who have recently been discovered living inside computers. When you start the program you’re greeted by a newly built house perfect for the man and his dog who soon move in. Every single copy of Little Computer People contained a unique LCP with his own personality, a logistical nightmare for Activision’s manufacturing process. He lives his life on a realistic albeit compressed daily schedule, with 24 hours inside the computer passing in 6 outside it. Depending on the time of day and his personality and mood as well as your handling, the little fellow might prefer to relax with a book in his favorite armchair, play music on his piano, exercise, chat on the phone, play with his dog, watch television, listen to the record collection you’ve (hopefully) provided, or play a game of cards with you — when he isn’t sleeping, brushing his teeth, or showering, that is. You can try to get him to do your bidding via a parser interface, but if you aren’t polite enough about it or if he’s just feeling cranky the answer is likely to be a big fat “No!”

While Little Computer People was frequently dismissed as pointless by the more goal-oriented among us, other players developed strong if sometimes dysfunctional attachments to their LCPs. For example, in an article for Retro Gamer, Kim Wild told of her fruitless year-long struggle to get her hygienically challenged LCP just to take a shower already. In its way Little Computer People offered as many tempting mysteries as any adventure game. Contemporary online services teemed with controversy over the contents of a certain upstairs closet into which the LCP would periodically disappear, only to reappear with a huge smile on his face. The creepy majority view was that he had a woman stashed in there, although a vocal minority opted for the closet being a private liquor cabinet.

While it didn’t sell in anything like the quantities of some of Crane’s other games, Little Computer People was a moderate success. Further cementing its connection to The Sims, Crane and Activision planned a series of expansions that would have added new houses and other environments for the LCPs and maybe even the possibility of having more than one of them, and thus of watching them interact with one another instead of only with their dogs and their players. For reasons that will have to wait for a future article, however, that would never happen.

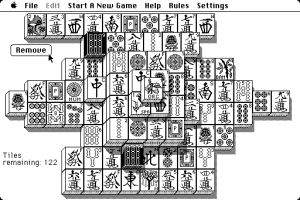

Activision’s first notable release for the new 68000-based machines was Shanghai. A simple solitaire tile-matching exercise that uses mahjong tiles — and not, it should be emphasized, an implementation of the much more complex actual game of mahjong — Shanghai was created by Brodie Lockard, a former gymnast who’d become paralyzed following a bad fall and used his “solitaire mahjong” almost as a form of therapy in the years that followed. Particularly in its original Macintosh incarnation, it’s lovely to look at and dangerously addictive. Like many of Activision’s experimental titles of the period, Shanghai cut against most of the trends in gaming of the mid-1980s. Its gameplay was almost absurdly simple while other games were reveling ever more in complexity; it was playable in short bursts of a few minutes rather than demanding a commitment of hours; its simple but atmospheric calligraphic visuals and feel of leisurely contemplation made a marked contrast to the flash and action of other games; its pure abstraction was the polar opposite to other games’ ever-growing focus on the experiential. Moderately successful in its day, Shanghai was perhaps the most prescient of all Activision’s games from this period, forerunner to our current era of undemanding, bite-sized mobile gaming. Indeed, it would eventually spawn what seems like a million imitators that remain staples on our smartphones and tablets today. And it would also spawn, the modern world being what it is, lots and lots of legal battles over who actually invented solitaire mahjong; there’s still considerable debate about whether Lockard merely adapted an existing Chinese game to the computer or invented a new one from whole cloth.

There are still other fascinating titles whose existence we owe to Jim Levy’s Activision 2.0. In fact, I’m going to use my next two articles to tell you about two more of them — inevitably, given our usual predilections around here, the most narrative-focused of the bunch.

(Lots of print sources for this one, including: Commodore Magazine of June 1987, February 1988, and July 1989; Billboard of June 16 1979, July 14 1979, September 15 1979, June 19 1982, and November 3 1984; InfoWorld of August 4 1980 and November 5 1984; Compute!’s Gazette of March 1985; Creative Computing of September 1983 and November 1984; Antic of June 1984; Retro Gamer 18, 25, 79, and 123; Commodore Horizons of May 1985; Commodore User of April 1985; Zzap! of December 1985; San Jose Mercury of February 18 1988; New York Times of January 13 1983; Commodore Power Play of October/November 1985; Lodi News Sentinel of April 4 1981; the book Zap!: The Rise and Fall of Atari by Scott Cohen; and the entire 7-issue run of the Activisions newsletter. Online sources include Gamasutra’s histories of Activision and Atari and Brad Fregger’s memories of Shanghai’s development.)