Science without religion is lame, religion without science is blind.

— Albert Einstein

If you ever feel like listening to two people talking past one another, put a strident atheist and a committed theist in a room together and ask them to discuss The God Question. The strident atheist — who, as a colleague of the psychologist and philosopher of religion William James once put it, “believes in No-God and worships Him” — will trot out a long series of supremely obvious, supremely tedious Objective Truths. He’ll talk about evolution, about the “God of the gaps” theory of religion as a mere placeholder for all the things we don’t yet understand, about background radiation from the Big Bang, about the age-old dilemma of how a righteous God could allow all of the evil and suffering which plague our world. He’ll talk and talk and talk, all the while presuming that the theist couldn’t possibly be intelligent enough to have considered any of these things for herself, and that once she’s been exposed to them at last her God delusion will vanish in a puff of incontrovertible logic. The theist, for her part, is much less equipped to argue in this fashion, but she does her best, trying to explain using the crude tool of words her ineffable experiences that transcend language. But her atheist friend, alas, has no time, patience, or possibly capability for transcendence.

My own intention today certainly isn’t to convince you of the existence or non-existence of God. Being a happy agnostic — one of what the Catholic historian Hugh Ross Williamson called “the wishy-washy boneless mediocrities who flap around in the middle” — I make a poor advocate for either side of the debate. But I will say that, while I feel a little sorry for those people who have made themselves slaves to religious dogma and thereby all but lost the capacity to reason in many areas of their lives, I also feel for those who have lost or purged the capacity to get beyond logic and quantities and experience the transcendent.

“One must have musical ears to know the value of a symphony,” writes William James. “One must have been in love one’s self to understand a lover’s state of mind. Lacking the heart or ear, we cannot interpret the musician or the lover justly, and are even likely to consider him weak-minded or absurd.” Richard Dawkins, one of the more tedious of our present-day believers in No-God, spends the better part of a chapter in his book The God Delusion twisting himself into knots over the Einstein quote that opens this article, trying to logically square the belief of the most important scientist of the twentieth century in the universe’s ineffability with the same figure’s claim not to believe in a “personal God.” Like a cat chasing a laser pointer, Dawkins keeps running around trying to pin down that which refuses to be captured. He might be happier if he could learn just to let the mystery be.

In a sense, a game which hopes to capture the more transcendent aspects of life runs into the same barriers as the unadulteratedly rational person hoping to understand them. Many commenters, myself included, have criticized games over the years for a certain thematic niggardliness, a refusal to look beyond the physics of tanks and trains and trebuchets and engage with the concerns of higher art. We’ve tended to lay this failure at the feet of a design culture that too often celebrates immaturity, but that may not be entirely fair. Computers are at bottom calculating machines, meaning they’re well-suited to simulating easily quantifiable physical realities. But how do you quantify love, beauty, or religious experience? It can be dangerous even to try. At worst — and possibly at best as well — you can wind up demeaning the very ineffabilities you wished to celebrate.

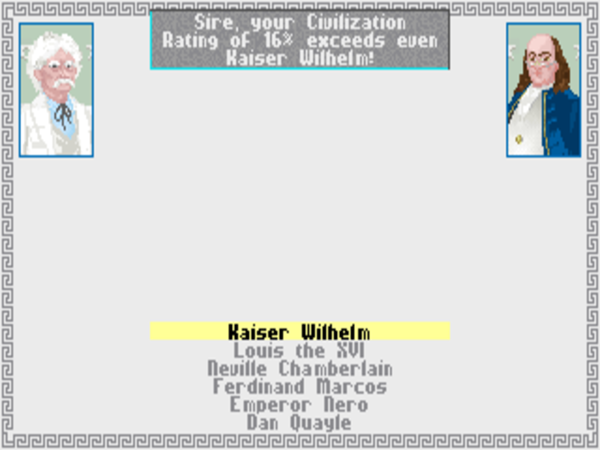

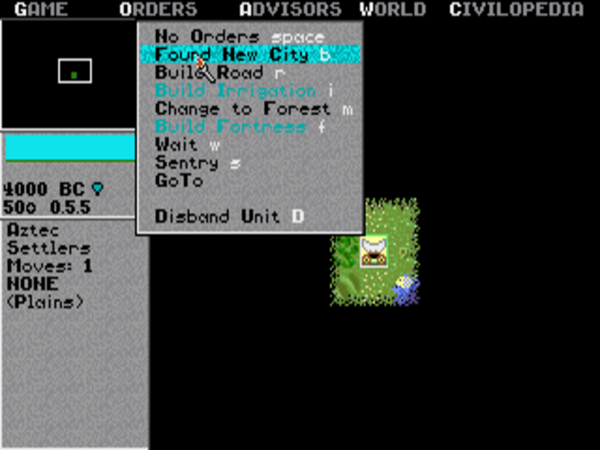

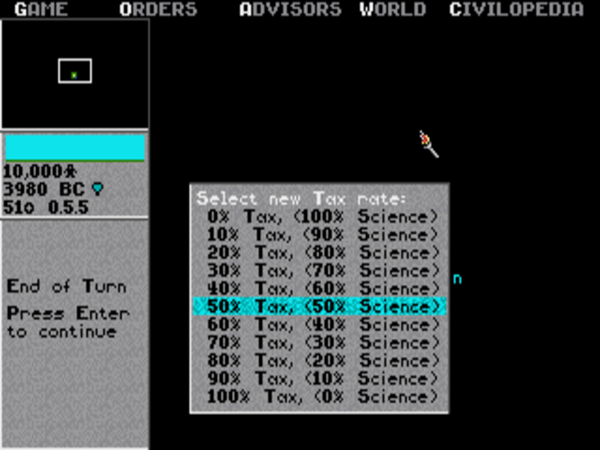

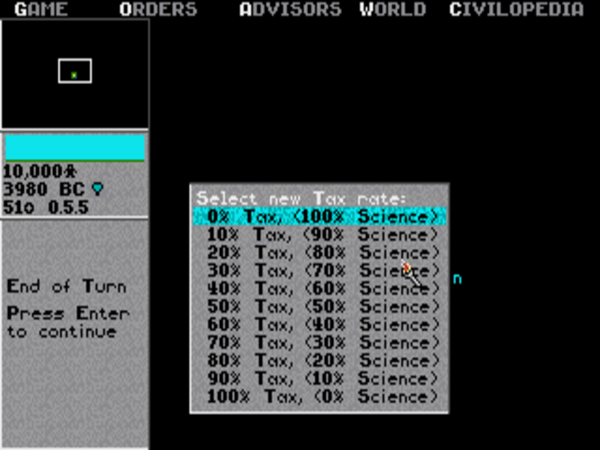

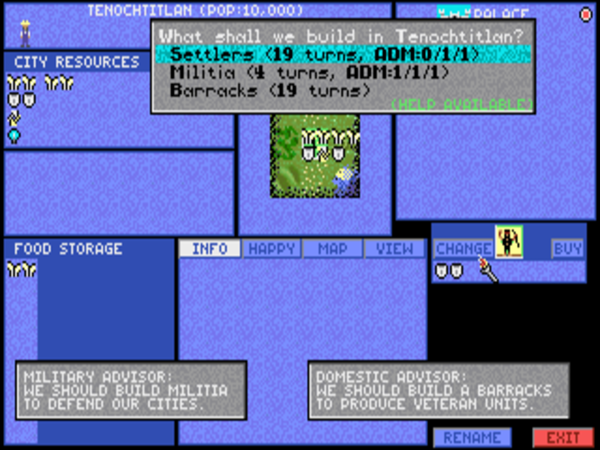

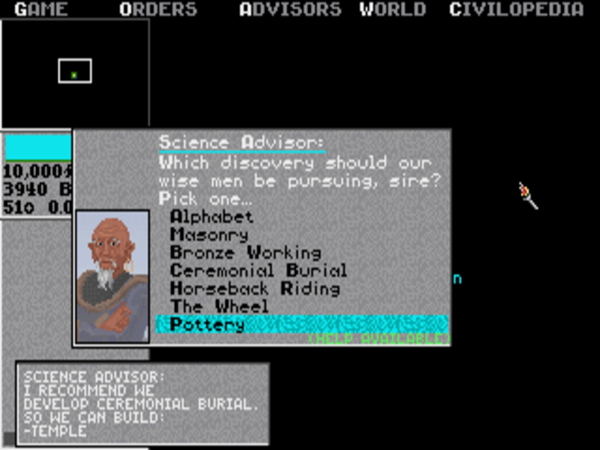

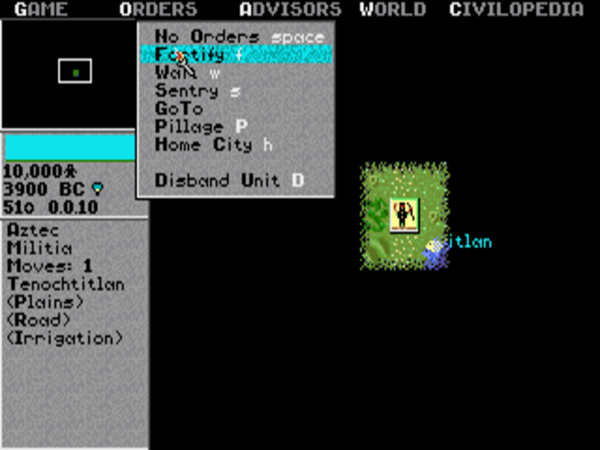

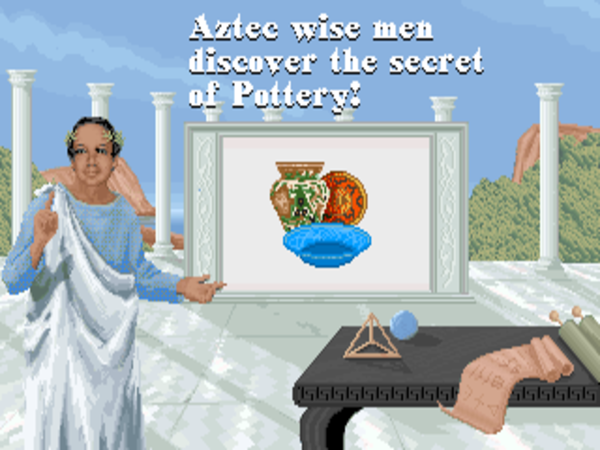

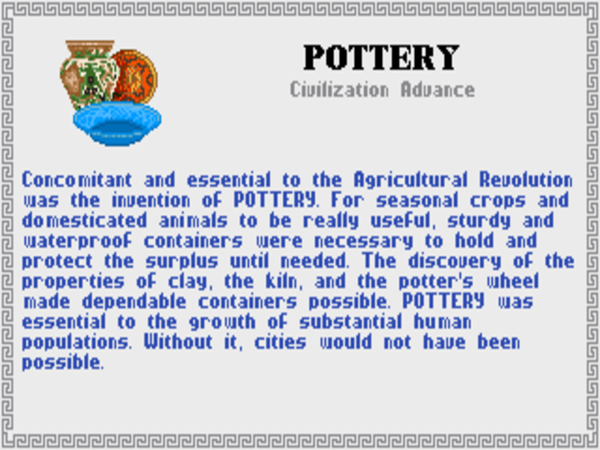

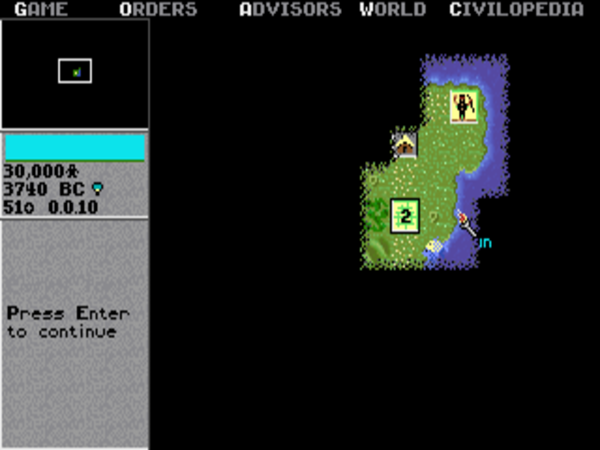

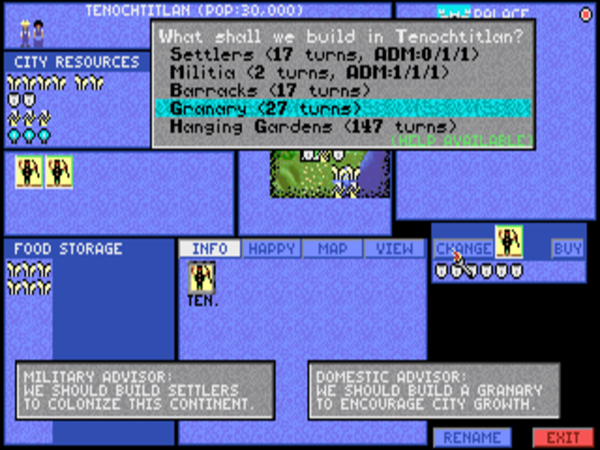

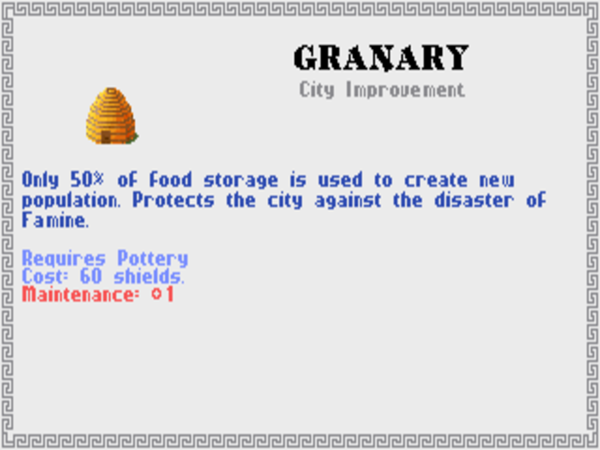

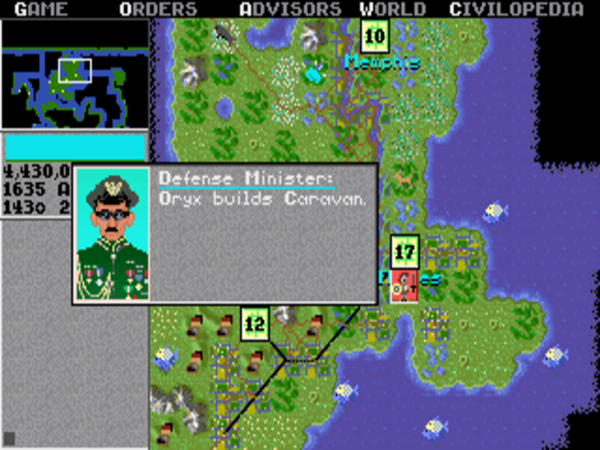

Civilization as well falls victim to this possibly irreconcilable dilemma. In creating their game of everything, their earnest attempt to capture the entirety of the long drama of human civilization, Sid Meier and Bruce Shelley could hardly afford to leave out religion, one of said drama’s prime driving forces. Indeed, they endeavored to give it more than a token part, including the pivotal advances of Ceremonial Burial, Mysticism, and Religion — the last really a stand-in for Christianity, giving you the opportunity to build “cathedrals” — along with such religious Wonders of the World as the Oracle of Delphi, the Sistine Chapel, and the church music of Johann Sebastian Bach. Yet it seems that they didn’t know quite what to do with these things in mechanical, quantifiable, computable terms.

The Civilopedia thus tells us that religion was important in history only because “it brought peace of mind and the ability to get on with the work of life.” In that spirit, all of the advances and Wonders dealing with religion serve in one way or another to decrease the unhappiness level of your cities — a level which, if it gets too high, can throw a city and possibly even your entire civilization into revolt. “The role of religion in Sid Meier’s Civilization,” note Johnny L. Wilson and Alan Emrich in Civilization: or Rome on 640K a Day, “is basically the cynical role of pacifying the masses rather than serving as an agent for progress.” This didn’t sit terribly well with Wilson in particular, who happened to be an ordained Baptist minister. Nor could it have done so with Sid Meier, himself a lifelong believer. But, really, what else were they to do with religion in the context of a numbers-oriented strategy game?

I don’t have an answer to that question, but I do feel compelled to make the argument the game fails to make, to offer a defense of religion — and particularly, what with Civilization being a Western game with a Western historical orientation, Christianity — as a true agent of progress rather than a mere palliative. In these times of ours, when science and religion seem to be at war and the latter is all too frequently read as the greatest impediment to our continued progress, such a defense is perhaps more needed than ever.

Richard Dawkins smugly pats himself on the back for his fair-mindedness when, asked if he really considers religion to be the root of all evil in the world, he replies that no, “religion is not the root of all evil, for no one thing is the root of everything.” And yet, he tells us:

Imagine, with John Lennon, a world with no religion. Imagine no suicide bombers, no 9/11, no 7/7, no Crusades, no witch hunts, no Gunpowder Plot, no Indian partition, no Israeli/Palestinian Wars, no Serb/Croat/Muslim massacres, no persecution of Jews as “Christ-killers,” no Northern Ireland “troubles,” no “honour killings,” no shiny-suited bouffant-haired televangelists fleecing gullible people of their money (“God wants you to give till it hurts”). Imagine no Taliban to blow up ancient statues, no public beheadings of blasphemers, no flogging of female skin for the crime of showing an inch of it.

Fair points all; the record of religious — and not least Christian — atrocities is well-established. In the interest of complete fairness, however, let’s also acknowledge that but for religion, the ancient statues whose destruction at the hands of the Taliban Dawkins so rightly decries would never have existed in the first place, and neither would the Jews whose persecution by Christians he laments. Scholar of early Christianity Bart D. Ehrman — who, in case it matters, is himself today a reluctant non-believer — describes a small subset of the other things the world would lack if Christianity alone had never come to be:

The ancient triumph of Christianity proved to be the single greatest cultural transformation our world has ever seen. Without it the entire history of Late Antiquity would not have happened as it did. We would never have had the Middle Ages, the Reformation, the Renaissance, or modernity as we know it. There could never have been a Matthew Arnold. Or any of the Victorian poets. Or any of the other authors of our canon: no Milton, no Shakespeare, no Chaucer. We would have had none of our revered artists: no Michelangelo, Leonardo da Vinci, or Rembrandt. And none of our brilliant composers: no Mozart, Handel, or Bach.

One could say that such an elaborate counterfactual sounds more impressive than it really is; the proverbial butterfly flapping its wings somewhere in antiquity could presumably also have deprived us of all those things. Yet I think Ehrman’s deeper point is that all of the things and people he mentions, along with the modern world order and even the narrative of progress that has done so much to shape it, are at heart deeply Christian, whether they express any beliefs about God or not. I realize that’s an audacious statement to make, so let me try to unpack it as carefully as possible.

In earlier articles, I’ve danced around the idea of the narrative of progress as a prescriptive ethical framework — a statement of the way things ought to be — rather than a descriptive explication of the way they actually are. Let me try to make that idea clearer now by turning to one of the most important documents to emerge from the Enlightenment, the era that spawned the narrative of progress: the American Declaration of Independence.

We don’t need to read any further than the beginning of the second paragraph to find what we’re looking for: “We hold these truths to be self-evident, that all men are created equal, that they are endowed by their Creator with certain unalienable Rights, that among these are Life, Liberty, and the pursuit of Happiness.” No other sentence I’ve ever read foregrounds the metaphysical aspect of progress quite like this one, the most famous sentence in the Declaration, possibly the most famous in all of American history. It’s a sentence that still gives me goosebumps every time I read it, thanks not least to that first clause: “We hold these truths to be self-evident.” This might just be the most sweeping logical hand-wave I’ve ever seen. Nowhere in 1776 were any of these “truths” about human equality “self-evident.” The very notion that a functioning society could ever be founded on the principle of equality among people was no more than a century old. Over the course of that century, philosophers such as John Locke had expended thousands of pages in justifying what Thomas Jefferson was now dismissing as a given not worthy of discussion. With all due caveats to the scourge of slavery and the oppression of women and all the other imperfections of the young United States to come, the example that a society could indeed be built around equal toleration and respect for everyone was one of the most inspiring the world has ever known — and one that had very little to do with strict rationality.

Even today, there is absolutely no scientific basis to a claim that all people are equal. Science clearly tells us just the opposite: that some people are smarter, stronger, and healthier than other people. Still, the modern progressive ideal, allegedly so rational, continues to take as one of its most important principles Jefferson’s leap of faith. Nor does Jefferson’s extra-rational idealism stand alone. Consider that one version of the narrative of progress, the one bound up with Georg Wilhelm Friedrich Hegel’s eschatology of an end to which all of history is leading, tells us that that end will be achieved when all people are allowed to work in their individual thymos-fulfilling roles, as they ought to be. But “ought to,” of course, has little relevance in logic or science.

If the progressive impulse cannot be ascribed to pure rationality, we have to ask ourselves where Jefferson’s noble hand-wave came from. In a move that will surprise none of you who’ve read this far, I’d like to propose that the seeds of progressivism lie in the earliest days of the upstart religion of Christianity.

“The past is a foreign country,” wrote L.P. Hartley in The Go-Between. “They do things differently there.” And no more recent past is quite so foreign to us as the time before Jesus Christ was (actually or mythically) born. Bart D. Ehrman characterizes pre-Christian Mediterranean civilization as a culture of “dominance”:

In a culture of dominance, those with power are expected to assert their will over those who are weaker. Rulers are to dominate their subjects, patrons their clients, masters their slaves, men their women. This ideology was not merely a cynical grab for power or a conscious mode of oppression. It was the commonsense, millennia-old view that virtually everyone accepted and shared, including the weak and marginalized.

This ideology affected both social relations and government policy. It made slavery a virtually unquestioned institution promoting the good of society; it made the male head of the household a sovereign despot over all those under him; it made wars of conquest, and the slaughter they entailed, natural and sensible for the well-being of the valued part of the human race (that is, those invested with power).

With such an ideology one would not expect to find governmental welfare programs to assist weaker members of society: the poor, homeless, hungry, or oppressed. One would not expect to find hospitals to assist the sick, injured, or dying. One would not expect to find private institutions of charity designed to help those in need.

There’s a telling scene late in The Iliad which speaks volumes about the ancient system of ethics, and how different it was from our own. Achilles is about to inflict the killing blow on a young Trojan warrior, who begs desperately for his life. Achilles’s reply follows:

“Come, friend, you too must die. Why moan about it so?

Even Patroclus died, a far, far better man than you.

And look, you see how handsome and powerful I am?

The son of a great man, the mother who gave me life

a deathless goddess. But even for me, I tell you,

death and the strong force of fate are waiting.

There will come a dawn or sunset or high noon

when a man will take my life in battle too —

flinging a spear perhaps

or whipping a deadly arrow off his bow.”

Life and death in Homer are matters of fate, not of morality. Mercy is neither given nor expected by his heroes.

Of course, the ancients had gods — plenty of them, in fact. A belief in a spiritual realm of the supernatural is far, far older than human civilization, dating back to the primitive animism of the earliest hunter-gatherers. By the time Homer first chanted the passage above, the pantheon of Greek gods familiar to every schoolchild today had been around for many centuries. Yet these gods, unsurprisingly, reflected the culture of dominance, so unspeakably brutal to our sensibilities, that we see in The Iliad, a poem explicitly chanted in homage to them.

The way these gods were worshiped was different enough from what we think of as religion today to raise the question of whether the word even applies to ancient sensibilities. Many ancient cultures seem to have had no concept or expectation of an afterlife (thus rather putting the lie to one argument frequently trotted out by atheists, that the entirety of the God Impulse can be explained by the very natural human dread of death). The ancient Romans carved the phrase “non fui; fui; non sum; non curo” on gravestones, which translates to “I was not; I was; I am not; I care not.” It’s a long way from “at rest with God.”

Another, even more important difference was the non-exclusivity of the ancient gods. Ancient “religion” was not so much a creed or even a collection of creeds as it was a buffet of gods from which one could mix and match as one would. When one civilization encountered another, it was common for each to assimilate the gods of the other, creating a sort of divine mash-up. Sumerian gods blended with the Babylonian, who blended with the Greek, who were given Latin names and assimilated by the Romans… there was truly a god for every taste and for every need. If you were unlucky in love, you might want to curry favor with Aphrodite; if the crops needed rain, perhaps you should sacrifice to Demeter; etc., etc. The notion of converting to a religion, much less that of being “born again” or the like, would have been greeted by the ancients with complete befuddlement.

There were just three exceptions to the rule of non-exclusivity, all of them also rare pre-Christian examples of monotheism. The Egyptian pharaoh Akhenaten decreed around 1350 BC that his kingdom’s traditional pantheon of gods be replaced with the single sun god Aten. But his new religion was accepted only resentfully, with the old gods continuing to be worshiped in secret, and was gradually done away with after his death. Then there was Zoroastrianism, which sprang up in Iran in the sixth century BC and bears some eyebrow-raising similarities to the later religion of Christianity. It still has active adherents today. And then of course there were the Jews, whose single God would brook no rivals in His people’s hearts and minds. But the heyday of an independent kingdom of Judah was brief indeed, and in the centuries that followed the Jews were regarded as a minor band of oddball outcasts, a football to be kicked back and forth by their more powerful neighbors.

And then into this milieu came Jesus Christ, only to be promptly, as Douglas Adams once put it, “nailed to a tree for saying how great it would be to be nice to people for a change.” It’s very difficult to adequately convey just how revolutionary Christianity, a religion based on love and compassion rather than dominance, really was. I defer one more time to Bart D. Ehrman:

Leaders of the Christian church preached and urged an ethic of love and service. One person was not more important than another. All were on the same footing before God: the master was no more significant than the slave, the husband than the wife, the powerful than the weak, or the robust than the diseased.

The very idea that society should serve the poor, the sick, and the marginalized became a distinctively Christian concern. Without the conquest of Christianity, we may well never have had institutionalized welfare for the poor or organized healthcare for the sick. Billions of people may never have embraced the idea that society should serve the marginalized or be concerned with the well-being of the needy, values that most of us in the West have simply assumed are “human” values.

Christianity carried within it as well a notion of freedom of choice that would be critical to the development of liberal democracy. Unlike the other belief systems of the ancient world, which painted people as hapless playthings of their gods, Christianity demanded that you choose whether to follow Christ’s teachings and thus be saved; you held the fate of your own soul in your own hands. If ordinary people have agency over their souls, why not agency over their governments?

But that was the distant future. For the people of the ancient world, Christianity’s tenet that they — regardless of who they were — were worthy of receiving the same love and compassion they were urged to bestow upon others had an immense, obvious appeal. Hegel, a philosopher of ambiguous personal spiritual beliefs who saw religions as intellectual memes arising out of the practical needs of the people who created them, would later describe Christianity as the perfect slave religion, providing the slaves who made up the bulk of its adherents during the early years with the tool of their own eventual liberation.

And so, over the course of almost 300 years, Christianity gradually bubbled up from the most wretched and scorned members of society to finally reach the Roman Emperor Constantine, the most powerful man in the world, in his luxurious palace. The raw numbers accompanying its growth are themselves amazing. At the time of Jesus Christ’s death, the entirety of the Christian religion consisted of his 20 or so immediate disciples. By the time Constantine converted in AD 312, there were about 3 million Christians in the world, despite persecution by the same monarch’s predecessors. In the wake of Constantine’s official sanction, Christianity grew to as many as 35 million adherents by AD 400. And today roughly one-third of the world’s population — almost 2.5 billion people — call themselves Christians of one kind or another. For meme theorists, Christianity provides perhaps the ultimate example of an idea that was so immensely appealing on its own merits that it became literally unstoppable. And for political historians, its takeover of the Roman Empire provides perhaps the first example in history of a class revolution, a demonstration of the power of the masses to shake the palaces of the elites.

Which is not to say that everything about the Christian epoch would prove better than what had come before it. “There is no need of force and injury because religion cannot be forced,” wrote the Christian scholar Lactantius hopefully around AD 300. “It is a matter that must be managed by words rather than blows, so that it may be voluntary.” Plenty would conspicuously fail to take his words to heart, beginning just 35 years later with the appropriately named Firmicus, an advisor to the newly Christianized government of Rome, who told his liege that “your severity should be visited in every way on the crime of idolatry.” The annals of the history that followed are bursting at the seams with petty tyrants who have adopted the iconography of Christianity whilst missing the real point entirely, from medieval warlords using the cross on their shields to justify their bloodlust to modern-day politicians of the Moral Majority railing against “those sorts of people.” This aspect of Christianity’s history cannot and should not be ignored.

That said, I don’t want to belabor Christianity’s long history as a force for both good and ill much further today. I’ll just note that the Protestant Reformation of the sixteenth and seventeenth centuries, which led to one of the bloodiest wars in European history prior to World War I, also brought with it a new vitality to the religion, doing much to spark that extraordinary acceleration in the narrative of progress which began in the eighteenth century. I remember discussing the narrative of progress with a conservative Catholic acquaintance of mine who’s skeptical of the whole notion’s spiritual utility. “That’s a very Protestant idea,” he said about it, a little dismissively. “Yeah,” I had to agree after some thought. “I guess you’re right.” Protestantism is still linked in the popular imagination with practical progress; the phrase “Protestant work ethic” still crops up again and again, and studies continue to show that large-scale conversions to Protestantism are usually accompanied — for whatever reason — by increases in a society’s productivity and a decline in criminality.

One could even argue that it was really the combination of the ethos of love, compassion, equality, and personal agency that had been lurking within Christianity from the beginning with this new Protestant spirit of practical, worldly achievement in the old ethos’s service that led to the Declaration of Independence and the United States of America, that “shining city on a hill” inspiring the rest of the world. (The parallels between this worldly symbol of hope and the Christian Heaven are, I trust, so obvious as to not be worth going into here.) It took the world almost 2000 years to make the retrospectively obvious leap from the idea that all people are equal before God to the notion that all people are equal, period. Indeed, in many ways we still haven’t quite gotten there, even in our most “civilized” countries. Nevertheless, it’s hard to imagine the second leap being made absent the first; the seeds of the Declaration of Independence were planted in the New Testament of the Christian Bible.

Of course, counterfactuals will always have their appeal. If, as many a secular humanist has argued over the years, equality and mutual respect really are just a rationally better way to order a society, it’s certainly possible we would have gotten as far as we have today by some other route — possibly even have gotten farther, if we had been spared some of the less useful baggage which comes attached to Christianity. In the end, however, we have only one version of history which we can truly judge: the one that actually took place. So, credit where it’s due.

Said credit hasn’t always been forthcoming. In light of the less inspiring aspects of Christianity’s history, there’s a marked tendency in some circles to condemn its faults without acknowledging its historical virtues, often accompanied by a romanticizing of the pre-Christian era. By the time Constantine converted to Christianity in AD 312, thus transforming it at a stroke from an upstart populist movement to the status it still holds today as the dominant religion of the Western world, the Roman Empire was getting long in the tooth indeed, and the thousand years of regress and stagnation that would come to be called the Dark Ages were looming. Given the timing, it’s all too easy for historians of certain schools to blame Christianity for what followed. Meanwhile many a libertine professor of art or literature has talked of the ancients’ comfort with matters of the body and sexuality, contrasting it favorably with the longstanding Christian discomfort with same.

But our foremost eulogizer of the ancient ways remains that foremost critic of the narrative of progress in general, Friedrich Nietzsche. His homage to the superiority of might-makes-right over Christian compassion carries with it an unpleasant whiff of the Nazi ideology that would burst into prominence thirty years after his death:

The sick are the greatest danger for the well. The weaker, not the stronger, are the strong’s undoing. It is not fear of our fellow man which we should wish to see diminished; for fear rouses those who are strong to become terrible in turn themselves, and preserves the hard-earned and successful type of humanity. What is to be dreaded by us more than any other doom is not fear but rather the great disgust, not fear but rather the great pity — disgust and pity for our human fellows.

The morbid are our greatest peril — not the “bad” men, not the predatory beings. Those born wrong, the miscarried, the broken — they it is, the weakest who are undermining the vitality of the race, poisoning our trust in life, and putting humanity in question. Every look of them is a sigh — “Would I were something other! I am sick and tired of what I am.” In this swamp soil of self-contempt, every poisonous weed flourishes, and all so small, so secret, so dishonest, and so sweetly rotten. Here swarm the worms of sensitiveness and resentment, here the air smells odious with secrecy, with what is not to be acknowledged; here is woven endlessly the net of the meanest conspiracies, the conspiracy of those who suffer against those who succeed and are victorious; here the very aspect of the victorious is hated — as if health, success, strength, pride, and the sense of power were in themselves things vicious, for which one ought eventually to make bitter expiation. Oh, how these people would themselves like to inflict expiation, how they thirst to be the hangmen! And all the while their duplicity never confesses their hatred to be hatred.

To be sure, there were good things about the ancient ways. When spiritual beliefs are a buffet, there’s little point in fighting holy wars; while the ancients fought frequently and violently over many things large and small, they generally didn’t go to war over their gods. Even governmental suppression of religious faith, which forms such an important part of the early legends of Christianity, was apparently suffered by few other groups of believers. The best-documented instance of such suppression occurred in 186 BC, and targeted worshipers of Bacchus, the famously rowdy god of wine. These drunkards got in such a habit of going on raping-and-pillaging sprees through the countryside that the Roman Senate, down to its last nerve with the bro-dudes of the classical world, rounded up 7000 of them for execution and pulled down their temples all over Roman territory. Still, it’s hard to believe that very many of our post-Christ romanticizers of the ancient ways would really choose to go back there if push came to shove — least of all among them Nietzsche, a sickly, physically weak man who suffered several major mental breakdowns over the course of his life. He wouldn’t have lasted a day among his beloved Bronze Age Greeks; ironically, it was only the fruits of the progress he so decried that allowed him to fulfill his own form of thymos.

At any rate, I hope I’ve made a reasonable case for Christianity as a set of ideas that have done the world much good, perhaps even enough to outweigh the atrocities committed in the religion’s name. At this juncture, I do want to emphasize again that one’s opinion of Christian values need not have any connection with one’s belief in the veracity of the Christian God. For my part, I try my deeply imperfect best to live by those core values of love, compassion, and equality, but I have absolutely no sense of an anthropomorphic God looking down from somewhere above, much less a desire to pray to Him.

It even strikes me as reasonable to argue that the God part of Christianity has outlived His essentialness; one might say that the political philosophy of secular humanism is little more than Christianity where faith in God is replaced with faith in human rationality. Certainly the world today is more secular than it’s ever been, even as it’s also more peaceful and prosperous than it’s ever been. A substantial portion of those 2.5 billion nominal Christians give lip service but little else to the religion; I think about the people all across Europe who still let a small part of their taxes go to their country’s official church out of some vague sense of patriotic obligation, despite never actually darkening any physical church’s doors.

Our modern world’s peace and prosperity would seem to be a powerful argument for secularism. Yet a question is still frequently raised: does a society lose something important when it loses the God part of Christianity — or for that matter the God part of any other religion — even if it retains most of the core values? Some, such as our atheist friend Richard Dawkins, treat the very notion of religiosity as social capital with contempt, another version of the same old bread-and-circuses coddling of the masses, keeping them peaceful and malleable by telling them that another, better life lies in wait after they die, thus causing them to forgo opportunities for bettering their lots in this life. But, as happens with disconcerting regularity, Dawkins’s argument here is an oversimplification. As we’ve seen already, a belief in an afterlife isn’t a necessary component of spiritual belief (although, as the example of Christianity proves, it certainly can’t hurt a religion’s popularity). It’s more interesting to address the question not through the micro lens of what is good for an individual or even a collection of individuals in society, but rather through the macro lens of what is good for society as an entity unto itself.

And it turns out that there are plenty of people, many of them not believers themselves, who express concern over what else a country loses as it loses its religion. The most immediately obvious of the problematic outcomes is a declining birth rate. The well-known pension crisis in Europe, caused by the failure of populations there to replace themselves, correlates with the fact that Europe is among the most secular regions in the world. More abstractly but perhaps even more importantly, the decline in organized religion in Europe and in North America has contributed strongly to a loss of communal commons. There was a time not that long ago when the local church was the center of a community’s social life, not just a place of worship but one of marriages, funerals, potlucks, swap meets, dances, celebrations, and fairs, a place where people from all walks of life came together to flirt, to socialize, to hash out policy, to deal with crises, and to help those less fortunate. Our communities have grown more diffuse with the decline of religion, on both a local and a national scale.

Concern about the loss of religion as a binding social force, balanced against a competing and equally valid concern for the plight of those who choose not to participate in the majority religion, has provoked much commentary in recent decades. We live more and more isolated lives, goes the argument, cut off from our peers, existing in a bubble of multimedia fantasy and empty consumerism, working only to satisfy ourselves. Already in 1995, before the full effect of the World Wide Web and other new communications technologies had been felt, the political scientist Robert D. Putnam created a stir in the United States with his article “Bowling Alone: America’s Declining Social Capital,” which postulated that civic participation of the sort that had often been facilitated by churches was on a worrisome decline. For many critics of progress, the alleged isolating effect of technology has only deepened these worries in the years since. In Denmark, the country where I live now — and a country which is among the most secular even in secular Europe — newly arrived immigrants have sometimes commented to me about the isolating effect of even the comprehensive government-administered secular safety net: how elderly people who would once have been taken care of by their families get shunted off to publicly funded nursing homes instead, how children can cut ties with their families as soon as they reach university age thanks to a generous program of student stipends.

The state of Christianity in many countries today, as more of a default or vestigial religion than a vital driving faith, is often contrasted unfavorably with that of Islam, its monotheistic younger brother, which still trails it somewhat in absolute numbers of believers but seems to attract far more passion and devotion from those it has. Mixed with reluctant admiration of Islam’s vitality is fear of the intolerance it supposedly breeds and the acts of terrorism carried out in its name. I’ve had nothing to say about Islam thus far — in my defense, neither does the game of Civilization — and the end of this long article isn’t the best place to start analyzing it. I will note, however, that the history of Islam, like that of Christianity, has encompassed both inspirational achievements and horrible atrocities. Rather than Islam itself being incompatible with liberal democracy, it seems to be something about conditions in the notorious cauldron of conflict that is the Middle East — perhaps the distortions produced by immense wealth sitting there just underground in the form of oil and the persistent Western meddling that oil has attracted — that has repeatedly stunted the region’s political and economic development. Majority Muslim nations in other parts of the world, such as Indonesia and Senegal, do manage to exist as reasonably free and stable democracies. Ultimately, the wave of radical Islamic terrorism that has provoked such worldwide panic since September 11, 2001, may have at least as much to do with disenfranchisement and hopelessness as it does with religion. If and when the lives of the young Muslim men who are currently most likely to become terrorists improve, their zeal to be religious martyrs will likely fade — as quite likely will, for better or for worse, the zeal of many of them for their religion in general. After all, we’ve already seen this movie play out with Christianity in the starring role.

As for Christianity, the jury is still out on the effects of its decline in a world which has to a large extent embraced its values but may not feel as much of a need for its God and for its trappings of worship. One highly optimistic techno-progressivist view — one to which I’m admittedly very sympathetic — holds that the ties that bind us together haven’t really been weakened so very much at all, that the tyranny of geography over our circles of empathy is merely being replaced, thanks to new technologies of communication and travel, by true communities of interest, where physical location need be of only limited relevance. Even the demographic crisis provoked by declining birth rates might be solved by future technologies which produce more wealth for everyone with less manpower. And the fact remains that, taken in the abstract, fewer people on this fragile planet of ours is really a very good thing. We shall see.

I realize I’ve had little to say directly about the game of Civilization in this article, but I’m not quite willing to apologize for that. As I stated at the outset, the game’s handling of religion isn’t terribly deep; there just isn’t a lot of “there” there when it comes to religion and Civilization. Yet religion has been so profoundly important to the development of real-world civilization that this series of articles would have felt incomplete if I didn’t try to remedy the game’s lack by addressing the topic in some depth. And in another way, of course, the game of Civilization would never have existed without the religion of Christianity in particular, simply because so much of the animating force of the narrative of progress, which in turn is the animating force of Civilization, is rooted in Christian values. In that sense, then, this article has been all about the game of Civilization — as it has been all about the values underpinning so much of the global order we live in today.

(Sources: the books Civilization, or Rome on 640K A Day by Johnny L. Wilson and Alan Emrich, The Story of Civilization Volume I: Our Oriental Heritage by Will Durant, The Better Angels of Our Nature: Why Violence Has Declined by Steven Pinker, The End of History and the Last Man by Francis Fukuyama, The Iliad by Homer, Lectures on the Philosophy of History by Georg Wilhelm Friedrich Hegel, The Varieties of Religious Experience by William James, The Genealogy of Morals by Friedrich Nietzsche, The Communist Manifesto by Karl Marx and Friedrich Engels, The Human Use of Human Beings by Norbert Wiener, The Hitchhiker’s Guide to the Galaxy by Douglas Adams, The Triumph of Christianity: How a Forbidden Religion Swept the World by Bart D. Ehrman, The Past is a Foreign Country by David Lowenthal, Bowling Alone: America’s Declining Social Capital by Robert D. Putnam, A Christmas Carol by Charles Dickens, and The God Delusion by Richard Dawkins.)

Footnotes

1. There were just three exceptions to the rule of non-exclusivity, all of them also rare pre-Christian examples of monotheism. The Egyptian pharaoh Akhenaten decreed around 1350 BC that his kingdom’s traditional pantheon of gods be replaced with the single sun god Aten. But his new religion was accepted only resentfully, with the old gods continuing to be worshiped in secret, and was gradually done away with after his death. Then there was Zoroastrianism, which sprang up in Iran in the sixth century BC and bears some eyebrow-raising similarities to the later religion of Christianity. It still has active adherents today. And then of course there were the Jews, whose single God would brook no rivals in His people’s hearts and minds. But the heyday of an independent kingdom of Judah was brief indeed, and in the centuries that followed the Jews were regarded as a minor band of oddball outcasts, a football to be kicked back and forth by their more powerful neighbors.

2. The best-documented instance of same occurred in 186 BC, and targeted worshipers of Bacchus, the famously rowdy god of wine. These drunkards got into such a habit of going on raping-and-pillaging sprees through the countryside that the Roman Senate, down to its last nerve with the bro-dudes of the classical world, rounded up 7,000 of them for execution and pulled down their temples all over Roman territory.