UCLA will become the first station in a nationwide computer network which, for the first time, will link together computers of different makes and using different machine languages into one time-sharing system. Creation of the network represents a major step in computer technology and may serve as the forerunner of large computer networks of the future. The ambitious project is supported by the Defense Department’s Advanced Research Projects Agency (ARPA), which has pioneered many advances in computer research, technology, and applications during the past decade.

The system will, in effect, pool the computer power, programs, and specialized know-how of about fifteen computer-research centers, stretching from UCLA to MIT. Other California network stations (or nodes) will be located at the Rand Corporation and System Development Corporation, both of Santa Monica; the Santa Barbara and Berkeley campuses of the University of California; Stanford University and the Stanford Research Institute.

The first stage of the network will go into operation this fall as a sub-net joining UCLA, Stanford Research Institute, UC Santa Barbara, and the University of Utah. The entire network is expected to be operational in late 1970.

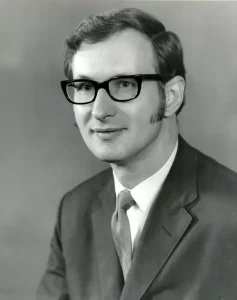

Engineering professor Leonard Kleinrock, who heads the UCLA project, describes how the network might handle a sample problem:

Programmers at Computer A have a blurred photo which they want to bring into focus. Their program transmits the photo to Computer B, which specializes in computer graphics, and instructs Computer B’s program to remove the blur and enhance the contrast. If B requires specialized computational assistance, it may call on Computer C for help. The processed work is shuttled back and forth until B is satisfied with the photo, and then sends it back to Computer A. The messages, ranging across the country, can flash between computers in a matter of seconds, Dr. Kleinrock says.

Each computer in the network will be equipped with its own interface message processor (IMP), which will double as a sort of translator among the Babel of computer languages and as a message handler and router.

Computer networks are not an entirely new concept, notes Dr. Kleinrock. The SAGE radar defense system of the fifties was one of the first, followed by the airlines’ SABRE reservation system. However, [both] are highly specialized and single-purpose systems, in contrast to the planned ARPA system which will link a wide assortment of different computers for a wide range of unclassified research functions.

“As of now, computer networks are still in their infancy,” says Dr. Kleinrock. “But as they grow up and become more sophisticated, we will probably see the spread of ‘computer utilities,’ which, like present electric and telephone utilities, will serve individual homes and offices across the country.”

— UCLA press release dated July 3, 1969 (which may include the first published use of the term “router”)

In July of 1968, Larry Roberts sent out a request for bids to build the ARPANET’s interface message processors — the world’s very first computer routers. More than a dozen proposals were received in response, some of them from industry heavy hitters like DEC and Raytheon. But when Roberts and Bob Taylor announced their final decision at the end of the year, everyone was surprised to learn that they had given the contract to the comparatively tiny firm of Bolt Beranek and Newman.

BBN, as the company was more typically called, came up in our previous article as well; J.C.R. Licklider was working there at the time he wrote his landmark paper on “human-computer symbiosis.” Formed in 1948 as an acoustics laboratory, BBN moved into computers in a big way during the 1950s, developing in the process a symbiotic relationship of its own with MIT. Faculty and students circulated freely between the university and BBN, which became a hacker refuge, tolerant of all manner of eccentricity and uninterested in such niceties as dress codes and stipulated working hours. A fair percentage of BBN’s staff came to consist of MIT dropouts, young men who had become too transfixed by their computer hacking to keep up with the rest of their coursework.

BBN’s forte was one-off, experimental contracts, not the sort of thing that led directly to salable commercial products but that might eventually do so ten or twenty years in the future. In this sense, the ARPANET was right up their alley. They won the bid by submitting a more thoughtful, detailed proposal than anyone else, even going so far as to rewrite some of ARPA’s own specifications to make the IMPs operate more efficiently.

Like all of the other bidders, BBN didn’t propose to build the IMPs from scratch, but rather to adapt an existing computer for the purpose. Their choice was the Honeywell 516, one of a new generation of robust integrated-circuit-based “minicomputers,” which distinguished themselves by being no larger than the typical refrigerator and being able to run on ordinary household current. Since the ARPANET would presumably need a lot of IMPs if it proved successful, the relatively cheap and commonplace Honeywell model seemed a wise choice.

Still, the plan was to start as small as possible. The first version of the ARPANET to go online would include just four IMPs, linking four research clusters together. Surprisingly, MIT was not to be one of them; it was left out because the other inaugural sites were out West and ARPA didn’t want to pay AT&T for a transcontinental line right off the bat. Instead the Universities of California at Los Angeles and Santa Barbara each got the honor of being among the first to join the ARPANET, as did the University of Utah and the Stanford Research Institute (SRI), an adjunct to Stanford University. ARPA wanted BBN to ship the first turnkey IMP to UCLA by September of 1969, and for all four of the inaugural nodes to be up and running by the end of the year. Meeting those deadlines wouldn’t be easy.

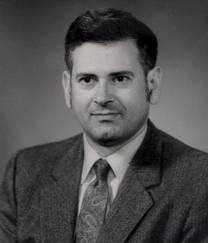

The project leader at BBN was Frank Heart, a man known for his wide streak of technological paranoia — he had a knack for zeroing in on all of the things that could go wrong with any given plan — and for being “the only person I knew who spoke in italics,” as his erstwhile BBN colleague Severo Ornstein puts it. (“Not that he was inflexible or unpleasant — just definite.”) Ornstein himself, having moved up in the world of computing since his days as a hapless entry-level “Crosstelling” specialist on the SAGE project, worked under Heart as the principal hardware architect, while an intense young hacker named Will Crowther, who loved caving and rock climbing almost as much as computers, supervised the coding. At the start, they all considered the Honeywell 516 a well-proven machine, given that it had been on the market for a few years already. They soon learned to their chagrin, however, that no one had ever pushed it as hard as they were now doing; obscure flaws in the hardware nearly derailed the project on more than one occasion. But they got it done in the end. The first IMP was shipped across the country to UCLA right on schedule.

The team from Bolt Beranek and Newman who created the world’s first routers. Severo Ornstein stands at the extreme right, Will Crowther just next to him. Frank Heart is near the center, the only man wearing a necktie.

On July 20, 1969, American astronaut Neil Armstrong stepped onto the surface of the Moon, marking one culmination of that which had begun with the launch of the Soviet Union’s first Sputnik satellite twelve years earlier. Five and a half weeks after the Moon landing, another, much quieter result of Sputnik became a reality. The first public demonstration of a functioning network router was oddly similar to some of the first demonstrations of Samuel Morse’s telegraph, in that it was an exercise in sending a message around a loop that led it right back to the place where it had first come from. A Scientific Data Systems Sigma 7 computer at UCLA sent a data packet to the IMP that had just been delivered, which was sitting right beside it. Then the IMP duly read the packet’s intended destination and sent it back where it had come from, to appear as text on a monitor screen.

There was literally nowhere else to send it, for only one IMP had been built to date and only this one computer was yet possessed of the ability to talk to it. The work of preparing the latter had been done by a team of UCLA graduate students working under Leonard Kleinrock, the man whose 1964 book had popularized the idea of packet switching. “It didn’t look like anything,” remembers Steve Crocker, a member of Kleinrock’s team. But looks can be deceiving; unlike the crowd of clueless politicians who had once watched Morse send a telegraph message in a ten-mile loop around the halls of the United States Congress, everyone here understood the implications of what they were witnessing. The IMPs worked.

Bob Taylor, the man who had pushed and pushed until he found a way to make the ARPANET happen, chose to make this moment of triumph his ironic exit cue. A staunch opponent of the Vietnam War, he had been suffering pangs of conscience over his role as a cog in the military-industrial complex for a long time, even as he continued to believe in the ARPANET’s future value for the civilian world. After Richard Nixon was elected president in November of 1968, he had decided that he would stay on just long enough to get the IMPs finished, by which point the ARPANET as a whole would hopefully be past the stage where cancellation was a realistic possibility. He stuck to that decision; he resigned just days after the first test of an IMP. His replacement was Larry Roberts — another irony, given that Taylor had been forced practically to blackmail Roberts into joining ARPA in the first place. Taylor himself would land at Xerox’s new Palo Alto Research Center, where over the course of the new decade he would help to invent much else that has become an everyday part of our digital lives.

About a month after the test of the first IMP, BBN shipped a second one, this time to the Stanford Research Institute. It was connected to its twin at UCLA by an AT&T long-distance line. Another, local cable was run from it to SRI’s Scientific Data Systems 940 computer, which was normally completely incompatible with UCLA’s Sigma machine despite coming from the same manufacturer. In this case, however, programmers at the two institutions had hacked together a method of echoing text back and forth between their computers — assuming it worked, that is; they had had no way of actually finding out.

On October 29, 1969, a UCLA student named Charley Kline, sitting behind his Sigma 7 terminal, called up SRI on an ordinary telephone to initiate the first real test of the ARPANET. Computer rooms in those days were noisy places, what with all of the ventilation the big beasts required, so the two human interlocutors had to fairly shout into their respective telephones. “I’m going to type an L,” Kline yelled, and did so. “Did you get the L?” His opposite number acknowledged that he had. Kline typed an O. “Did you get the O?” Yes. He typed a G.

“The computer just crashed,” said the man at SRI.

“History now records how clever we were to send such a prophetic first message, namely ‘LO,’” says Leonard Kleinrock today with a laugh. They had been trying to manage “LOGIN,” which itself wouldn’t have been a challenger to Samuel Morse’s “What hath God wrought?” in the eloquence sweepstakes. But then, these were different times.

At any rate, the bug which had caused the crash was fixed before the day was out, and regular communications began. UC Santa Barbara came online in November, followed by the University of Utah in December. Satisfied with this proof of concept, ARPA agreed to embark on the next stage of the project, extending the network to the East Coast. In March of 1970, the ARPANET reached BBN itself. Needless to say, this achievement — computer networking’s equivalent to telephony’s spanning of the continent back in 1915 — went entirely unnoticed by an oblivious public. BBN was followed before the year was out by MIT, Rand, System Development Corporation, and Harvard University.

It would make for a more exciting tale to say that the ARPANET revolutionized computing immediately, but such was not the case. In its first couple of years, the network was neither a raging success nor an abject failure. On the one hand, its technical underpinnings advanced at a healthy clip; BBN steadily refined their IMPs, moving them away from modified general-purpose computers and toward the specialized routers we know today. Likewise, the network they served continued to grow; by the end of 1971, the ARPANET had fifteen nodes. But despite it all, it remained frustratingly underused; a BBN survey conducted about two years in revealed that the ARPANET was running at just 2 percent of its theoretical capacity.

The problem was one of computer communication at a higher level than that of the IMPs. Claude Shannon had told the world that information was information in a networking context, and the minds behind the ARPANET had taken his tautology to heart. They had designed a system for shuttling arbitrary blocks of data about, without concerning themselves overmuch about the actual purpose of said data. But the ability to move raw data from computer to computer availed one little if one didn’t know how to create meaning out of all those bits. “It was like picking up the phone and calling France,” Frank Heart of BBN would later say. “Even if you get the connection to work, if you don’t speak French you’ve got a little problem.”

What was needed were higher-level protocols that could run on top of the ARPANET’s packet switching — a set of agreed-upon “languages” for all of these disparate computers to use when talking with one another in order to accomplish something actually useful. Seeing that no one else was doing so, BBN and MIT finally deigned to provide them. First came Telnet, a protocol to let one log into a remote computer and interact with it at a textual command line just as if one was sitting right next to it at a local terminal. And then came the File Transfer Protocol, or FTP, which allowed one to move files back and forth between two computers, optionally performing useful transformations on them in the process, such as going from EBCDIC to ASCII text encoding or vice versa. It is a testament to how well the hackers behind these protocols did their jobs that both have remained with us to this day. Still, the application that really made the ARPANET come alive — the one that turned it almost overnight from a technological experiment to an indispensable tool for working and even socializing — was the next one to come along.

Jack Ruina was by now long gone as the head of all of ARPA; that role was filled by a respected physicist named Steve Lukasik. Lukasik would later remember how Larry Roberts came into his office one day in April of 1972 to try to convince him to use the ARPANET personally. “What am I going to do on the ARPANET?” the non-technical Lukasik asked skeptically.

“Well,” mused Roberts, “you could do email.”

Email wasn’t really a new idea at the time. By the mid-1960s, the largest computer at MIT had hundreds of users, who logged in as many as 30 at a time via local terminals. An undergraduate named Tom Van Vleck noticed that some users had gotten into the habit of passing messages to one another by writing them up in text files with names such as “TO TOM,” then dropping them into a shared directory. In 1965, he created what was probably the world’s first true email system in order to provide them with a more elegant solution. Just like all of the email systems that would follow it, it gave each user a virtual mailbox to which any other user could direct a virtual letter, then see it delivered instantly. Replying, forwarding, address books, carbon copies (all of the niceties we’ve come to expect) followed in fairly short order, at MIT and in many other institutions. Early in 1972, a BBN programmer named Ray Tomlinson took what struck him as the logical next step, by creating a system for sending email between otherwise separate computers, or “hosts,” as they were known in the emerging parlance of the ARPANET.

Thanks to FTP, Tomlinson already had a way of doing the grunt work of moving the individual letters from computer to computer. His biggest dilemma was a question of addressing. It was reasonable for the administrators of any single host to demand that every user have a unique login ID, which could also function as her email address. But it would be impractical to insist on unique IDs across the entire ARPANET. And even if it was possible, how was the computer on which an electronic missive had been composed to know which other computer was home to the intended recipient? Trying to maintain a shared central database of every login for every computer on the ARPANET didn’t strike Tomlinson as much of a solution.

His alternative approach, which he would later describe as no more than “obvious,” would go on to become an icon of the digital age. Each email address would consist of a local user name followed by an “at” sign (@) and the name of the host on which it lived. Just as a paper letter moves from an address in a town, then to a larger postal hub, then onward to a hub in another region, and finally to another individual street address, email would use its suffix to find the correct host on the ARPANET. Once it arrived there, said host could drill down further and route it to the correct user. “Now, there’s a nice hack,” said one of Tomlinson’s colleagues; that was about as effusive as a compliment could get in hacker circles.
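The two-stage lookup described above can be illustrated with a minimal Python sketch. It is not Tomlinson's code; the host names, the `Host` class, and the `send` function are all hypothetical, invented here only to show how the part after the @ selects a host while the part before it is resolved locally.

```python
def split_address(address):
    """Split 'user@host' into (user, host); the last '@' is the separator."""
    user, sep, host = address.rpartition("@")
    if not sep or not user or not host:
        raise ValueError(f"not a user@host address: {address!r}")
    return user, host


class Host:
    """A hypothetical ARPANET host that only knows its own users."""

    def __init__(self, name):
        self.name = name
        self.mailboxes = {}  # local user name -> list of delivered messages

    def add_user(self, user):
        self.mailboxes[user] = []

    def deliver_local(self, user, message):
        # Second stage: the host "drills down" to one of its own users.
        if user not in self.mailboxes:
            raise KeyError(f"no user {user!r} on host {self.name!r}")
        self.mailboxes[user].append(message)


def send(network, address, message):
    # First stage: the suffix alone is enough to find the right host.
    user, host_name = split_address(address)
    network[host_name].deliver_local(user, message)


# Two made-up hosts that both have a user called "tom".
network = {"mit-host": Host("mit-host"), "bbn-host": Host("bbn-host")}
network["mit-host"].add_user("tom")
network["bbn-host"].add_user("tom")

send(network, "tom@bbn-host", "hello from MIT")
```

Because the host name disambiguates, both machines can keep a user named `tom` without any central registry of logins, which is exactly the problem the @ convention sidesteps.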

Steve Lukasik reluctantly allowed Larry Roberts to install an ARPANET terminal in his office for the purpose of reading and writing email. Within days, the skeptic became an evangelist. He couldn’t believe how useful email actually was. He sent out a directive to anyone who was anyone at ARPA, whether their work involved computers or not: all were ordered to accept a terminal in their office. “The way to communicate with me is through electronic mail,” he announced categorically. He soon acquired a “portable” terminal which was the size of a suitcase and weighed 30 pounds, but which came equipped with a modem that would allow him to connect to the ARPANET from any location from which he could finagle access to an ordinary telephone. He became the prototype for millions of professional road warriors to come, dialing into the office constantly from conference rooms, from hotel rooms, from airport lounges. He became perhaps the first person in the world who wasn’t already steeped in computing to make the services the ARPANET could provide an essential part of his day-to-day life.

But he was by no means the last. “Email was the biggest surprise about the ARPANET,” says Leonard Kleinrock. “It was an ad-hoc add-on by BBN, and it just blossomed. And that sucked a lot of people in.” Within a year of Lukasik’s great awakening, three quarters of all the traffic on the ARPANET consisted of emails flying to and fro, and the total volume of traffic on the network had grown by a factor of five and a half.

With a supportive ARPA administrator behind them and applications like email beginning to prove their network’s real-world usefulness, it struck the people who had designed and built the ARPANET that it was time for a proper coming-out party. They settled on the International Conference on Computers and Communications, which was to be held at the Washington, D.C., Hilton hotel in October of 1972. Almost every institution connected to the ARPANET sent representatives toting terminals and demonstration software, while AT&T ran a special high-capacity line into the hotel’s ballroom to get them all online.

More than a thousand people traipsed through the exhibition over the course of two and a half days, taking in several dozen demonstrations of what the ARPANET could do now and might conceivably be able to do in the future. It was the first that some of them had ever heard of the network, or even of the idea of computer networking in general.

One of the demonstrations bore an ironic resemblance to the SAGE system that had first proved that wide-area computer networking could work at all. Leonard Kleinrock:

One of the things that was demonstrated there was a distributed air-traffic-control system. The idea was there would be some air traffic on the system. And as a plane moved out of its region, it would be picked up by another computer in the next region, data would be exchanged, and collectively the computers would be managing airspace over a large region. This package also had the ability to freeze the simulation at any given point, take the program on Machine A, squeeze it over to Machine B, and then continue the simulation with the user being none the wiser. So there were really some sophisticated things going on there.

Severo Ornstein, who was also present, must have been having flashbacks to his early experiences with Crosstelling…

A Stanford researcher named Vint Cerf remembers three principal reactions among the attendees.

The first came from the die-hard circuit-switching people from the telephone industry, who didn’t believe packet switching could possibly work. And they were stunned because it did work. It was almost like the rail industry disbelieving that airplanes could really fly until they saw one in flight. The second group were the people who didn’t know anything about computer communications at all to speak of, and who were sort of overwhelmed by the whole thing. But then there was the third group: the people who were just as excited as little kids because of all these neat things that were going on.

Amidst the demonstrations of email, file transfers, remote logins, even remote software development, the hit of the show was something far more frivolous. Stanford’s AI Lab had brought a program called Parry, a “simulation” — we would call it a chatbot today — of a patient undergoing psychological therapy. They had wired Parry up to talk with another program called Eliza, a computerized psychotherapist created by one Joseph Weizenbaum at MIT back in 1966.

Good evening. Tell me your problems. Please terminate input with a period or a question mark.

People get on my nerves sometimes.

I am not sure I understand you.

You should pay more attention.

Suppose you should pay more attention.

You're entitled to your own opinion.

What makes you think I am entitled to my own opinion?

It bothers me just to be around people in general.

Please go on.

I went to the track at Bay Meadows a while back.

Why do you say that just now?

There's a lot of crooked gambling going on there.

Please go on.

I gamble on horses.

What does that suggest to you?

Do you know anything about bookies?

What makes you think I know anything about bookies?

A bookie didn't pay me off once.

What does that suggest to you?

I beat up the bookie who did not pay off, and I thought he might use his friends in the underworld to get even with me.

What are you really talking about?

Have you ever really thought about the mafia?

Suppose you ever really thought about the mafia.

Let's talk about something else.

And so it went. As Joseph Weizenbaum himself was at pains to point out later in his career, Eliza and Parry were nothing more than clever parlor tricks, a set of rote semantic transformations and randomized catchphrases meant to convey a tissue-thin simulacrum of real understanding, convincing only to the naïve and those actively determined to believe. Their presence here as the shabby best that the strong-AI contingent could offer, surrounded by so many genuinely visionary demonstrations of computing’s humanistic, networked future, ought to have demonstrated to the thoughtful observer how one vision of computing was delivering on its promises while the other manifestly was not. But no matter: the crowd ate it up. It seems there was no shortage of gullible true believers in the Hilton ballroom during those exciting two and a half days.

The International Conference on Computers and Communications provided the ARPANET with some of its first press coverage beyond academic journals. Within computing circles, however, the ARPANET’s existence hadn’t gone unnoticed even by those who, thanks to accidents of proximity, had no opportunity to participate in it. During the early 1970s, would-be ARPANET equivalents popped up in a number of places outside the continental United States. There was ALOHANET, which used radio waves to join the various campuses of the University of Hawaii, which were located on different islands, into one computing neighborhood. There was the National Physical Laboratory (NPL) network in Britain, which served that country’s research community in much the same way that ARPANET served computer scientists in the United States. (The NPL network’s design actually dated back to the mid-1960s, and some of its proposed architecture had influenced the ARPANET, making it arguably more a case of parallel evolution than of learning from example.) Most recently, there was a network known as CYCLADES in development in France.

All of which is to say that computer networking in the big picture was looking more and more like the early days of telephony: a collection of discrete networks that served their own denizens well but had no way of communicating with one another. This wouldn’t do at all; ever since the time when J.C.R. Licklider had been pushing his Intergalactic Computer Network, proponents of wide-area computer networking had had a decidedly internationalist, even utopian streak. As far as they were concerned, the world’s computers — all of the world’s computers, wherever they happened to be physically located — simply had to find a way to talk to one another.

The problem wasn’t one of connectivity in its purest sense. As we saw in earlier articles, telephony had already found ways of going where wires could not easily be strung decades before. And by now, many of telephony’s terrestrial radio and microwave beams had been augmented or replaced by communications satellites (another legacy of Sputnik) that served to bind the planet’s human voices that much closer together. There was no intrinsic reason that computers couldn’t talk to one another over the same links. The real problem was rather that the routers on each of the extant networks used their own protocols for talking among themselves and to the computers they served. The routers of the ARPANET, for example, used something called the Network Control Program, or NCP, which had been codified by a team from UCLA led by Steve Crocker, based upon the early work of BBN hackers like Will Crowther. Other networks used completely different protocols. How were they to make sense of one another? Larry Roberts came to see this as computer networking’s next big challenge.

He happened to have working just under him at ARPA a fellow named Bob Kahn, a bright spark who had already achieved much in computing in his 35 years. Roberts now assigned Kahn the task of trying to make sense of the international technological Tower of Babel that was computer networking writ large. Kahn in turn enlisted Stanford’s Vint Cerf as a collaborator.

The two theorized and argued with one another and with their academic colleagues for about a year, then published their conclusions in the May 1974 issue of IEEE Transactions on Communications, in an article entitled “A Protocol for Packet Network Intercommunication.” It introduced to the world a new word: the “Internet,” shorthand for Kahn and Cerf’s envisioned network of networks. The linchpin of their scheme was a sort of meta-network of linked “gateways,” special routers that handled all traffic going in and out of the individual networks; if the routers on the ARPANET were that network’s interstate highway system, its gateway would become its international airport. A host wishing to send a packet to a computer outside its own network would pass it to its local gateway using its network’s standard protocols, but would include within the packet information about the particular “foreign” computer it was trying to reach. The gateway would then rejigger the packet into a universal standard format and send it over the meta-network to the gateway of the network to which the foreign computer belonged. Then this gateway would rejigger the packet yet again, into a format suitable for passing over the network behind it to reach its ultimate destination.
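A rough Python sketch may make the double "rejiggering" easier to picture. Everything in it is an assumption made for illustration: the `InternetPacket` fields, the two local frame formats, and the `Gateway` class are invented here and are not taken from Kahn and Cerf's paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InternetPacket:
    """Hypothetical universal format exchanged between gateways."""
    dst_net: str
    dst_host: str
    payload: bytes


# Two made-up local frame formats, standing in for incompatible networks.
def net_a_frame(host, payload):
    return {"to": host, "data": payload}      # network A frames are dicts


def net_b_frame(host, payload):
    return (host, len(payload), payload)      # network B frames are tuples


class Gateway:
    def __init__(self, net, make_local_frame):
        self.net = net
        self.make_local_frame = make_local_frame

    def outbound(self, dst_net, dst_host, payload):
        # Rewrap data handed over by a local host into the universal format.
        return InternetPacket(dst_net, dst_host, payload)

    def inbound(self, packet):
        # Rewrap a universal packet into this network's own frame format.
        if packet.dst_net != self.net:
            raise ValueError("packet is not addressed to this network")
        return self.make_local_frame(packet.dst_host, packet.payload)


gateways = {"A": Gateway("A", net_a_frame), "B": Gateway("B", net_b_frame)}


def send_across(src_net, dst_net, dst_host, payload):
    packet = gateways[src_net].outbound(dst_net, dst_host, payload)
    return gateways[dst_net].inbound(packet)


frame = send_across("A", "B", "host-7", b"LOGIN")
```

The point of the design is that neither network needs to understand the other's frames; each gateway translates only between its own local format and the shared one.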

Kahn and Cerf detailed a brand-new protocol to allow the gateways on the meta-network to talk among themselves. They called it the Transmission Control Protocol, or TCP. It gave each computer on the networks served by the gateways the equivalent of a telephone number. These “TCP addresses” — which we now call “IP addresses,” for reasons we’ll get to shortly — originally consisted of three fields, each containing a number between 0 and 255. The first field stipulated the network to which the host belonged; think of it as a telephone number’s country code. The other two fields identified the specific computer on that network. “Network identification allows up to 256 distinct networks,” wrote Kahn and Cerf. “This seems sufficient for the foreseeable future. Similarly, the TCP identifier field permits up to 65,536 distinct [computers] to be addressed, which seems more than sufficient for any given network.” Time would prove these statements to be among their few failures of vision.
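The limits quoted above follow directly from the field sizes: one field of 0 to 255 gives 256 networks, and two such fields together give 256 × 256 = 65,536 computers per network. The short Python sketch below checks that arithmetic; the 8-bit and 16-bit split is inferred from those figures, and the function names are hypothetical.

```python
NETWORK_BITS = 8   # one field of 0-255, assumed from the quoted limit of 256
HOST_BITS = 16     # two fields of 0-255, assumed from the quoted 65,536

MAX_NETWORKS = 2 ** NETWORK_BITS
MAX_HOSTS_PER_NETWORK = 2 ** HOST_BITS


def pack_address(network, host):
    """Combine a network number and a host number into one 24-bit value."""
    if not 0 <= network < MAX_NETWORKS:
        raise ValueError("network number out of range")
    if not 0 <= host < MAX_HOSTS_PER_NETWORK:
        raise ValueError("host number out of range")
    return (network << HOST_BITS) | host


def unpack_address(address):
    """Recover (network, host) from a packed 24-bit address."""
    return address >> HOST_BITS, address & (MAX_HOSTS_PER_NETWORK - 1)


def as_fields(address):
    """Show a packed address as its three fields, each between 0 and 255."""
    return tuple(address.to_bytes(3, "big"))


example = pack_address(10, 258)
```

Here `as_fields(example)` yields `(10, 1, 2)`: network 10, followed by host number 258 split across the remaining two fields.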

It wasn’t especially easy to convince the managers of other networks, who came from different cultures and were all equally convinced that their way of doing things was the best way, to accept the standard being shoved in their faces by the long and condescending arm of the American government. Still, the reality was that TCP was as solid and efficient a protocol as anyone could ask for, and there were huge advantages to be had by linking up with the ARPANET, where more cutting-edge computer research was happening than anywhere else. Late in 1975, the NPL network in Britain, the second largest in the world, officially joined up. After that, the Internet began to take on an unstoppable momentum of its own. In 1981, with the number of individual networks on it barreling with frightening speed toward the limit of 256, a new addressing scheme was hastily adopted, one which added a fourth field to each computer’s telephone number to create the format we are still familiar with today.

Amidst all the enthusiasm for communicating across networks, the distinctions between them were gradually lost. The Internet became just the Internet, and no one much knew or cared whether any given computer was on the ARPANET or the NPL network or somewhere else. The important thing was, it was on the Internet. The individual networks’ internal protocols came slowly to resemble those of the Internet, just because it made everything easier from a technical standpoint. In 1978, in a reflection of these trends, the TCP protocol was split into a matched pair of them called TCP/IP. The part that was called the Transmission Control Protocol was equally useful for pushing packets around a network behind a gateway, while the Internet Protocol was reserved for the methods that gateways used to pass packets across network boundaries. (This is the reason that we now refer to IP addresses rather than TCP addresses.) Beginning on January 1, 1983, all computers on the ARPANET were required to use TCP rather than NCP even when they were only talking among themselves behind their gateway.

Alas, by that point ARPA itself was not what it once had been; the golden age of blue-sky computer research on the American taxpayer’s dime had long since faded into history. One might say that the beginning of the end came as early as the fall of 1969, when a newly fiscally conservative United States Congress, satisfied that the space race had been won and the Soviets left in the country’s technological dust once again, passed an amendment to the next year’s Department of Defense budget which specified that any and all research conducted by agencies like ARPA must have “a direct and apparent relationship” to the actual winning of wars by the American military. Dedicated researchers and administrators found that they could still keep their projects alive afterward by providing such justifications in the form of lengthy, perhaps deliberately obfuscated papers, but it was already a far cry from the earlier days of effectively blank checks. In 1972, as if to drive home a point to the eggheads in its ranks who made a habit of straying too far out of their lanes, the Defense Department officially renamed ARPA to DARPA: the Defense Advanced Research Projects Agency.

Late in 1973, Larry Roberts left ARPA. His replacement the following January was none other than J.C.R. Licklider, who had reluctantly agreed to another tour of duty in the Pentagon only when absolutely no one else proved willing to step up.

But, just as this was no longer quite the same ARPA, it was no longer quite the same Lick. He had continued to be a motivating force for computer networking from behind the scenes at MIT during recent years, but his decades of burning the candle at both ends, of living on fast food and copious quantities of Coca-Cola, were now beginning to take their toll. He suffered from chronic asthma which left him constantly puffing at an inhaler, and his hands had a noticeable tremor that would later reveal itself to be an early symptom of Parkinson’s disease. In short, he was not the man to revive ARPA in an age of falling rather than rising budgets, of ever-increasing scrutiny and internecine warfare as everyone tried to protect their own pet projects, at the expense of those of others if necessary. “When there is scarcity, you don’t have a community,” notes Vint Cerf, who perchance could have made a living as a philosopher if he hadn’t chosen software engineering. “All you have is survival.”

Lick did the best he could, but after Steve Lukasik too left, to be replaced by a tough cookie who grilled anyone who proposed a project about its concrete military value, he felt he could hold on no longer. Lick’s second tenure at ARPA ended in September of 1975. Many computing insiders would come to mark his departure as the moment when a door shut forever on this Defense Department agency’s oddly idealistic past. When it came to new projects at least, DARPA from now on would content itself with being exactly what its name said it ought to be. Luckily, the Internet already existed, and had already taken on a life of its own.

Lick wound up back at MIT, the congenial home to which this prodigal son had been regularly returning since 1950. He took his place there among the younger hackers of the Dynamic Modeling Group, whose human-focused approach to computing caused him to favor them over their rivals at the AI Lab. If Lick wasn’t as fast on his feet as he once had been, he could still floor you on occasion with a cogent comment or the perfect question.

Some of the DMG folks who now surrounded him would go on to form Infocom, an obsession of the early years of this website, a company whose impact on the art of digital storytelling can still be felt to this day.[1] One of them was a computer-science student named Tim Anderson, who met the prophet in their ranks often in the humble surroundings of a terminal room.

He signed up for his two hours like everybody else. You’d come in and find this old guy sitting there with a bottle of Coke and a brownie. And it wasn’t even a good brownie; he’d be eating one of those vending-machine things as if that was a perfectly satisfying lunch. Then I also remember that he had these funny-colored glasses with yellow lenses; he had some theory that they helped him see better.

When you learned what he had done, it was awesome. He was clearly the father of us all. But you’d never know it from talking to him. Instead, there was always a sense that he was playing. I always felt that he liked and respected me, even though he had no reason to: I was no smarter than anybody else. I think everybody in the group felt the same way, and that was a big part of what made the group the way it was.

In 1979, Lick penned the last of his periodic prognostications of the world’s networked future, for a book of essays about the abstract future of computing that was published by the MIT Press. As before, he took the year 2000 as the watershed point.

On the whole, computer technology continues to advance along the curve it has followed in its three decades of history since World War II. The amount of information that can be stored for a given period or processed in a given way at unit cost doubles every two years. (The 21 years from 1979 to 2000 yielded ten doublings, for a factor of about 1000.) Wave guides, optical fibers, rooftop satellite antennas, and coaxial cables provide abundant bandwidth and inexpensive digital transmission both locally and over long distances. Computer consoles with good graphics displays and speech input and output have become almost as common as television sets. Some pocket computers are fully programmable, as powerful as IBM 360/40s used to be, and are equipped with both metallic and radio connectors to computer-communication networks.

An international network of digital computer-communication networks serves as the main and essential medium of informational interaction for governments, institutions, corporations, and individuals. The Multinet [i.e., Internet], as it is called, is hierarchical — some of the component networks are themselves networks of networks — and many of the top-level networks are national networks. The many sub-networks that comprise this network of networks are electronically and physically interconnected. Most of them handle real-time speech as well as computer messages, and some handle video.

The Multinet has supplanted the postal system for letters, the dial-telephone system for conversations and teleconferences, standalone batch-processing and time-sharing systems for computation, and most filing cabinets, microfilm repositories, document rooms, and libraries for information storage and retrieval. Many people work at home, interacting with clients and coworkers through the Multinet, and many business offices (and some classrooms) are little more than organized interconnections of such home workers and their computers. People shop through the Multinet, using its funds-transfer functions, and a few receive delivery of small items through adjacent pneumatic-tube networks. Routine shopping and appointment scheduling are generally handled by private-secretary-like programs called OLIVERs which know their masters’ needs. Indeed, the Multinet handles scheduling of almost everything schedulable. For example, it eliminates waiting to be seated at restaurants and if you place your order through it it can eliminate waiting to be served…

But for the first time, Lick also chose to describe a dystopian scenario to go along with the utopian one, stating that the former was just as likely as the latter if open standards like TCP/IP, and the spirit of cooperation that they personified, got pushed away in favor of closed networks and business models. If that happened, the world’s information spaces would be siloed off from one another, and humanity would have lost a chance it never even realized it had.

Because their networks are diverse and uncoordinated, recalling the track-gauge situation in the early days of railroading, the independent “value-added-carrier” companies capture only the fringes of the computer-communication market, the bulk of it being divided between IBM (integrated computer-communication systems based on satellites) and the telecommunications companies (transmission services but not integrated computer-communication services, no remote-computing services)…

Electronic funds transfer has not replaced money, as it turns out, because there were too many uncoordinated bank networks and too many unauthorized and inexplicable transfers of funds. Electronic message systems have not replaced mail, either, because there were too many uncoordinated governmental and commercial networks, with no network at all reaching people’s homes, and messages suffered too many failures of transfers…

Looking back on these two scenarios from the perspective of 2022, when we stand almost exactly as far beyond Lick’s watershed point as he stood before it, we can note with gratification that his more positive scenario turned out to be the more correct one; if some niceties such as computer speech recognition didn’t arrive quite on his time frame, the overall network ecosystem he described certainly did. We might be tempted to contemplate at this point that the J.C.R. Licklider of 1979 may have been older in some ways than his 64 years, being a man who had known as much failure as success over the course of a career spanning four and a half impossibly busy decades, and we might be tempted to ascribe his newfound willingness to acknowledge the pessimistic as well as the optimistic to these factors alone.

But I believe that to do so would be a mistake. It is disarmingly easy to fall into a mindset of inevitability when we consider the past, to think that the way things turned out is the only way they ever could have. In truth, the open Internet we are still blessed with today, despite the best efforts of numerous governments and corporations to capture and close it, may never have been a terribly likely outcome; we may just possibly have won an historical lottery. When you really start to dig into the subject, you find that there are countless junctures in the story where things could have gone very differently indeed.

Consider: way back in 1971, amidst the first rounds of fiscal austerity at ARPA, Larry Roberts grew worried about whether he would be able to convince his bosses to continue funding the fledgling ARPANET at all. Determined not to let it die, he entered into serious talks with AT&T about the latter buying the whole kit and caboodle. After months of back and forth, AT&T declined, having decided there just wasn’t any money to be made there. What would have happened if AT&T had said yes, and the ARPANET had fallen into the hands of such a corporation at this early date? Not only digital history but a hugely important part of recent human history would surely have taken a radically different course. There would not, for instance, have ever been a TCP/IP to run the Internet if ARPA had washed its hands of the whole thing before Robert Kahn and Vint Cerf could create it.

And so it goes, again and again and again. It was a supremely unlikely confluence of events, personalities, and even national moods that allowed the ARPANET to come into being at all, followed by an equally unlikely collection of same that let its child the Internet survive down to the present day with its idealism a bit tarnished but basically intact. We spend a lot of time lamenting the horrific failures of history. This is understandable and necessary — but we should also make some time here and there for its crazy, improbable successes.

On October 4, 1985, J.C.R. Licklider finally retired from MIT for good. His farewell dinner that night had hundreds of attendees, all falling over themselves to pay him homage. Lick himself, now 70 years old and visibly infirm, accepted their praise shyly. He seemed most touched by the speakers who came to the podium late in the evening, after the big names of academia and industry: the group of students who had taken to calling themselves “Lick’s kids” — or, in hacker parlance, “lixkids.”

“When I was an undergraduate,” said one of them, “Lick was just a nice guy in a corner office who gave us all a wonderful chance to become involved with computers.”

“I’d felt I was the only one,” recalled another of the lixkids later. “That somehow Lick and I had this mystical bond, and nobody else. Yet during that evening I saw that there were 200 people in the room, 300 people, and that all of them felt that same way. Everybody Lick touched felt that he was their hero and that he had been an extraordinarily important person in their life.”

J.C.R. Licklider died on June 26, 1990, just as the networked future he had so fondly envisioned was about to become a tangible reality for millions of people, thanks to a confluence of three factors: an Internet that was descended from the original ARPANET, itself the realization of Lick’s own Intergalactic Computer Network; a new generation of cheap and capable personal computers that were small enough to sit on desktops and yet could do far more than the vast majority of the machines Lick had had a chance to work on; and a new and different way of navigating texts and other information spaces, known as hypertext theory. In the next article, we’ll see how those three things yielded the World Wide Web, a place as useful and enjoyable for the ordinary folks of the world as it is for computing’s intellectual elites. Lick, for one, wouldn’t have had it any other way.

(Sources: the books A Brief History of the Future: The Origins of the Internet by John Naughton; Where Wizards Stay Up Late: The Origins of the Internet by Katie Hafner and Matthew Lyon; Hackers: Heroes of the Computer Revolution by Steven Levy; From Gutenberg to the Internet: A Sourcebook on the History of Information Technology edited by Jeremy M. Norman; The Dream Machine by M. Mitchell Waldrop; A History of Modern Computing (2nd ed.) by Paul E. Ceruzzi; Communication Networks: A Concise Introduction by Jean Walrand and Shyam Parekh; Computing in the Middle Ages by Severo M. Ornstein; and The Computer Age: A Twenty-Year View edited by Michael L. Dertouzos and Joel Moses.)

Footnotes

[1] In fact, Lick agreed to join Infocom’s board of directors, although his role there was a largely ceremonial one; he was not a gamer himself, and had little knowledge of or interest in the commercial market for home-computer games that had begun to emerge by the beginning of the 1980s. Still, everyone involved with the company remembers that he genuinely exulted at Infocom’s successes and commiserated with their failures, just as he did with those of all of his former students.

Lee

May 6, 2022 at 5:48 pm

Jimmy, when I was a formative sysadmin geek in the 90s, I was taught that the “T” in TCP was for “Transmission,” rather than “Transport.” I’ve actually not ever heard “Transport Control Protocol” before reading this piece.

Do you have some historic sourcing on “transmission” vs “transport”? Is this one of those areas where history has corrupted an acronym, like how an unfortunately large number of people today seem to think the “I” in RAID stands for “independent” instead of the correct “inexpensive”? Would love to know.

Really enjoying this series. Thanks for writing it!

Jimmy Maher

May 6, 2022 at 8:06 pm

I’d love that to be the case, but I’m afraid it was just an error on my part. I checked the original journal article from 1974, and it was indeed called Transmission Control Protocol from the start. Thanks for pointing it out!

Derek

May 6, 2022 at 6:24 pm

Hearing the story of the first message sent via the internet, I’ve always assumed that once they got the system back up, they tried to type LOGIN again. Which would mean that the first three letters on the internet were “L O L”.

Jimmy Maher

May 6, 2022 at 8:06 pm

:)

David Boddie

May 6, 2022 at 11:09 pm

A small correction: “before Robert Kahn and Vint Cern could create it” (Cerf)

You were getting ahead of yourself, perhaps. ;-)

Jimmy Maher

May 7, 2022 at 7:22 am

Indeed. :) Thanks!

Keith Palmer

May 6, 2022 at 11:48 pm

I’ve been reading this series with interest, although the first comment I’ve really thought of making is a small anecdote about a possibly early reference to ARPANET, or rather “content on it.” The September-October 1976 issue of Creative Computing included three reviews of Joseph Weizenbaum’s Computer Power and Human Reason, and the most critical of them (by John McCarthy) mentioned it had “originally ‘appeared’ in a public file on the ARPA net.” If this series gets around to mentioning Usenet (which I understand wasn’t “on the Internet” to begin with), I could mention a reference to it from 1985 I once came across.

David Ghandehari

May 6, 2022 at 11:57 pm

Just a “little” background on IP vs TCP, and TCP’s less-well-known little brother, UDP. I’ve been telling my kindergartner about them to put him to sleep, lately, works great!

Internet Protocol is focused on getting packets to their destination — just routing. It has 3 major limitations:

1. It’s unreliable. Routers buffer packets if too many arrive at once, but if those buffers get too big, it causes all sorts of backup problems. So, the buffers are small, and when full, the buffers just drop packets. There are no guarantees about delivery of any given packet.

2. It’s unordered. Two packets, 1 and 2, sent from node A to node B might take different routes. One might be slower, and so packet 2 could easily arrive at B before packet 1.

3. It’s a fixed size. Generally around 1500 bytes, but if your IP packet is bigger than fits on any leg of the journey, it’ll be dropped.

TCP then solves these problems by sending ACKnowledge packets back to say which IP packets were received, and retransmits lost packets. It also assigns an order number to each packet, and reorders packets that arrive out of order. Finally, TCP uses a metaphor of a streaming connection to avoid packet size concerns. You write bytes and it will break them into IP packets somehow. The other side gets bytes in the same order.

TCP has a big problem, too, though. It’s called Head-of-Line Blocking. If packet 73 gets dropped, then any packets after 73 will be held up until 73 is successfully ACKed. A lot of times, those other packets have nothing to do with packet 73, they are just being transmitted on the same TCP connection. This can add unpredictable latency to real-time latency-sensitive applications, like voice chat, video chat, and games.

So, these apps tend to use UDP, which stands for User Datagram Protocol. It’s much like IP in that it is unreliable and unordered, but it allows for much bigger packets, up to 64 KB. And if they need ordering or reliability, they build it themselves, often in a lighter-weight or partial way.

Google came up with QUIC, which has been approved as the basis for HTTP/3. It is based on UDP to avoid the Head-of-Line Blocking problem, but it pretty much provides everything that TCP and HTTP and TLS (encryption) do. Things move very slowly in the Internet world, because no one can break compatibility with anything, but I think eventually we will move pretty much everything off of HTTP/TLS/TCP/IP and onto QUIC/UDP/IP.
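
The reordering and Head-of-Line Blocking behavior described in this comment can be sketched in a few lines of Python. This is only a toy model under stated assumptions, not how any real TCP stack is written: the `InOrderReceiver` class and its `receive` method are hypothetical names, and it numbers whole segments starting at 0, whereas real TCP numbers bytes and also deals with ACKs, retransmission timers, and windows.

```python
from typing import Dict, List


class InOrderReceiver:
    """Toy receiver (hypothetical) that releases payloads strictly in sequence order."""

    def __init__(self) -> None:
        self.next_seq = 0                  # next sequence number the application may read
        self.pending: Dict[int, str] = {}  # segments that arrived ahead of their turn

    def receive(self, seq: int, payload: str) -> List[str]:
        """Accept one segment and return whatever can now be delivered in order."""
        if seq < self.next_seq or seq in self.pending:
            return []  # duplicate, e.g. a retransmission that turned out to be unneeded
        self.pending[seq] = payload
        delivered: List[str] = []
        # Drain the buffer only while there is no gap in the sequence.
        while self.next_seq in self.pending:
            delivered.append(self.pending.pop(self.next_seq))
            self.next_seq += 1
        return delivered


if __name__ == "__main__":
    rx = InOrderReceiver()
    # Segment 1 is delayed (or lost and later retransmitted); 2 and 3 arrive first.
    for seq, payload in [(0, "a"), (2, "c"), (3, "d"), (1, "b")]:
        print(seq, rx.receive(seq, payload))
```

Segments 2 and 3 sit in the buffer, delivering nothing, until segment 1 finally shows up; that wait is the Head-of-Line Blocking effect. An application on UDP would see each datagram as it arrives and decide for itself whether the gap matters.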

Daniel Wolf

May 7, 2022 at 5:49 am

Typo: “bids to built” -> “bids to build”

Jimmy Maher

May 7, 2022 at 7:23 am

Thanks!

Nate

May 8, 2022 at 5:19 am

Enjoying this series. Thanks

Typo: “United Sates Congress”

Jimmy Maher

May 8, 2022 at 6:42 am

Thanks!

Adam Sampson

May 8, 2022 at 3:32 pm

“Stanford’s AI Lab had brought a program called Parry, an evolution of Eliza, a computerized “psychotherapist” — we would call it a chatbot today — originally created by one Joseph Weizenbaum at MIT in 1966.”

That maybe undersells PARRY a bit – it simulates a patient rather than a therapist, and it’s somewhat more complex than ELIZA. The SAILDART archive shows it was new in 1972 and was still being developed in 1978; as with ZORK at MIT, SAIL eventually had to limit ARPANET guest users’ access to it to reduce system load. The conversation you’re quoting is between the two programs, and is from RFC 439 in September 1972: “PARRY was running at SAIL and DOCTOR [ELIZA] at BBN Tenex, both being accessed from UCLA/SEX”.

The complete “Scenarios for using ARPANET” handout from the ICCC 1972 conference, with instructions for using all the services that participants had set up, is available here courtesy of Jack Haverty: https://github.com/larsbrinkhoff/its-archives/blob/master/dmcg/ICCCScenarios1972.pdf

It’s interesting to see what applications the participants thought would be worth showing off fifty years ago – there are several chatbots and other games, including MIT’s JOTTO, which is effectively Wordle.

Jimmy Maher

May 9, 2022 at 7:46 am

Ah, I didn’t get the detail that Parry was talking *to* Eliza. Edit made. Thanks!

Conor

May 15, 2022 at 4:25 pm

I’m really enjoying this series. I read a story this week about the new internet cables that Google, Meta, and Amazon are laying, and I felt like I actually had context for my reading.

https://restofworld.org/2022/google-meta-underwater-cables/

Matt Krzesniak

May 18, 2022 at 11:41 pm

Another great article!

I’m guessing this should be neighborhood?

“There was ALOHANET, which used radio waves to join the various campuses of the University of Hawaii, which were located on different islands, into one computing neighorbood.”

Jimmy Maher

May 19, 2022 at 4:43 am

Yes. Thanks!

Nathanael

May 29, 2022 at 5:56 am

Worth discussing the next stage, which generally gets left out of the histories: the massive network expansions related to CSNet and NSFNet in the US.

There were arguments over whether to use the “official” OSI model, or TCP/IP. TCP/IP was chosen. Shortly after this buildout all the universities in the US started having real-time always-on Internet connections usable by anyone — rather than the “delayed”, low-bandwidth, sometimes only on during certain hours of the day, internet connections which many had before (those older Internet connections were primarily useable for email or Usenet, but not for “live” activities). Similar things happened in other countries.

This is often a missing stage in the histories; it comes after the part discussed here, and before the WWW. There are some write-ups and lectures recorded on YouTube about it though.

Wouter Lammers

June 18, 2022 at 6:34 pm

I spotted a “Seeing that no else was doing so”. “no one” or “nobody” maybe?

Enjoying this series a lot!

Jimmy Maher

June 19, 2022 at 1:21 pm

Thanks!

Peter Olausson

June 27, 2022 at 7:39 am

Minute remark: Charley Kline seems to be the preferred spelling of the pioneer’s name.

I hope there’s a statue or something of Licklider somewhere. And Derek, I really liked the LOL-remark!

Jimmy Maher

June 27, 2022 at 3:22 pm

Thanks! Sadly, Licklider is not immortalized anywhere that I know of. It would be nice to see something on the MIT campus if nowhere else…

Not Fenimore

July 24, 2022 at 3:59 am

The picture is labeled “Stephen Lukasik, ARPA head and original email-obsessed road warrior.” – it should presumably be “Larry Roberts”?

Jimmy Maher

July 24, 2022 at 9:41 am

Nope, it’s as it should be.