New World Computing’s Heroes of Might and Magic II: The Succession Wars is different from the strategy-game sequels we’ve previously examined in this series in a couple of important ways. For one thing, it followed much more quickly on the heels of its predecessor: the first Heroes shipped in September of 1995, this follow-up just over one year later. This means that it doesn’t represent as dramatic a purely technological leap as do Civilization II and Master of Orion II; Heroes I had already been able to take advantage of SVGA graphics, CD-ROM, and all the other transformations the average home computer underwent during the first half of the 1990s. But the Heroes series as a whole is also conceptually different from the likes of Civilization and Master of Orion. It’s a smaller-scale affair, built around human-crafted rather than procedurally-generated maps, with more overt, pre-scripted narrative elements. All of these factors cause Heroes II to blur the boundaries between the fiction-driven and the systems-driven sequel. Its campaign (which is, as we’ll see, only one part of what it has to offer) is presented as a direct continuation of the story, such as it was, of Heroes I. At the same time, though, it strikes me as safe to say that no one bought the sequel out of a burning desire to find out what happens to the sons of Lord Morglin Ironfist, the star of the first game’s sketchy campaign. They rather bought it because they wanted a game that did what Heroes I had done, only even better. And fortunately for them, this is exactly what they got.

Heroes II doesn’t revamp its predecessor to the point of feeling like a different game entirely, as Master of Orion II arguably does. The scant amount of time separating it from its inspiration wouldn’t have allowed for that even had its creators wished it. It would surely not have appeared so quickly — if, indeed, it ever appeared at all — absent the new trend of strategy-game sequels. But as it was, Jon Van Caneghem, the founder of New World Computing and the mastermind of Heroes I and II, approached it as he had his earlier Might and Magic CRPG series, which had seen five installments by the time he (temporarily) shifted his focus to strategy gaming. “We weren’t making a sequel for the first time,” says the game’s executive producer Mark Caldwell. “So we did as we always did. Take ideas we couldn’t use or didn’t have time to implement in the previous game and work them into the next one. Designing a computer game, at least at [New World], was always about iterating. Just start, get something working, then get feedback and iterate.” In the case of Heroes II, it was a matter of capitalizing on the first game’s strengths — some of which hadn’t been entirely clear to its own makers until it was released and gamers everywhere had fallen in love with it — and punching up its relatively few weaknesses.

Ironically, the aspects of Heroes I that people seemed to appreciate most of all were those that caused it to most resemble the Might and Magic CRPGs, whose name it had borrowed more for marketing purposes than out of any earnest belief that it was some sort of continuation of that line. Strategy designers at this stage were still in the process of learning how the inclusion of individuals with CRPG-like names and statistics, plus a CRPG-like opportunity to level them up as they gained experience, could allow an often impersonal-feeling style of game to forge a closer emotional connection with its players. The premier examples before Heroes I were X-COM, which had the uncanny ability to make the player’s squad of grizzled alien-fighting soldiers feel like family, and Master of Magic, whose own fantasy heroes proved to be so memorable that they almost stole the show, much to the surprise of that game’s designer. Likewise, Jon Van Caneghem had never intended for the up to eight heroes you can recruit to your cause in Heroes of Might and Magic to fill as big a place in players’ hearts as they did. He originally thought he was making “a pure strategy game that was meant to play and feel like chess.” But a rudimentary leveling system along with names and character portraits for the heroes, all borrowed to some extent from his even earlier strategy game King’s Bounty, sneaked in anyway, and gamers loved it. The wise course was clearly to double down on the heroes in Heroes II.

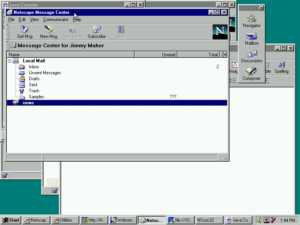

Thus we get here a much more fleshed-out system for building up the capabilities of our fantasy subordinates, and building up our emotional bond with them in the process. The spells they can cast, in combat and elsewhere, constitute the single most extensively revamped part of the game. Not only are there many more of them, but they’ve been slotted into a magic system complex enough for a full-fledged CRPG, with spell books and spell points and all the other trimmings. And there are now fourteen secondary skills outside the magic system for the heroes to learn and improve as they level up, from Archery (increases the damage a hero’s minions do in ranged combat) to Wisdom (allows the hero to learn higher-level spells), from Ballistics (increases the damage done by the hero’s catapults during town sieges) to Scouting (lets the hero see farther when moving around the map).

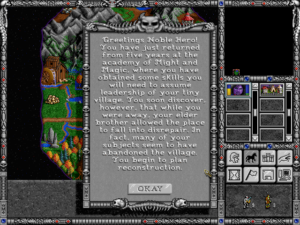

Another thing that CRPGs had and strategy games usually lacked was a strong element of story. Heroes I did little more than gesture in that direction; its campaign was a set of generic scenarios that were tied together only by the few sentences of almost equally generic text in a dialog box that introduced each of them. The campaign in Heroes II, on the other hand, goes much further. It’s the story of a war between the brothers Roland and Archibald, good and evil respectively, the sons of the protagonist of Heroes I. You can choose whose side you wish to fight on at the outset, and even have the opportunity to switch sides midstream, among other meta-level decisions. Some effects and artifacts carry over from scenario to scenario during the campaign, giving the whole experience that much more of a sense of continuity. And the interstices between the scenarios are filled with illustrations and voice-over narration. The campaign isn’t The Lord of the Rings by any means — if you’re like me, you’ll have forgotten everything about the story roughly one hour after finishing it — but it more than serves its purpose while you’re playing.

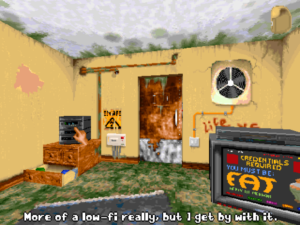

Instead of just a few lines of text, each scenario in the campaign game is introduced this time by some lovely pixel art and a well-acted voice-over.

These are the major, obvious improvements, but they’re joined by a host of smaller ones that do just as much in the aggregate to make Heroes II an even better, richer game. When you go into battle, the field of action has been made bigger. Put another way, the hexes on which the combatants stand have been made smaller, giving space for twice as many of them on the screen. This results in engagements that feel less cramped, both physically and tactically; ranged weapons especially really come into their own when given more room to roam, as it were. The strategic maps can be larger too, by up to four times, or for that matter smaller, again by up to four times. This creates the potential for scenarios with wildly different personalities, from vast open-world epics to claustrophobic cage matches.

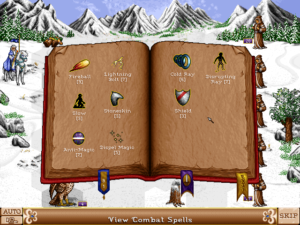

The tactical battlefields are larger now, with a richer variety of spells to employ in ingenious combinations.

And then, inevitably, there’s simply more stuff everywhere you turn. There are two new factions to play as or against, making a total of six in all; more types of humans, demi-humans, and monsters to recruit and fight against; more types of locations to visit on the maps; more buildings to construct in your towns; way more cool weapons and artifacts to discover and give to your heroes; and more, and more varied, standalone scenarios to play in addition to the campaign.

One of the two new factions is the necromancers, who make the returning warlocks seem cute and cuddly by comparison. Necromancer characters start with, appropriately enough, the “necromancy” skill, which gives them the potential to raise huge armies of skeletons from their opponents’ corpses after they’ve vanquished them. This has been called unbalancing, and it probably is in at least some situations, but it’s also a heck of a lot of fun, not to mention the key to beating a few of the most difficult scenarios.

The other new faction is the wizards. They can eventually recruit lightning-flinging titans, who are, along with the warlocks’ black dragons, the most potent single units in the game.

After Heroes II was released, New World delegated the task of making an expansion pack to Cyberlore Studios, an outfit with an uncanny knack for playing well with others’ intellectual property. (At the time, Cyberlore had just created a well-received expansion pack for Blizzard’s Warcraft II.) Heroes of Might and Magic II: The Price of Loyalty, the result of Cyberlore’s efforts, comes complete with not one but four new campaigns, each presented with the same lavishness as the chronicles of Roland and Archibald, along with still more new creatures, locations, artifacts, and standalone scenarios. All are welcome.

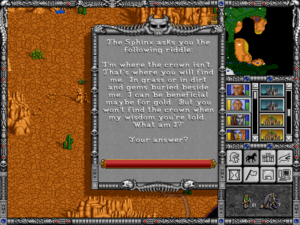

There are even riddles. Sigh… you can’t win them all, I guess. I feel about riddles in games the way Indiana Jones does about snakes in archaeological sites.

But wait, I can hear you saying: didn’t you just complain in those articles about Civilization II and Master of Orion II that just adding more stuff doesn’t automatically or even usually make a game better? I did indeed, and I’ve been thinking about why my reaction to Heroes II is so different. Some of it undoubtedly comes down to purely personal preferences. The hard truth is that I’ve always been more attracted to Civilization as an idea than as an actual game; impressive as Civilization I was in the context of its time, I’ll go to my grave insisting that there are even tighter, even more playable designs than that one in the Sid Meier canon, such as Pirates! and Railroad Tycoon. I’m less hesitant to proclaim Master of Orion I a near-perfect strategy masterpiece, but my extreme admiration for it only makes me unhappy with the sequel, which removed some of the things I liked best about the original in favor of new complexities that I find less innovative and less compelling. I genuinely love Heroes I as well, and thus love the sequel even more for not trying to reinvent this particular wheel, for trying only to make it glide along that much more smoothly.

I do think I can put my finger on some objective reasons why Heroes II manages to add so much to its predecessor’s template without adding any more tedium. It’s starting from a much sparser base, for one thing; Heroes I is a pretty darn simple beast as computer strategy games of the 1990s go. The places where Heroes II really slathers on the new features — in the realms of character development, narrative, and to some extent tactical combat — are precisely those where its predecessor feels most under-developed. The rest of the new stuff, for all its quantity, adds variety more so than mechanical complexity or playing time. A complete game of Heroes II doesn’t take significantly longer to play than a complete game of Heroes I (unless you’re playing on one of those new epic-sized maps, of course). That’s because you won’t even see most of the new stuff in any given scenario. Heroes II gives its scenario designers a toolbox with many more bits and pieces to choose from, so that the small subset of them you see as a player each time out is always fresh and surprising. You have to play an awful lot of scenarios to exhaust all this game has to offer. It, on the other hand, will never exhaust you with fiddly details.

Anyway, suffice it to say that I love Heroes II dearly. I’ve without a doubt spent more hours with it than any other game I’ve written about on this site to date. One reason for that is that my wife, who would never be caught dead playing a game like Civilization II or Master of Orion II, likes this one almost as much as I do. We’ve whiled away many a winter evening in multiplayer games, sitting side by side on the sofa with our laptops. (If that isn’t a portrait of the modern condition, I don’t know what is…) Sure, Heroes II is a bit slow to play this way by contemporary standards, being turn-based, and with consecutive rather than simultaneous turns at that, but that’s what good tunes on the stereo are for, isn’t it? We don’t like to fight each other, so we prefer the scenarios that let us cooperate, another welcome new feature. Or, failing that, we just agree to play until we’re the only two factions left standing.

What makes Heroes II such a great game in the eyes of both of us, and such a superb example of an iterative sequel done well? Simply put, everything that was fun in the first game is even more fun in the sequel. It still combines military strategy with the twin joys of exploration and character development in a way that I’ve never seen bettered. (My wife, bless her heart, is more interested in poking her head into every nook and cranny of a map and accessorizing her heroes like they’ve won a gift certificate to Lord & Taylor than she is in actually taking out the enemy factions, which means that’s usually down to me…) The strengthened narrative elements, not only between but within scenarios (a system of triggers now allows the scenario designer to advance the story even as you play), only make the stew that much richer. Meanwhile the whole game is exquisitely polished, showing in its interface’s every nuance the hours and hours of testing and iterating that went into it before its release.

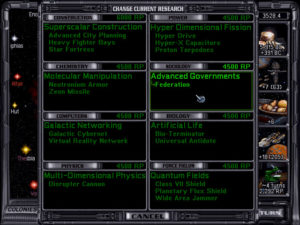

In this respect and many others, the strengths of Heroes II are the same as those of Heroes I. Both, for example, manage to dodge some of the usual problems of grand-strategy games by setting their sights somewhat lower than the 4X likes of Civilization and Master of Orion. There is no research tree here, meaning that the place where 4X strategizing has a tendency to become most rote is deftly sidestepped. Very, very little is rote about Heroes; many of its human-designed maps are consciously crafted to force you to abandon your conventional thinking. (The downside of this is a certain puzzle-like quality to some of the most difficult scenarios: a One True Way to Win that you must discover through repeated attempts and repeated failures. But even here, the thrill of figuring them out outweighs the pain if you ask me.) Although the problem of the long, anticlimactic ending (that stretch of time after you know you’re going to win) isn’t entirely absent, some scenarios do have alternative victory conditions, and the fact that most of them can be played in a few hours at most from start to finish helps as well. The game never gets overly bogged down by tedious micromanagement, thanks to some sagacious limits that have been put in place, most notably the maximum of eight heroes you’re allowed to recruit, meaning that you can never have more than eight armies in the field. (It’s notable and commendable that New World resisted the temptation that must surely have existed to raise this limit in Heroes II.) The artificial intelligence of your computer opponents isn’t great by any means, but somehow even that doesn’t feel so annoying here; if the more difficult scenarios must still become that way by pitting your human cleverness against silicon-controlled hordes that vastly outnumber you, there are at least always stated reasons for the disparity to hand in a narrative-driven game like this one.

Also like its predecessor, Heroes II is an outlier among the hit games of the late 1990s, being turn-based rather than real-time, and relying on 2D pixel art rather than 3D graphics. Jon Van Caneghem has revealed in interviews that he actually did come alarmingly close to chasing both of those trends, but gave up on them for reasons having more to do with budgetary and time constraints than any sort of purist design ideology. For my part, I can only thank the heavens that such practicalities forced him to keep it old-school in the end. Heroes II still looks great today, which would probably not be the case if it were presented in jaggy 1990s 3D. New World’s artists had a distinct style, one that also marked the Might and Magic CRPG series: light, colorful, whimsical, and unabashedly cartoon-like, in contrast to the darker hued, ultra-violent aesthetic that marked so much of the industry in the post-DOOM era. Heroes II is perhaps slightly murkier in tone and tint than the first game, but it remains a warming ray of sunshine when stood up next to its contemporaries, who always seem to be trying too hard to be an epic saga, man. Its more whimsical touches never lose their charm: the vampires who go Blahhh! like Count Chocula when they make an attack, the medusae who slink around the battlefield like supermodels on the catwalk. Whatever else you can say about it, you can never accuse Heroes of Might and Magic of taking itself too seriously.

But there is one more way that Heroes II improves on Heroes I, and it is in some senses the most important of them all. The sequel includes a scenario construction kit, the very same tool that was used to build the official maps; the only thing missing is a way to make the cut scenes that separate the campaign scenarios. It came at the perfect time to give Heroes II a vastly longer life than it otherwise would have enjoyed, even with all of its other merits.

The idea of gaming construction kits was already a venerable one by the time of Heroes II’s release. Electronic Arts made it something of their claim to fame in their early years, with products like Pinball Construction Set, Adventure Construction Set, and Racing Destruction Set. Meanwhile EA’s affiliated label Strategic Simulations had a Wargame Construction Set and Unlimited Adventures (the latter being a way of making new scenarios for the company’s beloved Gold Box CRPG engine). But all of these products were hampered somewhat by the problem of what you the buyer were really to do with a new creation into which you had poured your imagination, talent, and time. You could share it among your immediate circle of friends, assuming they had all bought (or pirated) the same game you had, maybe even upload it to a bulletin board or two or to a commercial online service like CompuServe, but doing so only let you reach a tiny cross-section of the people who might be able and willing to play it. And this in turn led to you asking yourself an unavoidable question: is this thing I want to make really worth the effort if it will hardly get played?

The World Wide Web changed all that at a stroke, as it did so much else in computing and gaming. The rise of a free and open, easily navigable Internet meant that you could now share your creation with everyone who owned the same base game you did. And so gaming construction kits of all stripes suddenly became much more appealing, and could at last begin to fulfill their potential. Heroes of Might and Magic II is a prime case in point.

A bustling community of amateur Heroes II designers sprang up on the Internet after the game’s release, to stretch it in all sorts of delightful ways that New World had never anticipated. The best of the thousands of scenarios they produced are so boldly innovative as to make the official ones seem a bit dull and workmanlike by comparison. For example, “Colossal Cavern” lives up to its classic text-adventure namesake by re-imagining Heroes II as a game of dungeon delving and puzzle solving rather than strategic conquest. “Go Ask Alice,” by contrast, turns it into a game of chess with living pieces, as in Lewis Carroll’s Through the Looking-Glass. “The Road Home” is a desperate chase across a sprawling map with enemy armies that outnumber you by an order of magnitude hot on your heels. And “Agent of Heaven” is a full campaign (one of a surprising number created by enterprising fans) that lets you live out ancient Chinese history, from the age of Confucius through the rise of the Qin and Han dynasties; it’s spread over seven scenarios, with lengthy journal entries to read between and within them as you go along.

The scenario editor has its limits as a vehicle for storytelling, but it goes farther than you might expect. Text boxes like these feature in many scenarios, and not only as a way of introducing them. The designer can set them to appear when certain conditions are fulfilled, such as a location visited for the first time by the player or a given number of days gone by. In practice, the most narratively ambitious scenarios tend to be brittle and to go off the rails from a storytelling perspective as soon as you do something in the wrong order, but one can’t help but be impressed by the lengths to which some fans went. Call it the triumph of hope over experience…

As the size and creative enthusiasm of its fan community will attest, Heroes II was hugely successful in commercial terms, leaving marketers everywhere shaking their heads at its ability to be so whilst bucking the trends toward real-time gameplay and 3D graphics. I can give you no hard numbers on its sales, but anecdotal and circumstantial evidence alone would place it not too far outside the ballpark of Civilization II’s sales of 3 million copies. Certainly its critical reception was nothing short of rapturous; Computer Gaming World magazine pronounced it “nearly perfect,” “a five-star package that will suck any strategy gamer into [a] black hole of addictive fun.” The expansion too garnered heaps of justified praise and stellar sales when it arrived some nine months after the base game. The only loser in the equation was Heroes I, a charming little game in its own right that was rendered instantly superfluous by the superior sequel in the eyes of most gamers.

Personally, though, I’m still tempted to recommend that you start with Heroes I and take the long way home, through the whole of one of the best series in the history of gaming. Then again, time is not infinite, and mileages do vary. The fact is that this series tickles my sweet spots with uncanny precision. Old man that I’m fast becoming, I prefer its leisurely turn-based gameplay to the frenetic pace of real-time strategy. At the same time, though, I do appreciate that it plays quickly in comparison to a 4X game. I love its use of human-crafted scenarios, which I almost always prefer to procedurally-generated content, regardless of context. And of course, as a dyed-in-the-wool narratological gamer, I love the elements of story and character-building that it incorporates so well.

So, come to think of it, this might not be such a bad place to start with Heroes of Might and Magic after all. Or to finish, for that matter — if only it wasn’t for Heroes III. Now there’s a story in itself…

(Sources: Retro Gamer 239; Computer Gaming World of February 1997 and September 1997; XRDS: The ACM Magazine for Students of Summer 2017. Online sources include Matt Barton’s interview with Jon Van Caneghem.

Heroes of Might and Magic II is available for digital purchase on GOG.com, in a “Gold” edition that includes the expansion pack.

And here’s a special treat for those of you who’ve made it all the way down here to read the fine print. I’ve put together a zip file of all of the Heroes II scenarios from a “Millennium” edition of the first three Heroes games that was released in 1999. It includes a generous selection of fan-made scenarios, curated for quality. You’ll also find the “Agent of Heaven” campaign mentioned above, which, unlike the other three fan-made scenarios I described, wasn’t a part of the Millennium edition. To access the new scenarios, rename the folder “MAPS” in your Heroes II installation directory to something else for safekeeping, then unzip the downloaded archive into the installation directory. The next time you start Heroes II, you should find all of the new scenarios available through the standard “New Game” menu. Note that some of the more narratively ambitious new scenarios feature supplemental materials, found in the “campaigns” and “Journals” folders. Have fun!)