In addition to Defender of the Crown, Bob Jacob and Cinemaware were able to deliver two more of their planned four launch titles to Mindscape before the end of 1986. Only Bill Williams’s Sinbad and the Throne of the Falcon fell hopelessly behind schedule, getting pushed well into the following year. Of the games that did make it, Sculptured Software’s Atari ST game S.D.I. is mildly interesting as a time capsule of its era, Doug Sharp’s Macintosh game King of Chicago much more so as an important experiment in interactive narrative. Today I’ll endeavor to give each game its just deserts.

The scenario of S.D.I. is almost hilariously of its time, a weird stew of science fiction and contemporary geopolitics that quotes Ronald Reagan’s speeches in its manual and could never have emerged more than a year or so earlier or later. It’s 2017, the Cold War has gone on business-as-usual for another thirty years, and Ronald Reagan’s vaunted Strategic Defense Initiative is approaching completion at last. In response, a large group of hardliners in the Soviet military have seized control of many of their country’s ICBM sites to launch a preemptive first strike, while also — this being 2017 and all — flooding Earth orbit with fighter planes to blow up those S.D.I. satellites that are already online. This being a computer game and all, the nascent trillion-dollar S.D.I. program comes down to one guy with the square-jawed name of Sloan McCormick, who’s expected to jump into his spaceship to shoot down the rebel fighters in between manually shooting rogue ICBMs out of the sky using the S.D.I. satellites. He’s of course played by you. If you succeed in holding the hardliners’ attacks at bay for long enough, you’ll get a distress call from the legitimate Soviet government’s central command station, whereupon — just in case anyone was thinking you hadn’t done enough for the cause already — you’ll have to singlehandedly enter the station and rescue it from a final assault by the hardliners. Succeed and you’ll get your trademark Cinemaware reward in the form of Natalya, the sultry commander of the station who’s inexplicably in love/lust with you. Who said glasnost was dead?

Like Defender of the Crown, S.D.I. very nearly missed its planned launch. It took John Cutter stepping in and riding herd over a Sculptured Software that seemed to be just a little out of their depth to push the project along to completion. It isn’t a terrible game, but it is the Cinemaware game that feels least like a Cinemaware game, well earning its status as the forgotten black sheep of the family. Natalya aside, its cinematic influences are minimal. The manual tries heroically to draw a line of concordance through heroes like Flash Gordon and Han Solo to end up at Sloan McCormick, but even it must admit to an important difference: “This time the danger comes, not from an alien invasion, but from a force here on Earth.” Likewise, S.D.I. doesn’t conform to the normal Cinemaware ethos of (in Jacob’s words) “no typing, get you right into the game, no manual.” Flying around in space blasting rebels requires memorizing a number of keyboard commands that can be found nowhere other than the ideally unnecessary manual. What with its demanding, non-stop action broken down into distinct stages, S.D.I. reminds me of nothing so much as Access Software’s successful line of Commodore 64 action games that included Beach-Head and Raid Over Moscow; S.D.I. also shares something of a theme with the latter game, although it didn’t provoke anything like the same controversy. Unfortunately, Cinemaware’s take on the concept just isn’t executed as well. The “flight simulator” where you spend the majority of your time is a particular disappointment; your enemies follow a few distressingly predictable flight patterns, while your control over your own ship is nonsensically limited to gentle turns, climbs, and dives. And the Elite-inspired docking mini-game you have to go through every time you return to your base is just infuriating. 
But perhaps most distressing, especially to the Amiga owners who finally got their hands on the game when it was ported to their platform almost a year later, were the workmanlike graphics, created in-house by Sculptured Software. One could normally count on great graphics even from Cinemaware games whose gameplay was a bit questionable, but not so much this time. Even Natalya, well-endowed as she was, couldn’t compete with those fetching Saxon lasses from Defender of the Crown.

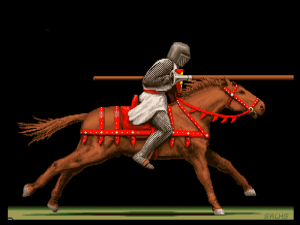

King of Chicago is a far more innovative game. This interactive gangster flick stars you as Pinky Callahan, an ambitious young hoodlum in 1931 Chicago. Al Capone has just been sent away for tax evasion, creating an opening for you and your North Side gang of Irishmen, principal rivals of Capone’s Chicago Outfit. But to unite the Chicago underworld under your personal leadership you’ll first have to oust the Old Man who currently runs your own gang. Only then can you start on the Chicago Outfit — or, as the game calls them, the “South Siders.” Swap out medieval England for Prohibition-era Chicago and the scenario isn’t all that far removed from Defender of the Crown: conquer all of the territory on the map that’s held by your ethnic rivals. The experience of playing the two games, however, could hardly be more different.

Like Defender of the Crown, King of Chicago isn’t so interested in the actual history it references as it is in movie history. It doesn’t even bother to get the dates right; the game begins months before the real Capone was sentenced and sent away. Victory in King of Chicago must mean the North Siders rising again to take over the whole city, a scenario as ahistorical as the Saxons defeating the Normans to regain control of England. (Cinemaware did seem to have a thing for historical lost causes, didn’t they?) Prohibition-era Chicago is just a stage set for King of Chicago, Al Capone just a name to drop. The only place where the game notably departs from gangster-movie clichés is in making you and your gang a bunch of Irishmen rather than Italians — and if you don’t pay attention to one or two last names it’s easy to miss even that, given that there’s no voice acting and thus no accents to spot. Otherwise all of the expected tropes are there, from Pinky’s weeping mother who gives all the money he sends her to the church to his devious, high-maintenance girlfriend Lola. But then, as Bob Jacob so memorably put it, all Cinemaware really had to do was “rise to the level of copycat, and we’d be considered a breakthrough.” Fair enough. As homages go — and you’ll find very few computer-game fictions of the 1980s that aren’t an homage to more established media of one sort or another — King of Chicago is one of the better of its era.

Indeed, some may find it a bit too true to its inspirations. King of Chicago is notable for just how hardcore a take on the gangster genre it is. Pinky is a punk. You can play him as a devious sneak or a violent, impulsive psychopath, but he remains a punk. There’s no redemption to be found amongst King of Chicago’s many possible story arcs, just crime and bloody murder and revenge and, if all goes well, control of the whole of Chicago. While the ledger quietly omits the brothels that provided so much of the real Chicago mob’s income, that’s about the only place where the game soft-pedals. Even Pinky’s interactions with Lola are peppered with crude remarks about how her skills in bed make up for her other failings. Bob Jacob’s original conception of Cinemaware as games for adults finds its fullest expression here, at least if what constitutes “adult” in your view is jaded sex and casual violence.

More interestingly, King of Chicago represents one of Cinemaware’s most earnest and ambitious attempts at creating an interactive narrative with at least a modicum of depth. You could convert a play-through into a screenplay and have it read as, if not precisely a good screenplay, at least one that wasn’t totally ridiculous. Not coincidentally, King of Chicago contains far more text than the average Cinemaware game. Its formal approach is also unique: it’s essentially a hypertext narrative, years before that term came into common usage. You control Pinky through a bewildering thicket of story branches by clicking on multiple-choice thought bubbles above his head. Occasionally a little action game emerges to provide a change of pace, but these are relatively deemphasized in comparison with most Cinemaware games. If S.D.I. stands at the purely reactive, action-oriented end of the Cinemaware scale, King of Chicago stands at the opposite pole of cerebral storymaking. It has a certain — and I know Bob Jacob would hate this description — literary quality about it in comparison to its stablemates. You can see its unusual narrative sophistication not least in its female cast. While not exactly what you’d call progressive in its handling of women, King of Chicago does give them actual personalities and roles to enact in the drama, rather than regarding them strictly as prizes for a job well done. In this respect it once again stands out as almost unique in the Cinemaware catalog.

King of Chicago was the creation of a thirty-something former fifth-grade teacher named Doug Sharp, another of Jacob’s old contacts from his days as a software agent that were serving him so well now as a software entrepreneur in his own right. Sharp had first been exposed to microcomputers during the late 1970s, when he was teaching school in the educational-computing hotbed of Minnesota, home of the Minnesota Educational Computing Consortium and their seminal edutainment game The Oregon Trail amongst other innovations. His habit of taking his school’s Apple IIs home with him on weekends soon led to a job writing educational software for Control Data and Science Research Associates. In 1984 he and a partner, Mike Johnson, started working on a spiritual successor to Silas Warner’s Robot War that they called ChipWits. Programmable robots remained the theme, but they were now programmed using a visual, icon-based language instead of Robot War’s cryptic assembly-language-style code. ChipWits represented a kinder, gentler approach to recreational robot programming all the way around. Instead of focusing on free-form robot-against-robot combat, the game was built as a series of missions, a collection of discrete challenges that the player’s cute little robot had to overcome in the course of a grand and non-violent adventure. Written initially for the Commodore 64, ChipWits became one of the breakout stars of the January 1985 Winter Consumer Electronics Show, and did moderately well once released by Epyx shortly thereafter. The agent who brokered that publishing deal was, you guessed it, Bob Jacob, while Kellyn Beeck, soon to become Cinemaware’s most prolific game designer but then in charge of software acquisitions at Epyx, was the latter company’s signatory to the contract.

Sharp’s next game King of Chicago became the first of the eventual Cinemaware titles to go into development, several months before Jacob would even officially form his company. Sharp threw himself into the project with a will. He “collected all the classic gangster films. I picked apart what I enjoyed most about them and used this information to come up with my characters and storyline.” He worked with a graduate student in the University of Toronto’s drama department named Paul Walsh to learn the subtle nuances of pacing and dialog that make a good play or movie. Walsh became quite taken with the project for a while there in his own right. He had a blast coming up with new episodes for Sharp to sort through, chop up, and, truth be told, often discard. “When you work on a play,” Walsh said, “you have to cut out so much good stuff. With this, all your good ideas get thrown in.” True as ever to Cinemaware’s theme, Jacob would wind up giving Walsh a credit as “Dialog Coach” in the finished product. (Walsh would go on to a long and still-ongoing career as a professor, playwright, dramaturg, and translator of Ibsen.)

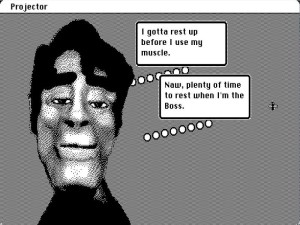

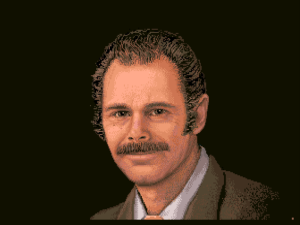

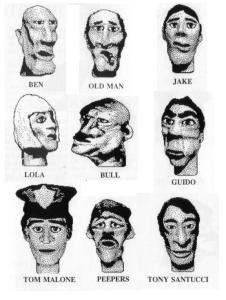

Apart from Walsh and some music contributed by Eric Rosser, that original Macintosh King of Chicago was the work of Doug Sharp alone. When the coding and writing got to be too much, he would retreat into his workshop to mold the heads of his various characters out of clay. Once crudely digitized and imported into the game, their grotesque shapes — some of the gangsters seem to have been afflicted with whatever strange illness led to Elephant Man Joseph Merrick — certainly gave the game a unique look, if one perhaps more appropriate to a horror movie than a gangster flick.

But no matter. What’s most interesting about King of Chicago is what’s going on beneath its surface. What might first appear to be a simple branching narrative in the tradition of Choose Your Own Adventure turns out to be something much more sophisticated. It is in fact a hugely innovative leap into uncharted waters in the fraught field of ludic narrative. I want to take some time here to talk about what King of Chicago does and how it does it because these qualities make it, so much less splashy than Defender of the Crown though its surface appearance and commercial debut may have been, of equal importance in its own way. More hypertext narrative than traditional adventure game, King of Chicago does its level best to make a story with you rather than merely tell you a story. This distinction is a very important one.

The story in a storytelling game lies waiting to be discovered — but not written — by you as you make your way through the game. Storytelling games can offer strong, interesting stories, but do so at the expense of player freedom. You generally have local agency only, meaning that you may have some options about the order in which you explore the storyworld and even how you cause events to progress, but you’re nevertheless tightly bound to the overall plot created by the game’s designer. The canonical example of a storytelling game, a perpetual touchstone of scholars from Janet Murray to Chris Crawford, is Infocom’s Planetfall, particularly the death therein of your poor little robot companion Floyd. Every player who completes Planetfall will have experienced the same basic story. She may have seen that story in a slightly different order than another player and even solved its problems in slightly different ways, but Floyd will always sacrifice himself at the climactic moment, and all of the other major plot events will always play out in the same way. Storytelling games are Calvinist in philosophy: free will is just an illusion, your destiny foreordained before you even get started. Still, fixed as their overall plots may be, they allow plenty of space for puzzle solving, independent investigation of the environment, and all those other things we tend to wrap up under the convenient term of “gameplay.” I’m of the opinion that experiencing a story through the eyes of a person who represents you the player, whom you control, can do wonders to immerse you in that story and deepen the impact it has on you. Some folks, however, take the Infocom style of interactive fiction’s explicit promise of an interactivity that turns out to exist only at the most granular level as a betrayal of the medium’s potential. This has led them to chase after an alternative in the form of the storymaking game.

The idealized storymaking game is one that turns you loose in a robustly simulated storyworld and allows you to create your own story in conjunction with the inhabitants of that world.[1] Unfortunately, it remains an unsolved and possibly unsolvable problem, for we lack a computerized intelligence capable of responding to the player when the scope of action allowed to her includes literally anything she can dream of doing. Since an infinite number of possibilities cannot be anticipated and coded for by a human, the computer would need to be able to improvise on the fly, and that’s not something computers are notably good at doing. If we somehow could find a way around this problem, we’d just ram up against another: stories of any depth almost universally require words to tell, and computers are terrible at generating natural language. In a presentation on King of Chicago for the 1989 Game Developers Conference, Sharp guessed that artificial intelligence would reach a point around 2030 where what he calls “fat and deep,” AI-driven storymaking games would become possible. As of today, though, it doesn’t look like we’ll get there within the next fifteen years. We may never get there at all. Strong AI remains, as it always has, a chimera lurking a few decades out there in some murky future.

That said, there’s a large middle ground between the fixed, unalterable story arc of a Planetfall and the complete freedom of our idealized storymaking game. Somewhere inside that middle ground rests the field of choice-based or hypertext literature, which generally gives the player a great deal of control over where the story goes in comparison to a traditional adventure game of the Infocom stripe, if nothing close to the freedom promised by a true storymaking game. The hypertext author figures out all of the different ways that she is willing to allow the story to go beforehand and then hand-crafts lots and lots of text to correspond with all of her various narrative tributaries. The player still isn’t really making her own story, since she can’t possibly do anything that hasn’t been anticipated by the story’s author. Yet if the choices are varied and interesting enough it almost doesn’t matter.

The adventure game and the hypertext are two very distinct forms; fans of one are by no means guaranteed to be fans of the other. Each is in some sense an exploration of story, but in very different ways. If the adventure game is concerned with the immersive experience of story, the hypertext is concerned with possibilities, with that question we all ask ourselves all the time, even when we know we should know better: what would have happened if I had done something else? The natures of the two forms dictate the ways that we approach them. Most adventure games are long-form works which players are expected to experience just once. Most hypertexts by contrast are written under the assumption that the player will want to engage with them multiple times, making different choices and exploring the different possible outcomes. This makes up for the fact that the average playthrough of the average hypertext, with its bird’s-eye view of the story, takes a small fraction of the time of the average playthrough of the average adventure game, with its worm’s-eye view. It also, not incidentally for Doug Sharp’s purposes, dovetails nicely with the Cinemaware concept of games that play out in no more time than it takes to watch a film, but that, unlike (most) films, can be revisited many times.

Narrative-oriented computer games in the early days hewed almost uniformly to the adventure-game model. Partly this was a matter of tradition; parsers and puzzles had become so established in the wake of Adventure and Scott Adams that it was seemingly hard for many authors to even conceive of alternative models of interaction (witness Nine Princes in Amber, a game that founders on the rocks between text adventure and hypertext). And partly this was a matter of technical constraints; those early machines were so starved for memory that the idea of a complex branching narrative, most of which the player would never see in any given playthrough, was a luxury authors could barely even conceive of affording. Thus during the early 1980s hypertexts were commonly found not on computers but in the hugely popular Choose Your Own Adventure line of children’s books and the many spin-offs and competitors it spawned.

The firewall began to come down at last in 1986, after designers began to realize that it was okay to dump parsers and puzzles if their design goals leaned in another direction, and after microcomputers had progressed enough from the days of 16 K and cassette tapes to crack open the door to more narrative experimentation. We’ve already looked closely at a couple of the works that resulted. Portal and Alter Ego each had the courage to abandon the parser, but neither takes full advantage of the new possibilities that come with placing a computer program — a real simulated storyworld — behind the multiple choices of Choose Your Own Adventure. Portal is an exploration of a fixed, immutable story that has already happened rather than an exercise in making a new one. Alter Ego is more ambitious in its way, being an interactive story of a life that keeps track of your alter ego’s level of psychological, interpersonal, and economic achievement. Still, it doesn’t adapt the story it tells all that well to either your evolving personality or your evolving life situation, forcing you to power through largely the same set of vignettes every single time you play. King of Chicago, on the other hand, pushes the envelope of narratological possibility harder than any game that had yet appeared on a PC at the time of its release.

Here’s how Sharp describes his conception of his interactive movie:

A guy in a projection booth with hours and hours of film about a group of gangsters. The film is not on reels but in short clips of from a few seconds to a few minutes long. The clips hang all over the walls of the projection room. The projectionist knows exactly what’s on each clip and can grab a new one and thread it into the projector instantly. The audience is out there in the theater shouting out suggestions and the projectionist is listening and taking the suggestions into account but also factoring in what clips he’s already shown, because he wants to put together a real story with a beginning, middle, and end, subplots, introduction and development of characters and the whole narrative works. I wanted to minimize hard branches, to keep the cuts between clips as unpredictable as possible. Yet the story had to make sense, guys couldn’t die and reappear later, you couldn’t treat the gangster’s moll like dirt and expect her to cover your back later.

The second-to-last sentence is key. Hypertexts prior to King of Chicago had almost all been built out of predictable hard branches: “If you decide to do A, turn to page X; if you decide to do B, turn to page Y.” Such an approach all too often devolves after a play or two into a process of methodically lawn-mowering through the branches, looking for the path not yet taken until branches or patience is exhausted. Sharp, however, wanted a story that could feel fresh and surprising over many plays. In short, he wanted to deliver an exciting new gangster movie to his player each time. To do so, he would have to avoid the predictability of hard branches. He dubbed the system he came up with to do so Dramaton.

Like real life, Dramaton deals in probabilities and happenstance as much as cause and effect. The game as a whole can be thought of as a big bag of potential scenes, each described and “shot” much like a single scene from a movie, with the important difference that each offers Pinky one or more choices to make as it plays out. These choices can lead to a limited amount of the dreaded hard branching within each scene. Where Dramaton mixes things up, though, is in the way it chooses the next scene. Rather than inflexibly dictating what comes next via a hard branch, each episode alters a variety of variables reflecting the state of the storyworld and Pinky’s place within it. Some of these are true/false flags. (Has Pinky bumped off the Old Man to assume control of the gang? Has the eminently bribeable Alderman Burke been elected mayor?) Others are numeric measurements. (How happy is Pinky’s girl Lola with her beau? How does the rest of the gang feel about him? How well are the North Siders doing in Chicago at large? How agitated are the police by the gangsters’ activities?)
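To make the idea of flags and numeric measurements concrete, here is a minimal Python sketch of how such a storyworld state might be held and updated after an episode. Everything in it is an assumption made for illustration: the class name, the variable names, the 0 to 100 scale, and the size of each adjustment are all invented, and none of it is drawn from Sharp's actual code.

```python
from dataclasses import dataclass, field


@dataclass
class StoryWorld:
    """Hypothetical storyworld state: true/false flags plus numeric gauges."""
    flags: dict = field(default_factory=lambda: {
        "old_man_ousted": False,   # has Pinky taken over the gang?
        "burke_is_mayor": False,   # has Alderman Burke been elected?
    })
    gauges: dict = field(default_factory=lambda: {
        "lola_happiness": 50,
        "gang_confidence": 50,
        "north_side_strength": 50,
        "police_heat": 50,
    })

    def apply(self, set_flags=None, adjust=None):
        """Apply an episode's outcome: set flags, nudge gauges, clamp to 0..100."""
        for name, value in (set_flags or {}).items():
            self.flags[name] = value
        for name, delta in (adjust or {}).items():
            self.gauges[name] = max(0, min(100, self.gauges[name] + delta))


world = StoryWorld()
# Outcome of an imagined episode in which Pinky bumps off the Old Man.
world.apply(set_flags={"old_man_ousted": True},
            adjust={"gang_confidence": -10, "police_heat": 70})
```

The point of the sketch is only that an episode ends by changing the state of the world rather than by naming its successor.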

After an episode is complete, a narrative generator — what Sharp calls the Narraton — looks at all of these factors, then adds a healthy dose of good old randomness to choose an appropriate next episode that fits with what has come before. The player’s specific choices in an episode can also have a direct impact on what happens next, but with rare exceptions such choices are used more to whittle down the field of possibilities than to force a single, pre-determined follow-up episode. For example, if the player has just decided it might be a good idea to go see what’s up with Lola, the following episode will be restricted to those involving her.
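The whittling-down step might be sketched as follows in Python. This is a guess at the shape of the logic, not a reconstruction of the Narraton: the episode ids, the tags, and the function names are all hypothetical, and the random pick here is uniform, leaving aside any weighting by the state of the storyworld.

```python
import random

# Hypothetical episode pool; the ids and tags are invented for illustration.
EPISODES = [
    {"id": "lola_demands_fur", "tags": {"lola"}},
    {"id": "lola_goads_pinky", "tags": {"lola"}},
    {"id": "gang_grumbles", "tags": {"gang"}},
    {"id": "cops_raid_speakeasy", "tags": {"police"}},
]


def candidates(episodes, already_shown, focus=None):
    """Drop episodes already shown; if the player's choice named a focus,
    keep only the episodes tagged with it."""
    pool = [e for e in episodes if e["id"] not in already_shown]
    if focus is not None:
        pool = [e for e in pool if focus in e["tags"]]
    return pool


def next_episode(episodes, already_shown, focus=None, rng=random):
    """Pick at random from whatever survives the filtering, or None."""
    pool = candidates(episodes, already_shown, focus)
    return rng.choice(pool) if pool else None


# The player has just decided to go and see what's up with Lola.
shown = {"lola_demands_fur"}
chosen = next_episode(EPISODES, shown, focus="lola")
```

The player's choice narrows the field without dictating a single follow-up, which is the difference from a hard branch.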

To facilitate choosing an appropriate episode, each is assigned “keys,” amounting to the state of affairs in the storyworld that would ideally hold sway for it to fit perfectly into the overall context of the current story. For instance, an episode in which Lola goads Pinky, Lady Macbeth-style, for his failings and lack of ambition might require a low “Lola Happiness” number and a low “Pinky Reputation” score. An episode in which Pinky hears some other gangsters grumbling about the Old Man and must decide how to respond might require a relatively low “Old Man Reputation” number but a high “Gang Confidence” score (thus leading them to feel empowered to speak up). The more closely the current reality of the storyworld corresponds with a given episode’s indexes, the more likely that episode is to be chosen.
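One way to picture key matching is as a weighted lottery, sketched below in Python. The scoring formula, the 0 to 100 scale, the exponent used to sharpen the weights, and every name here are assumptions made for illustration; the sources describe only the principle that a closer match means a likelier pick.

```python
import random

# Hypothetical episodes mapped to their ideal storyworld values ("keys").
EPISODES = {
    "lola_goads_pinky": {"lola_happiness": 20, "pinky_reputation": 20},
    "gang_grumbles": {"old_man_reputation": 25, "gang_confidence": 80},
}


def fit(keys, state):
    """Return a score from 0 to 1; 1.0 means the state matches every key exactly."""
    gaps = [abs(state[name] - ideal) / 100 for name, ideal in keys.items()]
    return 1.0 - sum(gaps) / len(gaps)


def pick_episode(episodes, state, rng=random):
    """Weighted random pick: a better fit is more likely, never guaranteed."""
    names = list(episodes)
    # Raising to the 4th power (an arbitrary choice) favors close fits;
    # the small floor keeps every weight positive.
    weights = [max(fit(episodes[n], state), 0.01) ** 4 for n in names]
    return rng.choices(names, weights=weights, k=1)[0]


# Lola is unhappy and Pinky's reputation is poor; the Old Man is secure.
state = {"lola_happiness": 15, "pinky_reputation": 30,
         "old_man_reputation": 70, "gang_confidence": 40}
episode = pick_episode(EPISODES, state)
```

With this state the Lola episode fits far better than the grumbling gangsters, so it will usually be chosen, but the other remains possible. That residual chance is what keeps the cuts between episodes unpredictable.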

This method of weaving scenes together had some interesting implications for Sharp himself as he wrote the game, turning the process into something more akin to guiding a child’s growth than constructing a dead piece of technology. He could “improvise” as an author: “If I got a great idea for a new episode, I could set it up in its own sequence, assign it keys, and trust that it would be selected appropriately.” Thus he was actually approaching the storymaking ideal despite being forced to work with fixed chunks of story rather than being able to cause the computer to improvise its own story; he was creating a narrative capable of surprising even him, the author. He notes that there are quite likely episodes in King of Chicago that have never been seen by any player ever because the indexes assigned to them can never be matched closely enough to trigger them — dead ends left behind as the storyworld organically grew and evolved under his careful stewardship.

For the ordinary player of the finished product, there must obviously come a point where episodes begin to repeat themselves and King of Chicago loses its interest. Sharp did his best, however, to delay that point as long as possible. He estimates that all of the episodes in the game played one after another would take about eight hours to get through, while the player is likely to see no more than 20 percent of them in any given playthrough. For a while anyway each of the gangster movies you and King of Chicago generate together really does feel unique. Even the opening scene that kicks off the movie varies with the vicissitudes of the random-number generator. The storyworld of King of Chicago, where your actions have an effect on your own fate and that of those around you but aren’t the whole of the story, can feel shockingly real in contrast to both the canned fictions of adventure games and the hard branches of those less ambitious hypertext narratives that still dominate the genre even today.

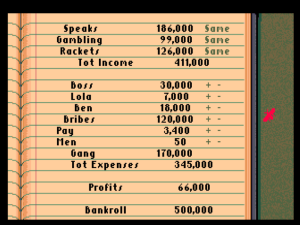

Unfortunately less effective is the simple economic strategy game that’s grafted onto the interpersonal stories. Here you control how much effort you put into your various criminal endeavors — speakeasies, gambling, and rackets — as well as how much you pay your right-hand man and bean counter Ben, the various officials you bribe, the foot soldiers in the gang, Lola, and of course yourself. In the original Macintosh version of the game this process is almost unbelievably tedious. You’re forced to learn about and control your empire via a question-and-answer session with Ben that takes absolutely forever and that has to be repeated over and over as the months pass. You can easily end up spending more total time having these inane dialogs with Ben than you do with the entire rest of the game.

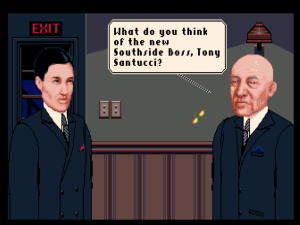

Thankfully, the Macintosh version is not the final or definitive one. Over a year after the original release the game finally appeared on the Amiga in a version that isn’t so much a port as a complete remake. While Sharp still acted as programmer and narratologist, Cinemaware’s in-house team completely redid the graphics, ditching Sharp’s Potato Heads in favor of hand-drawn portraits of tough mugs and pouting dames that could be dropped easily into any vintage James Cagney flick. Sharp, meanwhile, took the opportunity to tighten up the narrative, removing some wordy exposition and pointless scenes, rewriting others. The occasional action games were also vastly improved to reflect the Amiga’s capabilities. Best of all, the endless question-and-answer sessions with Ben were replaced with a simple interactive ledger giving an easily adjustable overview of the state of your criminal empire. The strategy angle is still a bit undercooked — the numbers never quite add up from month to month, and cause and effect is far from consistently clear — but it goes from being a tedious time sink to an occasional distraction. The Amiga version plays out in about half the time of the original, with a corresponding additional dramatic thrust.

Of S.D.I. and King of Chicago, the latter would turn out to be the more successful in the long run, managing to sell more than 50,000 copies — albeit most of them in its vastly improved version for the Amiga and (eventually) the Atari ST, Apple IIGS, and IBM PC rather than its original Macintosh incarnation. Despite its relative commercial success, it’s always been amongst the most polarizing of the Cinemaware games, dismissed by some — unfairly in my opinion, for all the reasons I’ve just so copiously documented — as little more than a computerized Choose Your Own Adventure book. Future Cinemaware games would take their cue from Defender of the Crown rather than its companions on the label’s debut marquee. I wish I could say I expect to be revisiting the ideas behind Doug Sharp’s Dramaton soon, whether via a game from Cinemaware or anyone else, but such bold experiments in interactive narrative have been much less common than one might wish in the history of computer gaming. This just makes it all the more important to credit them when we find them.

(The sources listed in the previous article apply to this one as well. In addition: Commodore Power Play of August/September 1985; Doug Sharp’s blog; and two presentations given by Sharp, one from the 1989 Game Developers Conference and the other from the 1995 American Association for Artificial Intelligence Symposium on Interactive Story Systems.)

Footnotes

[1] I should note at this point that the terms “storytelling game” and “storymaking game” are hardly set in stone. Some prefer to talk of “canned narratives” and “emergent narratives.” Some, such as Brian Moriarty, have even flipped the terms around, considering the stories in storymaking games to be stories made beforehand by a human designer, and the stories in storytelling games to be stories made up and told on the fly by the computer. Doug Sharp himself seems to favor Moriarty’s usage, but I find my approach more intuitive. Regardless, it’s best not to get too hung-up on ever-shifting terminology in this area, and just try to understand the concepts.