The biggest problem with the Macintosh hardware was pretty obvious, which was its limited expandability. But the problem wasn’t really technical as much as philosophical, which was that we wanted to eliminate the inevitable complexity that was a consequence of hardware expandability, both for the user and the developer, by having every Macintosh be identical. It was a valid point of view, even somewhat courageous, but not very practical, because things were still changing too fast in the computer industry for it to work, driven by the relentless tides of Moore’s Law.

— original Macintosh team-member Andy Hertzfeld

Jef Raskin and Steve Jobs didn’t agree on much, but they did agree on their loathing for expansion slots. The absence of slots was one of the bedrock attributes of Raskin’s original vision for the Macintosh, the most immediately obvious difference between it and Apple’s then-current flagship product, the Apple II. In contrast to Steve Wozniak’s beloved hacker plaything, Raskin’s computer for the people would be as effortless to set up and use as a stereo, a television, or a toaster.

When Jobs took over the Macintosh project — some, including Raskin himself, would say stole it — he changed just about every detail except this one. Yet some members of the tiny team he put together, fiercely loyal to their leader and his vision of a “computer for the rest of us” though they were, were beginning to question the wisdom of this aspect of the machine by the time the Macintosh came together in its final form. It was a little hard in January of 1984 not to question the wisdom of shipping an essentially unexpandable appliance with just 128 K of memory and a single floppy-disk drive for a price of $2495. At some level, it seemed, this just wasn’t how the computer market worked.

Jobs would reply that the whole point of the Macintosh was to change how computers worked, and with them the workings of the computer market. He wasn’t entirely without concrete arguments to back up his position. One had only to glance over at the IBM clone market — always Jobs’s first choice as the antonym to the Mac — to see how chaotic a totally open platform could be. Clone users were getting all too familiar with the IRQ and memory-address conflicts that could result from plugging two cards that were determined not to play nice together into the same machine, and software developers were getting used to chasing down obscure bugs that only popped up when their programs ran on certain combinations of hardware.

Viewed in the big picture, we could actually say that Jobs was prescient in his determination to stamp out that chaos, to make every Macintosh the same as every other, to make the platform in general a thoroughly known quantity for software developers. The norm in personal computing as most people know it — whether we’re talking phones, tablets, laptops, or increasingly even desktop computers — has long since become sealed boxes of one stripe or another. But there are some important factors that make said sealed boxes a better idea now than they were back then. For one thing, the pace of hardware and software development alike has slowed enough that a new computer can be viable just as it was purchased for ten years or more. For another, prices have come down enough that throwing a device away and starting over with a new one isn’t so cost-prohibitive as it once was. With personal computers still exotic, expensive machines in a constant state of flux at the time of the Mac’s introduction, the computer as a sealed appliance was a vastly more problematic proposition.

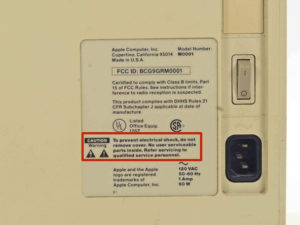

Determined to do everything possible to keep users out of the Mac’s innards, Apple used Torx screws for which screwdrivers weren’t commonly available to seal it, and even threatened users with electrocution should they persist in trying to open it. The contrast with the Apple II, whose top could be popped in seconds using nothing more than a pair of hands to reveal seven tempting expansion slots, could hardly have been more striking.

It was the early adopters, the people who spotted the potential in that first slow, under-powered Macintosh and believed Jobs’s promise that the machine’s success or failure would be determined by the number who bought it in its first hundred days on the market, who bore the brunt of Apple’s decision to seal it as tightly as Fort Knox. When Apple in September of 1984 released the so-called “Fat Mac” with 512 K of memory, the quantity that in the opinion of just about everyone (including most of those at Apple not named Steve Jobs) the machine should have shipped with in the first place, owners of the original model were offered the opportunity to bring their machines to their dealers and have them retro-fitted to the new specifications for $995. This “deal” sparked considerable outrage and even a letter-writing campaign that tried to shame Apple into bettering the terms of the upgrade. Disgruntled existing owners pointed out that their total costs for a 512 K Macintosh amounted to $3490 (the original $2495 purchase plus the $995 upgrade), while a Fat Mac could be bought outright by a prospective new member of the Macintosh fold for $2795. “Apple should have bent over backward for the people who supported it in the beginning,” said one of the protest’s ringleaders. “I’m never going to feel the same about Apple again.” Apple, for better or for worse never a company that was terribly susceptible to such public shaming, sent their disgruntled customers a couple of free software packages and told them to suck it up.
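
The owners' complaint comes down to simple arithmetic, which can be sketched as follows. The three prices are the ones quoted above; the function and constant names are hypothetical, chosen only for illustration.

```python
# Prices quoted in the text (US dollars, 1984).
ORIGINAL_MAC_PRICE = 2495  # 128 K Macintosh at launch
UPGRADE_PRICE = 995        # dealer retrofit to 512 K
FAT_MAC_PRICE = 2795       # new 512 K "Fat Mac" bought outright


def early_adopter_total(original: int, upgrade: int) -> int:
    """Total spent by someone who bought the original model and then upgraded it."""
    return original + upgrade


def early_adopter_premium(original: int, upgrade: int, new_price: int) -> int:
    """How much more the upgraded machine cost than an equivalent new one."""
    return early_adopter_total(original, upgrade) - new_price


total = early_adopter_total(ORIGINAL_MAC_PRICE, UPGRADE_PRICE)
premium = early_adopter_premium(ORIGINAL_MAC_PRICE, UPGRADE_PRICE, FAT_MAC_PRICE)
print(f"Early adopter total: ${total}")  # $3490
print(f"Premium over a new Fat Mac: ${premium}")  # $695
```

On these figures, loyalty to the platform cost an early adopter $695 more than waiting eight months would have.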

Barely fifteen months later, when Apple released the Macintosh Plus with 1 MB of memory among other advancements, the merry-go-round spun again. This time the upgrade would cost owners of the earlier models over $1000, along with lots of downtime while their machines sat in queues at their dealers. With software developers rushing to take advantage of the increased memory of each successive model, dedicated users could hardly stand to regard each successive upgrade as optional. As things stood, then, they were effectively paying a service charge of about $1000 per year just to remain a part of the Macintosh community. Owning a Mac was like owning a car that had to go into the shop for a week for a complete engine overhaul once every year. Apple, then as now, was famous for the loyalty of their users, but this was stretching even that legendary goodwill to the breaking point.

For some time voices within Apple had been mumbling that this approach simply couldn’t continue if the Macintosh was to become a serious, long-lived computing platform; Apple simply had to open the Mac up, even if that entailed making it a little more like all those hated beige IBM clones. During the first months after the launch, Steve Jobs was able to stamp out these deviations from his dogma, but as sales stalled and his relationship with John Sculley, the CEO he’d hand-picked to run the company he’d co-founded, deteriorated, the grumblers grew steadily more persistent and empowered.

The architect of one of the more startling about-faces in Apple’s corporate history would be Jean-Louis Gassée, a high-strung marketing executive newly arrived in Silicon Valley from Apple’s French subsidiary. Gassée privately — very privately in the first months after his arrival, when Jobs’s word still was law — agreed with many on Apple’s staff that the only way to achieve the dream of making the Macintosh into a standard to rival or beat the Intel/IBM/Microsoft trifecta was to open the platform. Thus he quietly encouraged a number of engineers to submit proposals on what direction they would take the platform in if given free rein. He came to favor the ideas of Mike Dhuey and Brian Berkeley, two young engineers who envisioned a machine with slots as plentiful and easily accessible as those of the Apple II or an IBM clone. Their “Little Big Mac” would be based around the 32-bit Motorola 68020 chip rather than the 16-bit 68000 of the current models, and would also sport color — another Jobsian heresy.

In May of 1985, Jobs made the mistake of trying to recruit Gassée into a rather clumsy conspiracy he was formulating to oust Sculley, with whom he was now in almost constant conflict. Rather than jump aboard the coup train, Gassée promptly blew the whistle to Sculley, precipitating an open showdown between Jobs and Sculley in which, much to Jobs’s surprise, the entirety of Apple’s board backed Sculley. Stripped of his power and exiled to a small office in a remote corner of Apple’s Cupertino campus, Jobs would soon depart amid recriminations and lawsuits to found a new venture called NeXT.

Gassée’s betrayal of Jobs’s confidence may have had a semi-altruistic motivation. Convinced that the Mac needed to open up to survive, perhaps he concluded that that would only happen if Jobs was out of the picture. Then again, perhaps it came down to a motivation as base as personal jealousy. With a penchant for leather and a love of inscrutable phraseology — “the Apple II smelled like infinity” is a typical phrase from his manifesto The Third Apple, “an invitation to voyage into a region of the mind where technology and poetry exist side by side, feeding each other” — Gassée seemed to self-consciously adopt the persona of a Gallic version of Jobs himself. But regardless, with Jobs now out of the picture Gassée was able to consolidate his own power base, taking over Jobs’s old role as leader of the Macintosh division. He went out and bought a personalized license plate for his sports car: “OPEN MAC.”

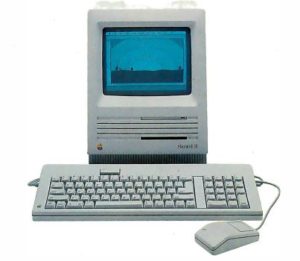

Coming some four months after Jobs’s final departure, the Mac Plus already included such signs of the changing times as a keyboard with arrow keys and a numeric keypad, anathema to Jobs’s old mouse-only orthodoxy. But much, much bigger changes were also well underway. Apple’s 1985 annual report, released in the spring of 1986, dropped a bombshell: a Mac with slots was on the way. Dhuey and Berkeley’s open Macintosh was now proceeding… well, openly.

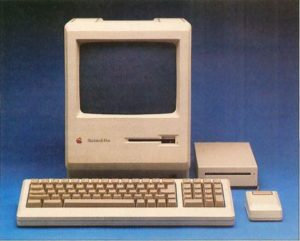

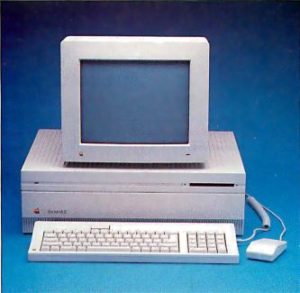

When it debuted five months behind schedule in March of 1987, the Macintosh II was greeted as a stunning but welcome repudiation of much of what the Mac had supposedly stood for. In place of the compact all-in-one-case designs of the past, the new Mac was a big, chunky box full of empty space and empty slots — six of them altogether — with the monitor an item to be purchased separately and perched on top. Indeed, one could easily mistake the Mac II at a glance for a high-end IBM clone; its big, un-stylish case even included a cooling fan, an item that placed even higher than expansion slots and arrow keys on Steve Jobs’s old list of forbidden attributes.

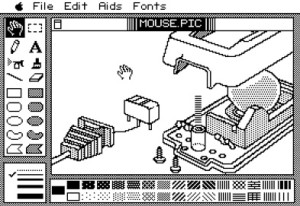

Apple’s commitment to their new vision of a modular, open Macintosh was so complete that the Mac II didn’t include any on-board video at all; the buyer of the $6500 machine would still have to buy the video card of her choice separately. Apple’s own high-end video card offered display capabilities unprecedented in a personal computer: a palette of over 16 million colors, 256 of them displayable onscreen at any one time at resolutions as high as 640 × 480. And, in keeping with the philosophy behind the Mac II as a whole, the machine was ready and willing to accept a still more impressive graphics card just as soon as someone managed to make one. The Mac II actually represented colors internally using 48 bits, allowing some 281 trillion different shades. These idealized colors were then translated automatically into the closest approximations the actual display hardware could manage. This fidelity to the subtlest vagaries of color would make the Mac II the favorite of people working in many artistic and image-processing fields, especially when those aforementioned even better video cards began to hit the market in earnest. Even today no other platform can match the Mac in its persnickety attention to the details of accurate color reproduction.
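
The color figures above follow directly from the bit counts, and the idea of translating an idealized color into the closest one the hardware can show can be illustrated with a small sketch. Several things here are assumptions made for illustration: that the 48 bits are split into three 16-bit red, green, and blue components; that the 16-million-color palette corresponds to 24 bits and the 256 onscreen colors to 8 bits; and that "closest" means smallest squared distance in RGB space. The Mac II's actual matching method is not described in the text and may well have differed. All names are hypothetical.

```python
from typing import Sequence, Tuple

# Assumption: a 48-bit color is three 16-bit components (red, green, blue),
# each in the range 0..65535.
RGB48 = Tuple[int, int, int]


def color_count(bits: int) -> int:
    """Number of distinct values representable in the given number of bits."""
    return 2 ** bits


def nearest_palette_index(color: RGB48, palette: Sequence[RGB48]) -> int:
    """Index of the palette entry closest to `color`.

    "Closest" here is squared Euclidean distance in RGB space, an
    illustrative choice rather than a documented Mac II algorithm.
    """
    def distance(entry: RGB48) -> int:
        return sum((a - b) ** 2 for a, b in zip(color, entry))

    return min(range(len(palette)), key=lambda i: distance(palette[i]))


print(color_count(48))  # 281474976710656, "some 281 trillion"
print(color_count(24))  # 16777216, "over 16 million"
print(color_count(8))   # 256 colors onscreen at once

# A toy five-entry palette standing in for the 256 displayable colors.
palette = [
    (0, 0, 0),              # black
    (65535, 0, 0),          # red
    (0, 65535, 0),          # green
    (0, 0, 65535),          # blue
    (65535, 65535, 65535),  # white
]
print(nearest_palette_index((60000, 5000, 3000), palette))  # 1 (red)
```

Keeping the idealized color separate from whatever the display can manage is what lets a better video card improve the output later without the stored colors changing.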

Some of the Mac II’s capabilities truly were ahead of their time. Here we see a desktop extended across two monitors, each powered by its own video card.

The irony wasn’t lost on journalists or users when, just weeks after the Mac II’s debut, IBM unveiled their new PS/2 line, marked by sleeker, slimmer cases and many features that would once have been placed on add-on cards now integrated into the motherboards. While Apple was suddenly encouraging the sort of no-strings-attached hardware hacking on the Macintosh that had made their earlier Apple II so successful, IBM was trying to stamp that sort of thing out on their own heretofore open platform via their new Micro Channel Architecture, which demanded that anyone other than IBM who wanted to expand a PS/2 machine negotiate a license and pay for the privilege. “The original Mac’s lack of slots stunted its growth and forced Apple to expand the machine by offering new models,” wrote Byte. “With the Mac II, Apple — and, more importantly, third-party developers — can expand the machine radically without forcing you to buy a new computer. This is the design on which Apple plans to build its Macintosh empire.” It seemed like the whole world of personal computing was turning upside down, Apple turning into IBM and IBM turning into Apple.

If so, however, Apple’s empire would be a very exclusive place. By the time you’d bought a monitor, video card, hard drive, keyboard (yes, even the keyboard was a separate item), and other needful accessories, a Mac II system could rise uncomfortably close to the $10,000 mark. Those who weren’t quite flush enough to splash out that much money could still enjoy a taste of the Mac’s new spirit of openness via the simultaneously released Mac SE, which cost $3699 for a hard-drive-equipped model. The SE was a 68000-based machine that looked much like its forefathers, built-in black-and-white monitor included, but did have a single expansion slot inside its case. The single slot was a little underwhelming in comparison to the Mac II, but it was better than nothing, even if Apple did still recommend that customers take their machines to their dealers if they wanted to actually install something in that slot. Apple’s not-terribly-helpful advice for those needing to employ more than one expansion card was to buy an “integrated” card that combined multiple functions. If you couldn’t find a card that happened to combine exactly the functions you needed, you were presumably just out of luck.

During the final years of the 1980s, Apple would continue to release new models of the Mac II and the Mac SE, now established as the two separate Macintosh flavors. These updates enhanced the machines with such welcome goodies as 68030 processors and more memory, but, thanks to the wonders of open architecture, didn’t immediately invalidate the models that had come before. The original Mac II, for instance, could be easily upgraded from the 68020 to the 68030 just by dropping a card into one of its slots.

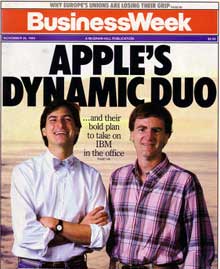

The Steve Jobs-less Apple, now thoroughly under the control of the more sober and pragmatic John Sculley, toned down the old visionary rhetoric in favor of a more businesslike focus. Even the engineers dutifully toed the new corporate line, at least publicly, and didn’t hesitate to denigrate Apple’s erstwhile visionary-in-chief in the process. “Steve Jobs thought that he was right and didn’t care what the market wanted,” Mike Dhuey said in an interview to accompany the Mac II’s release. “It’s like he thought everyone wanted to buy a size-nine shoe. The Mac II is specifically a market-driven machine, rather than what we wanted for ourselves. My job is to take all the market needs and make the best computer. It’s sort of like musicians — if they make music only to satisfy their own needs, they lose their audience.” Apple, everyone was trying to convey, had grown up and left all that changing-the-world business behind along with Steve Jobs. They were now as sober and serious as IBM, their machines ready to take their places as direct competitors to those of Big Blue and the clonesters.

To a rather surprising degree, the world of business computing accepted Apple and the Mac’s new persona. Through 1986, the machines to which the Macintosh was most frequently compared were the Commodore Amiga and Atari ST. In the wake of the Mac II and Mac SE, however, the Macintosh was elevated to a different plane. Now the omnipresent point of comparison was high-end IBM compatibles; the Amiga and ST, despite their architectural similarities, seldom even saw their existence acknowledged in relation to the Mac. There were some good reasons for this neglect beyond the obvious ones of pricing and parent-company rhetoric. For one, the Macintosh was always a far more polished experience for the end user than either of the other 68000-based machines. For another, Apple had enjoyed a far more positive reputation with corporate America than Commodore or Atari had even well before any of the three platforms in question had existed. Still, the nature of the latest magazine comparisons was a clear sign that Apple’s bid to move the Mac upscale was succeeding.

Whatever one thought of Apple’s new, more buttoned-down image, there was no denying that the market welcomed the open Macintosh with a matching set of open arms. Byte went so far as to call the Mac II “the most important product that Apple has released since the original Apple II,” thus elevating it to a landmark status greater even than that of the first Mac model. While history hasn’t been overly kind to that judgment, the fact remains that third-party software and hardware developers, who had heretofore been stymied by the frustrating limitations of the closed Macintosh architecture, burst out now in myriad glorious ways. “We can’t think of everything,” said an ebullient Jean-Louis Gassée. “The charm of a flexible, open product is that people who know something you don’t know will take care of it. That’s what they’re doing in the marketplace.” The biannual Macworld shows gained a reputation as the most exciting events on the industry’s calendar, the beat to which every journalist lobbied to be assigned. The January 1988 show in San Francisco, the first to reflect the full impact of Apple’s philosophical about-face, had 20,000 attendees on its first day, and could have had a lot more than that had there been a way to pack them into the exhibit hall. Annual Macintosh sales more than tripled between 1986 and 1988, with cumulative sales hitting 2 million machines in the latter year. And already fully 200,000 of the Macs out there by that point were Mac IIs, an extraordinary number really given that machine’s high price. Granted, the Macintosh had hit the 2-million mark fully three years behind the pace Steve Jobs had foreseen shortly after the original machine’s introduction. But nevertheless, it did look like at least some of the more modest of his predictions were starting to come true at last.

An Apple Watch 27 years before its time? Just one example of the extraordinary innovation of the Macintosh market was the WristMac from Ex Machina, a “personal information manager” that could be synchronized with a Mac to take the place of your appointment calendar, to-do list, and Rolodex.

While the Macintosh was never going to seriously challenge the IBM standard on the desks of corporate America when it came to commonplace business tasks like word processing and accounting, it was becoming a fixture in design departments of many stripes, and the staple platform of entire niche industries — most notably, the publishing industry, thanks to the revolutionary combination of Aldus PageMaker (or one of the many other desktop-publishing packages that followed it) and an Apple LaserWriter printer (or one of the many other laser printers that followed it). By 1989, Apple could claim about 10 percent of the business-computing market, making them the third biggest player there after IBM and Compaq — and of course the only significant player there not running a Microsoft operating system. What with Apple’s premium prices and high profit margins, third place really wasn’t so bad, especially in comparison with the moribund state of the Macintosh of just a few years before.

So, the Macintosh was flying pretty high as the curtain began to come down on the 1980s. It’s instructive and more than a little ironic to contrast the conventional wisdom that accompanied that success with the conventional wisdom of today. Despite the strong counterexample of Nintendo’s exploding walled garden over in the videogame-console space, the success the Macintosh had enjoyed since Apple’s decision to open up the platform was taken as incontrovertible proof that openness in terms of software and hardware alike was the only viable model for computing’s future. In today’s world of closed iOS and Android ecosystems and computing via disposable black boxes, such an assertion sounds highly naive.

But even more striking is the shift in the perception of Steve Jobs. In the late 1980s, he was loathed even by many strident Mac fans, whilst being regarded in the business and computer-industry press and, indeed, much of the popular press in general as a dilettante, a spoiled enfant terrible whose ill-informed meddling had very nearly sunk a billion-dollar corporation. John Sculley, by contrast, was lauded as exactly the responsible grown-up Apple had needed to scrub the company of Jobs’s starry-eyed hippie meanderings and lead them into their bright businesslike present. Today popular opinion on the two men has neatly reversed itself: Sculley is seen as the unimaginative corporate wonk who mismanaged Jobs’s brilliant vision, Jobs as the greatest — or at least the coolest — computing visionary of all time. In the end, of course, the truth must lie somewhere in the middle. Sculley’s strengths tended to be Jobs’s weaknesses, and vice versa. Apple would have been far better off had the two been able to find a way to continue to work together. But, in Jobs’s case especially, that would have required a fundamental shift in who these men were.

The loss among Apple’s management of that old Jobsian spirit of zealotry, overblown and impractical though it could sometimes be, was felt keenly by the Macintosh even during these years of considerable success. Only Jean-Louis Gassée was around to try to provide a splash of the old spirit of iconoclastic idealism, and everyone had to agree in the end that he made a rather second-rate Steve Jobs. When Sculley tried on the mantle of visionary — as when he named his fluffy corporate autobiography Odyssey and subtitled it “a journey of adventure, ideas, and the future” — it never quite seemed to fit him right. The diction was always off somehow, like he was playing a Silicon Valley version of Mad Libs. “This is an adventure of passion and romance, not just progress and profit,” he told the January 1988 Macworld attendees, apparently feeling able to wax a little more poetic than usual before this audience of true believers. “Together we set a course for the world which promises to elevate the self-esteem of the individual rather than a future of subservience to impersonal institutions.” (Apple detractors might note that elevating their notoriously smug users’ self-esteem did indeed sometimes seem to be what the company was best at.)

It was hard not to feel that the Mac had lost something. Jobs had lured Sculley from Pepsi because the latter was widely regarded as a genius of consumer marketing; the Pepsi Challenge, one of the most iconic campaigns in the long history of the cola wars, had been his brainchild. And yet, even before Jobs’s acrimonious departure, Sculley, bowing to pressure from Apple’s stockholders, had oriented the Macintosh almost entirely toward taking on the faceless legions of IBM and Compaq that dominated business computing. Consumer computing was largely left to take care of itself in the form of the 8-bit Apple II line, whose final model, the technically impressive but hugely overpriced IIGS, languished with virtually no promotion. Sculley, a little out of his depth in Silicon Valley, was just following the conventional wisdom that business computing was where the real money was. Businesspeople tended to be turned off by wild-eyed talk of changing the world; thus Apple’s new, more sober facade. And they were equally turned off by any whiff of fun or, God forbid, games; thus the old sense of whimsy that had been one of the original Mac’s most charming attributes seemed to leach away a little more with each successive model.

Those who pointed out that business computing had a net worth many times that of home computing weren’t wrong, but they were missing something important and at least in retrospect fairly obvious: namely, the fact that most of the companies who could make good use of computers had already bought them by now. The business-computing industry would doubtless continue to be profitable for many and even to grow steadily alongside the economy, but its days of untapped potential and explosive growth were behind it. Consumer computing, on the other hand, was still largely virgin territory. Millions of people were out there who had been frustrated by the limitations of the machines at the heart of the brief-lived first home-computer boom, but who were still willing to be intrigued by the next generation of computing technology, still willing to be sold on computers as an everyday lifestyle accessory. Give them a truly elegant, easy-to-use computer — like, say, the Macintosh — and who knew what might happen. This was the vision Jef Raskin had had in starting the ball rolling on the Mac back in 1979, the one that had still been present, if somewhat obscured even then by a high price, in the first released version of the machine with its “the computer for the rest of us” tagline. And this was the vision that Sculley betrayed after Jobs’s departure by keeping prices sky-high and ignoring the consumer market.

“We don’t want to castrate our computers to make them inexpensive,” said Jean-Louis Gassée. “We make Hondas, we don’t make Yugos.” Fair enough, but the Mac was priced closer to Mercedes than Honda territory. And it was common knowledge that Apple’s profit margins remained just about the fattest in the industry, thus raising the question of how much “castration” would really be necessary to make a more reasonably priced Mac. The situation reached almost surrealistic levels with the release of the Mac IIfx in March of 1990, an admittedly “wicked fast” addition to the product line but one that cost $9870 sans monitor or video card, thus replacing the metaphorical with the literal in Gassée’s favored comparison: a complete Mac IIfx system cost more than most actual brand-new Hondas. By now, the idea of the Mac as “the computer for the rest of us” seemed a bitter joke.

Apple was choosing to fight over scraps of the business market when an untapped land of milk and honey — the land of consumer computing — lay just over the horizon. Instead of the Macintosh, the IBM-compatible machines lurched over in fits and starts to fill that space, adopting in the process most of the Mac’s best ideas, even if they seldom managed to implement those ideas quite as elegantly. By the time Apple woke up to what was happening in the 1990s and rushed to fill the gap with a welter of more reasonably priced consumer-grade Macs, it was too late. Computing as most Americans knew it was exclusively a Wintel world, Macs incompatible, artsy-fartsy oddballs. All but locked out of the fastest-growing sectors of personal computing, the very sectors the Macintosh had been so perfectly poised to absolutely own, Apple was destined to have a very difficult 1990s. So difficult, in fact, that they would survive the decade’s many lows only by the skin of their teeth.

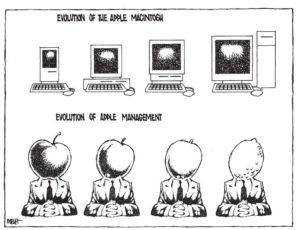

This cartoon by Tom Meyer, published in the San Francisco Chronicle, shows the emerging new popular consensus about Apple by the early 1990s: increasingly overpriced, bloated designs and increasingly clueless management.

Now that the 68000 Wars have faded into history and passions have cooled, we can see that the Macintosh was in some ways almost as ill-served by its parent company as was the Commodore Amiga by its. Apple’s management in the post-Jobs era, like Commodore’s, seemed in some fundamental way not to get the very creation they’d unleashed on the world. And so, as with the Amiga, it was left to the users of the Macintosh to take up the slack, to keep the vision thing in the equation. Thankfully, they did a heck of a job with that. Something in the Mac’s DNA, something which Apple’s new sobriety could mask but never destroy, led it to remain a hotbed of inspiring innovations that had little to do with the nuts and bolts of running a day-to-day business. Sometimes seemingly in spite of Apple’s best efforts, the most committed Mac loyalists never forgot the Jobsian rhetoric that had greeted the platform’s introduction, continuing to see it as something far more compelling and beautiful than a tool for business. A 1988 survey by Macworld magazine revealed that 85 percent of their readers, the true Mac hardcore, kept their Macs at home, where they used them at least some of the time for pleasure rather than business.

So, the Mac world remained the first place to look if you wanted to see what the artists and the dreamers were getting up to with computers. We’ve already seen some examples of their work in earlier articles. In the course of the next few, we’ll see some more.

(Sources: Amazing Computing of February 1988, April 1988, May 1988, and August 1988; Info of July/August 1988; Byte of May 1986, June 1986, November 1986, April 1987, October 1987, and June 1990; InfoWorld of November 26 1984; Computer Chronicles television episodes entitled “The New Macs,” “Macintosh Business Software,” “Macworld Special 1988,” “Business Graphics Part 1,” “Macworld Boston 1988,” “Macworld San Francisco 1989,” and “Desktop Presentation Software Part 1”; the books West of Eden: The End of Innocence at Apple Computer by Frank Rose, Apple Confidential 2.0: The Definitive History of the World’s Most Colorful Computer Company by Owen W. Linzmayer, and Insanely Great: The Life and Times of Macintosh, the Computer that Changed Everything by Steven Levy; Andy Hertzfeld’s website Folklore.)